WARNING — DISTRESSING CONTENT: A photo unknowingly taken of you, or someone you love, in the street can instantly be turned into violent, pornographic material. An alarming Australian investigation has revealed schoolgirls are being “undressed” online and “subjected to acts of sexualised torture and degradation”.

Caitlin Roper from advocacy group Collective Shout told Yahoo News that no one is safe from artificial intelligence-based “nudifying” apps. You may have never posted a photo on social media, but your image can still be stolen, recreated and exploited in despicable ways, she warned.

Pictures aren’t just being pinched from social media; they’re also being taken on the streets, in schools and even from yearbooks, meaning no digital presence is required for an image to be manipulated online.

Roper knows because she tested the apps herself in an effort to put the sickening underground world of deepfake pornography, and its potential to exploit Australian children, back under the microscope.

She said females depicted on these apps were “routinely subjected” to dehumanising sexual scenarios, some so graphic that Yahoo News has decided not to share them.

“In some images, they appeared terrified or crying; in others, it looked like they welcomed it. There were bruises and obvious signs of harm,” Roper said.

“A lot of very young women and girls — content that I believe qualifies as child sexual abuse material.”

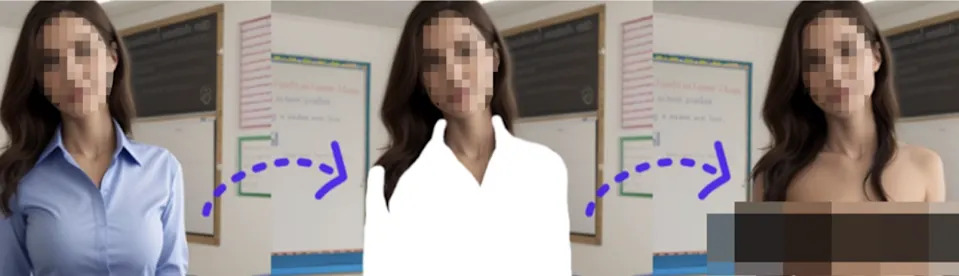

Roper revealed examples of how images were ‘undressed’. Picture: Collective Shout

A new “nation-leading” law came into effect in South Australia this month, criminalising the creation of wholly AI-generated deepfake images. Offenders now face fines of up to $20,000 or up to four years’ imprisonment.

But Roper said legislation does not go far enough, with the apps accessible to virtually anybody with an internet connection.

Roper recently analysed 20 nudifying apps in a bid to see “what a teen boy could do” with a smartphone and “access to a photo of a female classmate”.

The results left her in shock.

Shocking scale of AI-based ‘nudifying’ apps exposed

Not only was it unbelievably simple, but it was also quick, Roper said.

“I created an AI-generated image of a young woman — a woman that didn’t exist — standing in the forest, dressed regularly,” she said.

“And with that image, I tested out the apps.”

Roper was able to digitally strip the woman in a matter of seconds, and for free.

She said users of these apps can select everything from clothing removal to poses and specific sex acts, creating ultra-realistic, personalised images.

Caitlin Roper from advocacy group Collective Shout is sounding the alarm on so-called ‘nudifying’ apps. Source: Supplied

Certain platforms even encourage users to upload their creations to public galleries, which are often filled with violent and degrading sexual content.

The material often depicts women of all ages being humiliated, assaulted, and tortured by men.

Text prompts used to generate these images include terms like “abused”, “crying” and “schoolgirl”.

“It’s completely unregulated. What they would often have is a little checkbox, saying ‘I confirm that I have consent, that I’m not using children, or that I’m not violating local laws’,” she said.

“Which is just a very pathetic attempt to cover themselves. But obviously, these sites intend to undress, degrade, and humiliate.”

What’s more, the vast majority of these sites only work on women’s bodies.

“If you put in an image, it digitally undresses a woman and shows female anatomy,” she said.

“They don’t generally work on male images, so they are intended for use, typically by men and boys, against women and girls,” Roper said.

Unbelievably, certain sites even offer users financial incentives to invite friends.

Roper said the apps were marketed not just to adults, but to underage boys, too.

She is now calling on governments around the country — and the world — to urgently tighten legislation and block access.

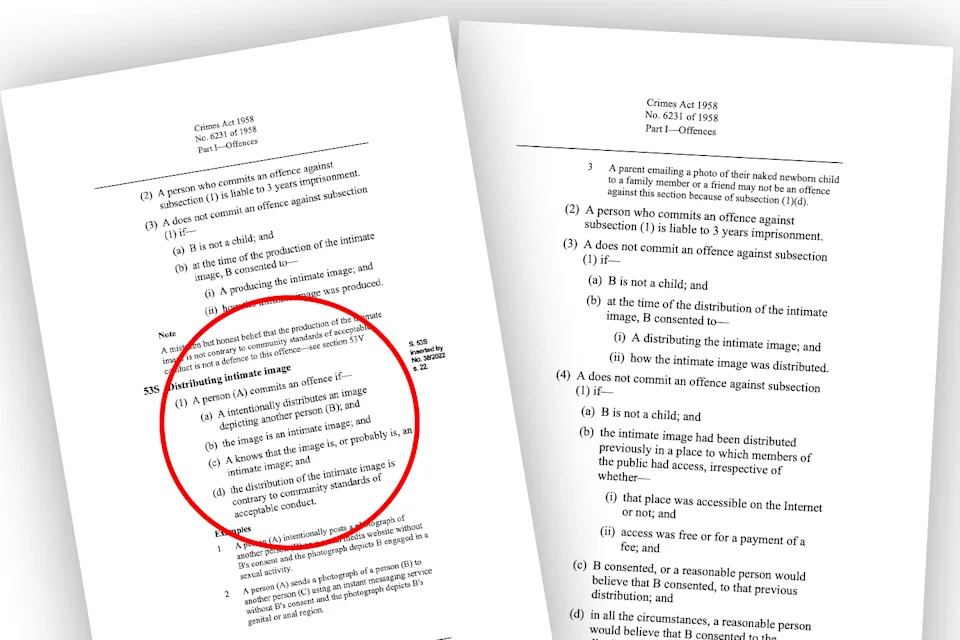

In Victoria, the Crimes Act 1958 prohibits the intentional production and distribution of an ‘intimate image’ of another person without consent. Source: Victorian government

What does the law state in Australia?

Among the Australian states, Victoria, South Australia and NSW have laws specifically targeting the creation and distribution of non-consensual deepfake sexual material.

In Victoria, the Crimes Act 1958 prohibits the intentional production, distribution or threatened distribution of an ‘intimate image’ of another person without consent, and the definition has since been expanded to cover images that are digitally created or manipulated.

South Australia’s new laws, in effect from this week, criminalise the production of AI-generated deepfake pornography that is invasive, humiliating, degrading or sexually explicit, with penalties including fines and imprisonment.

In September, NSW passed legislation to tackle sexually explicit deepfake material.

It’s now an offence to produce or share AI-generated intimate images depicting a real, identifiable person without their consent, with penalties of up to three years’ imprisonment.

At the federal level, the Online Safety Act 2021 already bans posting or threatening to post intimate images without consent, and the new Criminal Code Amendment (Deepfake Sexual Material) Act 2024 explicitly criminalises the non-consensual sharing of deepfake sexual material depicting adults nationwide.

Last year, Attorney-General Mark Dreyfus introduced laws to parliament criminalising the sharing of artificially generated pornographic images, or of genuine images of adults distributed without consent, with offenders now facing up to six years in prison.

Those found to have also created the images themselves could be jailed for up to seven years.

While this is a step in the right direction, campaigners argue that the laws target distribution rather than creation itself.

Laws not working as intended, advocate says

Dr Asher Flynn, Chief Investigator at the Australian Research Council Centre for the Elimination of Violence Against Women, is among a growing chorus of academics asking for laws to be taken further.

“[It’s] really important to see ‘creation’ itself as a standalone offence,” she previously told Yahoo News.

“It’d be good to see more onus put on the websites, the digital platform providers and the technology developers who are creating these types of tools too.”

Roper said many of the sites she encountered were based in Eastern Europe, making accountability extremely difficult. But she said restricting access is possible.

“One recommendation we made to the United Nations was that all member states participate in a ban and enforce it,” she said.

“That could include geo-blocking, search engine restrictions, or social media platforms not advertising the apps. But the challenge is getting all countries on board.

“We do have some laws in Australia … but they’re not working as intended.”

If you or someone you know is impacted by child sexual abuse or exploitation, including historical child sexual abuse or online child sexual exploitation, there are support services you can access through the Australian Centre to Counter Child Exploitation website.

These avenues of support are available to help, listen and believe.

If you think a child is in immediate danger call Triple Zero (000), Crimestoppers on 1800 333 000, or your local police. Readers seeking support and information about suicide prevention can contact Beyond Blue on 1300 22 4636, Lifeline on 13 11 14 or Suicide Call Back Service on 1300 659 467.
