Beyth-Marom, R., Fischhoff, B., Quadrel, M. J. & Furby, L. in Teaching Decision Making to Adolescents (eds Baron, J. & Brown, R. V.) (Routledge, 1991).

Stanovich, K. E. & West, R. F. What intelligence tests miss. Psychologist 27, 80–83 (2014).

Toplak, M. E., Sorge, G. B., Benoit, A., West, R. F. & Stanovich, K. E. Decision-making and cognitive abilities: a review of associations between Iowa Gambling Task performance, executive functions, and intelligence. Clin. Psychol. Rev. 30, 562–581 (2010).

Stanovich, K. E., West, R. F. & Toplak, M. E. The Rationality Quotient: Toward a Test of Rational Thinking (MIT Press, 2016).

Aczel, B., Bago, B., Szollosi, A., Foldes, A. & Lukacs, B. Is it time for studying real-life debiasing? Evaluation of the effectiveness of an analogical intervention technique. Front. Psychol. 6, 1120 (2015).

Yagoda, B. The cognitive biases tricking your brain. The Atlantic (September 2018).

Featherston, R. et al. Interventions to mitigate bias in social work decision-making: a systematic review. Res. Soc. Work Pract. 29, 741–752 (2019).

Prakash, S., Sladek, R. M. & Schuwirth, L. Interventions to improve diagnostic decision making: a systematic review and meta-analysis on reflective strategies. Med. Teach. 41, 517–524 (2019).

Ludolph, R. & Schulz, P. J. Debiasing health-related judgments and decision making: a systematic review. Med. Decis. Making 38, 3–13 (2018).

Tversky, A. & Kahneman, D. Judgment under uncertainty: heuristics and biases. Science 185, 1124–1131 (1974).

Gigerenzer, G. & Gaissmaier, W. Heuristic decision making. Annu. Rev. Psychol. 62, 451–482 (2011).

Kahneman, D. & Klein, G. Conditions for intuitive expertise: a failure to disagree. Am. Psychol. 64, 515–526 (2009).

Funder, D. C. Errors and mistakes: evaluating the accuracy of social judgment. Psychol. Bull. 101, 75–90 (1987).

Pronin, E. Perception and misperception of bias in human judgment. Trends Cogn. Sci. 11, 37–43 (2007).

Saposnik, G., Redelmeier, D., Ruff, C. C. & Tobler, P. N. Cognitive biases associated with medical decisions: a systematic review. BMC Med. Inform. Decis. Mak. 16, 138 (2016).

Featherston, R., Downie, L. E., Vogel, A. P. & Galvin, K. L. Decision making biases in the allied health professions: a systematic scoping review. PLoS ONE 15, e0240716 (2020).

Edmond, G. & Martire, K. A. Just cognition: scientific research on bias and some implications for legal procedure and decision-making. Modern L. Rev. 82, 633–664 (2019).

Blanco, F. in Encyclopedia of Animal Cognition and Behavior (eds Vonk, J. & Shackelford, T. K.) (Springer, 2022).

Odds of dying. Injury facts. National Safety Council https://injuryfacts.nsc.org/all-injuries/preventable-death-overview/odds-of-dying/ (2017).

Stanovich, K. E. & West, R. F. The assessment of rational thinking: IQ ≠ RQ. Teach. Psychol. 41, 265–271 (2014).

Bruine de Bruin, W., Parker, A. M. & Fischhoff, B. Decision-making competence: more than intelligence? Curr. Dir. Psychol. Sci. 29, 186–192 (2020).

Ghazal, S., Cokely, E. T., Garcia-Retamero, R. & Feltz, A. Cambridge Handbook of Expertise and Expert Performance (Cambridge Univ. Press, 2018).

Primi, C., Donati, M. A., Chiesi, F. & Panno, A. in Individual Differences in Judgement and Decision-Making (eds Toplak, M. E. & Weller, J.) 58–76 (Psychology Press, 2016).

Wechsler, D. WAIS-IV: Wechsler Adult Intelligence Scale—Fourth Edition (Pearson, 2008).

Stanovich, K. E. The comprehensive assessment of rational thinking. Educ. Psychol. 51, 23–34 (2016).

Todd, B. Notes on good judgement and how to develop it. 80,000 Hours https://80000hours.org/2020/09/good-judgement/ (2020).

Kahneman, D. Thinking, Fast and Slow (Penguin, 2011).

Stanovich, K. E. Miserliness in human cognition: the interaction of detection, override and mindware. Think. Reason. 24, 423–444 (2018).

Toplak, M. E., West, R. F. & Stanovich, K. E. Real-world correlates of performance on heuristics and biases tasks in a community sample. J. Behav. Decis. Mak. 30, 541–554 (2017).

Chandler, J., Paolacci, G., Peer, E., Mueller, P. & Ratliff, K. A. Using nonnaive participants can reduce effect sizes. Psychol. Sci. 26, 1131–1139 (2015).

Haigh, M. Has the standard cognitive reflection test become a victim of its own success? Adv. Cogn. Psychol. 12, 145–149 (2016).

Bruine de Bruin, W., Parker, A. M. & Fischhoff, B. Individual differences in adult decision-making competence. J. Pers. Soc. Psychol. 92, 938–956 (2007).

Parker, A. M. & Fischhoff, B. Decision-making competence: external validation through an individual-differences approach. J. Behav. Decis. Mak. 18, 1–27 (2005).

Ro, C. The complicated battle over unconscious-bias training. BBC (29 March 2021).

Sukhera, J. Starbucks and the impact of implicit bias training. The Conversation (27 May 2018).

Walker, T. B. & Feloni, R. Here’s the presentation Google gives employees on how to spot unconscious bias at work. Business Insider (2020).

Cantarelli, P., Belle, N. & Belardinelli, P. Behavioral public HR: experimental evidence on cognitive biases and debiasing interventions. Rev. Public Pers. Adm. 40, 56–81 (2020).

Morewedge, C. K. et al. Debiasing decisions: improved decision making with a single training intervention. Policy Insights Behav. Brain Sci. 2, 129–140 (2015).

Davies, M. in Higher Education: Handbook of Theory and Research (ed. Perna, L. W.) 41–92 (Springer, 2015).

Stanovich, K. E. The Oxford Handbook of Thinking and Reasoning (Oxford Univ. Press, 2012).

Ennis, R. H. The Palgrave Handbook of Critical Thinking in Higher Education (Palgrave Macmillan, 2015).

Common core state standards. National Governors Association https://preview.fadss.org/resources/webinars/webinar2/FSBAPresentationforCommunities_transcribed.pdf (2010).

Next Generation Science Standards: for States, by States (National Academies Press, 2013).

Abrami, P. C. et al. Strategies for teaching students to think critically: a meta-analysis. Rev. Educ. Res. 85, 275–314 (2015).

Mao, W., Cui, Y., Chiu, M. M. & Lei, H. Effects of game-based learning on students’ critical thinking: a meta-analysis. J. Educ. Comput. Res. 59, 1682–1708 (2022).

Xu, E., Wang, W. & Wang, Q. The effectiveness of collaborative problem solving in promoting students’ critical thinking: a meta-analysis based on empirical literature. Humanit. Soc. Sci. Commun. 10, 16 (2023).

Ennis, R. H. Critical thinking and subject specificity: clarification and needed research. Educ. Res. 18, 4 (1989).

Siegel, H. Education’s Epistemology: Rationality, Diversity, and Critical Thinking (Oxford Univ. Press, 2017).

Hattie, J. Visible Learning: A Synthesis of over 800 Meta-Analyses Relating to Achievement (Routledge, 2008).

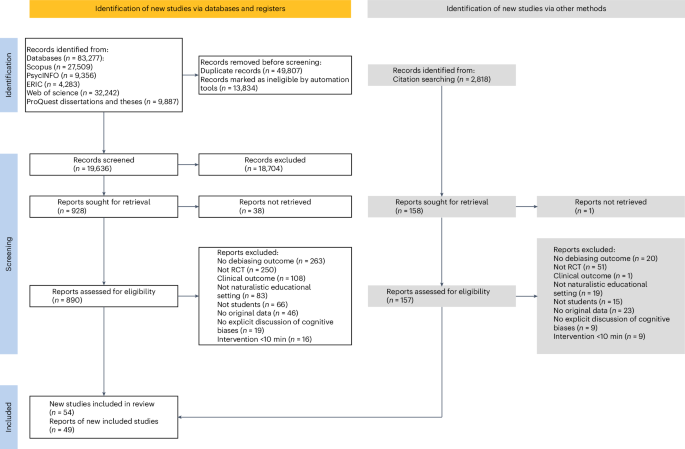

Page, M. J. et al. PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews. Br. Med. J. 372, n160 (2021).

Haddaway, N. R., Page, M. J., Pritchard, C. C. & McGuinness, L. A. PRISMA2020: an R package and Shiny app for producing PRISMA 2020-compliant flow diagrams, with interactivity for optimised digital transparency and open synthesis. Campbell Syst. Rev. 18, e1230 (2022).

Calvillo, D. P., Bratton, J., Velazquez, V., Smelter, T. J. & Crum, D. Elaborative feedback and instruction improve cognitive reflection but do not transfer to related tasks. Think. Reason. 29, 276–304 (2022).

Salvatore, J. & Morton, T. A. Evaluations of science are robustly biased by identity concerns. Group Process. Intergroup Relat. 24, 568–582 (2021).

van Brussel, S., Timmermans, M., Verkoeijen, P. & Paas, F. Teaching on video as an instructional strategy to reduce confirmation bias—a pre-registered study. Instr. Sci. 49, 475–496 (2021).

Rhodes, R. E. et al. Teaching decision making with serious games: an independent evaluation. Games Cult. 12, 233–251 (2017).

Dwyer, C. P., Hogan, M. J. & Stewart, I. The effects of argument mapping-infused critical thinking instruction on reflective judgement performance. Think. Skills Creat. 16, 11–26 (2015).

Sellier, A. L., Scopelliti, I. & Morewedge, C. K. Debiasing training improves decision making in the field. Psychol. Sci. 30, 1371–1379 (2019).

Frederick, S. Cognitive reflection and decision making. J. Econ. Perspect. 19, 25–42 (2005).

Dawson, T. L. Metacognition and Learning in Adulthood. Prepared in Response to Tasking from ODNI/CHCO/IC Leadership Development Office (Developmental Testing Service LLC, 2008).

Burgoyne, A. P., Mashburn, C. A., Tsukahara, J. S., Hambrick, D. Z. & Engle, R. W. Understanding the relationship between rationality and intelligence: a latent-variable approach. Think. Reason. 29, 1–42 (2023).

Lectical reflective judgment assessment. LecticaLive https://lecticalive.org/about/lrja (2024).

Dunbar, N. E. et al. Implicit and explicit training in the mitigation of cognitive bias through the use of a serious game. Comput. Hum. Behav. 37, 307–318 (2014).

Dunbar, N. E. et al. Mitigation of cognitive bias with a serious game: two experiments testing feedback timing and source. Int. J. Game Based Learn. 7, 86–100 (2017).

Shaw, A. et al. Serious efforts at bias reduction: the effects of digital games and avatar customization on three cognitive biases. J. Media Psychol. 30, 16–28 (2018).

Legaki, N.-Z., Karpouzis, K., Assimakopoulos, V. & Hamari, J. Gamification to avoid cognitive biases: an experiment of gamifying a forecasting course. Technol. Forecast. Soc. Change 167, 120725 (2021).

Gutierrez, B. Fair Play: A Video Game Designed to Reduce Implicit Racial Bias (Univ. Wisconsin, 2013).

Lee, Y.-H. et al. Training anchoring and representativeness bias mitigation through a digital game. Simul. Gaming 47, 751–779 (2016).

Roelle, J., Schmidt, E. M., Buchau, A. & Berthold, K. Effects of informing learners about the dangers of making overconfident judgments of learning. J. Educ. Psychol. 109, 99–117 (2017).

van Peppen, L. M. et al. Learning to avoid biased reasoning: effects of interleaved practice and worked examples. J. Cogn. Psychol. 33, 304–326 (2021).

Martínez, N., Rodríguez-Ferreiro, J., Barberia, I. & Matute, H. A debiasing intervention to reduce the causality bias in undergraduates: the role of a bias induction phase. Curr. Psychol. 42, 32456–32468 (2023).

Evans, J. S. B. T. & Stanovich, K. E. Dual-process theories of higher cognition: advancing the debate. Perspect. Psychol. Sci. 8, 223–241 (2013).

Alter, A. L., Oppenheimer, D. M., Epley, N. & Eyre, R. N. Overcoming intuition: metacognitive difficulty activates analytic reasoning. J. Exp. Psychol. Gen. 136, 569–576 (2007).

Wisniewski, B., Zierer, K. & Hattie, J. The power of feedback revisited: a meta-analysis of educational feedback research. Front. Psychol. 10, 3087 (2019).

Muehlhauser, L. New web app for calibration training. Open Philanthropy https://www.openphilanthropy.org/research/new-web-app-for-calibration-training/ (2018).

Swart, E. K., Nielen, T. M. J. & Sikkema-de Jong, M. T. Supporting learning from text: a meta-analysis on the timing and content of effective feedback. Educ. Res. Rev. 28, 100296 (2019).

Chi, M. T. & Wylie, R. The ICAP Framework: linking cognitive engagement to active learning outcomes. Educ. Psychol. 49, 219–243 (2014).

Tomcho, T. J. & Foels, R. Meta-analysis of group learning activities: empirically based teaching recommendations. Teach. Psychol. 39, 159–169 (2012).

Noetel, M. et al. Video improves learning in higher education: a systematic review. Rev. Educ. Res. 91, 204–236 (2021).

Noetel, M. et al. Multimedia design for learning: an overview of reviews with meta-meta-analysis. Rev. Educ. Res. 92, 413–454 (2022).

Chernikova, O. et al. Simulation-based learning in higher education: a meta-analysis. Rev. Educ. Res. 90, 499–541 (2020).

Ahmadi, A. et al. A classification system for teachers’ motivational behaviors recommended in self-determination theory interventions. J. Educ. Psychol. 115, 1158–1176 (2023).

Bureau, J., Howard, J. L., Chong, J. X. Y. & Guay, F. Pathways to student motivation: a meta-analysis of antecedents of autonomous and controlled motivations. Rev. Educ. Res. 92, 46–72 (2022).

Korteling, J. E. H., Gerritsma, J. Y. J. & Toet, A. Retention and transfer of cognitive bias mitigation interventions: a systematic literature study. Front. Psychol. 12, 629354 (2021).

Halpern, D. F. Teaching critical thinking for transfer across domains: disposition, skills, structure training, and metacognitive monitoring. Am. Psychol. 53, 449–455 (1998).

van Peppen, L. M., Verkoeijen, P. P. J. L., Heijltjes, A. E. G., Janssen, E. M. & van Gog, T. Enhancing students’ critical thinking skills: is comparing correct and erroneous examples beneficial? Instr. Sci. 49, 747–777 (2021).

Tiruneh, D. T., Verburgh, A. & Elen, J. Effectiveness of critical thinking instruction in higher education: a systematic review of intervention studies. High. Educ. Stud. 4, 1–17 (2014).

Willingham, D. T. in Critical Thinking: Why Is It So Hard to Teach? 8–19 (American Federation of Teachers, 2007).

Tversky, A. & Kahneman, D. Extensional versus intuitive reasoning: the conjunction fallacy in probability judgment. Psychol. Rev. 90, 293–315 (1983).

McKenzie, C. R. M. Rational models as theories—not standards—of behavior. Trends Cogn. Sci. 7, 403–406 (2003).

Szollosi, A. & Newell, B. R. People as intuitive scientists: reconsidering statistical explanations of decision making. Trends Cogn. Sci. 24, 1008–1018 (2020).

Alexander, S. Confirmation bias as misfire of normal Bayesian reasoning. Slate Star Codex https://slatestarcodex.com/2020/02/12/confirmation-bias-as-misfire-of-normal-bayesian-reasoning/ (2020).

Abendroth, J. & Richter, T. How to understand what you don’t believe: metacognitive training prevents belief-biases in multiple text comprehension. Learn. Instr. 71, 101394 (2021).

Sibbald, M. et al. Debiasing versus knowledge retrieval checklists to reduce diagnostic error in ECG interpretation. Adv. Health Sci. Educ. Theory Pract. 24, 427–440 (2019).

Kyaw, B. M. et al. Virtual reality for health professions education: systematic review and meta-analysis by the digital health education collaboration. J. Med. Internet Res. 21, e12959 (2019).

Schulz, K. F., Altman, D. G., Moher, D. & CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMC Med. 8, 18 (2010).

Cooper, H. Reporting Quantitative Research in Psychology: How to Meet APA Style Journal Article Reporting Standards (American Psychological Association, 2020).

Joy-Gaba, J. A. From Learning to Doing: The Effects of Educating Individuals on the Pervasiveness of Bias (Univ. Virginia, 2011).

Scopelliti, I. et al. Bias blind spot: structure, measurement, and consequences. Manag. Sci. 61, 2468–2486 (2015).

Oeberst, A. & Imhoff, R. Toward parsimony in bias research: a proposed common framework of belief-consistent information processing for a set of biases. Perspect. Psychol. Sci. 18, 1464–1487 (2023).

Roth, S., Robbert, T. & Straus, L. On the sunk-cost effect in economic decision-making: a meta-analytic review. Bus. Res. 8, 99–138 (2015).

Stanovich, K. E. & West, R. F. On the relative independence of thinking biases and cognitive ability. J. Pers. Soc. Psychol. 94, 672–695 (2008).

Stanovich, K. E., West, R. F. & Toplak, M. E. Myside bias, rational thinking, and intelligence. Curr. Dir. Psychol. Sci. 22, 259–264 (2013).

Aczel, B., Bago, B., Szollosi, A., Foldes, A. & Lukacs, B. Measuring individual differences in decision biases: methodological considerations. Front. Psychol. 6, 1770 (2015).

Toplak, M. E. & Stanovich, K. E. Measuring rational thinking in adolescents: the assessment of rational thinking for youth (ART‐Y). J. Behav. Decis. Mak. 37, e2381 (2024).

Kahneman, D. Thinking, Fast and Slow (Macmillan, 2011).

Di Battista, A., Grayling, S. & Hasselaar, E. Future of Jobs Report 2023 (World Economic Forum, 2023).

Fisher, D. J., Carpenter, J. R., Morris, T. P., Freeman, S. C. & Tierney, J. F. Meta-analytical methods to identify who benefits most from treatments: daft, deluded, or deft approach? Br. Med. J. 356, j573 (2017).

Does debiasing training improve rationality? A systematic review and meta-analysis of randomised trials in educational settings. OSF https://osf.io/xrm4g (2022).

Benjamini, Y. & Hochberg, Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Series B Stat. Methodol. 57, 289–300 (1995).

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G. & PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Int. J. Surg. 8, 336–341 (2010).

Torgerson, C. J. & Torgerson, D. J. Randomised Trials in Education: An Introductory Handbook (Education Endowment Foundation, 2013).

Kuss, O., Blettner, M. & Börgermann, J. Propensity score: an alternative method of analyzing treatment effects. Dtsch. Arztebl. Int. 113, 597–603 (2016).

Antognelli, S. L., Sharrock, M. J. & Newby, J. M. A randomised controlled trial of computerised interpretation bias modification for health anxiety. J. Behav. Ther. Exp. Psychiatry 66, 101518 (2020).

Matute, H. et al. Illusions of causality: how they bias our everyday thinking and how they could be reduced. Front. Psychol. 6, 888 (2015).

Sklad, M. & Diekstra, R. The development of the heuristics and biases scale (HBS). Procedia Soc. Behav. Sci. 112, 710–718 (2014).

Thomson, K. S. & Oppenheimer, D. M. Investigating an alternate form of the cognitive reflection test. Judgm. Decis. Mak. 11, 99–113 (2016).

Jacobson, D. et al. Improved learning in US history and decision competence with decision-focused curriculum. PLoS ONE 7, e45775 (2012).

Hausner, E., Guddat, C., Hermanns, T., Lampert, U. & Waffenschmidt, S. Prospective comparison of search strategies for systematic reviews: an objective approach yielded higher sensitivity than a conceptual one. J. Clin. Epidemiol. 77, 118–124 (2016).

EndNote (The EndNote Team, 2013).

Marshall, I. J., Noel-Storr, A., Kuiper, J., Thomas, J. & Wallace, B. C. Machine learning for identifying randomized controlled trials: an evaluation and practitioner’s guide. Res. Synth. Methods 9, 602–614 (2018).

Covidence Systematic Review Software (Veritas Health Innovation, 2023).

Pigott, T. D. & Polanin, J. R. Methodological guidance paper: high-quality meta-analysis in a systematic review. Rev. Educ. Res. 90, 24–46 (2020).

Higgins, J. P. T. et al. Cochrane Handbook for Systematic Reviews of Interventions (Wiley, 2019).

Rohatgi, A. WebPlotDigitizer. https://automeris.io/WebPlotDigitizer/ (2022).

Sterne, J. A. C. et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. Br. Med. J. 366, l4898 (2019).

Flemyng, E. et al. Using Risk of Bias 2 to assess results from randomised controlled trials: guidance from Cochrane. BMJ Evid. Based Med. 28, 260–266 (2023).

Shea, B. J. et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. Br. Med. J. 358, j4008 (2017).

Hedges, L. V. Distribution theory for Glass’s estimator of effect size and related estimators. J. Educ. Behav. Stat. 6, 107–128 (1981).

Viechtbauer, W. The metafor package ver. 4.6-0 (2017).

R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, 2020).

Borenstein, M., Hedges, L. V., Higgins, J. P. T. & Rothstein, H. R. Introduction to Meta-Analysis (Wiley, 2011).

Schwarzer, G. meta: an R package for meta-analysis (ver. 7.0-0). R News 7, 40–45 (2007).

Mathur, M. B. & VanderWeele, T. J. New metrics for meta-analyses of heterogeneous effects. Stat. Med. 38, 1336–1342 (2019).

Wickham, H. ggplot2. Wiley Interdiscip. Rev. Comput. Stat. 3, 180–185 (2011).

Krzywinski, M. & Altman, N. Comparing samples—part II. Nat. Methods 11, 355–356 (2014).

Glickman, M. E., Rao, S. R. & Schultz, M. R. False discovery rate control is a recommended alternative to Bonferroni-type adjustments in health studies. J. Clin. Epidemiol. 67, 850–857 (2014).

Polanin, J. R. & Pigott, T. D. The use of meta-analytic statistical significance testing. Res. Synth. Methods 6, 63–73 (2015).

Shaffer, J. Multiple hypothesis testing. Annu. Rev. Psychol. 46, 561–584 (1995).

Hedges, L. V. & Vevea, J. in Publication Bias in Meta‐Analysis: Prevention, Assessment and Adjustments (eds Rothstein, H. R. et al.) (John Wiley & Sons, 2005).

Adame, B. J. Training in the mitigation of anchoring bias: a test of the consider-the-opposite strategy. Learn. Motiv. 53, 36–48 (2016).

Schmalhofer, F. & Glavanov, D. Three components of understanding a programmer’s manual: verbatim, propositional, and situational representations. J. Mem. Lang. 25, 279–294 (1986).

Almashat, S., Ayotte, B., Edelstein, B. & Margrett, J. Framing effect debiasing in medical decision making. Patient Educ. Couns. 71, 102–107 (2008).

Barberia, I., Blanco, F., Cubillas, C. P. & Matute, H. Implementation and assessment of an intervention to debias adolescents against causal illusions. PLoS ONE 8, e71303 (2013).

Blanco, F., Matute, H. & Vadillo, M. A. Mediating role of activity level in the depressive realism effect. PLoS ONE 7, e46203 (2012).

Barberia, I., Tubau, E., Matute, H. & Rodríguez-Ferreiro, J. A short educational intervention diminishes causal illusions and specific paranormal beliefs in undergraduates. PLoS ONE 13, e0191907 (2018).

Díaz-Vilela, L. & Álvarez-González, C. J. Differences in paranormal beliefs across fields of study from a Spanish adaptation of Tobacyk’s RPBS. J. Parapsychol. 68, 405–421 (2004).

Bessarabova, E. et al. Mitigating bias blind spot via a serious video game. Comput. Hum. Behav. 62, 452–466 (2016).

Pronin, E., Lin, D. Y. & Ross, L. The bias blind spot: perceptions of bias in self versus others. Pers. Soc. Psychol. Bull. 28, 369–381 (2002).

Botta, V. A. The Effect of Instructional Method on Use of Heuristics and Statistics Comprehension (Georgia State Univ., 1998).

Bou Khalil, R., Sleilaty, G., Kassab, A. & Nemr, E. Decontextualisation for framing effect reduction. Clin. Teach. 19, 121–128 (2022).

Toplak, M. E., West, R. F. & Stanovich, K. E. The Cognitive Reflection Test as a predictor of performance on heuristics-and-biases tasks. Mem. Cogn. 39, 1275–1289 (2011).

Baron, J., Scott, S., Fincher, K. & Emlen Metz, S. Why does the Cognitive Reflection Test (sometimes) predict utilitarian moral judgment (and other things)? J. Appl. Res. Mem. Cogn. 4, 265–284 (2015).

Clegg, B. A. et al. Effective mitigation of anchoring bias, projection bias, and representativeness bias from serious game-based training. Procedia Manuf. 3, 1558–1565 (2015).

Rassin, E. Blindness to alternative scenarios in evidence evaluation. J. Investig. Psychol. Offender Profil. 7, 153–163 (2010).

Wason, P. C. Reasoning about a rule. Q. J. Exp. Psychol. 20, 273–281 (1968).

Riggio, H. R. & Garcia, A. L. The power of situations: Jonestown and the fundamental attribution error. Teach. Psychol. 36, 108–112 (2009).

Emory, B. & Luo, T. Metacognitive training and online community college students’ learning calibration and performance. Community Coll. J. Res. Pract. 46, 240–256 (2022).

Morrison, J. R., Bol, L., Ross, S. M. & Watson, G. S. Paraphrasing and prediction with self-explanation as generative strategies for learning science principles in a simulation. Educ. Technol. Res. Dev. 63, 861–882 (2015).

Fitterman-Harris, H. F. & Vander Wal, J. S. Weight bias reduction among first-year medical students: a quasi-randomized, controlled trial. Clin. Obes. 11, e12479 (2021).

Lewis, R. J., Cash, T. F., Jacobi, L. & Bubb-Lewis, C. Prejudice toward fat people: the development and validation of the antifat attitudes test. Obes. Res. 5, 297–307 (1997).

Latner, J. D., O’Brien, K. S., Durso, L. E., Brinkman, L. A. & MacDonald, T. Weighing obesity stigma: the relative strength of different forms of bias. Int. J. Obes. 32, 1145–1152 (2008).

Greenwald, A. G., McGhee, D. E. & Schwartz, J. L. Measuring individual differences in implicit cognition: the implicit association test. J. Pers. Soc. Psychol. 74, 1464–1480 (1998).

Gagne, D. A. Evaluation of an Obesity Stigma Intervention in Reducing Implicit and Explicit Weight Bias (Saint Louis Univ., 2014).

Gutierrez, A. P. Enhancing the Calibration Accuracy of Adult Learners: A Multifaceted Intervention (Univ. Nevada, 2012).

Heijltjes, A., van Gog, T., Leppink, J. & Paas, F. Improving critical thinking: effects of dispositions and instructions on economics students’ reasoning skills. Learn. Instr. 29, 31–42 (2014).

Fong, G. T., Krantz, D. H. & Nisbett, R. E. The effects of statistical training on thinking about everyday problems. Cogn. Psychol. 18, 253–292 (1986).

De Neys, W. & Glumicic, T. Conflict monitoring in dual process theories of thinking. Cognition 106, 1248–1299 (2008).

Tversky, A. & Kahneman, D. The framing of decisions and the psychology of choice. Science 211, 453–458 (1981).

Stanovich, K. E. in In Two Minds: Dual Processes and Beyond (ed. Evans, J.) Vol. 369, 55–88 (Oxford Univ. Press, 2009).

Evans, J. S. B. T. In two minds: dual-process accounts of reasoning. Trends Cogn. Sci. 7, 454–459 (2003).

Huff, J. D. & Nietfeld, J. L. Using strategy instruction and confidence judgments to improve metacognitive monitoring. Metacogn. Learn. 4, 161–176 (2009).

Pronin, E. & Kugler, M. B. Valuing thoughts, ignoring behavior: the introspection illusion as a source of the bias blind spot. J. Exp. Soc. Psychol. 43, 565–578 (2007).

Kolić-Vehovec, S., Pahljina-Reinić, R. & Rončević Zubković, B. Effects of collaboration and informing students about overconfidence on metacognitive judgment in conceptual learning. Metacogn. Learn. 17, 87–116 (2022).

Schraw, G. A conceptual analysis of five measures of metacognitive monitoring. Metacogn. Learn. 4, 33–45 (2009).

Cox, C. & Mouw, J. T. Disruption of the representativeness heuristic: can we be perturbed into using correct probabilistic reasoning? Educ. Stud. Math. 23, 163–178 (1992).

Legaki, N.-Z. & Assimakopoulos, V. F-LaurelXP: a gameful learning experience in forecasting. In Proc. 2nd International GamiFIN Conference Vol. 2186 (CEUR, 2018).

Morsanyi, K., Handley, S. J. & Serpell, S. Making heads or tails of probability: an experiment with random generators. Br. J. Educ. Psychol. 83, 379–395 (2013).

Fox, C. R. & Levav, J. Partition-edit-count: naive extensional reasoning in judgment of conditional probability. J. Exp. Psychol. Gen. 133, 626–642 (2004).

Green, D. R. Probability Concepts in School Pupils Aged 11–16 Years (Loughborough Univ., 1982).

Onal, I. G. & Kumkale, G. T. Effectiveness of source‐monitoring training in reducing halo error and negativity bias in a performance appraisal setting. Appl. Psychol. 71, 1635–1653 (2022).

Martell, R. F. & Evans, D. P. Source-monitoring training: toward reducing rater expectancy effects in behavioral measurement. J. Appl. Psychol. 90, 956–963 (2005).

Ramdass, D. H. Improving Fifth Grade Students’ Mathematics Self-Efficacy Calibration and Performance through Self-Regulation Training (City Univ. of New York, 2009).

Gertner, A., Zaromb, F., Schneider, R., Roberts, R. D. & Matthews, G. The assessment of biases in cognition: development and evaluation of an assessment instrument for the measurement of cognitive bias. MITRE Technical Report MTR160163 https://www.mitre.org/news-insights/publication/assessment-biases-cognition (2016).

Rodríguez-Ferreiro, J., Vadillo, M. A. & Barberia, I. Debiasing causal inferences: over and beyond suboptimal sampling. Teach. Psychol. 50, 230–236 (2023).

Matute, H., Yarritu, I. & Vadillo, M. A. Illusions of causality at the heart of pseudoscience. Br. J. Psychol. 102, 392–405 (2011).

Rowland, K. Counselor Attributional Bias (Ball State Univ., 1981).

Storms, M. D. Videotape and the attribution process: reversing actors’ and observers’ points of view. J. Pers. Soc. Psychol. 27, 165–175 (1973).

Morton, T. A., Haslam, S. A., Postmes, T. & Ryan, M. K. We value what values us: the appeal of identity‐affirming science. Polit. Psychol. 27, 823–838 (2006).

Scopelliti, I., Min, H. L., McCormick, E., Kassam, K. S. & Morewedge, C. K. Individual differences in correspondence bias: measurement, consequences, and correction of biased interpersonal attributions. Manag. Sci. 64, 1879–1910 (2018).

Cook, M. B. & Smallman, H. S. Human factors of the confirmation bias in intelligence analysis: decision support from graphical evidence landscapes. Hum. Factors 50, 745–754 (2008).

Silver, E. M. Cognitive Style as a Moderator Variable in Rater Training to Reduce Illusory Halo (Kansas State Univ., 1986).

Silver, E. M. Halo Bias, Implicit Personality Theory, and Cognitive Complexity: Possible Relationships and Implications for Improving the Psychometric Quality of Ratings (Kansas State Univ., 1982).

Swift, J. A. et al. Are anti-stigma films a useful strategy for reducing weight bias among trainee healthcare professionals? Results of a pilot randomized control trial. Obes. Facts 6, 91–102 (2013).

Allison, D. B., Basile, V. C. & Yuker, H. E. The measurement of attitudes toward and beliefs about obese persons. Int. J. Eat. Disord. 10, 599–607 (1991).

Crandall, C. S. Prejudice against fat people: ideology and self-interest. J. Pers. Soc. Psychol. 66, 882–894 (1994).

Testa, I. et al. Effects of instruction on students’ overconfidence in introductory quantum mechanics. Phys. Rev. Phys. Educ. Res. 16, 010143 (2020).

Boone, W. J., Staver, J. R. & Yale, M. S. Rasch Analysis in the Human Sciences (Springer, 2016).

Van Bockstaele, B., van der Molen, M. J., van Nieuwenhuijzen, M. & Salemink, E. Modification of hostile attribution bias reduces self-reported reactive aggressive behavior in adolescents. J. Exp. Child Psychol. 194, 104811 (2020).

Houtkamp, E. O., van der Molen, M. J., de Voogd, E. L., Salemink, E. & Klein, A. M. The relation between social anxiety and biased interpretations in adolescents with mild intellectual disabilities. Res. Dev. Disabil. 67, 94–98 (2017).

Snyder, M. & Swann, W. B. Hypothesis-testing processes in social interaction. J. Pers. Soc. Psychol. 36, 1202–1212 (1978).

West, R. F., Toplak, M. E. & Stanovich, K. E. Heuristics and biases as measures of critical thinking: associations with cognitive ability and thinking dispositions. J. Educ. Psychol. 100, 930–941 (2008).

Stanovich, K. E. Rationality and the Reflective Mind (Oxford Univ. Press, 2011).

Stanovich, K. E. & West, R. F. Individual differences in reasoning: implications for the rationality debate? Behav. Brain Sci. 23, 645–665 (2000).

Veinott, E. S. et al. The effect of camera perspective and session duration on training decision making in a serious video game. In International Games Innovation Conference (IEEE, 2013).

Whitaker, E. et al. The effectiveness of intelligent tutoring on training in a video game: an experiment in student modeling with worked-out examples for serious games. In International Games Innovation Conference (IEEE, 2013).