Artificial intelligence has revolutionized medical imaging, particularly in diagnosis and segmentation. Numerous studies have employed artificial neural networks to identify diseases and predict prognosis in various fields, including neurosurgery and plastic surgery13,14,15,16. Subsequently, convolutional neural network (CNN) models were developed to recognize abnormal regions, diagnose conditions, and predict outcomes from clinical photographs17,18.

Mantelakis et al. provided a comprehensive review of artificial intelligence applications in plastic surgery, ranging from early non-neural network models to contemporary CNN approaches. They reported that AI systems analyzing visual images for lesion assessment and treatment planning demonstrated remarkably high accuracy19.

Free flap reconstruction is a crucial treatment modality for restoring both function and aesthetics following tumor resection or trauma20. Although the procedure has a success rate exceeding 95%, rare instances of vascular compromise can lead to complications2,3. Such complications can often be averted through frequent monitoring and prompt salvage procedures4,5,6.

Current monitoring methods include traditional observation, Doppler systems, color duplex sonography, and near-infrared spectroscopy. Despite their popularity, each of these methods has critical limitations: they may produce meaningless signals, prove difficult for inexperienced resident staff to interpret, or require observational devices too large for frequent use21,22. Moreover, none of the existing monitoring methods provides quantifiable measurements of flap changes6.

In response to these limitations, Hsu et al. developed a supervised learning approach to generate quantified results for extraoral flap changes12. To date, however, no such attempt has been made for free flaps placed inside the oral cavity. Monitoring intraoral flaps is more challenging than observing flaps at external sites. Despite regular oral cleaning, the flap is often covered by blood mixed with saliva, making complete visualization difficult. Additionally, the complex anatomy of the oral cavity can make photographic documentation itself challenging23,24. Furthermore, some of the aforementioned monitoring devices cannot be used within the oral cavity at all.

Our model utilizes only 2D images of the flap, eliminating the discomfort associated with inserting large probes into the oral cavity. While the cave-like structure of the oral cavity inevitably leads to significant lighting variations between images, this challenge was overcome through random adjustments within the model. Consequently, only a smartphone flash was required for image capture.
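The text does not specify which random adjustments were used. As an illustration only, one common way to make a classifier tolerant of lighting variation is to apply a random brightness shift and contrast scale to each training image. The sketch below assumes grayscale intensities in [0, 1]; the function name `random_lighting` and the parameter ranges are hypothetical and are not taken from the study.

```python
import random


def random_lighting(pixels, rng, max_brightness=0.2, contrast_range=(0.8, 1.2)):
    """Apply one random brightness shift and one contrast scale to an image.

    `pixels` is a list of rows, each a list of intensities in [0, 1].
    The ranges are illustrative assumptions, not values from the study.
    """
    shift = rng.uniform(-max_brightness, max_brightness)
    scale = rng.uniform(*contrast_range)
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    # Scale contrast around the image mean, shift brightness, then clip to [0, 1].
    return [
        [min(1.0, max(0.0, (v - mean) * scale + mean + shift)) for v in row]
        for row in pixels
    ]


# A seeded generator makes the augmentation reproducible.
image = [[0.0, 0.5], [1.0, 0.25]]
augmented = random_lighting(image, random.Random(0))
```

Drawing a fresh shift and scale for every image in every epoch exposes the network to many lighting conditions for the same flap, which is the usual rationale for this kind of augmentation.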

Given that the success rate of vascular anastomosis exceeds 95%, only 10 of the 131 patients in our study underwent salvage procedures or experienced total flap loss. We addressed the resulting dataset imbalance by applying class weighting and incorporating focal loss during training. The two techniques are complementary: class weighting mitigates the underrepresentation of minority classes by assigning them higher importance, while focal loss dynamically down-weights easy samples so that learning focuses on hard-to-classify ones. Combined, they enhance the model’s ability to learn discriminative features from underrepresented classes and challenging instances, thereby improving overall classification performance. Such an approach is particularly effective in highly skewed datasets, where it can improve generalization and increase the recognition rate of critical minority classes encountered in real-world applications.
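The interaction between the two terms can be made concrete with the standard binary focal loss, FL = -alpha_t (1 - p_t)^gamma log(p_t). The following is a minimal sketch of that formula, not the training code used in this study; the values `alpha_pos=0.75` and `gamma=2.0` are assumptions chosen for illustration.

```python
import math


def weighted_focal_loss(p_pos, label, alpha_pos=0.75, gamma=2.0):
    """Class-weighted binary focal loss for a single sample.

    p_pos:     predicted probability of class 1 (vascular compromise)
    label:     ground-truth class, 0 or 1
    alpha_pos: class weight for class 1; class 0 receives 1 - alpha_pos
    gamma:     focusing parameter; gamma = 0 gives weighted cross-entropy
    """
    p_t = p_pos if label == 1 else 1.0 - p_pos
    alpha_t = alpha_pos if label == 1 else 1.0 - alpha_pos
    p_t = min(max(p_t, 1e-7), 1.0)  # avoid log(0)
    # (1 - p_t) ** gamma shrinks the loss of well-classified samples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# A confidently correct positive contributes far less than a missed one.
easy = weighted_focal_loss(0.9, 1)
hard = weighted_focal_loss(0.1, 1)
```

The class weight `alpha_t` raises the contribution of the rare class regardless of difficulty, whereas the modulating factor acts per sample, which is why the two are often used together on skewed datasets.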

Despite the presence of complex structures such as teeth, lips, and tongue in the images, often obscured by blood and saliva, our model successfully recognizes the flap and detects changes in its viability. This capability persists even when these structures deviate from their normal appearance due to surgical intervention and postoperative conditions.

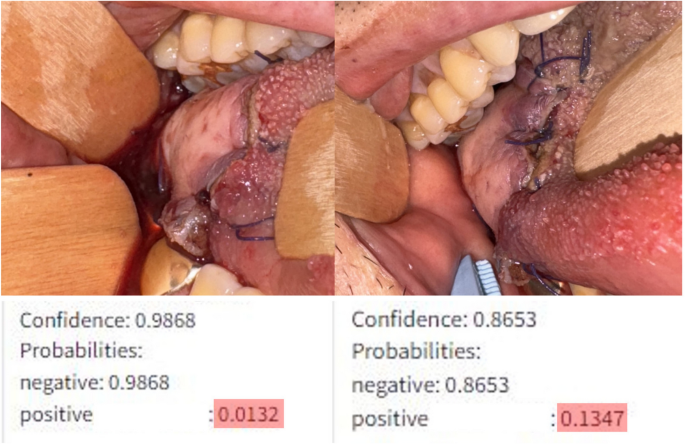

The model’s ability to quantify changes allows for more sensitive detection of alterations compared to human observers. As illustrated in Fig. 2, two input images were captured with a 3-h interval (A: 2 h post-initial salvage procedure, B: 5 h post-initial salvage procedure). While the second image shows a slight increase in the congestive margin compared to the first, the difference was subtle enough that clinicians deemed the flaps similar and deferred further salvage procedures. However, the model indicated a significant change, estimating a 1.3% probability of vascular compromise in the flap in the first image, which increased nearly tenfold to 13% in the second image. Subsequently, this patient underwent a second salvage procedure 15 h after the initial one. Despite these interventions, the vascular compromise remained unresolved, ultimately necessitating flap removal.

Fig. 2. Input images from a 58-year-old male patient who underwent glossectomy and pharyngectomy for oropharyngeal cancer, with the defect repaired using an anterolateral thigh flap. The patient exhibited congestive symptoms following the initial surgery, prompting vein re-anastomosis after 11 h. The image on the left shows the model’s analysis 2 h after re-anastomosis, while the image on the right shows the analysis 5 h after re-anastomosis.

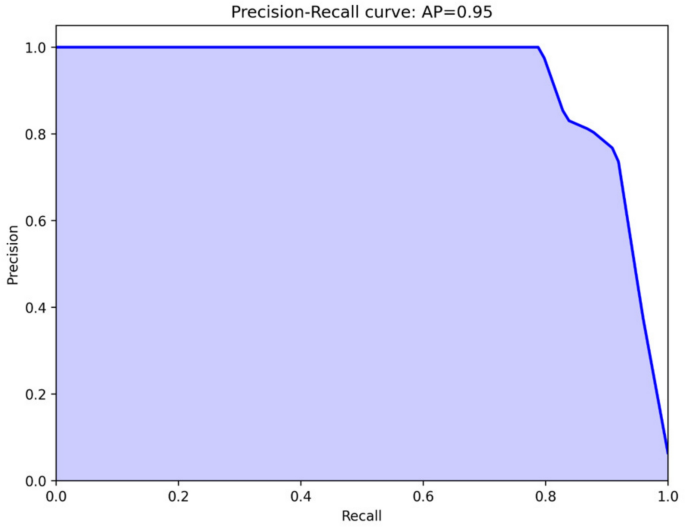

Despite the model’s strong overall performance (F1 score = 0.9863), the relatively low recall (0.83) observed for class 1 requires improvement. This value indicates that the model misclassifies approximately 17% of actual cases of vascular compromise as normal, which could lead to significant clinical risk in practice. These errors stem primarily from the limited dataset, which contains only a few hundred images of vascular compromise, and from a suboptimal confidence threshold for determining vascular compromise. As previously described, the current model does not output class 1 when the confidence level falls below 50%; however, it does signal a potential vascular disorder through an increased confidence level. Therefore, prior to clinical deployment, the confidence threshold should be recalibrated on images in which blood flow disorders are suspected. In clinical settings, where missing a compromised flap (false negative) could lead to irreversible tissue loss, we recommend setting a lower threshold for flagging potential compromise and accepting a higher false-positive rate as a reasonable trade-off for patient safety. A clinically acceptable recall should approach 0.95 or higher, given the severe consequences of missed vascular compromise.
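One simple way to recalibrate such a threshold is to choose, on a labeled validation set, the highest cutoff that still reaches the target recall for class 1. The sketch below illustrates this idea under the assumption that a case is flagged when its confidence is at least the threshold; the function name and the example scores are hypothetical and do not come from the study data.

```python
import math


def threshold_for_recall(scores, labels, target_recall=0.95):
    """Return the highest threshold whose class 1 recall meets the target.

    scores: model confidence for class 1, one value per image
    labels: ground-truth classes (1 = vascular compromise, 0 = healthy)
    A case is flagged when score >= threshold.
    """
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not positives:
        raise ValueError("no class 1 samples to calibrate on")
    # Number of true positives that must be flagged; the small epsilon
    # guards against floating-point round-up in the product.
    needed = max(1, math.ceil(target_recall * len(positives) - 1e-9))
    return positives[needed - 1]


# Hypothetical validation scores: four compromised flaps, four healthy flaps.
scores = [0.9, 0.6, 0.3, 0.13, 0.8, 0.2, 0.05, 0.4]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
cutoff = threshold_for_recall(scores, labels, target_recall=1.0)
```

Lowering the cutoff in this way necessarily flags more healthy flaps, so the resulting false-positive rate should be reported alongside the recall when a threshold is proposed for clinical use.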

In the test set of 376 images, the model misclassified only five. One healthy flap was incorrectly classified as necrotic (false positive), while four flaps with vascular compromise were misclassified as healthy (false negatives). The false-positive case involved a glossectomy defect repaired with an ALT free flap, where the contralateral tongue appeared necrotic. The model mistook this darkened tongue for the flap because of their similar proportions in the image. In three of the four false-negative cases, the flap occupied only a small portion of the image and most of the photograph consisted of normal tissue, which likely contributed to the misclassification. The remaining case involved an image taken 12 h after a forearm flap was transplanted to the buccal cheek. In this image, the color and margins of the flap closely resembled those of a healthy flap, leading the model to diagnose it as having no vascular compromise. However, by postoperative day four, the flap showed signs of necrosis, including partial skin sloughing.
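These error counts can be related to the reported class 1 recall with a small calculation. In the sketch below, the one healthy flap flagged as compromised and the four compromised flaps classified as healthy come from the text; the number of correctly detected compromised flaps (`tp=20`) is an assumption, chosen because it is one count consistent with a recall of 0.83, and the true negatives follow from the test set size of 376.

```python
def binary_metrics(tp, fp, fn, tn):
    """Recall, precision, F1 for class 1, and overall accuracy."""
    recall = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"recall": recall, "precision": precision, "f1": f1, "accuracy": accuracy}


# fp and fn are taken from the text; tp is an ASSUMED value (see above).
tp, fp, fn = 20, 1, 4
tn = 376 - tp - fp - fn
metrics = binary_metrics(tp, fp, fn, tn)
```

Because the compromised class is small, each additional missed case shifts the class 1 recall by several percentage points while barely affecting accuracy, which is why recall rather than accuracy is the more informative figure here.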

This model, initially developed for diagnostic purposes, demonstrates potential as a sensitive detector of vascular compromise in flaps, serving as an assistant to surgeons. Because it can analyze images taken under various conditions and lighting, it could provide round-the-clock, location-independent results that support surgeons’ postoperative decision-making.

However, it is widely acknowledged that AI should augment, not replace, the surgeon’s decision-making process20. As the model quantifies the probability of vascular compromise rather than dictating treatment timing, clinical judgment remains crucial in determining the threshold for initiating salvage procedures.

This study presents several areas for potential improvement. While the model currently provides a binary classification based on the probability of vascular compromise, future iterations could incorporate expert annotations to offer more nuanced explanations for the flap’s apparent condition, potentially providing clinicians with additional insights for treatment decisions. Moreover, this study was conducted at a single institution with patients of a single nationality, limiting the patient cohort to Asian ethnicity. To expand the model’s applicability, further training on more diverse skin complexions would be necessary.

In summary, our model demonstrates high performance in identifying flaps and assessing their viability. Notably, this represents the first deep learning model developed for intraoral flap monitoring.