The dataset and medical data preprocessing

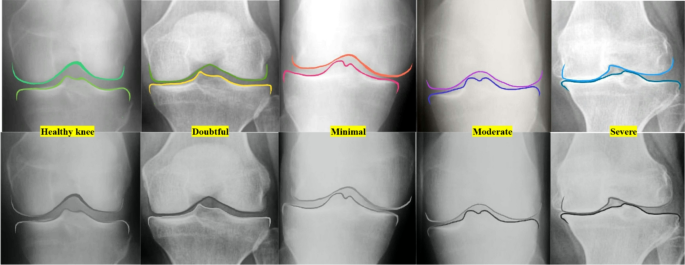

The Knee Osteoarthritis Severity Grading Dataset by Pingjun Chen is designed specifically for the classification and prediction of knee osteoarthritis (OA) severity from radiographic images. It contains knee X-ray images labeled according to the Kellgren–Lawrence grading system, which rates OA severity on a five-point scale: 0 (no OA), 1 (doubtful OA), 2 (minimal OA), 3 (moderate OA), and 4 (severe OA) (Fig. 3).

Stages of joint space narrowing: healthy knee (green shading); doubtful OA with a possible, low risk of osteophytes (yellow shading); minimal OA with a high possible risk of osteophytes (red shading); moderate OA with osteophytes and pronounced joint narrowing (purple shading); and severe OA with a completely narrowed joint (blue shading).

This scale assesses OA based on features observable in X-rays, such as joint space narrowing, osteophyte formation, and subchondral sclerosis. Researchers use this dataset to train machine learning models, particularly convolutional neural networks, to automate the detection and grading of knee OA. The dataset serves not only as a tool for developing diagnostic models but also supports clinical research into OA progression and treatment evaluation. In our study, we use the dataset to detect early-stage OA and therefore keep only two classes: grade 0 (no OA), representing a healthy knee, and grade 1 (doubtful OA), representing early-stage OA.

When working with medical image datasets such as the Knee OA Severity Grading Dataset, effective preprocessing is essential to improve model performance and ensure accurate predictions. To achieve this, we normalize pixel values to a consistent scale, reducing variations in brightness and contrast and enabling the model to focus on structural features. Noise reduction is applied using filters such as Gaussian blur or more advanced techniques such as Non-Local Means to remove unwanted artifacts. Images are resized to a standard resolution while preserving their aspect ratio, with padding where needed to prevent distortion. Data augmentation increases variability by introducing random rotations, translations, flips, scaling, and adjustments in brightness and contrast that simulate diverse imaging conditions. Additionally, contrast enhancement techniques, such as histogram equalization or CLAHE, are used to highlight important image details and improve the clarity of structural features. Together, these preprocessing steps ensure that the model learns from high-quality, standardized input data.
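Two of the preprocessing steps described above, aspect-ratio-preserving resizing with padding and pixel normalization, can be sketched in plain Python as follows. This is a minimal illustration rather than the pipeline used in the study: the 224-pixel target size, the min-max normalization scheme, and the function names `letterbox_geometry` and `min_max_normalize` are assumptions introduced here, since the text does not specify them.

```python
def letterbox_geometry(width, height, target=224):
    """Compute the resize and padding needed to fit an image into a square.

    Returns (new_w, new_h, pad_left, pad_top, pad_right, pad_bottom) so that
    the resized image keeps its aspect ratio and the padded result is
    target x target pixels. The target of 224 is an assumed value.
    """
    scale = target / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    pad_w = target - new_w
    pad_h = target - new_h
    pad_left, pad_top = pad_w // 2, pad_h // 2
    return new_w, new_h, pad_left, pad_top, pad_w - pad_left, pad_h - pad_top


def min_max_normalize(pixels):
    """Rescale a 2D list of pixel intensities to the range [0, 1]."""
    flat = [p for row in pixels for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant image carries no contrast; map it to zeros.
        return [[0.0 for _ in row] for row in pixels]
    span = hi - lo
    return [[(p - lo) / span for p in row] for row in pixels]


# Example: a 300 x 200 radiograph is scaled to 224 x 149 and padded vertically.
geometry = letterbox_geometry(300, 200)
normalized = min_max_normalize([[0, 128], [255, 255]])
```

Computing the padding explicitly, instead of stretching the image to a square, keeps the joint geometry undistorted, which matters when the features of interest are joint space widths.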

The dataset used in this study consists of 1600 radiographic knee images, evenly divided between healthy knees (Kellgren–Lawrence grade 0) and early-stage OA knees (grade 1). To ensure balanced class representation, we employed a stratified 70-15-15 split. As a result, the training set contained 1120 images, with 560 healthy and 560 early OA cases. The validation and test sets each comprised 240 images, balanced with 120 healthy and 120 early OA images. This careful distribution avoids class imbalance and enhances the model's ability to learn discriminative features for both categories.
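The stratified 70-15-15 split can be reproduced with a short sketch such as the one below. It is an illustration under stated assumptions: the helper name `stratified_split`, the fixed seed, and the use of integer indices in place of image files are hypothetical and not taken from the study.

```python
import random


def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=0):
    """Split sample indices into train/val/test while preserving class ratios."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_train = round(len(indices) * fractions[0])
        n_val = round(len(indices) * fractions[1])
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# 800 healthy (label 0) and 800 early-OA (label 1) images, as in the text.
labels = [0] * 800 + [1] * 800
train_idx, val_idx, test_idx = stratified_split(labels)
```

Splitting each class separately is what guarantees the 560/120/120 per-class counts; a single shuffle over all 1600 images would only match them approximately.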

The metrics

In our study on medical image analysis for grading knee OA severity using a classification model, selecting evaluation metrics that account for both prediction accuracy and clinical significance is crucial. We use accuracy to measure the overall correctness of the model, though it can be less reliable in imbalanced datasets:

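$$Accuracy = \frac{TP+TN}{TP+TN+FP+FN} \tag{6}$$

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.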

Precision evaluates the proportion of true positive predictions out of all positive predictions, making it essential for scenarios where minimizing false positives is important:

$$Precision = \frac{TP}{TP+FP} \tag{7}$$

Recall assesses the model's ability to identify all actual positive cases, a critical aspect in medical diagnostics, where missing true cases must be avoided:

$$Recall = \frac{TP}{TP+FN} \tag{8}$$
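The three metrics defined above follow directly from the four confusion-matrix counts. The sketch below shows one way to compute them. The function name `classification_metrics` and the example counts are hypothetical and chosen only for illustration.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, and recall from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    # Guard against empty denominators when a class is never predicted/present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Illustrative counts only (not results from the study).
metrics = classification_metrics(tp=90, tn=85, fp=15, fn=10)
```

With these illustrative counts, recall (90/100) exceeds precision (90/105), which shows how the two metrics separate missed cases from false alarms even when accuracy alone looks similar.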

In addition to these, we assess model complexity and efficiency through the number of parameters, which reflects the model's capacity and size, and FLOPs, which quantify the computation required for a single inference. Together, these metrics provide a balanced evaluation of both the model's predictive performance and its feasibility for practical applications.
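To make the two complexity metrics concrete, the sketch below counts the parameters and multiply-accumulate operations (MACs) of a single standard convolutional layer. It is an illustration only: the helper name `conv2d_cost` and the example layer shape are assumptions, and the text does not state whether its FLOPs figures count one MAC as one operation or as two, so the convention used here should be treated as a placeholder.

```python
def conv2d_cost(in_ch, out_ch, kernel, out_h, out_w, bias=True):
    """Return (parameters, MACs) for one standard 2D convolution layer.

    Each output value needs kernel * kernel * in_ch multiply-accumulates,
    and there are out_ch * out_h * out_w output values.
    """
    weights = kernel * kernel * in_ch * out_ch
    params = weights + (out_ch if bias else 0)
    macs = weights * out_h * out_w
    return params, macs


# Hypothetical first layer: 3 -> 32 channels, 3x3 kernel, 112x112 output map.
params, macs = conv2d_cost(in_ch=3, out_ch=32, kernel=3, out_h=112, out_w=112)
```

Summing these per-layer values over a whole network gives totals of the kind reported as "Params" and "FLOPs". Some tools report FLOPs as twice the MAC count, so figures from different sources are comparable only when the convention matches.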

The comparison of the results with baseline models

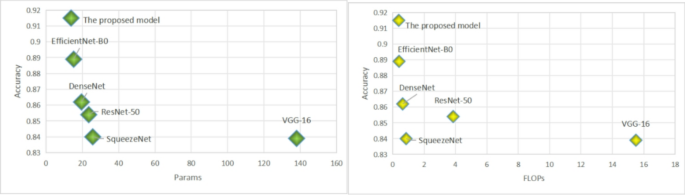

In Table 2, we provide a detailed performance comparison of various deep learning architectures on the task of early osteoarthritis detection. Our evaluation focuses on several critical metrics: accuracy, the number of parameters (in millions), floating-point operations (FLOPs, in billions), recall, and precision. The proposed model achieves the highest accuracy and precision among all the models considered, and it does so with an efficient computational profile, using fewer parameters and FLOPs than traditional architectures such as VGG-16 and ResNet-50 (Fig. 4).

Comparison of the models by accuracy versus number of parameters and by accuracy versus FLOPs.

This computational efficiency is particularly advantageous for real-time clinical applications where both high accuracy and low computational overhead are crucial. The analysis reveals that while models like ResNet-50 and VGG-16 consume a considerable amount of computational resources, they do not necessarily provide the best performance, which emphasizes the importance of optimizing both the architectural design and the operational efficiency for medical imaging tasks. On the other hand, DenseNet shows a good balance between performance and computational demands, suggesting its potential utility in environments with some computational constraints.

Table 2 Comparative analysis of deep learning models for osteoarthritis detection.

Meanwhile, SqueezeNet, despite its moderate use of resources, falls short in precision, which could limit its applicability for precise medical diagnostics. Our proposed model not only excels in accurately detecting and grading osteoarthritis but also does so with commendable efficiency in resource usage. This dual advantage makes it an excellent candidate for widespread clinical deployment, particularly in settings that demand quick and reliable diagnostic results without taxing computational resources.

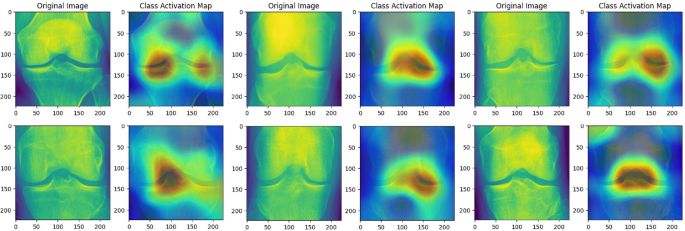

CAMs highlight the regions where osteophytes typically form, showing that the model focuses on features relevant to the early detection of osteophytes.

Moreover, the Class Activation Maps (CAMs) in Fig. 5 show which image regions the proposed model attends to when assessing knee X-ray images for the presence of osteoarthritis. The CAMs corresponding to healthy knees exhibit general and mild activation, suggesting an absence of notable pathological features. Conversely, the CAMs associated with early-stage osteoarthritis display pronounced activation in specific regions, notably around the joint spaces and bone edges. These areas are key indicators of osteoarthritic changes, such as joint space narrowing and the formation of osteophytes. This visualization not only aids in understanding the diagnostic process of the proposed model but also confirms its capability to detect clinically relevant features, thereby supporting its use in AI-enhanced diagnostic tools and informing clinical decision-making. This blend of high precision, recall, and computational efficiency underscores the potential of our proposed model to set a new standard in osteoarthritis detection within the clinical landscape.
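The text does not state which CAM variant was used to produce these maps. As a point of reference, and only under the assumption that the network ends in global average pooling followed by a linear classifier, the original CAM formulation computes the map for class $c$ as a weighted sum of the final convolutional feature maps:

$$M_c(x, y) = \sum_k w_k^c \, f_k(x, y)$$

Here $f_k(x, y)$ is the activation of channel $k$ in the last convolutional layer at spatial location $(x, y)$, and $w_k^c$ is the classifier weight connecting the pooled channel $k$ to the output for class $c$. Locations with large $M_c(x, y)$ are those that contribute most to the score of class $c$, which is why strong activation around joint spaces and bone edges can be read as the model relying on those regions.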

The computational efficiency of the proposed model not only reduces memory and power demands but also directly contributes to faster inference times, which is critical for real-time clinical applications. On a standard NVIDIA RTX 3080 GPU, the model processes a single radiographic image in under 100 milliseconds, enabling rapid screening in high-throughput environments such as outpatient clinics or sports medicine facilities. Compared to conventional deep architectures like ResNet-50, which require approximately 2–3× longer processing time under similar conditions, our model significantly reduces diagnostic latency. Furthermore, this acceleration supports seamless integration into telemedicine platforms and point-of-care diagnostic tools, where immediate feedback is essential.
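Per-image latency figures of this kind are typically obtained with a timing loop that discards warm-up runs. The sketch below shows the general pattern. It is not the measurement procedure used in the study: `measure_latency_ms` and `dummy_predict` are hypothetical names, and the dummy function merely stands in for a real model's forward pass.

```python
import statistics
import time


def measure_latency_ms(predict, sample, warmup=5, runs=30):
    """Return (median, worst) latency in milliseconds for predict(sample)."""
    for _ in range(warmup):
        predict(sample)  # warm-up runs are excluded from the statistics
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings), max(timings)


def dummy_predict(image):
    """Hypothetical stand-in for a model's forward pass."""
    return sum(image) / len(image)


median_ms, worst_ms = measure_latency_ms(dummy_predict, [0.5] * 10_000)
```

When timing on a GPU, kernel launches are asynchronous, so the device must be synchronized before the timer is stopped (in PyTorch, for example, with `torch.cuda.synchronize()`); otherwise the measured time understates the true latency.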

The comparison of results with state-of-the-art models

Recent advancements in deep learning for medical imaging have significantly improved diagnostic precision and efficiency. This section provides a comparative analysis of our proposed model against state-of-the-art (SOTA) architectures. Several contemporary studies have highlighted the role of efficient architectures in medical image analysis. For instance, Ref. 25 introduced an enhanced ResNet architecture for knee osteoarthritis grading, integrating hybrid attention mechanisms to improve feature localization. While their model achieved high accuracy, the computational demands were substantial, limiting its real-time applicability. Similarly, Ref. 3 proposed a feature extraction method that replaces the fully connected layer with a global average pooling (GAP) layer. A comparative analysis of 16 CNN feature extractors and three machine learning classifiers demonstrated the efficacy of that approach.

In another relevant study, Ref. 8 proposed a lightweight MobileNetV3-based framework for diagnosing musculoskeletal disorders. Despite the reduced computational overhead, their approach showed limitations in handling high-resolution medical images, which are critical for OA diagnostics. A Densely Connected Fully Convolutional Network (DFCN) (Ref. 26) was developed for knee osteoarthritis classification, leveraging multiple learning strategies. The KOA-CCTNet model (Ref. 27) builds on a modified Compact Convolutional Transformer; it surpasses transfer learning models in KOA classification and provides an efficient solution for handling large-scale datasets with advanced pre-processing techniques. Ref. 28 combined deep learning with a whale optimization algorithm for knee osteoarthritis classification, using EfficientNet-B0 and DenseNet201 for feature extraction; the method surpassed recent techniques and incorporated Explainable AI for interpretability. Ref. 29 introduced the OsteoHRNet model, built on a High-Resolution Network (HRNet) with an attention mechanism, for Kellgren–Lawrence grade classification of knee osteoarthritis. The model effectively captures multi-scale features and uses Grad-CAM for interpretability. Our proposed model, an optimized EfficientNet-B0 architecture enhanced with the Efficient Channel Attention (ECA) module, addresses these limitations effectively. Compared to the aforementioned models, it delivers superior accuracy (91.5%), precision (89.5%), and recall (92%) while maintaining minimal computational overhead with 13.78 M parameters and 0.382 GFLOPs. Notably, the lightweight nature of the ECA module eliminates the inefficiencies associated with traditional attention mechanisms like Squeeze-and-Excitation (SE) blocks, making our model well-suited for real-time clinical applications. A detailed comparison of metrics is provided in Table 1, which highlights the advantages of our approach over other SOTA models.
Furthermore, Class Activation Maps (CAMs) validate the model's ability to localize osteophytes and joint space narrowing with high precision, particularly in early-stage OA cases. This capability underscores the clinical relevance of our model, as early detection significantly improves patient outcomes.
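The ECA mechanism mentioned above can be illustrated with a small, framework-free sketch. It follows the general ECA recipe (global average pooling per channel, a one-dimensional convolution across neighbouring channels, a sigmoid gate, and channel-wise rescaling), but it is not the authors' implementation: the function names, the fixed kernel size of 3, and the kernel weights in the example are hypothetical.

```python
import math


def eca_channel_weights(feature_maps, kernel_weights):
    """Compute per-channel attention gates in the style of ECA.

    feature_maps: list of C channels, each a 2D list (H x W) of floats.
    kernel_weights: odd-length list of 1D convolution weights (assumed values).
    """
    k = len(kernel_weights)
    if k % 2 == 0:
        raise ValueError("kernel size must be odd")
    pad = k // 2
    # 1) Global average pooling: one descriptor per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
              for ch in feature_maps]
    # 2) 1D convolution across the channel axis (zero padding, no bias).
    padded = [0.0] * pad + pooled + [0.0] * pad
    mixed = [sum(kernel_weights[j] * padded[i + j] for j in range(k))
             for i in range(len(pooled))]
    # 3) Sigmoid turns each mixed descriptor into a gate in (0, 1).
    return [1.0 / (1.0 + math.exp(-m)) for m in mixed]


def apply_eca(feature_maps, kernel_weights):
    """Rescale every channel by its attention gate."""
    gates = eca_channel_weights(feature_maps, kernel_weights)
    return [[[v * g for v in row] for row in ch]
            for ch, g in zip(feature_maps, gates)]


# Three 2x2 channels; the kernel weights below are illustrative, not learned.
maps = [[[1.0, 1.0], [1.0, 1.0]],
        [[2.0, 2.0], [2.0, 2.0]],
        [[0.0, 0.0], [0.0, 0.0]]]
gates = eca_channel_weights(maps, [0.0, 1.0, 0.0])
rescaled = apply_eca(maps, [0.0, 1.0, 0.0])
```

The only learnable parameters in this mechanism are the few kernel weights, whereas an SE block uses two fully connected layers with a channel-reduction bottleneck. That difference is the source of the efficiency advantage the text attributes to ECA.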

Table 3 Comparative analysis of SOTA models used for grading knee OA severity in terms of accuracy, model complexity (Params), computational cost (FLOPs), recall, and precision.

This study sets a new standard for automated OA detection by combining state-of-the-art accuracy with computational efficiency, addressing the critical need for scalable diagnostic tools in healthcare. The proposed model demonstrates superior performance with an accuracy of 92%, which is the highest among the listed models (Table 3).

To ensure a fair comparison among the models listed in Table 3, all architectures were trained under identical conditions unless otherwise specified by their original configurations. Training employed the Adam optimizer with an initial learning rate of 0.0001 and a batch size of 32. A StepLR learning rate scheduler with a step size of 10 and a gamma of 0.1 was used to reduce the learning rate in steps during training. Early stopping was implemented based on validation loss with a patience of 10 epochs to prevent overfitting. Each model was trained for a maximum of 100 epochs using the same training-validation-test split described earlier. These consistent hyperparameters help ensure that performance differences stem from architectural differences rather than tuning variations.

Additionally, the proposed model achieves a favorable balance between high performance and computational efficiency. With a parameter count of 13.78 million and 0.382 GFLOPs, the proposed model is notably lightweight compared to models like DST-CNN and ResNet-50, which have significantly higher parameter counts and FLOPs. The recall of 92% highlights the model's effectiveness in identifying true positive cases, a critical factor in medical diagnostics. The precision of 91% indicates that the model keeps false positives low while maintaining high reliability. Compared to other models such as MobileNetV3 and KOA-CCTNet, the proposed model achieves better recall and precision while maintaining a competitive parameter count and computational cost. This balance underscores the model's suitability for real-time clinical applications, where both accuracy and efficiency are paramount.
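The shared training configuration described above (step-wise learning rate decay and early stopping on validation loss) can be sketched without any deep learning framework. The hyperparameter values come from the text, while the names `step_lr`, `EarlyStopping`, and `run_schedule` and the simulated validation losses are hypothetical. The actual optimizer updates are omitted.

```python
def step_lr(epoch, base_lr=1e-4, step_size=10, gamma=0.1):
    """Learning rate under a StepLR-style schedule: decay by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


class EarlyStopping:
    """Stop when validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run_schedule(val_losses, max_epochs=100):
    """Replay a sequence of validation losses and record (epoch, lr) until stopping."""
    stopper = EarlyStopping(patience=10)
    history = []
    for epoch, val_loss in enumerate(val_losses[:max_epochs]):
        history.append((epoch, step_lr(epoch)))
        if stopper.should_stop(val_loss):
            break
    return history


# Simulated losses: five improving epochs, then a plateau (illustrative only).
simulated = [1.0 - 0.05 * e for e in range(5)] + [0.9] * 50
history = run_schedule(simulated)
```

In this simulated run the loss stops improving after epoch 4, so training halts ten epochs later, well before the 100-epoch cap and after the first learning rate decay at epoch 10.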

It is important to note that performance metrics such as precision may vary significantly depending on the classification setup. For example, the OsteoHRNet model reported a precision of 0.39 for Grade 1 cases, reflecting the difficulty of early-stage OA detection in a multi-class setting involving all Kellgren–Lawrence grades (0–4). In our study, however, the classification task is binary (KL 0 vs. KL 1), which reduces inter-class confusion and allows the model to focus on subtle differences relevant to early OA. The use of a lightweight attention mechanism and targeted preprocessing further enhances the model’s ability to distinguish early-stage cases, resulting in improved precision and recall.

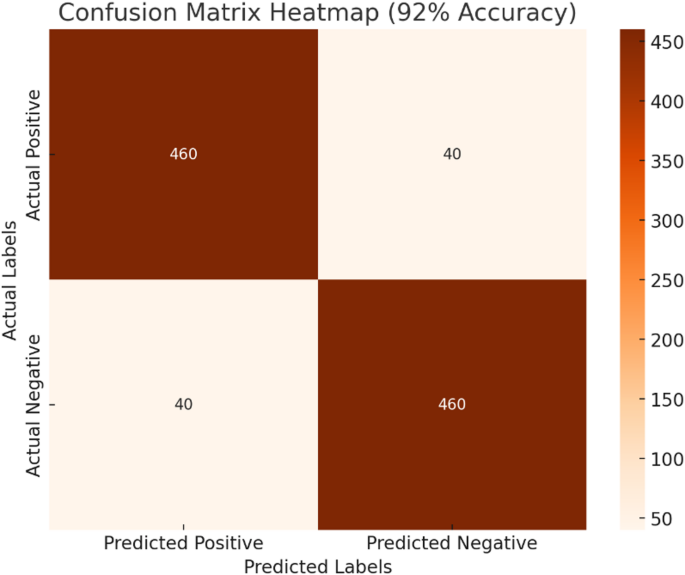

Confusion matrix for the proposed model, showing its performance in classifying knee X-ray images into two categories: healthy (no osteoarthritis) and early-stage osteoarthritis.

The confusion matrix, which corresponds to 92% accuracy, demonstrates the robustness of the proposed model in detecting early-stage knee OA (Fig. 6). The model correctly identifies most cases, with 460 true positives and 460 true negatives, reflecting its strong diagnostic capability. The 40 false positives and 40 false negatives indicate areas where further refinement could enhance performance. From a clinical perspective, the high true positive rate ensures that most early-stage OA cases are detected, allowing timely intervention for athletes, which is critical in preventing disease progression. At the same time, the model maintains a reasonable balance by limiting false alarms, as seen in the low false-positive count. While the model performs well overall, the 40 false negatives highlight the importance of ongoing efforts to refine detection sensitivity. Missing early-stage cases could delay critical treatment, which is significant for athletes whose physical health is paramount. Reducing these errors would increase the reliability of the model in real-world applications.

The proposed model achieves a commendable balance between accuracy and computational efficiency, making it a strong candidate for deployment in clinical settings. Its ability to maintain high performance while operating in resource-constrained environments underscores its practicality and innovation in medical diagnostics.

While our proposed model effectively detects early-stage OA through radiographic features such as joint space narrowing and osteophyte formation, it is important to acknowledge the limitations inherent to X-ray imaging, particularly in assessing soft-tissue structures. The boundary between bone and soft tissue plays a critical role in OA progression, especially in cartilage degradation, which is not directly visible in conventional radiographs. MRI, in contrast, provides superior soft-tissue contrast and allows for direct visualization of cartilage loss, meniscal damage, and synovial inflammation, all of which are vital indicators of OA. Although our model is currently optimized for 2D radiographic analysis, future work will explore its extension to MRI data using publicly available datasets such as fastMRI or the OAI. Such an adaptation would enable the model to capture soft-tissue pathology more accurately, enhancing its clinical utility in comprehensive OA diagnosis. Furthermore, we recognize the potential of multimodal learning approaches that integrate X-ray and MRI features to improve diagnostic performance and facilitate early intervention strategies, particularly for athlete populations where rapid, non-invasive, and reliable screening tools are essential.