Qantas says it used assets generated by ChatGPT for an email it sent to customers affected by a massive data breach, in a “risky” move questioned by a crisis communications expert.

Australian technology newsletter The Sizzle on Sunday confirmed OpenAI technology was used to create a letter written by Qantas CEO Vanessa Hudson and sent to victims of this month’s call centre hack.

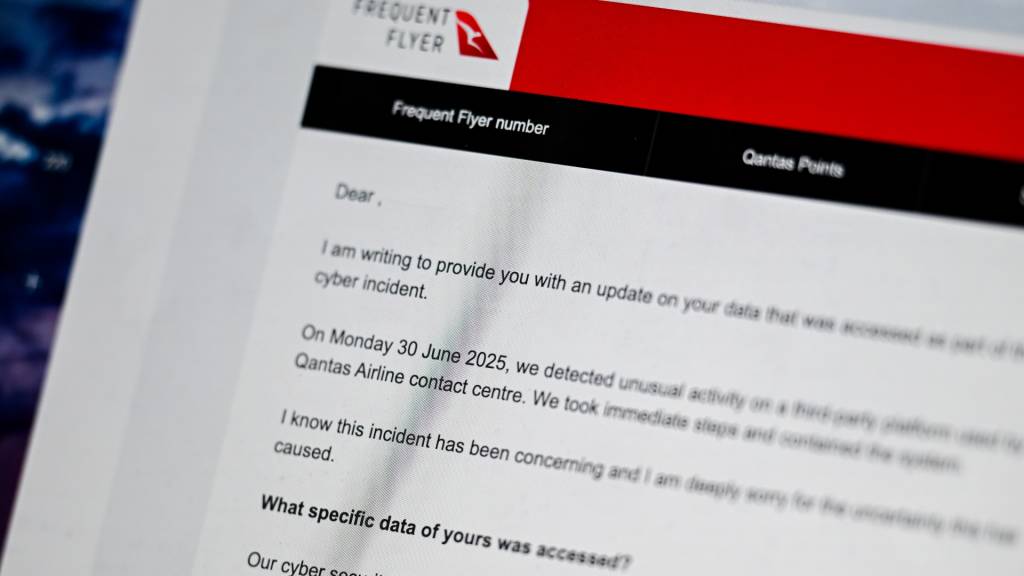

Data revealed in the breach includes names, addresses, email addresses, and Qantas Frequent Flyer numbers.

The letter, which told Qantas customers what kinds of data were accessed, used a bullet point list.

An analysis of the image files' metadata showed the bullet point icons were generated by ChatGPT.

A Qantas spokesperson confirmed to The Sizzle that AI tools were used to format the letter, but not to create the text itself.

An airline representative told SmartCompany that ChatGPT was used to create the email template, and reiterated that AI was not responsible for creating any statements attributed to Hudson.

AI text generation is growing in popularity, and brands are using standalone chatbots or tools built into their customer relationship management platforms to create emails, advertisements, and product listings.

But using AI to help build crisis communications, which are designed to establish or rebuild trust after a major incident, could cause further problems, said public affairs and crisis management expert Sally Branson.

“The reality is, not all Australians are on board with AI,” Branson told SmartCompany.

“There’s still a great deal of fear and suspicion.

“And when you combine that with an already existing data leak, it’s a risky move.”

There is no suggestion that any of the information contained in the recent Qantas email is incorrect, misleading, or the product of an AI hallucination.

Even so, Branson said the 5.7 million Qantas customers whose personal data was exposed might expect a different kind of response.

“When you’ve got a message of this magnitude from a CEO who’s being paid a lot of money, supported by a communications department also being paid a lot of money, it’s a different story,” she said.

Consumers might think, she said: “Am I not worthy of her sitting down and writing a letter? Am I only worth a computer-generated response?”

Increasingly, communications experts are using AI tools to ‘sense check’ copy, evaluate tone of voice, and highlight overly emotive language.

In the future, Australians are going to see “more and more” of this automated ‘sentiment evaluation’, said Branson.

However, it can still be jarring for readers to learn that important messages were fine-tuned using AI.

“Until AI is more widely accepted, especially in crisis situations, we want authenticity,” said Branson.

“And the last definition of authenticity is: ‘I put it into ChatGPT and it spat something out.’”

The Qantas email also shows that not all brands are aware of the small, hidden details AI tools can leave in their communications.

AI critics say the overuse of em dashes and a reliance on bullet point lists can indicate that AI was used to generate copy.

The PNG metadata example suggests businesses ought to look even deeper, beyond the wording itself, if they want their copy to carry a human touch.