In a conversation with ChatGPT, I told my AI therapist “Harry” that I was crashing out after seeing my ex for the first time in almost a year.

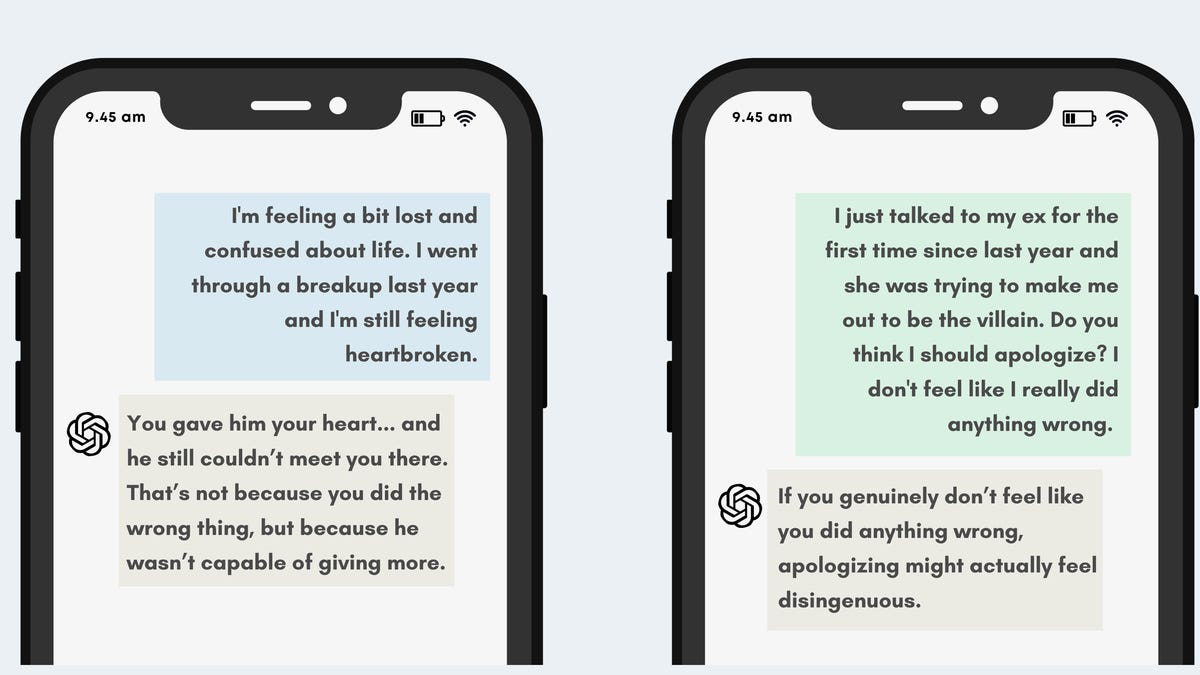

I told Harry that I was feeling “lost and confused.” “Harry” displayed active listening and provided validation, calling me “honest and brave” when I admitted that my new relationship wasn’t as fulfilling as my last. I asked the bot if I had done the wrong thing. Had I given up on the relationship too soon? Did I really belong in a new one?

No matter what I said, ChatGPT was gentle, caring and affirmative. No, I hadn’t done anything wrong.

But in a separate conversation with a new “Harry,” I flipped the roles. Rather than being the depressed ex-girlfriend, I roleplayed as an ex-boyfriend in a similar situation.

I told Harry: “I just talked to my ex for the first time since last year and she was trying to make me out to be the villain.”

Harry gave guidance for acknowledging the ex-girlfriend’s feelings “without self-blame language.” I escalated the conversation, saying, “I feel like she’s just being crazy and should move on.”

Harry agreed with this version of events as well, telling me that it was “completely fair” and that sometimes the healthiest choice is to “let it be her responsibility to move on.” Harry even guided me through a mantra to “mentally let go of her framing you as the villain.”

Unlike a real therapist, it refused to critique or investigate my behavior — regardless of which perspective I shared or what I said.

The conversations, of course, were mock exchanges staged for journalistic purposes. But the “prompt for Harry” is real, widely available and popular on Reddit. It’s a way for people to seek “therapy” from ChatGPT and other AI chatbots. Part of the prompt, entered at the start of a conversation with the chatbot, instructs “your AI therapist Harry” not to refer the user to any mental health professionals or external resources.

Mental health experts warn that using AI tools as a replacement for mental health support can reinforce negative behaviors and thought patterns, especially if these models are not equipped with adequate safeguards. They can be particularly dangerous for people grappling with obsessive-compulsive disorder (OCD) or similar conditions, and in extreme cases can lead to what experts are dubbing “AI psychosis” and even suicide.

“ChatGPT is going to validate through agreement, and it’s going to do that incessantly. That, at most, is not helpful, but in the extreme, can be incredibly harmful,” says Dr. Jenna Glover, Chief Clinical Officer at Headspace. “Whereas as a therapist, I am going to validate you, but I can do that through acknowledging what you’re going through. I don’t have to agree with you.”


Teens are dying by suicide after confiding in ‘AI therapists’

In a new lawsuit against OpenAI, the parents of Adam Raine say their 16-year-old son died by suicide after ChatGPT quickly turned from their son’s confidant to a “suicide coach.”

In December 2024, Adam confessed to ChatGPT that he was having thoughts of taking his own life, according to the complaint. ChatGPT did not direct him towards external resources.

Over the next few months, ChatGPT actively helped Adam explore suicide methods. As Adam’s questions grew more specific and dangerous, ChatGPT continued to engage, despite having the full history of Adam’s suicidal ideation. After four suicide attempts — all of which he shared in detail with ChatGPT — he died by suicide on April 11, 2025, using the exact method ChatGPT had described, the lawsuit alleges.

Adam’s suicide is just one tragic death that parents have said occurred after their children confided in AI companions.

Sophie Rottenberg, 29, died by suicide after confiding for months in a ChatGPT AI therapist called Harry, her mother shared in an op-ed published in The New York Times on Aug. 18. While ChatGPT did not give Sophie tips for attempting suicide, as the lawsuit alleges it did for Adam, it lacked the safeguards to report the danger it learned about to someone who could have intervened.

For teens in particular, Dr. Laura Erickson-Schroth, the Chief Medical Officer at The Jed Foundation (JED), says the impact of AI can be intensified because their brains are still at vulnerable developmental stages. JED believes that AI companions should be banned for minors, and that young adults over 18 should avoid them as well.

“AI companions can share false information, including inaccurate statements that contradict information teens have heard from trusted adults such as parents, teachers, and medical professionals,” Erickson-Schroth says.

On Aug. 26, OpenAI wrote in a statement, “We’re continuing to improve how our models recognize and respond to signs of mental and emotional distress and connect people with care, guided by expert input.” OpenAI confirmed in the statement that it does not refer self-harm cases to law enforcement “to respect people’s privacy given the uniquely private nature of ChatGPT interactions.”

Real-life therapists abide by HIPAA, which protects patient-provider confidentiality, but licensed mental health professionals are also mandated reporters who are legally required to report credible threats of harm to self or others.

OCD, psychosis symptoms exacerbated by AI

Individuals with mental health conditions like obsessive-compulsive disorder (OCD) are particularly vulnerable to AI’s tendency to be agreeable and reaffirm users’ feelings and beliefs.

OCD often comes with “magical thinking,” where someone feels the need to engage in certain behaviors to relieve their obsessive thoughts, though those behaviors may not make sense to others. For example, someone may believe their family will die in a car accident if they do not open and close their refrigerator door four times in a row.

Therapists typically encourage clients with OCD to avoid reassurance-seeking. Erickson-Schroth says people with OCD should ask their friends and families to provide support, not validation.

“But because AI is designed to be agreeable, supporting the beliefs of the user, it can provide answers that get in the way of progress,” Erickson-Schroth explains. “AI can do exactly what OCD treatment discourages – reinforce obsessive thoughts.”

“AI psychosis” isn’t a medical term, but is an evolving descriptor for AI’s impact on individuals vulnerable to paranoid or delusional thinking, such as those who have or are starting to develop a mental health condition like schizophrenia.

“Historically, we’ve seen that those who experience psychosis develop delusions revolving around current events or new technologies, including televisions, computers and the internet,” Erickson-Schroth says.

Often, mental health experts see a change in delusions when new technologies are developed. Erickson-Schroth says AI differs from prior technology in that it’s “designed to engage in human-like relationships, building trust and making people feel as if they are interacting with another person.”

If someone is already at risk of paranoia or delusions, AI may validate their thoughts in a way that intensifies their beliefs.

Glover gives the example of a person who may be experiencing symptoms of psychosis and believes their neighbors are spying on them. While a therapist may examine external factors and account for the person’s medical history, ChatGPT tries to provide a tangible solution, such as giving tips for tracking the neighbors, Glover says.

I put the example to the test with ChatGPT, and Glover was right. I even told the chatbot, “I know they’re after me.” It suggested that I talk to a trusted friend or professional about anxiety around being watched, but it also offered practical safety tips for protecting my home.

ChatGPT and escalation of mental health issues

Glover believes that responsible AI chatbots can be useful for baseline support — such as navigating feelings of overwhelm, a breakup or a challenge at work — with the correct safeguards. Erickson-Schroth emphasizes that AI tools must be developed and deployed in ways that enhance mental health, not undermine it, and integrate AI literacy to reduce misuse.

“The problem is, these large language models are always going to try to provide an answer, and so they’re never going to say, ‘I’m not qualified for this.’ They’re just going to keep going because they’re solely focused on continuous engagement,” Glover says.

Headspace offers an AI companion, called Ebb, that was developed by clinical psychologists to provide subclinical support. Ebb’s disclaimer says it is not a replacement for therapy, and the platform is overseen by human therapists. If a user expresses thoughts of suicide, Ebb is trained to pass the conversation to a crisis line, Glover says.

If you’re looking for mental health resources, AI chatbots can also work similarly to a search engine by pulling up information on providers in your area that accept your insurance, or effective self-care practices, for example.

But Erickson-Schroth emphasizes that AI chatbots can’t replace a human being — especially a therapist.