Australian children will be prevented from having sexual, violent or harmful conversations with AI companions in a world-first move expected on Tuesday.

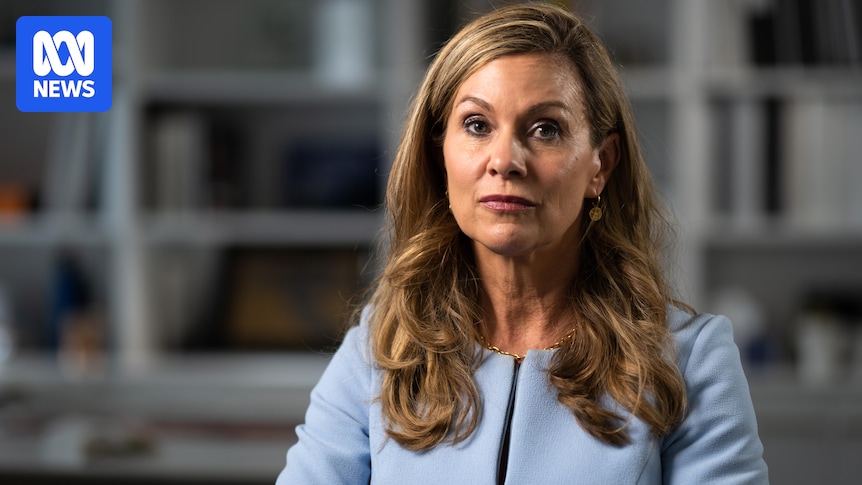

eSafety Commissioner Julie Inman Grant is considering registering six new codes under the Online Safety Act this week, designed to limit the growing number of children accessing harmful content online.

“If I do register these codes tomorrow, this will be the first comprehensive law in the world that will require the companies, before they deploy, to embed the safeguards and use the age assurance,” she told 7.30.

“We don’t need to see a body count to know that this is the right thing for the companies to do.”

Ms Inman Grant says Australian schools have been reporting that 10- and 11-year-old children are spending up to six hours per day on AI companions, “most of them sexualised chatbots”.

“I don’t want to see Australian lives ruined or lost as a result of the industry’s insatiable need to move fast and break things,” Ms Inman Grant said.

The six new codes would apply to AI chatbot apps, social media platforms, app stores and technology manufacturers, which would be required to verify the ages of users who try to access harmful content.

The codes have been drafted by industry, including organisations representing the largest tech companies in the world — Meta, Google, Yahoo.

Ms Inman Grant says the companies behind AI chatbots “know exactly what they’re doing”.

“They’re deliberately addictive by design,” she said.

“Mark Zuckerberg said this was a great antidote to loneliness.”

Last week, ChatGPT owner OpenAI rolled out new safeguards under which parents could be sent acute distress warnings if their children turn to chatbots for suicidal content.

In August, an American family launched legal action against OpenAI after a teenage boy killed himself following “months of encouragement from ChatGPT”, the family’s lawyer said.

Parents will soon have more oversight over what their kids are doing on ChatGPT under OpenAI’s new safeguards. (Pexels: Sanket Mishra)

Ms Inman Grant said the OpenAI example made it clear that companies could change.

“It’s totally in their power and their ability to do so, but what they’ve chosen to do is get these chatbots out to market as quickly as possible to achieve as much market share as possible,” she said.

“This has always been the modus operandi of the industry — we’ll fix the harm later.”

Can age assurance work?

The office of the eSafety Commissioner recently completed a trial of “age assurance” technologies that could be used to keep children off social media.

The onus will be on the platforms to be able to identify and deactivate the accounts of under-16s by December 10, the commissioner said.

“They’ll use a number of different tools … they can do things like use natural language processing that the language and the emojis kids are using,” she said.

Ms Inman Grant said children will try to use AI, deepfakes, VPNs and other tools to get around the new age limits.

The social media ban for under-16s is due to take effect on December 10. (ABC News: Luke Stephenson)

“There will be a range of different circumvention measures teenagers will use,” she said.

“We’ve outlined what we think those circumvention measures will be and what we expect the companies to do to prevent that circumvention.”

The commissioner, who has teenage children of her own, says she is not blind to the challenges faced by parents.

“I think it’s really important for me to be living the challenges that every parent is grappling with right now,” she said.

“I’m there with you, and I’ve seen my own children misuse technology.”

Going after a UK deepfake porn company used by kids

On Monday the commissioner launched a broadside against a UK tech company over its “nudify” sites, alleging Australian school children are using them to create deepfake pornography of classmates.

The company runs two of the biggest AI-generated nude image websites, which have 100,000 Australian users per month.

‘Nudify’ websites are allegedly being used by school students. (Supplied: Adobe Stock)

Ms Inman Grant said the company is a “pernicious and resilient bad actor”.

“Their business model is predicated on ‘nudifying’, humiliating and denigrating mostly girls and women,” she said.

The commission, which has chosen not to name the company to avoid driving more users to it, is prepared to take further action, including a $49.5 million fine if the company does not comply with the Australian Online Safety Act.

On the commission’s many battles with tech companies, Ms Inman Grant said there was a long way to go.

“Having worked in the technology sector for 22 years, I know what they’re capable of,” she said.

“Not a single one of them is doing everything they can to stop the most heinous of abuse to children being tortured and raped.”
