As AI improves and becomes increasingly accessible, it has become easier for people to quickly make AI slop and upload it to social media and streaming platforms.

Some video channels that publish such AI content have even posted guides and tutorials to help others create their own AI slop.

These guides often recommend tools such as OpenAI’s ChatGPT and Microsoft’s Copilot to generate scripts and story ideas.

Visuals are then created using image- or video-generation AI platforms, and creators assemble the results into short videos using AI editors or basic cut-and-paste editing software.

“There is an increasing number of individuals joining the content farm, as its barrier to entry is incredibly low. They do not need any editing and video production skills. Just a prompt would do the job,” said Assoc Prof Lee.

AI slop has also started creeping into music streaming platforms such as Spotify. The Guardian reported in July this year that a “band” known as The Velvet Sundown put out two albums and garnered over 1 million streams within weeks, but it was later found that everything about it, from the songs to the band’s images and backstory, had been AI-generated.

WHEN SLOP IS INSIDIOUS

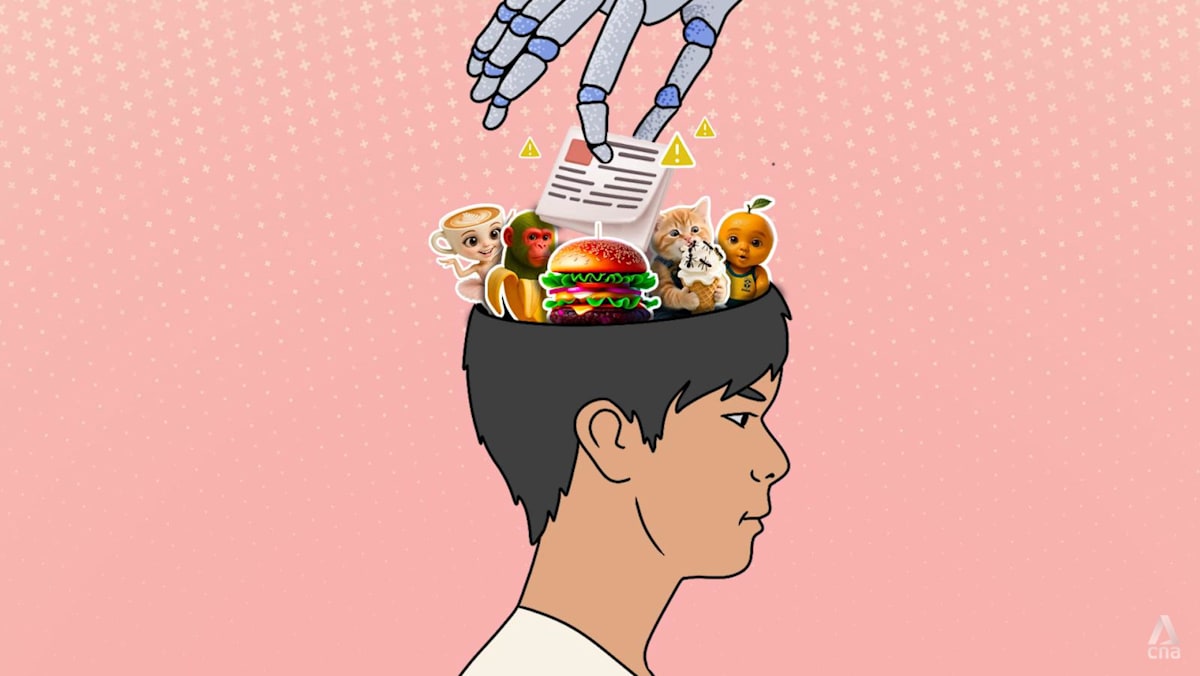

It’s easy enough to identify AI slop when you are looking at a cartoon figure that is a mash-up of a coffee mug and a ballerina. But sometimes, AI slop can be much harder to spot.

Mr Conrad Tallariti, managing director for Asia Pacific at DoubleVerify, said that generative AI (Gen AI) models can easily generate hundreds of pieces of written content within minutes, from fake news to made-up recipes.

“What sets AI slop apart from legitimate generative AI output is the lack of human oversight, poor content authenticity and arbitrage tactics showing little regard for user trust or quality,” he said.

Through sponsored posts, affiliate links and extensive ad placement, AI slop websites can easily be monetised as long as there are enough clicks and engagement.

“Taking the example of recipe sites. Hundreds of AI-written articles and incredibly life-like food photos may be produced and posted, even for recipes that have never been prepared,” he said.

“To gain authenticity and traffic, AI slop sites will often present themselves as personal cooking blogs written by a person, (but it) is in fact an avatar invented by Gen AI, using a made-up bio and images.”

DoubleVerify, a software platform that verifies media quality and ad performance, also found over 200 AI-generated, ad-supported websites that were designed to mimic well-known mainstream publishers such as sports website ESPN and news channel CBS, but that hosted false, clickbait AI-generated content.

“Due to the lack of human oversight and deceit on these websites, advertisers may unintentionally position their ads on counterfeit content farms,” warned Mr Tallariti.

Even more alarming is that AI slop has worsened misinformation during times of actual crises around the world, which has had real-life ramifications for the people involved.

For example, during the floods in North Carolina in the United States last year, AI-generated images showing supposed victims of the floods spread widely online as content farms seized on the crisis as an opportunity to garner engagement.

This made the work of first responders much more difficult, as they were relying on social media to locate actual victims, while also making it harder for people on the ground to sift through the slop and find reliable information.