For decades, servers have been the fundamental building block of data centers. Deploying a workload requires enough servers to support that workload. Infrastructure monitoring, power management, and so on also largely occur at the server level.

In the AI era, server-scale approaches to data centers are becoming increasingly inadequate. A better strategy, arguably, is rack-scale computing – an idea that is not new, but whose moment may finally have arrived.

What Is Rack-Scale Computing?

Rack-scale computing is the practice of provisioning hardware inside data centers using server racks rather than individual servers as the main unit of IT infrastructure.

In other words, when you embrace rack-scale computing, your main goal is to ensure you have enough racks – and the optimum combination of compute, memory, storage, and networking hardware within each rack – to support a given workload.

This is different from traditional infrastructure strategies, which center on individual servers. Most data center administrators are accustomed to thinking in terms of how many servers are assigned to workloads, not how many racks are provisioned for them. That is why it’s common to size Kubernetes environments by node count, for example, or to use total servers as a proxy for total data center capacity.

Under a rack-scale approach, a data center’s total number of servers ceases to be the focus. Instead, the key factor for driving infrastructure success becomes the total number of racks and the configuration of each rack.
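As a rough illustration of sizing in racks rather than servers, the sketch below estimates how many racks a workload needs from whichever resource runs out first. The `RackConfig` and `Workload` types, the `racks_needed` helper, and every figure in it are hypothetical and chosen only for the example; they do not describe any real product or deployment.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RackConfig:
    """Resources one fully populated rack provides (hypothetical figures)."""
    gpus: int
    memory_tb: float
    storage_tb: float


@dataclass(frozen=True)
class Workload:
    """Resources a workload requires (hypothetical figures)."""
    gpus: int
    memory_tb: float
    storage_tb: float


def racks_needed(workload: Workload, rack: RackConfig) -> int:
    """Return the rack count dictated by the most constrained resource."""
    return max(
        math.ceil(workload.gpus / rack.gpus),
        math.ceil(workload.memory_tb / rack.memory_tb),
        math.ceil(workload.storage_tb / rack.storage_tb),
    )


# Assumed rack design and workload, for illustration only.
rack = RackConfig(gpus=64, memory_tb=16.0, storage_tb=200.0)
training_job = Workload(gpus=200, memory_tb=40.0, storage_tb=900.0)

print(racks_needed(training_job, rack))  # prints 5
```

In this made-up case, GPUs alone would call for four racks, but storage pushes the total to five. That is the sense in which the configuration of each rack, and not only the rack count, determines capacity.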

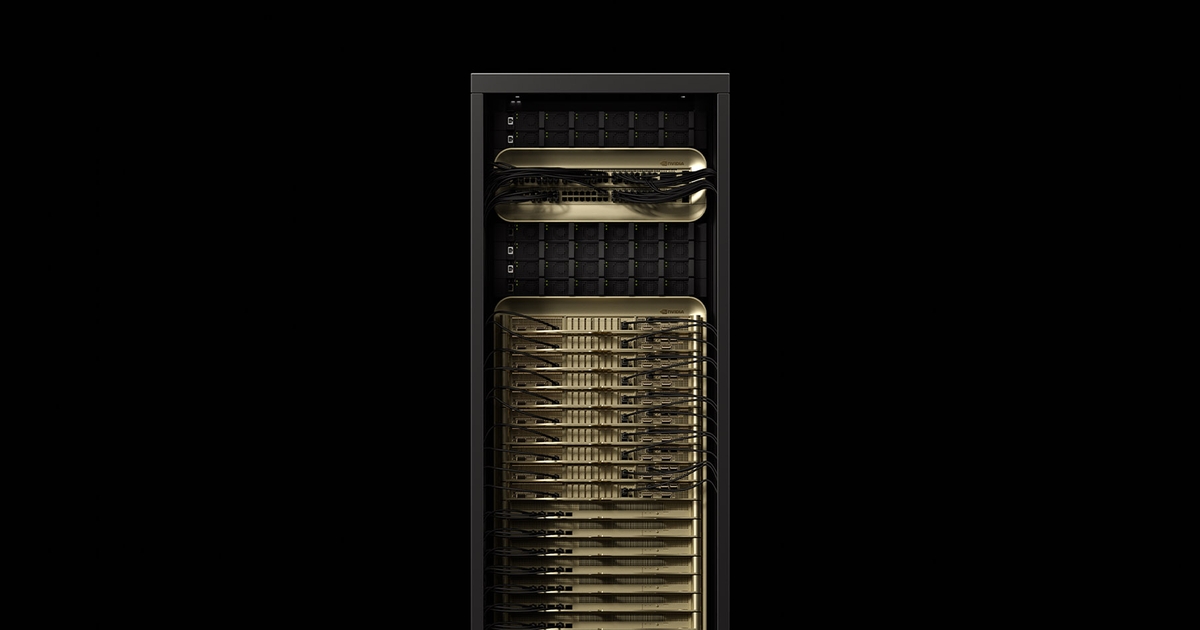

Rack-scale computing finds its moment in the AI era, where 1 MW racks deliver the integrated performance traditional server-centric approaches cannot match.

The Benefits of Going Rack-Scale

At first glance, rack-scale computing might seem like an unconventional approach to data center infrastructure management. After all, the total number of servers that can fit inside a single rack can vary widely depending on rack size. If the computing capacity of a rack is so variable, why would you treat racks as the fundamental building block of data centers?

Part of the answer is that servers are not a consistent measure of infrastructure capacity either, because computing power varies widely from one server to the next.

The more compelling reason for embracing rack-scale computing is that it enables a more flexible approach to data center infrastructure management. Specifically, rack-centric infrastructure allows businesses to:

Meet the needs of large-scale workloads. Modern workloads often require multiple servers – and hence benefit from having an entire rack assigned to them.

Build more resilient infrastructures. Individual servers are prone to failure, but it’s rare for an entire rack to go down. When a workload is provisioned across a rack rather than tied to a single server, the loss of one server is less likely to take the workload offline.

Optimize infrastructure configurations. Focusing on rack design and components makes it easier to outfit each rack with hardware optimized for a given workload. For example, if a workload generates an especially high amount of network traffic, it can be supported with a rack that includes a high-end switch – or even multiple switches.

A Solution Whose Time Has Finally Come

Interestingly, the concept of rack-scale computing has been around for more than a decade. Microsoft was promoting it in 2013, and vendors like Intel were seizing upon it years ago as part of composable infrastructure strategies.

At the time, however, rack-scale computing never fully caught on. The data center industry did not shift to a model in which racks became the fundamental building blocks of infrastructure.

But the need to support modern AI has catalyzed renewed interest in rack-scale infrastructure strategies. For example, speaking at Data Center World 2025, analyst Jeremie Eliahou Ontiveros pointed to rack-scale architectures as part of the solution to provisioning AI workloads with sufficient infrastructure.

This approach is particularly well-suited to AI workloads. Not only do AI workloads require massive amounts of compute, memory, and (in many cases) storage resources, they also perform considerably better when the infrastructure they run on is optimized at the hardware level. Rack-scale computing can help to meet both goals.

For example, 1 MW racks – which can accommodate much higher server capacities than traditional racks – can help ensure that AI workloads have the resources they need to operate. At the same time, rack architectures that optimize the movement of data between individual servers within a rack, while also helping to balance heat dissipation, help to avoid processing bottlenecks and optimize workload performance.

Provisioning servers individually cannot achieve comparable optimization, because servers that are selected and deployed independently are harder to integrate into a tightly coupled system.
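To put the 1 MW figure in perspective, the following back-of-the-envelope sketch compares how many servers fit within a rack's power budget. The 10 kW per-server draw, the 15 kW traditional rack budget, and the 10 percent reserved for in-rack overhead such as switches are assumptions made for illustration, not figures from any vendor or from the sources cited here. The calculation also ignores physical space and cooling limits.

```python
def servers_per_rack(rack_power_w: int, server_power_w: int, overhead_pct: int = 10) -> int:
    """Estimate how many servers a rack's power budget can support.

    overhead_pct is the assumed share of rack power reserved for
    non-server equipment such as switches (hypothetical default).
    """
    usable_w = rack_power_w * (100 - overhead_pct) // 100
    return usable_w // server_power_w


AI_SERVER_W = 10_000           # assumed draw of one GPU server
TRADITIONAL_RACK_W = 15_000    # assumed budget of a conventional rack
MEGAWATT_RACK_W = 1_000_000    # the 1 MW rack discussed above

print(servers_per_rack(TRADITIONAL_RACK_W, AI_SERVER_W))  # prints 1
print(servers_per_rack(MEGAWATT_RACK_W, AI_SERVER_W))     # prints 90
```

Under these assumptions, a conventional rack powers a single such server, while a 1 MW rack powers 90. The gap, and not the exact numbers, is the point.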

The Future of Rack-Scale Computing

To be sure, rack-scale computing has drawbacks. Chief among these is that treating racks as the primary unit may limit scalability, because a workload that needs more servers than a single rack can hold becomes harder to provision. However, this concern diminishes if data centers shift toward higher-capacity racks – such as, again, those capable of accommodating up to 1 MW worth of hardware.

Thus, as racks modernize, expect data center architectures to modernize with them as businesses pivot toward rack-scale approaches. Individual servers will remain important because not every workload requires its own dedicated rack. But the most critical – and expensive – workloads are likely to operate at rack scale.