Quantum machine learning promises revolutionary advances, but realising this potential demands a detailed understanding of how quantum circuits translate to real-world hardware, particularly in the current era of noisy intermediate-scale quantum computers. Rupayan Bhattacharjee, Pau Escofet, and Santiago Rodrigo, all from Universitat Politècnica de Catalunya, alongside their colleagues, investigate the scaling of quantum circuit resources after compilation, a crucial step in preparing quantum models for execution on limited hardware. Their work examines both Quantum Kernel Methods and Quantum Neural Networks across various processor architectures, revealing how the strategy used to connect qubits dramatically impacts circuit complexity, measured by factors like gate count and circuit depth. The team demonstrates that certain qubit connection strategies and processor topologies scale more favourably than others, and importantly, establishes a clear link between improvements in hardware capabilities and the maximum number of qubits that can reliably perform calculations, offering vital guidance for designing quantum machine learning models that are truly compatible with existing and near-future quantum computers.

NISQ QML Models, Connectivity and Resource Scaling

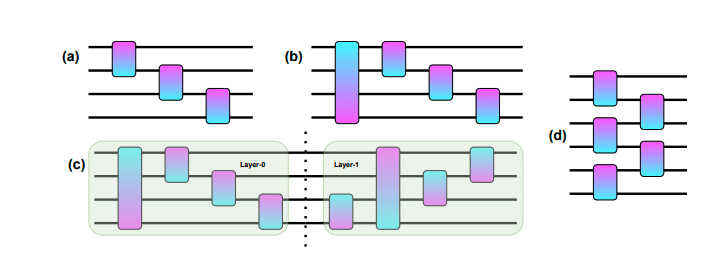

This research examines the scalability of Quantum Machine Learning (QML) models on Noisy Intermediate-Scale Quantum (NISQ) devices, focusing on the impact of qubit connectivity and noise. It moves beyond theoretical expressibility to examine resource requirements and fidelity after circuit compilation. The researchers analysed resource scaling by measuring how the number of SWAP gates and other resources grows with the number of qubits, and analysed fidelity by assessing how accuracy degrades with noise and how it responds to hardware improvements. The study evaluated several QML models, including variational quantum circuits, kernel methods, and tensor networks, alongside different entanglement strategies, and compared performance across several qubit connectivity topologies, including linear, ring, and star.

The research demonstrates that the ring topology consistently shows the lowest overhead in SWAP gates required after compilation, making it a promising architecture for NISQ implementations. Tree Tensor Network (TTN) circuits lose their theoretical logarithmic depth advantage after compilation, which challenges their assumed suitability for NISQ hardware. Kernel Pairwise circuits generally maintain higher fidelity than other models, and their threshold qubit count scales linearly with improvements in gate fidelity. Even with significant hardware improvements, most models cannot be run reliably on more than 100 qubits, highlighting the need for fault tolerance. The non-machine-learning GHZ state preparation circuit outperforms all QML circuits in the star topology.
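One way to see why a tree-structured circuit can lose its logarithmic depth on restricted hardware is a toy routing model. The sketch below is not the paper's compilation flow: the pairing convention in `ttn_layers` and the cost model in `naive_routed_depth_on_line` are illustrative assumptions, and the model ignores the fact that SWAPs move qubits around for later layers.

```python
def ttn_layers(n):
    """Qubit pairs coupled in each layer of a binary-tree circuit.

    Assumed convention (hypothetical, for illustration): neighbouring
    surviving qubits are paired and the right-hand qubit of each pair
    survives to the next layer. Requires n to be a power of two.
    """
    survivors = list(range(n))
    layers = []
    while len(survivors) > 1:
        pairs = [(survivors[i], survivors[i + 1])
                 for i in range(0, len(survivors), 2)]
        layers.append(pairs)
        survivors = [right for _, right in pairs]
    return layers


def naive_routed_depth_on_line(n):
    """Toy depth estimate on a linear chain of n qubits.

    Each layer is charged its longest SWAP chain (distance - 1 sequential
    SWAPs to make the pair adjacent) plus one step for the gate itself.
    """
    depth = 0
    for pairs in ttn_layers(n):
        worst_distance = max(abs(a - b) for a, b in pairs)
        depth += (worst_distance - 1) + 1
    return depth


if __name__ == "__main__":
    for n in (8, 16, 32, 64):
        logical_depth = len(ttn_layers(n))
        print(n, logical_depth, naive_routed_depth_on_line(n))
```

Under these assumptions the logical depth grows as log2(n), while the routed estimate grows roughly linearly in n, because the top layers of the tree couple qubits that sit far apart on the chain. A real compiler can route more cleverly, so this is only a qualitative picture.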

Topology and Entangling Drive Quantum Scaling

This work delivers crucial insights into scaling quantum circuits for machine learning, focusing on the impact of processor topology and circuit design on resource overhead and fidelity. The team measured SWAP gate overhead, circuit depth, and two-qubit gate count, establishing a detailed understanding of resource scaling for various quantum machine learning models. Experiments demonstrate that entangling strategy profoundly impacts resource scaling, with circular and shifted circular alternating strategies exhibiting the steepest scaling behavior.
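The interplay between entangling strategy and topology can be made concrete with a rough, compiler-free estimate. The sketch below builds linear, ring, and star coupling graphs, lists the two-qubit gates of a "linear" and a "circular" entangling layer (assumed here to mean nearest-neighbour pairs, with circular adding one gate between the last and first qubit), and counts `distance - 1` SWAPs per gate. All function names are hypothetical, and the count ignores how earlier SWAPs change the qubit layout, so it is not the SWAP overhead reported in the paper.

```python
from collections import deque


def line(n):
    """Adjacency of a linear chain 0 - 1 - ... - (n-1)."""
    return {q: [p for p in (q - 1, q + 1) if 0 <= p < n] for q in range(n)}


def ring(n):
    """Adjacency of a ring: the chain with an extra edge (n-1) - 0."""
    return {q: sorted({(q - 1) % n, (q + 1) % n}) for q in range(n)}


def star(n):
    """Adjacency of a star with qubit 0 as the hub."""
    adj = {0: list(range(1, n))}
    adj.update({q: [0] for q in range(1, n)})
    return adj


def distances_from(adj, source):
    """Breadth-first-search distances from source to every reachable qubit."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def linear_pairs(n):
    """Nearest-neighbour entangling layer (assumed convention)."""
    return [(i, i + 1) for i in range(n - 1)]


def circular_pairs(n):
    """Linear layer plus one wrap-around gate (assumed convention)."""
    return linear_pairs(n) + [(n - 1, 0)]


def naive_swap_estimate(adj, pairs):
    """Sum of (distance - 1) over all gates, with a fixed trivial layout."""
    return sum(distances_from(adj, a)[b] - 1 for a, b in pairs)


if __name__ == "__main__":
    n = 6
    for name, adj in (("line", line(n)), ("ring", ring(n)), ("star", star(n))):
        print(name, naive_swap_estimate(adj, circular_pairs(n)))
```

In this toy model a circular layer needs no SWAPs on a ring, while on a chain the single wrap-around gate alone costs a number of SWAPs that grows with the qubit count, which gives some intuition for why topology and entangling strategy have to be considered together.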

Notably, the ring topology consistently demonstrated the slowest resource scaling for most quantum machine learning models, while Tree Tensor Network (TTN) circuits lost their logarithmic depth advantage following circuit compilation. Detailed analysis of SWAP overhead revealed that Kernel Circular and shifted circular alternating (SCA) circuits are the most expensive to implement, except on ring topologies, where TTN circuits exhibit the steepest scaling. The team quantified these effects, showing that TTN circuits exhibit a more aggressive two-qubit gate count scaling on linear and ring topologies. Fidelity estimation revealed that all circuits except GHZ and Kernel Pairwise suffered significant fidelity loss, dropping below 0.6 at a scale of 100 qubits.

The team observed a crossover point between 60 and 70 qubits in linear and ring topologies for Kernel Pairwise and GHZ circuits, after which Kernel Pairwise maintained higher fidelity. Further investigation showed that improvements in gate error rates and coherence times rapidly increase circuit fidelity before saturating. For a fixed qubit count of 256, the team demonstrated that Kernel Pairwise circuits saturate much faster than GHZ and other quantum machine learning circuits in linear and ring topologies. These results establish quantitative relationships between hardware improvements and maximum reliable qubit counts, providing crucial guidance for hardware-aware quantum machine learning model design.
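The link between hardware improvements and the largest reliable qubit count can be illustrated with a deliberately simplified model. The sketch below assumes that circuit fidelity is the product of independent two-qubit gate fidelities and that the compiled two-qubit gate count grows linearly with qubit number; it ignores single-qubit errors, decoherence, and readout, so it is not the paper's fidelity estimator. The function names, the value `gates_per_qubit=3`, and the error rates are illustrative assumptions; the 0.6 target echoes the fidelity level mentioned above.

```python
def estimated_fidelity(n_qubits, gates_per_qubit, two_qubit_error):
    """Product-of-gate-fidelities estimate (assumed model).

    The compiled circuit is assumed to contain gates_per_qubit * n_qubits
    two-qubit gates, each failing independently with two_qubit_error.
    """
    n_gates = gates_per_qubit * n_qubits
    return (1.0 - two_qubit_error) ** n_gates


def threshold_qubits(gates_per_qubit, two_qubit_error,
                     target=0.6, max_qubits=100_000):
    """Largest qubit count whose estimated fidelity stays at or above target."""
    best = 0
    for n in range(1, max_qubits + 1):
        if estimated_fidelity(n, gates_per_qubit, two_qubit_error) >= target:
            best = n
        else:
            break  # fidelity decreases monotonically with n
    return best


if __name__ == "__main__":
    # Illustrative error rates only; not taken from the paper.
    for error in (1e-2, 1e-3, 1e-4):
        print(error, threshold_qubits(gates_per_qubit=3, two_qubit_error=error))
```

In this model a tenfold reduction in gate error raises the threshold qubit count by roughly a factor of ten, which is one simple way to picture a linear relationship between gate-fidelity improvements and reliable circuit size. Circuits whose gate count grows faster than linearly after compilation would see a correspondingly smaller gain.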

Ring Topologies Optimise Quantum Machine Learning Scaling

This research delivers a comprehensive analysis of resource scaling for quantum machine learning models implemented on processors with limited connectivity. The team demonstrates that, following compilation for realistic hardware, resource requirements generally scale linearly with the number of qubits used. However, significant variations emerge depending on processor topology and the chosen entanglement strategy. Notably, ring topologies consistently exhibit the most favorable scaling characteristics, requiring fewer SWAP gates than other configurations. Through fidelity analysis, the researchers establish quantitative relationships between hardware improvements, specifically reductions in gate error and gate time, and the maximum number of qubits that can be reliably used. This analysis indicates that even with substantial technological advancements, implementing over 100 qubits remains challenging for most models. The authors acknowledge that lowering the target fidelity would improve scalability, but emphasize the importance of high-fidelity computations. Future work should focus on full-stack co-design approaches, integrating hardware constraints directly into the development of quantum machine learning models, rather than relying solely on algorithmic optimization.

👉 More information

🗞 Characterizing Scaling Trends of Post-Compilation Circuit Resources for NISQ-era QML Models

🧠 ArXiv: https://arxiv.org/abs/2509.11980