The challenges facing advanced AI computing

The rise of generative AI is transforming everyday life and industries alike. But alongside its adoption, unavoidable challenges have become increasingly conspicuous. AI’s heavy dependence on cloud computing is creating network congestion and latency, while power consumption in data centers continues to skyrocket. For applications requiring real-time responsiveness, including robotics and human interfaces, a cloud-only approach is beginning to reveal its limitations. To unlock AI’s full potential across society, addressing energy consumption and latency issues by balancing the use of cloud and edge computing is an essential strategy.

Fast and energy-efficient computing

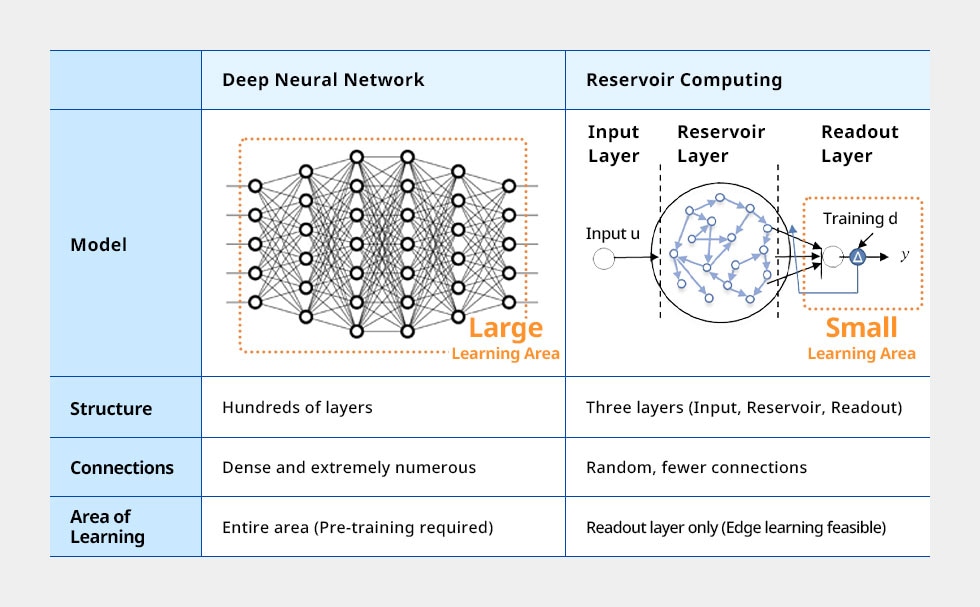

Reservoir computing presents a compelling possibility for addressing these issues. Conventional deep learning consists of an input layer, multiple intermediate layers that process the data in stages, and a final output layer. Adding layers enables more complex computation but demands far more processing, and therefore more power, and inevitably adds latency.

Reservoir computing, in contrast, consists of just three layers: input, reservoir, and readout. What’s distinctive is the reservoir layer. Instead of carrying out complicated calculations, it harnesses natural phenomena that unfold over time, like ripples on a water surface or fluctuations in electrical signals. An input fed into such a phenomenon propagates and interferes with earlier inputs, and the system uses the resulting patterns as features, which dramatically reduces the number of parameters that need training. The result is faster, more energy-efficient computation than conventional methods.

Deep learning and reservoir computing compared

Because reservoir computing devices are simple in structure and learning only takes place in a small area, high-speed computation is possible while saving power.
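
To make the three-layer structure concrete, here is a minimal software sketch of reservoir computing in the style of an echo state network. It is an illustration only, not the TDK and Hokkaido University circuit: the `tanh` nodes, the random weights, the ridge-regression readout, the sine-wave task, and every name in the code are assumptions chosen for the example. The point it shows is that the input and reservoir weights stay fixed, and only the small readout vector is trained.

```python
import math
import random

def make_reservoir(n_nodes, seed=0, row_sum=0.9):
    """Fixed random input and recurrent weights (assumed values); never trained."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(n_nodes)]
    w_res = []
    for _ in range(n_nodes):
        row = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
        total = sum(abs(v) for v in row)
        # Each row's absolute sum is below 1, so old inputs fade instead of building up.
        w_res.append([row_sum * v / total for v in row])
    return w_in, w_res

def run_reservoir(inputs, w_in, w_res):
    """Drive the reservoir with a scalar sequence and collect its states."""
    state = [0.0] * len(w_in)
    states = []
    for u in inputs:
        state = [math.tanh(w_in[i] * u + sum(w * s for w, s in zip(w_res[i], state)))
                 for i in range(len(state))]
        states.append(state + [1.0])  # the constant 1.0 acts as a bias feature
    return states

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def train_readout(states, targets, ridge=1e-6):
    """Ridge regression over the readout weights: the only training step."""
    k = len(states[0])
    a = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(k)] for i in range(k)]
    b = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(k)]
    return solve(a, b)

def predict(states, w_out):
    return [sum(w * s for w, s in zip(w_out, row)) for row in states]

def rmse(pred, true):
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))

# Toy task (assumed): predict the next sample of a sine wave.
signal = [math.sin(0.2 * t) for t in range(601)]
w_in, w_res = make_reservoir(n_nodes=20)
states = run_reservoir(signal[:-1], w_in, w_res)
targets = signal[1:]
washout, split = 50, 400  # skip the start-up transient, then train / test
w_out = train_readout(states[washout:split], targets[washout:split])
test_rmse = rmse(predict(states[split:], w_out), targets[split:])
baseline_rmse = rmse(signal[split:-1], targets[split:])  # "repeat the last value"
print(len(w_out), test_rmse, baseline_rmse)
```

In this sketch the reservoir has 20 × 20 recurrent weights and 20 input weights, none of which are learned; training touches only the 21 readout weights. In a physical reservoir, the `run_reservoir` step would be carried out by the dynamics of the device itself rather than by arithmetic.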

Bringing reservoir computing to analog circuits

TDK and Hokkaido University set out to implement reservoir computing in analog electronic circuits and, in 2024, successfully created the world’s first prototype analog reservoir AI chip. This achievement builds on earlier work in 2020, when the team developed a large-scale reservoir computing device entirely composed of basic electronic components like capacitors and transistors. By physically reproducing large numbers of processing nodes, the circuit itself functioned as the computing resource—and its performance characteristics were confirmed to be the highest in the world. The new prototype integrates this approach into a compact chip, reducing power consumption to a level suitable for practical applications.

Analog reservoir AI chip (prototype) jointly developed by TDK and Hokkaido University

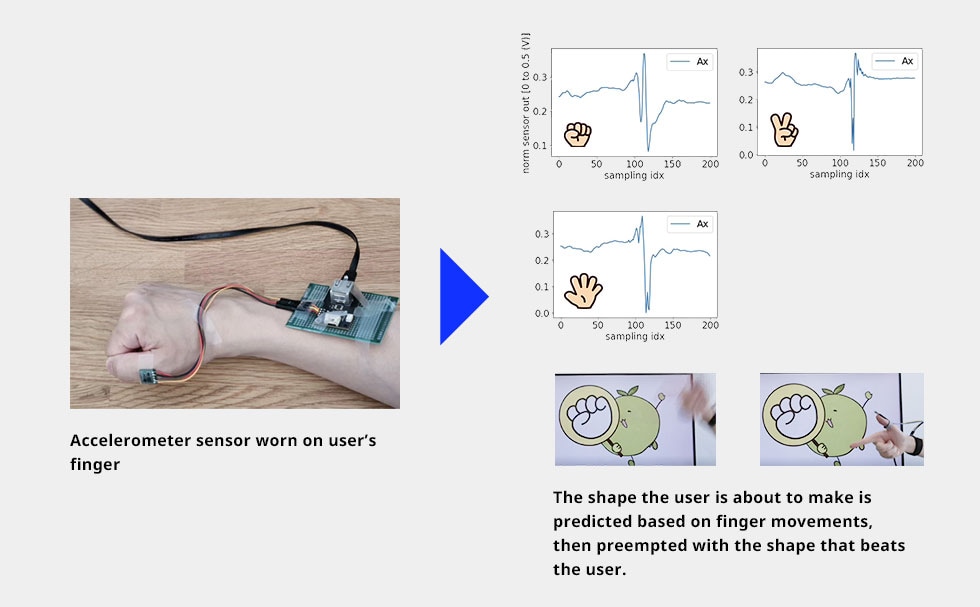

What’s more, this circuit possesses short-term memory—the ability to temporarily retain input and carry its influence into subsequent processes. Similar to how humans recall words heard seconds ago to continue a conversation, the chip briefly holds onto signals to anticipate what comes next. When paired with TDK’s accelerometer sensors, the system can learn finger movements in real time, predicting the next motion even before it is completed.
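
The idea of short-term memory can be illustrated with a small simulated reservoir. The sketch below is an assumption-laden toy, not a model of the chip: it feeds a single pulse into a random `tanh` network (all weights and names are hypothetical) and measures how far the state differs from a run with no pulse. The difference persists for several steps and then fades, which is the kind of briefly retained influence described above.

```python
import math
import random

rng = random.Random(1)
N = 10  # number of simulated reservoir nodes (assumed)
w_in = [rng.uniform(-1.0, 1.0) for _ in range(N)]
w_res = []
for _ in range(N):
    row = [rng.uniform(-1.0, 1.0) for _ in range(N)]
    total = sum(abs(v) for v in row)
    # Row absolute sums of 0.9 guarantee that past inputs fade over time.
    w_res.append([0.9 * v / total for v in row])

def step(state, u):
    """Advance the reservoir by one time step for scalar input u."""
    return [math.tanh(w_in[i] * u + sum(w * s for w, s in zip(w_res[i], state)))
            for i in range(N)]

def trajectory(inputs):
    """Return the list of reservoir states visited while reading inputs."""
    state, out = [0.0] * N, []
    for u in inputs:
        state = step(state, u)
        out.append(state)
    return out

quiet = [0.0] * 30            # no input at all
pulsed = [1.0] + [0.0] * 29   # one pulse at t = 0, then silence

# Largest per-node difference between the two runs at each time step.
gap = [max(abs(a - b) for a, b in zip(p, q))
       for p, q in zip(trajectory(pulsed), trajectory(quiet))]

for t in (0, 1, 2, 5, 10, 20):
    print(t, gap[t])
```

The decay rate here is set by the 0.9 scaling factor, an arbitrary choice for the example; a slower decay would mean a longer memory, at the cost of older inputs blurring into newer ones.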

“Unbeatable rock-paper-scissors” demo

A demonstration unit to be shown at CEATEC 2025 offers an intuitive experience of the chip’s capabilities. Wearing an accelerometer sensor on the hand, users play the classic “rock-paper-scissors” game against the machine. As soon as the system detects the user’s finger movements, the AI predicts the shape the user is about to make—even before it’s fully formed—ensuring the machine always wins. Even if players attempt unconventional shapes (e.g., “old-style” scissors), the AI adapts instantly through real-time learning and will anticipate the same shape if tried again. The demo provides a clear, engaging glimpse into the potential of the analog reservoir AI chip.

Based on finger movements detected by the sensor, the system rapidly predicts the shape the user is about to make and preemptively displays the shape that beats the user. Even if the user makes an unconventional shape (like “old-style” scissors), it learns in real time and always wins.
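
The article does not describe how the chip's learning is implemented, so the following is only a hedged sketch of one way real-time learning of a readout could work in software. It assumes the reservoir has already turned the sensor signal into a feature vector (the four-element vectors below are made up), and it updates a linear readout with a single normalised least-mean-squares step per example. The class `OnlineReadout` and the `BEATS` table are hypothetical names introduced for illustration.

```python
SHAPES = ["rock", "paper", "scissors"]
# Maps the user's predicted shape to the reply that beats it.
BEATS = {"rock": "paper", "paper": "scissors", "scissors": "rock"}

class OnlineReadout:
    """Linear readout trained one example at a time (normalised LMS)."""

    def __init__(self, n_features):
        self.w = [[0.0] * n_features for _ in SHAPES]

    def scores(self, x):
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in self.w]

    def predict(self, x):
        s = self.scores(x)
        return SHAPES[s.index(max(s))]

    def update(self, x, shape):
        """Move each output on x exactly onto its one-hot target."""
        energy = sum(xi * xi for xi in x)  # assumes x is not all zeros
        for k, row in enumerate(self.w):
            target = 1.0 if SHAPES[k] == shape else 0.0
            err = target - sum(wi * xi for wi, xi in zip(row, x))
            for i, xi in enumerate(x):
                row[i] += err * xi / energy

# Hypothetical reservoir features for two ways of starting "scissors".
usual_scissors = [0.9, -0.2, 0.1, 0.4]
old_style_scissors = [-0.3, 0.8, 0.5, -0.1]

readout = OnlineReadout(n_features=4)
readout.update(usual_scissors, "scissors")

before = readout.predict(old_style_scissors)   # unfamiliar movement
readout.update(old_style_scissors, "scissors") # learn from one example
after = readout.predict(old_style_scissors)    # same movement, tried again
reply = BEATS[after]
print(before, after, reply)
```

With a step size of 1, one normalised update is enough for the readout to reproduce the target on that same feature vector, which mirrors the "will anticipate the same shape if tried again" behaviour. A practical system would likely use a smaller step size or a different rule so that new examples do not overwrite earlier ones.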

What reservoir chips can unlock

Shinichiro Mochizuki

Advanced Products Development Center

Technology & IP Headquarters

TDK Corporation

Shinichiro Mochizuki, who leads the reservoir AI chip project at TDK Corporation’s Technology & IP Headquarters, spoke about its prospects.

“Unlike conventional AI, our reservoir chip mimics the cerebellum, enabling ultra-low power consumption and real-time learning at the edge. This can make a profound impact across industries. Thanks to the support of Professor Tetsuya Asai at Hokkaido University, we have been able to create one of the world’s most advanced reservoir AI chips. With TDK’s expertise in sensors and information processing, I envision this analog AI reservoir technology will open the door to advanced sensor applications where reservoirs are embedded near countless sensors, creating new value everywhere.”

Tetsuya Asai

Professor

Graduate School of Information Science and Technology

Hokkaido University

Professor Tetsuya Asai of Hokkaido University’s Graduate School of Information Science and Technology, TDK’s joint development partner, added:

“I am convinced that the analog reservoir AI chip we developed through this collaboration will pioneer new possibilities for edge AI. Reservoir computing is a next-generation AI technology capable of low-power, real-time learning, and I have high expectations for its deployment in society. TDK Corporation’s advanced sensor and analog circuit technologies are indispensable for making reservoir AI practical, and I look forward to seeing world-leading innovations emerge.”

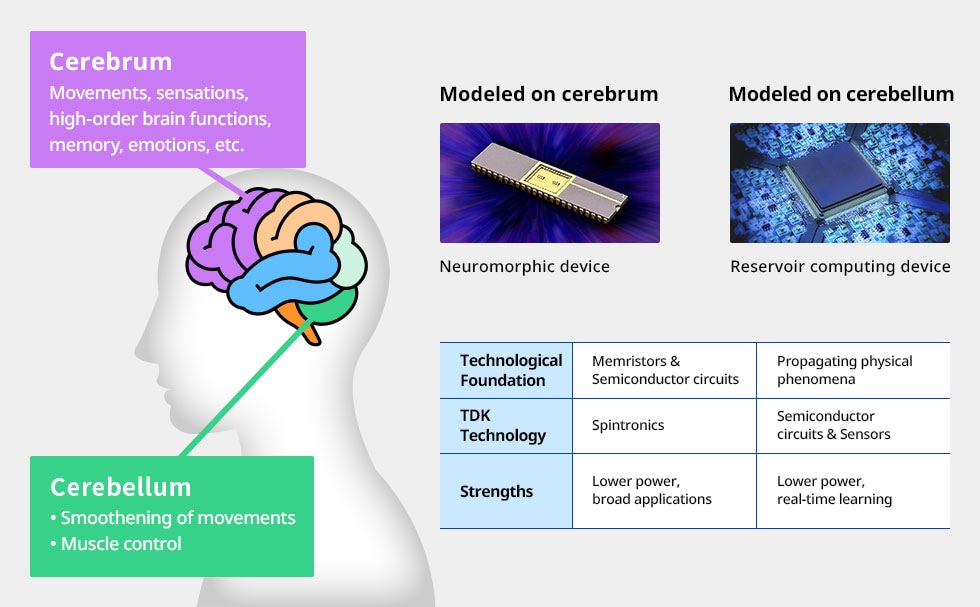

In 2024, TDK unveiled a neuromorphic device modeled on the cerebrum. Reservoir computing, in comparison, mimics the cerebellum. Each complements the other: neuromorphic devices excel at complex computation using little power, while reservoir AI chips handle real-time learning on time-series data. Together, they broaden the future of AI.

Comparison of devices modeled on the cerebrum and cerebellum

TDK is advancing the development of computational devices inspired by the functions of the human brain. Leveraging TDK’s core technologies and products, the company has developed the Spin Memristor, a neuromorphic device based on spintronics technology that mimics the cerebrum. The analog reservoir AI chip, a reservoir computing device modeled on the cerebellum, is the latest example.

Looking ahead, TDK will continue to collaborate with Hokkaido University while expanding applications in robotics, human interfaces, wearables, and mobility. Synergies are also expected with TDK SensEI, a group company that offers solutions that combine sensors and edge AI. By fusing its rich portfolio of sensors with AI chips, the company is committed to helping build an AI ecosystem market where humans and AI work together seamlessly.

TDK’s prototype analog reservoir AI chip won the Innovation Award at CEATEC 2025.