Kanerva’s book receded from view, and Hofstadter’s own star faded—except when he occasionally poked up his head to criticize a new A.I. system. In 2018, he wrote of Google Translate and similar technologies: “There is still something deeply lacking in the approach, which is conveyed by a single word: understanding.” But GPT-4, which was released in 2023, produced Hofstadter’s conversion moment. “I’m mind-boggled by some of the things that the systems do,” he told me recently. “It would have been inconceivable even only ten years ago.” The staunchest deflationist could deflate no longer. Here was a program that could translate as well as an expert, make analogies, extemporize, generalize. Who were we to say that it didn’t understand? “They do things that are very much like thinking,” he said. “You could say they are thinking, just in a somewhat alien way.”

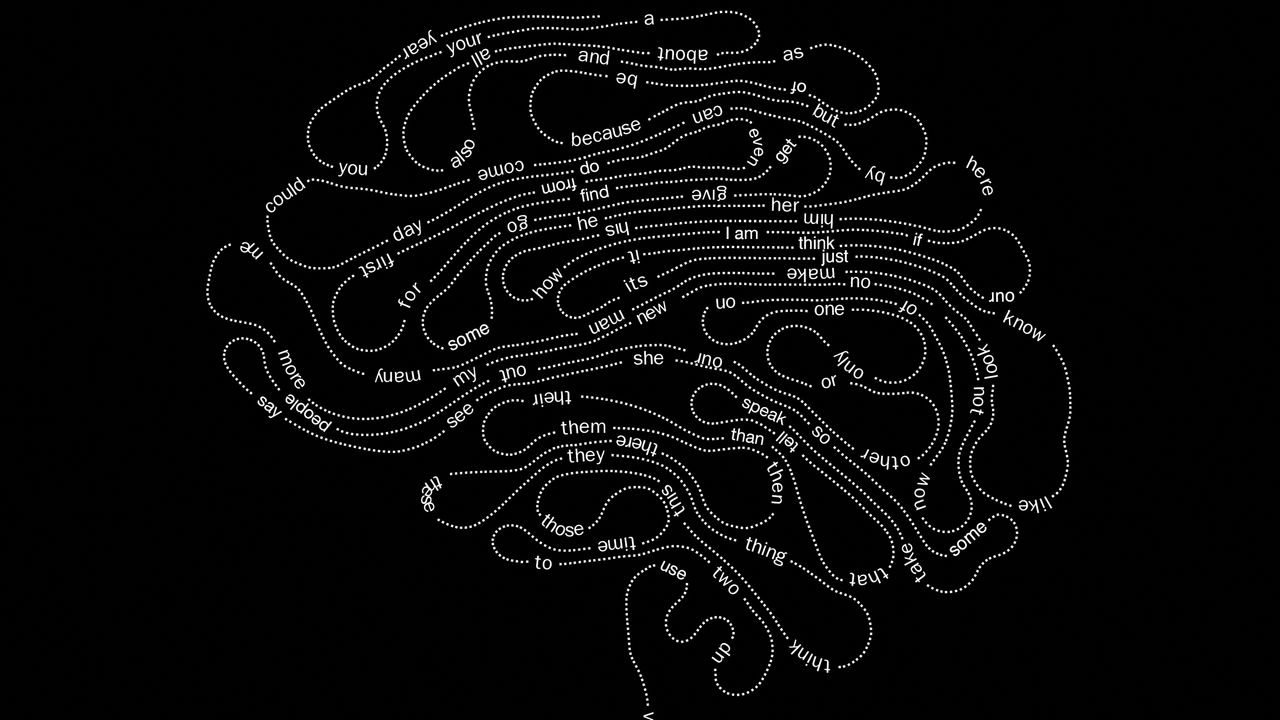

L.L.M.s appear to have a “seeing as” machine at their core. They represent each word with a series of numbers denoting its coördinates—its vector—in a high-dimensional space. In GPT-4, a word vector has thousands of dimensions, which describe its shades of similarity to and difference from every other word. During training, a large language model tweaks a word’s coördinates whenever it makes a prediction error; words that appear in texts together are nudged closer in space. This produces an incredibly dense representation of usages and meanings, in which analogy becomes a matter of geometry. In a classic example, if you take the word vector for “Paris,” subtract “France,” and then add “Italy,” the nearest other vector will be “Rome.” L.L.M.s can “vectorize” an image by encoding what’s in it, its mood, even the expressions on people’s faces, with enough detail to redraw it in a particular style or to write a paragraph about it. When Max asked ChatGPT to help him out with the sprinkler at the park, the model wasn’t just spewing text. The photograph of the plumbing was compressed, along with Max’s prompt, into a vector that captured its most important features. That vector served as an address for calling up nearby words and concepts. Those ideas, in turn, called up others as the model built up a sense of the situation. It composed its response with those ideas “in mind.”
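The "Paris" minus "France" plus "Italy" arithmetic can be tried at toy scale. The sketch below is only an illustration: the four-dimensional vectors are invented by hand for this example (real models learn thousands of dimensions from text), and the `VECTORS` table and the `cosine` and `analogy` helpers are hypothetical names, not part of any model or library.

```python
import math

# Hand-made toy vectors (an assumption for illustration, not learned data).
# Dimensions: [is_capital, france, italy, germany]
VECTORS = {
    "Paris":   [1.0, 1.0, 0.0, 0.0],
    "France":  [0.0, 1.0, 0.0, 0.0],
    "Rome":    [1.0, 0.0, 1.0, 0.0],
    "Italy":   [0.0, 0.0, 1.0, 0.0],
    "Berlin":  [1.0, 0.0, 0.0, 1.0],
    "Germany": [0.0, 0.0, 0.0, 1.0],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c):
    """Return the word nearest to vec(a) - vec(b) + vec(c).

    The three input words are excluded, matching the article's
    "nearest other vector".
    """
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(target, VECTORS[w]))


print(analogy("Paris", "France", "Italy"))
```

Subtracting "France" strips out the country component and leaves the capital component; adding "Italy" moves the result to where "Rome" sits. In a trained model the dimensions do not carry tidy labels like these, but the same nearest-neighbor lookup applies.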

A few months ago, I was reading an interview with an Anthropic researcher, Trenton Bricken, who has worked with colleagues to probe the insides of Claude, the company’s series of A.I. models. (Their research has not been peer-reviewed or published in a scientific journal.) His team has identified ensembles of artificial neurons, or “features,” that activate when Claude is about to say one thing or another. Features turn out to be like volume knobs for concepts; turn them up and the model will talk about little else. (In a sort of thought-control experiment, the feature representing the Golden Gate Bridge was turned up; when one user asked Claude for a chocolate-cake recipe, its suggested ingredients included “1/4 cup dry fog” and “1 cup warm seawater.”) In the interview, Bricken mentioned Google’s Transformer architecture, a recipe for constructing neural networks that underlies leading A.I. models. (The “T” in ChatGPT stands for “Transformer.”) He argued that the mathematics at the heart of the Transformer architecture closely approximated a model proposed decades earlier—by Pentti Kanerva, in “Sparse Distributed Memory.”

Should we be surprised by the correspondence between A.I. and our own brains? L.L.M.s are, after all, artificial neural networks that psychologists and neuroscientists helped develop. What’s more surprising is that when models practiced something rote—predicting words—they began to behave in such a brain-like way. These days, the fields of neuroscience and artificial intelligence are becoming entangled; brain experts are using A.I. as a kind of model organism. Evelina Fedorenko, a neuroscientist at M.I.T., has used L.L.M.s to study how brains process language. “I never thought I would be able to think about these kinds of things in my lifetime,” she told me. “I never thought we’d have models that are good enough.”

It has become commonplace to say that A.I. is a black box, but the opposite is arguably true: a scientist can probe the activity of individual artificial neurons and even alter them. “Having a working system that instantiates a theory of human intelligence—it’s the dream of cognitive neuroscience,” Kenneth Norman, a Princeton neuroscientist, told me. Norman has created computer models of the hippocampus, the brain region where episodic memories are stored, but in the past they were so simple that he could only feed them crude approximations of what might enter a human mind. “Now you can give memory models the exact stimuli you give to a person,” he said.