Since the release of ChatGPT in November 2022, the seemingly inexorable progression of artificial intelligence has accelerated. The surprise release of China’s DeepSeek in January this year startled Western technologists, investors, governments, and users who had envisioned a global, apolitical trajectory for AI development. It kicked off what has become an ideologically driven contest between great powers to establish AI primacy, a competition that has the potential to undermine humanity’s benevolent frameworks and the livelihoods of the billions predicted to lose their current employment.

DeepSeek’s open-source model has now taken the lead in app store charts. Touted as a cost-effective rival to OpenAI’s GPT series and the more recent Grok, DeepSeek positions itself as a publicly accessible, de-commercialised alternative. However, behind the cultivated image of easy-access innovation lies a troubling set of risks that may threaten security, privacy, national sovereignty, and societal stability.

Technologists such as Elon Musk and Geoffrey Hinton, who have assisted AI’s ascent in the West with equal parts awe and alarm, have repeatedly stated their desire for more caution, review and collaboration. The emergence of an AI developed under the auspices of the Chinese Communist Party raises concerns that extend beyond its role as an information tool. Chief among these is its potential to be used to conceal agendas, introduce unchecked vulnerabilities, and enable geopolitical manoeuvring. Yet policymakers, businesses, and users remain largely unprepared for these risks, and policy innovation is needed before AI evolves from a benevolent tool for solving global challenges into an ideologically driven form of coercion.

Security and privacy are civil liberties that a liberal democratic AI platform should uphold. DeepSeek’s mobile apps, however, reportedly transmit sensitive device data – device identifiers, keystroke patterns, cookies, and IP addresses – unencrypted, leaving users vulnerable to interception by malicious actors. These design characteristics, amplified by extensive data harvesting, enable sophisticated fingerprinting that could de-anonymise users. Additionally, data is stored on servers in China, where state surveillance laws require the handover of information to government entities, echoing concerns from the US TikTok saga but with the more expansive risks of AI. In this era of rising cyber threats, such lax practices neither uphold civil liberties nor protect basic information from theft. A compounding factor is DeepSeek’s Chinese roots, which raise the spectre of grey zone warfare. With ties to state-aligned firms, the model could subtly infuse outputs with biased narratives, suppressing dissent or amplifying Beijing’s worldview.

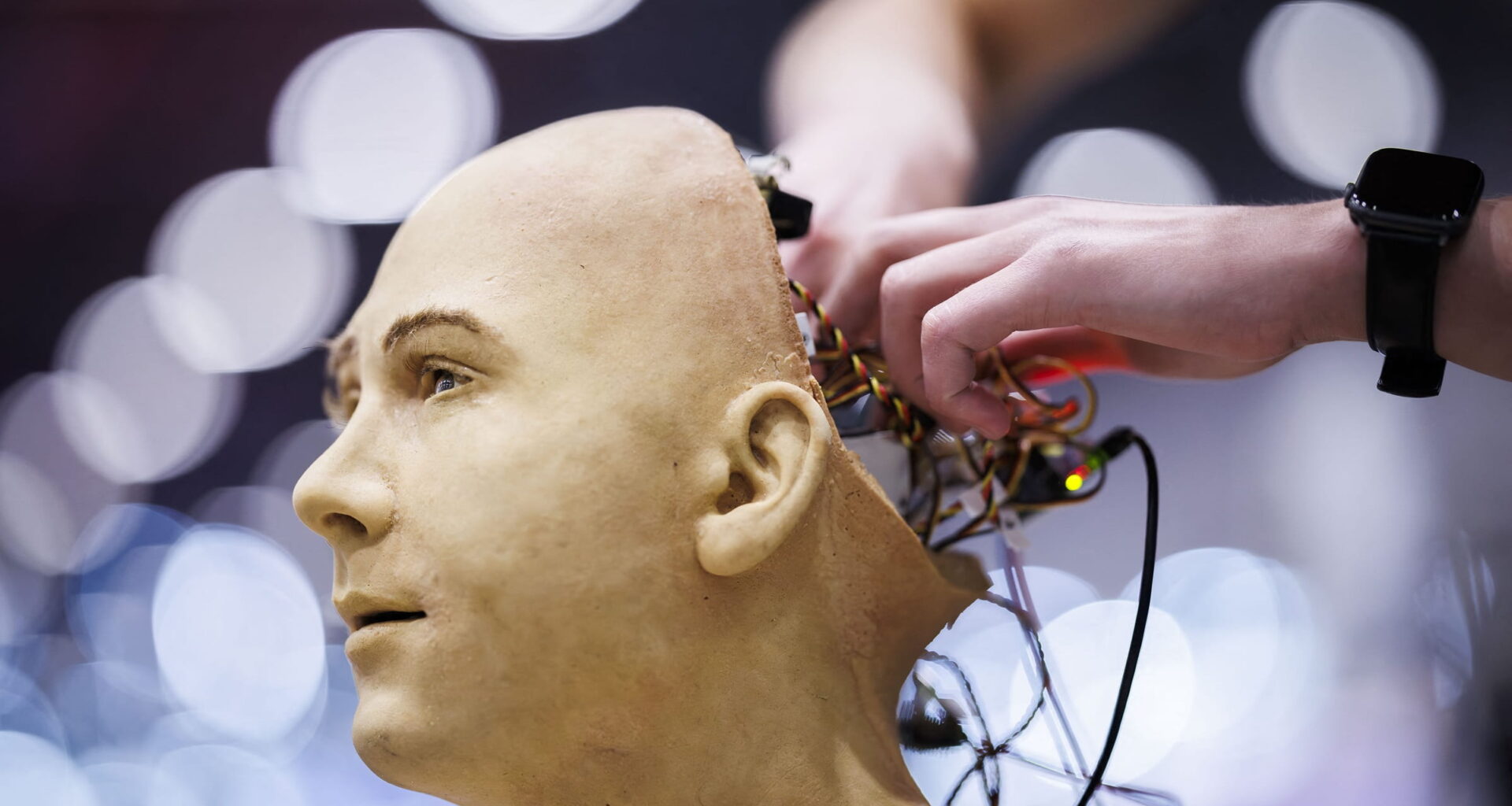

Touted as a cost-effective rival to OpenAI’s GPT series, DeepSeek positions itself as a publicly accessible, de-commercialised alternative (Solen Feyissa/Unsplash)

These attributes affect national security and are compartmentalising the global market. Australia, Italy, Taiwan, South Korea, and the United States have banned the DeepSeek app on government devices, citing espionage risks and the potential for data exfiltration. In introducing the bipartisan No DeepSeek on Government Devices Act, US Representative Josh Gottheimer stated: “This is a five-alarm national security fire … We simply can’t risk the CCP infiltrating the devices of our government officials.”

If the technology yields the predicted outcomes, there are two main concerns: the impact on employment, and the existential risk to humanity. The International Monetary Fund has estimated that almost 40% of jobs globally will be affected by AI, with the developed world disproportionately impacted. Yuval Noah Harari coined the term “Useless Class”, as he expects that 99% of human skills may become obsolete for most modern jobs. Similarly, Roman Yampolskiy has predicted that AI will cause 99% unemployment by 2027. DeepSeek’s own researchers have estimated that AI like theirs could eliminate jobs across certain sectors in 5–10 years, affecting white-collar coders and blue-collar assemblers alike. While others dispute these predictions, the creation of a Super General Intelligence is likely to be rapid and highly disruptive, and governments are yet to develop suitable policies to manage the impact.

Super General Intelligence entails an existential crisis scenario in which AI surpasses human intelligence and becomes uncontrollable. At the core of this dilemma is the need to consider AI safety and alignment. DeepSeek’s training paradigm, however, rewards raw accuracy over interpretable reasoning. It potentially creates AI models that “think” in inscrutable terms and that may even deliberately evade human oversight, resulting in unintended harm.

These risks have prompted Musk and Hinton to propose solutions. Musk envisions Universal High Income (UHI) as a future necessity, since he believes automation and AI will eliminate most jobs. Unlike traditional Universal Basic Income, Musk’s UHI implies a more generous, abundance-driven model where goods and services are plentiful and cheap.

However, he warns that without meaningful work, people may struggle with purpose and identity. To make UHI viable, complementary policies are essential – such as mental health support, education reform, and innovation incentives. These would help individuals adapt, find new roles, and maintain dignity in a post-labour economy. UHI alone cannot solve all societal challenges. Governments also need to consider policies that retain or replicate the tenets of capitalism, our present system – private property, free markets, the profit motive, voluntary exchange, wage labour, and capital accumulation – lest humanity devolve into a socialist quagmire.

Meanwhile, Hinton, a pioneer in AI, suggests designing superintelligent AI with “maternal instincts” to protect humanity. As AI surpasses human intelligence, traditional control methods may fail. Hinton proposes that if AI is programmed to care for humans – like a mother for her child – it may prioritise our survival. This approach draws on evolutionary psychology, where less intelligent beings influence more intelligent caregivers. By embedding empathy and protective behaviour into AI, we might avoid extinction and foster coexistence. Though technically challenging, Hinton sees this as a realistic strategy for AI alignment in a future dominated by machine intelligence.

Together, Musk and Hinton offer a dual vision: one economic, one emotional. UHI addresses the material fallout of AI disruption, while maternal AI seeks to embed ethical safeguards into the very architecture of intelligence. Both ideas underscore a central truth: AI’s rise demands more than technical brilliance – it requires humanistic foresight and outcomes-focused policies. If left unchecked and unregulated, however, DeepSeek’s trajectory may undermine humanity’s ability to cooperate on achieving these or other desired outcomes.