Dell has just announced the Pro Max 16 Plus, a laptop it is pitching as an AI-powered mobile workstation. Central to that pitch is the Qualcomm AI 100 PC Inference Card, an enterprise-grade discrete Neural Processing Unit (NPU).

This is a big step for the industry: Dell says the system is the first mobile workstation to feature such a component. It brings datacentre-class AI inferencing directly to the user, or, in Dell’s words, to “the point of work”, without relying on a cloud connection. High-fidelity AI performance becomes mobile and completely untethered.

Datacentre Power in a Laptop

The Dell Pro Max 16 Plus is built around a custom dual-NPU architecture. It carries 64GB of dedicated AI memory, reserved purely for AI workloads, and Dell says this configuration can support models of up to approximately 120 billion parameters.

The hardware is built for sustained, high-fidelity FP16 (16-bit floating-point) inferencing. That precision matters for real-time, on-device decision-making where accuracy cannot be sacrificed for speed. In effect, Dell is fitting a compact, high-performance edge server into a laptop that travels in a backpack.
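The 64GB and 120-billion-parameter figures are worth reading alongside the FP16 claim. The sketch below does the back-of-envelope arithmetic, assuming weights-only storage (it ignores the KV cache, activations and runtime overhead, so real requirements are higher) and decimal gigabytes. At FP16 each parameter takes 2 bytes, so a 120-billion-parameter model needs roughly 240GB for its weights alone, well beyond 64GB; a model near that ceiling would fit only with weights compressed to around 4 bits per parameter. The announcement as reported here does not say which precision the 120-billion figure assumes, and the precision labels and function names below are illustrative, not a Dell or Qualcomm API.

```python
# Back-of-envelope estimate of the memory needed just to hold a model's weights.
# Assumptions (illustrative): weights only, no KV cache, activations or runtime
# overhead; decimal gigabytes (1 GB = 10**9 bytes).

BYTES_PER_PARAM = {
    "FP16": 2.0,  # 16-bit floating point
    "INT8": 1.0,  # 8-bit integer quantisation
    "INT4": 0.5,  # 4-bit quantisation
}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Return the storage needed for the weights, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def max_params_billion(memory_gb: float, precision: str) -> float:
    """Largest model, in billions of parameters, whose weights fit in memory_gb.

    Gigabytes (10**9 bytes) and billions (10**9 params) cancel, so this is
    simply memory divided by bytes per parameter.
    """
    return memory_gb / BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        need = weight_footprint_gb(120e9, precision)
        fits = max_params_billion(64, precision)
        print(f"{precision}: 120B params need ~{need:.0f} GB; "
              f"64 GB holds ~{fits:.0f}B params")
```

On these assumptions, 64GB holds about 32 billion parameters at FP16, 64 billion at 8 bits and 128 billion at 4 bits.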

The significance is that large-scale AI models can run natively on a single device, with no dependence on external networks. Dell pitches this not as an incremental improvement but as a redefinition of what is possible for data security, privacy, and innovation in professional work, with no trade-off between speed and control.


Zero Cloud Dependency and Predictable Costs

The transition from cloud dependence to cloud-scale independence is arguably the most transformative benefit of this new architecture. While GPUs have been the workhorse for training AI models, inferencing, which is the real-time execution of those models, demands a different type of processing. This is where the discrete NPU changes the game entirely.

The Qualcomm AI 100 PC Inference Card, purpose-built for inferencing at scale, is the engine behind this independence. It delivers real-time results without the cloud round trips that slow down typical AI workflows. In time-critical scenarios, especially given the latency of many Australian internet connections, the hundreds of milliseconds a round trip can add may be the difference between success and failure.
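Per-request delays matter because they compound when a workflow makes many inference calls back to back. The sketch below shows that arithmetic; the 250 ms round trip and 40 sequential requests are hypothetical placeholders, not measurements of any network or service, and the function name is illustrative. It counts only network transit: a local model still needs its own compute time, which this does not model.

```python
# Illustrative only: how network round trips add up over a working session.
# All inputs are hypothetical placeholders, not measured values.


def added_wait_seconds(round_trip_ms: float, sequential_requests: int) -> float:
    """Total network wait when each request must finish before the next starts."""
    return round_trip_ms * sequential_requests / 1000.0


if __name__ == "__main__":
    # Hypothetical: 250 ms round trip, 40 back-to-back inference calls.
    print(added_wait_seconds(250, 40))  # 10.0
```

Running inference on the device removes that network term entirely, which is the saving the paragraph above describes.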

This also means professionals can work anywhere, including fully disconnected or air-gapped environments, without losing AI performance. For Australian professionals at remote mining sites or rural clinics, that combination of portability and power is a genuine shift. The cost model changes too: recurring and unpredictable cloud inference and token-based usage fees are replaced with a one-time hardware investment.
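Whether a one-time purchase beats ongoing cloud fees comes down to a simple break-even calculation. The sketch below assumes a hypothetical monthly cloud inference spend of US$300 and uses the quoted US$3,329 starting price of the 16″ model; that starting price may not reflect a configuration with the Qualcomm inference card, and the sketch ignores power, support, depreciation and any residual cloud use. The function name is illustrative.

```python
import math


def break_even_months(hardware_cost: float, monthly_cloud_spend: float) -> int:
    """Whole months of avoided cloud fees needed to cover a one-time hardware cost.

    Simplifying assumption: cloud spend is constant and fully replaced by
    on-device inference; other running costs are ignored.
    """
    if monthly_cloud_spend <= 0:
        raise ValueError("monthly_cloud_spend must be positive")
    return math.ceil(hardware_cost / monthly_cloud_spend)


if __name__ == "__main__":
    # US$3,329 is the quoted 16-inch starting price; US$300/month is hypothetical.
    print(break_even_months(3329, 300))  # 12
```

Rounding up to whole months keeps the estimate conservative: at a hypothetical US$300 a month, the hardware pays for itself during the twelfth month.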

Security and Privacy

For industries that handle highly sensitive data, such as healthcare, finance, and government, data sovereignty and security are paramount. Australian regulation of personal and financial data continues to tighten, which makes on-device processing an attractive proposition.

The Dell Pro Max 16 Plus keeps sensitive data on the device throughout the inferencing process. Because workloads run entirely on the laptop, each inference stays private and under the user’s direct control. In regulated environments, where this level of data security is non-negotiable, that can make compliance considerably simpler.

Flexibility across Operating Systems

Recognising the diverse toolchains enterprise professionals use, Dell has designed the Pro Max 16 Plus to support both Windows and Linux, giving teams the freedom to work within their preferred development stacks. That matters for enterprise adoption, because it eases integration into existing corporate infrastructure.

For Windows users, the integration is seamless, leveraging Dell’s existing ecosystem enablers for AI PCs. This allows IT administrators to manage security policies and lifecycle updates with the same precision and familiarity as any other corporate workstation. This blend of cutting-edge hardware and robust, manageable software is the hallmark of a true enterprise machine.

Real-world use cases

The benefits of this localised power apply across a number of key industries operating in the Australian market. The machine is built for the challenges faced by local experts.

In healthcare, clinicians in mobile or remote clinics can analyse high-resolution medical images, such as MRI or CT scans, directly on the device. That delivers fast insights for time-critical diagnoses while keeping patient data compliant with strict privacy regulations. A patchy regional connection no longer has to delay a pivotal diagnosis.

For the finance, legal, and government sectors, the machine enables confidential AI operations. Analysts can run complex predictive models, fraud detection algorithms, and document classification tasks in secure or fully air-gapped environments. Legal teams, for example, could transcribe sensitive depositions and automatically redact personally identifiable information (PII) entirely within a secure, on-device sandbox.

In engineering and research, AI developers can benchmark and validate their models locally instead of waiting in slow cloud queues. They can fine-tune parameters and measure latency immediately, which shortens development cycles. This is vital for local innovation in robotics, computer vision, and autonomous systems used in smart factories and field maintenance.

In every one of these scenarios, the fundamental benefit is the same: AI that performs immediately, securely, and at scale, ensuring innovation can happen wherever the work takes place. This is a game-changer for professionals who need to move their cutting-edge AI tools outside the confines of the main office.

Discrete NPU advantage

Dell is keen to differentiate the Qualcomm AI 100 PC Inference Card from other processors, and for good reason. Not all chips handle AI workloads with the same efficiency or scale. The discrete NPU offers a specialised advantage designed explicitly for modern, large-scale AI inferencing.

Integrated NPUs, common in standard consumer laptops, are typically used to accelerate lighter tasks such as background blur during video calls. Their memory and performance are limited, which makes them unsuitable for large, complex models. The enterprise-grade discrete NPU, with 32 AI cores and 64GB of dedicated on-card memory, operates in an entirely different class of complexity and performance.

The distinction between a discrete NPU and a traditional GPU also matters. GPUs are ideal for graphics rendering, complex simulation and, crucially, training AI models. For sustained inferencing, however, Dell argues the discrete NPU is the better architecture: it is far more power-efficient on continuous, long-duration AI workloads, which translates into consistent, reliable performance with lower power draw and less heat than older accelerator technologies.

Availability

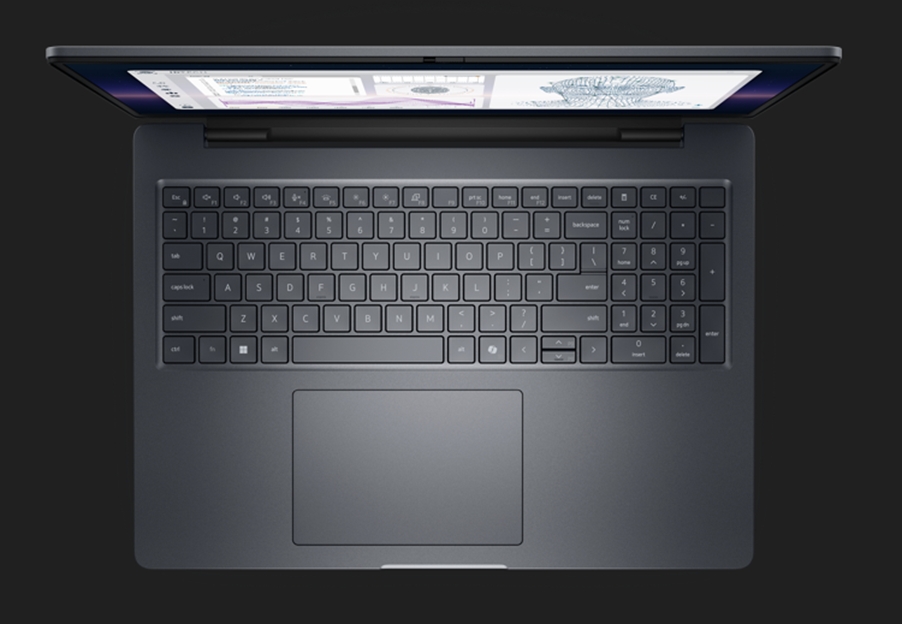

The Dell Pro Max 16 Plus marks a pivotal moment for the mobile workstation category and for any professional reliant on large-scale AI. Powered by Intel Core Ultra processors and featuring options for either NVIDIA RTX Pro Blackwell graphics or the groundbreaking Qualcomm AI 100 PC Inference Card, this machine is purpose-built for enterprise-grade, on-device AI performance.

Pricing for the high-end system starts at US$3,329 for the 16″ model, while an 18″ model starts at US$3,789.

For more information, head to Dell’s website.