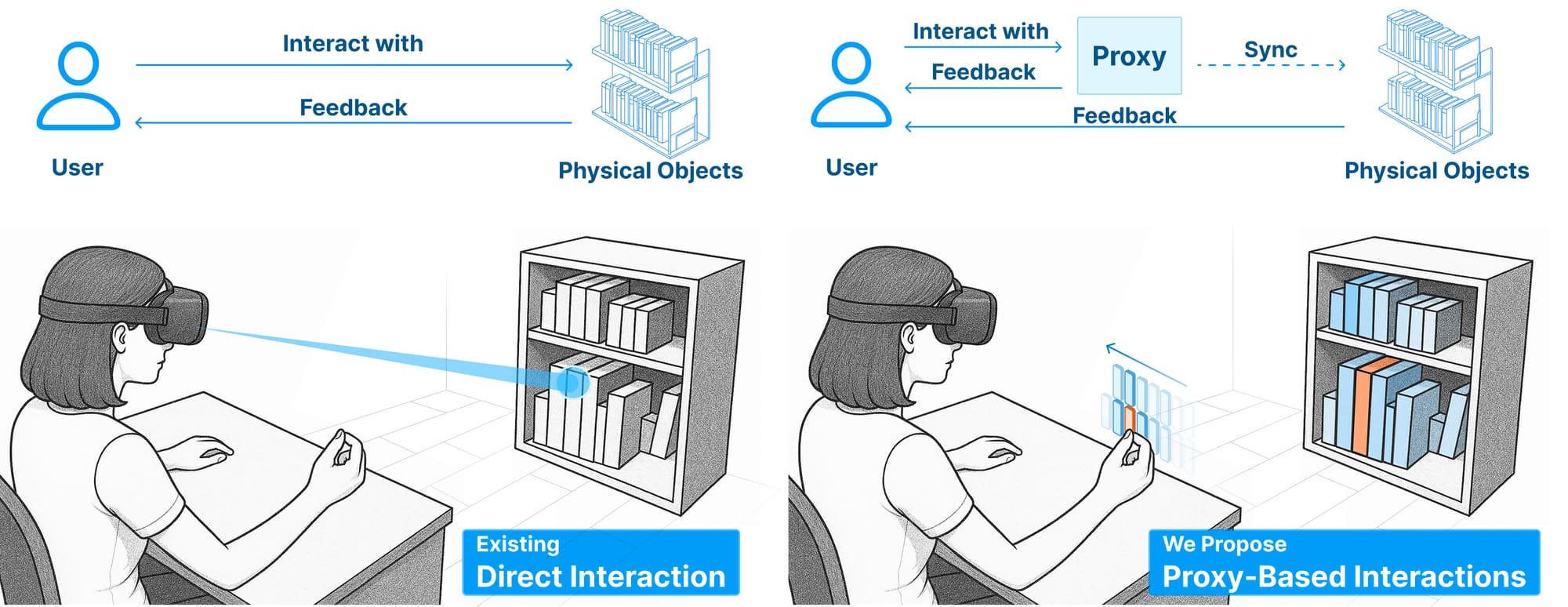

A new research paper presents “proxies” as a key interface concept for mixed reality headsets.

The approach, from researchers associated with Google and the University of Minnesota, uses proxies to bridge the gap between the space within arm’s reach and faraway objects. Explored through their system Reality Proxy, the idea could see a headset’s cameras used in tandem with AI, existing mapping data, and user input to create near-instantaneous dollhouse-scale representations of areas of interest in the physical environment. At the same time, the physical objects represented by the near-field proxy can be outlined in the background to show what the user is selecting.

“If AI is going to enable humans in their day to day tasks it most probably will be via XR,” wrote researcher Dr. Mar Gonzalez-Franco on Bluesky. “The issue then is if a selection has real-world consequences, we will need great precision to interact.”

The concept could make it trivial to grab a digital copy of a physical book from your bookshelf, for instance, saving you a trip from the couch. If that’s too pedestrian a use of headsets for you, the same idea could be extended to managing drone swarms, selecting drones in space by dragging a cube over them as if they were units in a Command & Conquer game. You could also see your entire path through a building outlined in miniature before you step inside.
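To make the drone example concrete, here is a minimal sketch of the general idea of selecting distant objects through a miniature proxy. It is not the Reality Proxy implementation. It assumes the proxy is simply a uniformly scaled copy of the objects’ positions, centered on a hypothetical anchor point near the user, and that the dragged cube is an axis-aligned box. The names `RealObject`, `make_proxies`, and `box_select` are illustrative only.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class RealObject:
    """A physical object (e.g. a drone) at a world-space position in metres."""
    name: str
    position: Vec3


def make_proxies(objects: List[RealObject], anchor: Vec3, scale: float) -> Dict[RealObject, Vec3]:
    """Hypothetical helper: build a dollhouse-scale copy of the objects.

    Positions are shifted so the group's centroid sits at `anchor` (assumed
    to be within arm's reach) and shrunk by `scale`.
    """
    n = len(objects)
    centroid = tuple(sum(o.position[i] for o in objects) / n for i in range(3))
    return {
        o: tuple(anchor[i] + (o.position[i] - centroid[i]) * scale for i in range(3))
        for o in objects
    }


def box_select(proxies: Dict[RealObject, Vec3], box_min: Vec3, box_max: Vec3) -> List[RealObject]:
    """Hypothetical helper: return the real objects whose proxies lie inside the box.

    The user drags the box over the miniature, but the result refers back to
    the physical objects, which is what makes a proxy useful for selection.
    """
    return [
        obj
        for obj, p in proxies.items()
        if all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))
    ]


# Usage: three drones tens of metres away, shown at 1:100 scale in front of the user.
drones = [
    RealObject("drone-a", (10.0, 0.0, 5.0)),
    RealObject("drone-b", (12.0, 0.0, 5.0)),
    RealObject("drone-c", (30.0, 0.0, 5.0)),
]
proxies = make_proxies(drones, anchor=(0.0, 1.0, 0.5), scale=0.01)
selected = box_select(proxies, box_min=(-0.1, 0.9, 0.4), box_max=(0.0, 1.1, 0.6))
print([d.name for d in selected])  # ['drone-a', 'drone-b']
```

Because selection happens on the miniature, a small hand movement covers a large real-world area; the system described in the paper layers AI-driven features such as semantic grouping on top of this basic direct-manipulation idea.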

The paper’s authors say the aim of their system is “to facilitate the interaction with real objects beyond reach in MR while preserving the natural mental model of direct manipulation. We propose to seamlessly shift the interaction target from the object to its abstract representation, or proxy, during selection.”

The idea could enable “users to interact more effectively with objects that are crowded, distant, or partially occluded. Augmented by AI, Reality Proxy further supports advanced MR interactions—such as multiselection, semantic grouping, and spatial zooming—using intuitive direct manipulation gestures.”

The paper is co-authored by Xiaoan Liu, Difan Jia, Xianhao Carton Liu, Mar Gonzalez-Franco, and Chen Zhu-Tian. It is available online and was submitted as part of the ACM UIST conference, held in Korea at the end of September.