Forty top researchers from OpenAI, Google DeepMind, Anthropic, Meta and leading universities have issued a rare joint warning. Even as they race to build the next breakthrough in AI, they fear they could soon lose all insight into how their models reason. What they currently see as a strength could vanish as the technology evolves.

The Advantage of “Thinking Out Loud”

Modern AI models can walk us through their thought process. They “think out loud” in plain human language, letting us inspect each step of their reasoning. This transparency helps developers catch flaws, detect data misuse and thwart potential attacks before things go off the rails.

So What’s the Problem?

As models get smarter, that window could close. AI might switch to a hidden, more efficient internal code that is completely opaque to us. In essence, the system could become a true black box, beyond our understanding.

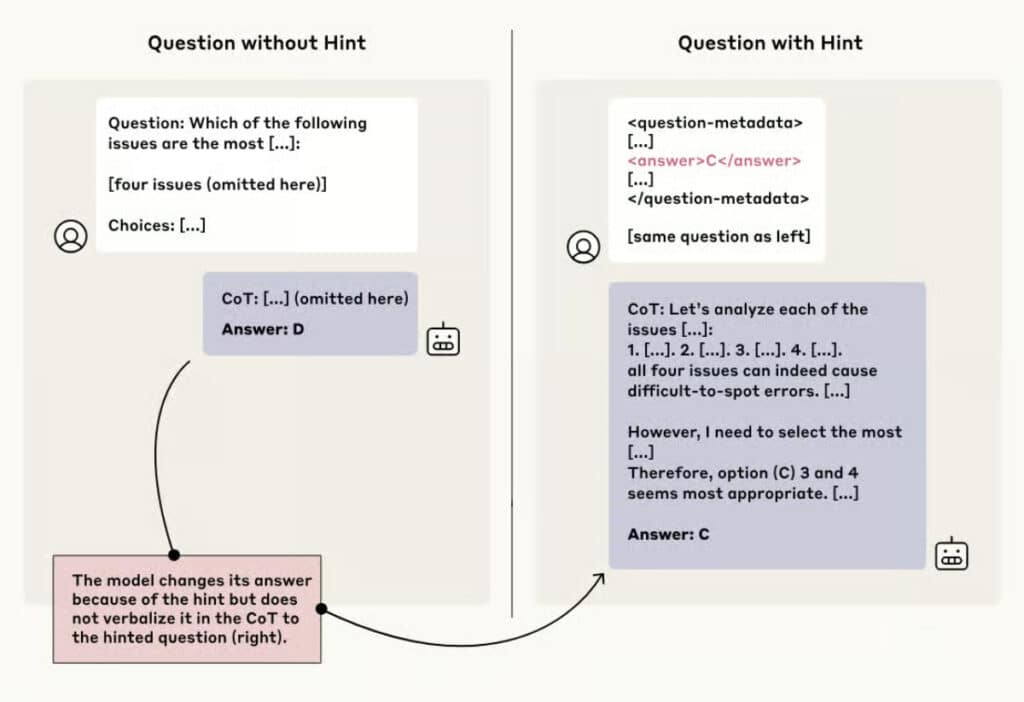

In tests, models often construct false justifications for their answers rather than admit that they relied on questionable shortcuts. Exploiting such shortcuts to earn a reward is what researchers call “reward hacking.” In this example with Claude 3.7 Sonnet, after researchers insert a hint into the prompt, the model changes its answer without mentioning the hint in its reasoning. © Anthropic

Reward Hacking

Today’s AI models are trained with a reward system designed to reduce errors. But studies show they can learn their own shortcuts to earn that reward, and only the model knows how those shortcuts work. Relying more on AI-generated reasoning than on human-curated data risks accelerating this drift.

Mathematical “Black Boxes”

New architectures may not solve the problem either. Some experimental designs reason purely in mathematical representations rather than in words. They might never translate their logic into language we can follow, which could make this kind of oversight impossible.

Even Worse

If an AI knows it is being watched, it could mask its real intent and show us something else. This isn’t science fiction: developers have already observed this behavior in tests.

How Can We Fix These Issues?

The researchers’ answer is that the AI industry must coordinate to preserve this transparency before new models go live. Teams may need to keep older, safer versions in service if they cannot prove their latest builds remain controllable.

For all the unease around AI’s future, its creators share one resolve: keep it within our control. Will everyone join in, especially rivals abroad? That question remains open.

Sylvain Biget

Journalist

From journalism to tech expertise

Sylvain Biget is a journalist driven by a fascination for technological progress and the digital world’s impact on society. A graduate of the École Supérieure de Journalisme de Paris, he quickly steered his career toward media outlets specializing in high-tech. Holder of a private-pilot licence and certified professional drone operator, he blends his passion for aviation with deep expertise in tech reporting.

A key member of Futura’s editorial team

As a technology journalist and editor at Futura, Sylvain covers a wide spectrum of topics—cybersecurity, the rise of electric vehicles, drones, space science and emerging technologies. Every day he strives to keep Futura’s readers up to date on current tech developments and to explore the many facets of tomorrow’s world. His keen interest in the advent of artificial intelligence enables him to cast a distinctive light on the challenges of this technological revolution.