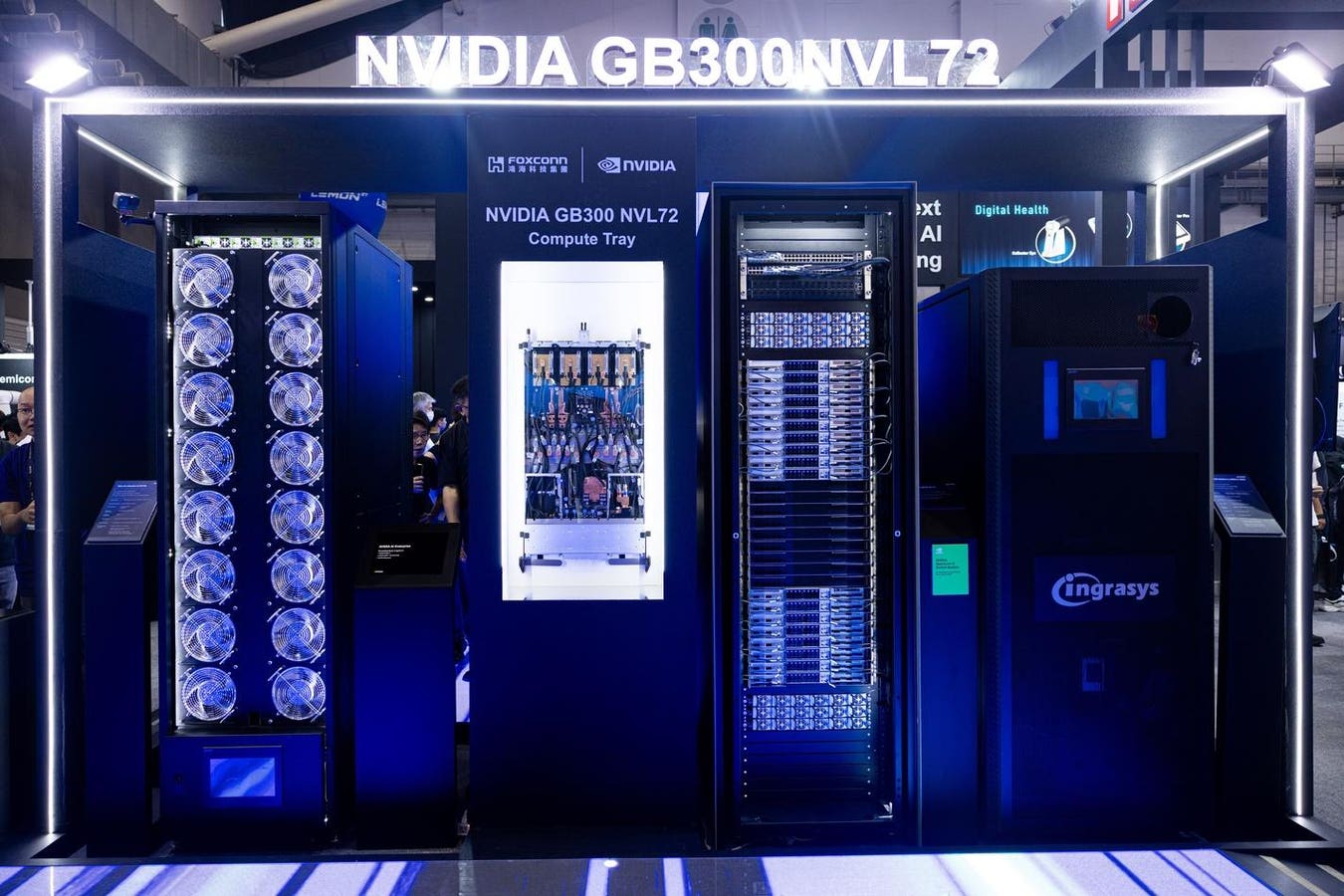

An Nvidia Corp. GB300 NVL72 GPU on display at the Foxconn Technology Co. booth during the Computex conference in Taipei, Taiwan, on Tuesday, May 20, 2025. Computex kicked off Monday in Taipei, and as in years past will draw industry chieftains from Huang and Qualcomm Inc.’s Cristiano Amon to Young Liu of Foxconn, which makes the bulk of the world’s iPhones and Nvidia servers. Photographer: Annabelle Chih/Bloomberg

© 2025 Bloomberg Finance LP

Amid all the speculation and handwringing about an imminent AI bubble, we’re overlooking an inconvenient truth: We are in the earliest stages of a once-in-a-lifetime AI supercycle.

Simply put, there aren’t enough wafers to meet AI demand. Chips, memory, storage: everything being built is already spoken for before it comes off the production line. There isn’t enough concrete or rebar, and there aren’t enough construction workers, to build the datacenters. Every part of the AI supply chain is constrained.

Demand is insatiable across the board. Micron Technology Inc. just shut down its consumer business to pivot to AI chips. Intel Corp. is surging after a brutal patch because the market knows its time is coming and its foundries will soon be full. And with all of this, enterprise AI hasn’t even taken off yet. Salesforce CEO Marc Benioff shared on X yesterday that the company’s Agentforce has consumed 3.2 trillion tokens so far. This product is in its very early stages, and most Salesforce users are just starting to experiment.

But the tech industry loves a good debate. Recent speculation that Google’s TPU would be the demise of NVIDIA reminded me of the DeepSeek moment earlier this year, both in the undeserved negative reaction it drove toward NVIDIA and in the significant factual inaccuracies that came along with it. The current obsession with GPU versus XPU, TPU, or any other custom AI chip fundamentally misses the moment we’re in. It makes for a great media moment, but the story doesn’t hold water. This isn’t a zero-sum game, not even close. In fact, it’s an all-hands-on-deck emergency response to unprecedented demand.

After spending a week at AWS’s re:Invent conference in Las Vegas, I’m more convinced than ever that we’re in the earliest phase of an AI transformation. The notion that we’re in an AI bubble is nearly impossible to defend when every component remains severely constrained. We heard this implied in comments from AWS CEO Matt Garman. Trainium 2 is sold out, and the newly available Trainium 3 is fully committed as well. Google launched its new Gemini 3 model, and it’s being pushed to the limit, so even if some TPUs are going to Anthropic, there aren’t nearly as many being made as are needed.

And then there are the manufacturing constraints. TSMC is ramping capacity as fast as physically possible, but it is well known that we face hard constraints on wafer production and packaging capacity. When NVIDIA Corp. and Apple Inc. are consuming enormous volumes, there’s only so much left for Broadcom Inc. and Marvell Technology Inc., the leaders in custom chip development for clouds like Google, Amazon, and Meta, to scale their custom chip production. Tesla turned to Samsung because it needed more AI chips.

This constraint is precisely why every AI chip manufactured will find a buyer. Intel’s 18A process will do well and its 14A should be a big success. AWS Trainium 4 will sell out completely. Large model companies will gladly use TPUs. AMD Inc. will capture more than 10% of the GPU market simply by selling everything it can manufacture, with hyperscalers and neoclouds deploying systems to serve customers who prefer AMD or simply need more compute capacity. We fully expect new entrants like Qualcomm, Arm, and Groq to all have success entering the AI chip race. Like I said, if it can be built, it will be sold.

GPUs Have a Bright Future Even As Custom Chips Surge

Running and optimizing software for multiple AI chips is enormously complex. Most companies simply won’t attempt it, which gives GPUs a natural advantage. Custom XPUs like Google’s TPU, AWS Trainium, and Meta Platforms Inc.’s MTIA will largely remain the domain of hyperscalers and massive frontier model companies.

These chips really aren’t designed to outperform NVIDIA, even if that narrative gets packed in there somewhere. They’re engineered for better economics at scale. For the likes of Google, Amazon, Meta, and Microsoft, that makes perfect sense: when you’re customer zero with virtually unlimited compute needs, building your own silicon pays off. But we’re talking about maybe 10 companies worldwide that can pull this off.

None of these replaces NVIDIA and its ability to sell every Blackwell system it can build today, and Rubin in the future. Demand is the only metric that matters, and it’s insatiable. No startup, independent software vendor, manufacturer, or services firm is pumping the brakes on AI adoption. While the work to build highly performant generative AI and autonomous agents is underway, we’re genuinely just getting started.

Anyone who mistakes the AI revenue opportunity for what OpenAI and Anthropic represent today fundamentally misunderstands the scale ahead. These are merely proof-of-concept showcases for what’s possible. The real explosion comes when enterprises unleash their data—and remember, 95% of the world’s data sits behind corporate firewalls.

Energy represents the most severe constraint of all. In the near term, GPU REIT and neocloud plays like IREN Limited, Nebius, and CoreWeave offer compelling opportunities, with companies positioned to benefit as demand outstrips supply. Yes, hyperscalers want vertical integration and full-stack ownership. But we’re years away from that strategy impacting the broader market. We also need nuclear and the promise of small modular reactors to augment energy supply, but this too will take time. What we know for sure is that current demand for AI will ensure every system built gets deployed.

This makes the energy infrastructure conversation critical. Nuclear power, small modular reactors, fusion, fission—all must become major priorities. We cannot build the compute capacity AI requires if we cannot power it. Deregulation offers a promising start, but progress must accelerate dramatically.

The Custom Chip Market

On the custom chip front, Broadcom stands as the primary beneficiary of the XPU movement, with Marvell next in line. Both also benefit from the Ethernet and optical networking infrastructure AI requires. Custom silicon will likely capture 25% to 30% of the AI accelerator market over the next five years, a market that will exceed $1 trillion annually within that timeframe. As I recently told CNBC, XPUs will soon grow faster than GPUs, albeit from a smaller base.

The recent earnings cycle delivered nothing but bullish AI signals. Every genuine builder in this revolution met or exceeded expectations. The fundamental thesis remains completely intact. Six months ago, estimates pegged data infrastructure investment at $1 trillion. That number has already grown substantially. The AI supercycle is moving faster than most observers can comprehend.

Consider NVIDIA’s recent disclosure: $500 billion in order visibility, excluding OpenAI commitments entirely. The supposed OpenAI risk doesn’t even factor into that staggering figure, making the business case even more compelling.

We’re witnessing the largest technology supercycle in history. AI remains in its earliest innings with tremendous runway ahead. Once you recognize that meeting current demand is impossible—let alone peak demand—the all-hands-on-deck nature of this moment becomes clear.

The debate shouldn’t be GPU or XPU. It should be: How do we build enough of everything, fast enough? Maybe this debate isn’t as interesting as Michael Burry shorting Palantir or NVIDIA failing because Google built a performant AI chip. But that’s the real question facing the industry, and the one the industry is busy solving.