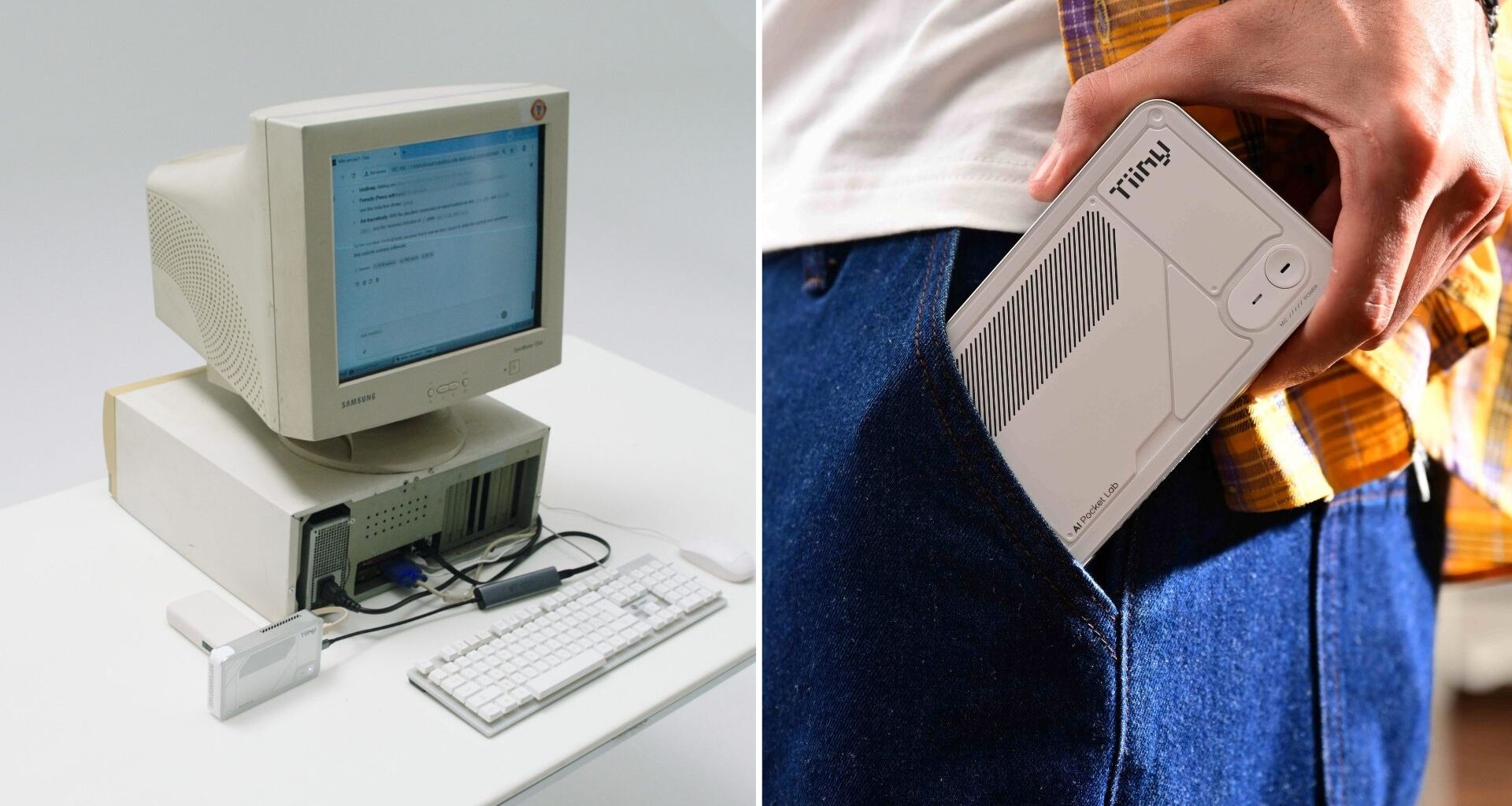

US deep-tech AI startup Tiiny AI has released a groundbreaking demonstration showing a 14-year-old PC running a 120-billion-parameter AI model smoothly without any internet connection.

Captured in a single uninterrupted take, the demo features OpenAI’s GPT-OSS 120B running on Pocket Lab, the company’s proprietary personal supercomputer.

Tiiny AI aims to make cloud-grade large language model intelligence accessible to everyone, without requiring an internet connection, cloud access, or a GPU upgrade. The company’s Pocket Lab PC recently gained recognition as “The Smallest MiniPC (100B LLM Locally)” following its launch on December 10.

Operating within a 65W power envelope, it supports popular open-source models such as Llama, Qwen, DeepSeek, Mistral, Phi, and GPT-OSS.

More on the experiment

See 120B LLM Running Locally on an Old PC in One Continuous Take

No cloud. No GPU upgrade. No expensive computer.

😎 We just ran OpenAI GPT-OSS 120B on a computer from 14 years ago — powered by Tiiny AI Pocket Lab.

See what personal AI actually looks like. pic.twitter.com/6uaAOMH0oP

— Tiiny AI (@TiinyAILab) December 25, 2025

For the demo, the Tiiny AI team used a 2011 PC with an Intel Core i3-530 processor, 2GB of DDR3 RAM, and a CRT monitor. With Pocket Lab connected, the old machine successfully ran GPT-OSS 120B, averaging about 20 tokens per second.

The team used the demo to run reasoning and analytical tasks. The chat opened with the prompt “Who are you?” The team then asked “Why does 1+1=2?”, and the model produced a detailed explanation without interruption, generating 1,582 tokens at 18.6 tokens per second.
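As a quick sanity check on those figures, the sketch below converts a token count and a decode rate into wall-clock time. It assumes a constant generation rate and ignores prompt-processing time, so it is a rough estimate rather than a measurement; the function name `generation_seconds` is hypothetical.

```python
# Back-of-the-envelope timing for the demo's reported figures.
# Assumes a constant decode rate and ignores prompt processing time.

def generation_seconds(tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to emit `tokens` tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return tokens / tokens_per_second


answer_time = generation_seconds(1582, 18.6)   # figures quoted in the article
print(f"'Why does 1+1=2?' answer: about {answer_time:.0f} s")  # about 85 s
```

At the reported rate, the 1,582-token answer would take roughly a minute and a half to stream out.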

“This demonstration proves something the AI industry long assumed was impossible,” said Samar Bhoj, GTM Director of Tiiny AI.

“We are showing that large-model intelligence no longer needs massive GPU clusters or cloud infrastructure. With Tiiny AI Pocket Lab, advanced AI can run privately, offline, and on everyday hardware, even a 14-year-old PC. The future of AI belongs to people, not data centers,” he added.

Because inference runs entirely on the attached Pocket Lab rather than in the cloud, the demonstration shows that even an old host PC can access performance previously believed to require advanced AI systems.

What makes the AI supercomputer tick?

At its core, Pocket Lab is powered by Tiiny AI’s proprietary technologies, TurboSparse and PowerInfer. Together, these systems let large language models run locally at a power level far below that of traditional GPU-based systems.

TurboSparse boosts efficiency by activating only the neurons each input requires, which the company says does not reduce model intelligence. PowerInfer, meanwhile, spreads AI workloads across the CPU and NPU to improve performance while drawing far less power.
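The two ideas in that paragraph can be illustrated with a toy example. This is a minimal, hypothetical sketch, not Tiiny AI's code: it assumes a ReLU-style feed-forward layer, where neurons whose activation is zero contribute nothing to the output, so skipping them gives the same result with less work. The `oracle_active` helper stands in for the cheap learned predictor a real system would use, and `place_neurons` shows a generic hot/cold split in which the most frequently activated neurons go to the faster processor (labelled "NPU" here) and the rest stay on the CPU. All function names and numbers are invented for illustration.

```python
# Minimal, hypothetical sketch; NOT Tiiny AI's TurboSparse/PowerInfer code.
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def dense_ffn(w_up: Matrix, w_down: Matrix, x: Vector) -> Vector:
    """Reference layer: y = W_down @ relu(W_up @ x), every neuron evaluated."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w_up]
    return [sum(row[n] * hidden[n] for n in range(len(hidden))) for row in w_down]


def oracle_active(w_up: Matrix, x: Vector) -> List[int]:
    """Indices of neurons that fire for input x.
    Illustration only: a real system would use a cheap predictor instead of
    computing every pre-activation up front."""
    return [n for n, row in enumerate(w_up)
            if sum(w * xi for w, xi in zip(row, x)) > 0.0]


def sparse_ffn(w_up: Matrix, w_down: Matrix, x: Vector, active: List[int]) -> Vector:
    """Evaluate only the neurons listed in `active`; skipped ones add nothing."""
    out = [0.0] * len(w_down)
    for n in active:
        h = relu(sum(w * xi for w, xi in zip(w_up[n], x)))
        for o, row in enumerate(w_down):
            out[o] += row[n] * h
    return out


def place_neurons(freq: List[float], fast_capacity: int) -> Tuple[List[int], List[int]]:
    """Hot/cold split: the `fast_capacity` most frequently activated neurons go
    to the faster processor ('NPU' here); the rest stay on the CPU."""
    order = sorted(range(len(freq)), key=lambda n: freq[n], reverse=True)
    return sorted(order[:fast_capacity]), sorted(order[fast_capacity:])


# Toy layer: 4 hidden neurons, 2 inputs, 2 outputs.
W_UP = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
W_DOWN = [[1.0, 1.0, 1.0, 1.0], [0.5, 2.0, -1.0, 4.0]]
x = [2.0, 3.0]

active = oracle_active(W_UP, x)           # [0, 2]: only half the neurons fire
print(active, dense_ffn(W_UP, W_DOWN, x), sparse_ffn(W_UP, W_DOWN, x, active))

npu, cpu = place_neurons([0.9, 0.1, 0.5, 0.05], fast_capacity=2)
print("NPU:", npu, "CPU:", cpu)           # NPU: [0, 2] CPU: [1, 3]
```

The sparse path matches the dense one exactly only when the predictor catches every neuron that would fire; a predictor that misses some trades a little accuracy for speed.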

In terms of specifications, Pocket Lab has an ARMv9.2 12-core CPU, 80GB of LPDDR5X memory, and a 1TB SSD, and weighs about 300 grams.
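To put the 80GB figure in context, the sketch below estimates how much memory the weights of a 120-billion-parameter model occupy at different precisions. It assumes a uniform bit width for every weight, 1 GB = 10^9 bytes, and no allowance for the KV cache, activations, or the operating system; the article does not say which precision Pocket Lab actually uses.

```python
# Rough weight-memory estimate: parameters x bits per weight / 8 bits per byte.
# Assumptions (not from the article): uniform bit width, 1 GB = 10**9 bytes,
# and no allowance for the KV cache, activations, or the operating system.

PARAMS = 120e9          # nominal parameter count from the article
DEVICE_MEMORY_GB = 80   # Pocket Lab's stated LPDDR5X capacity


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Memory needed to hold `params` weights at `bits_per_weight` bits each."""
    return params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4):
    gb = weight_gb(PARAMS, bits)
    verdict = "fits" if gb < DEVICE_MEMORY_GB else "does not fit"
    print(f"{bits:>2}-bit weights: {gb:6.1f} GB -> {verdict} in {DEVICE_MEMORY_GB} GB")
```

Under these assumptions the weights need 240 GB at 16 bits, 120 GB at 8 bits, and 60 GB at 4 bits, so keeping the whole model in 80GB of memory implies weights stored at roughly 4 bits or fewer.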

A glance at the use cases

Pocket Lab is designed to support a wide range of personal AI applications for creators, developers, researchers, students, and other professionals.

It enables multi-step reasoning, deep contextual understanding, agent-based workflows, content generation, and secure data handling without needing an internet connection.

User data, preferences, and documents are stored locally with what the company calls bank-grade encryption, providing persistent memory and significantly stronger privacy than traditional cloud-based AI systems.