“Many people won’t know if the announcer on the radio… or even the news they’re listening to, is actually human.”

The Australian Association of Voice Actors (AAVA) is continuing its campaign against the use of AI-generated content, now calling on the government to mandate that all such content be labelled.

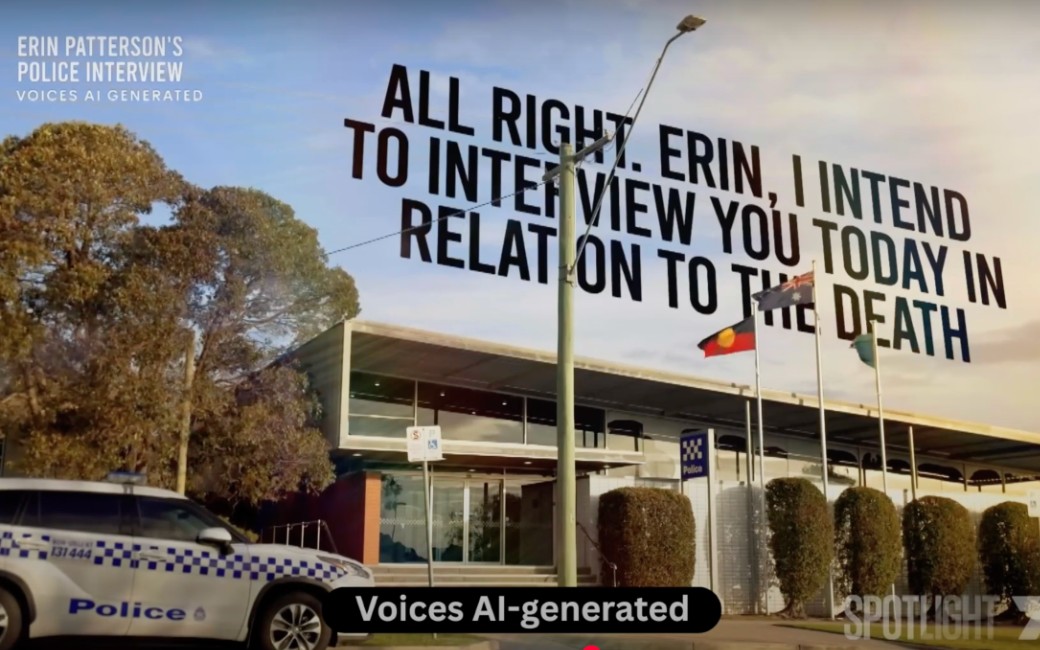

AAVA is asking the Australian Government to make regulatory changes that introduce mandatory, explicit labelling and watermarking of AI-generated audio, visual and written content into all broadcast and advertising codes of practice.

AAVA President Simon Kennedy said, “This technology has come so far, so quickly, that many people won’t know if the announcer on the radio, the voiceover convincing them to buy something, or even the news they’re listening to, is actually human. Transparency is crucial”.

“We all deserve to choose if we want to engage with content that isn’t human-created,” said Teresa Lim, Vice President of AAVA. “Use of an AI-generated radio announcer by the ARN network recently led listeners to believe that a woman of Asian descent was on-air. Neither was true; it wasn’t even a human. This was only disclosed when the truth was uncovered by a journalist”.

Alistair Lee, AAVA’s disability advocate and a blind voice actor, said, “Stating up-front that something is AI is essential for those of us who are more vulnerable. For people who can’t read a disclaimer on the bottom of a screen, it also needs to be audible. I have a keener sense of hearing than most, but even for me it’s getting harder to tell what’s real and what isn’t”.

AAVA has previously called for Identity Protections for all Australians, seeking to outlaw the creation of AI voice clones and deepfakes without informed and explicit consent.