A CEO, who is a pioneer in the world of artificial intelligence, and his company are about to go live with a new app allowing people to speak to lost loved ones using AI. But at the same time, he gets a devastating, fatal diagnosis and learns his wife of 20 years has been cheating on him.

As the CEO prepares for the end of his life, he uncovers some unsettling information that forces him to question everything, including his own diagnosis.

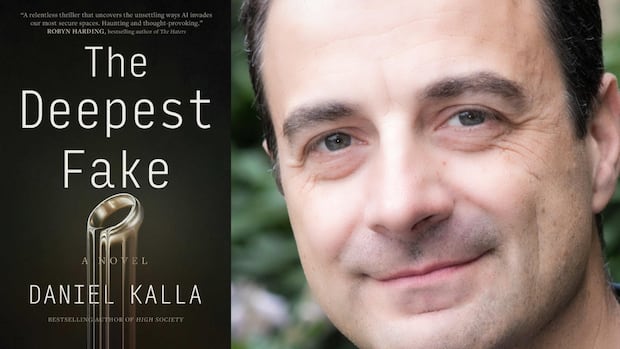

That’s the premise of Vancouver emergency room physician and thriller writer Daniel Kalla’s latest novel, The Deepest Fake.

The emergency room doctor often incorporates current events into his writing — things like the opioid crisis, anti-vaxxers and diet pills have all shown up in his books.

And while Kalla and his colleagues use AI in their practices for some things, he said this book is a little different than his others.

“Unlike other medical topics I’ve written about, I’m no AI expert, obviously, so this was never meant to be a detailed thriller that takes you under the hood of AI,” he said. “It’s mainly just to explore the possibilities … where there’s potential to do good with AI, but obviously how quickly it can go off the rails.”

Kalla spoke with CBC North by Northwest host Margaret Gallagher about his latest thriller.

This interview has been edited for length and clarity.

Now, you’ve written about many timely topics, and my colleagues at CBC Books have said that you have a knack for writing eerily prescient thrillers. Why did you want to write about AI right now?

Like many people, when a topic is kind of in the zeitgeist and interests me and grabs my attention, it’s something that I love to do — to create a story around that. And AI, you know, I knew nothing about it until the early 2020s and even when I started researching this book, I hadn’t even really heard of ChatGPT. But when I heard about what was coming and how quickly it was evolving, I started to do a little bit of just sort of self research and self-interest. It just fascinated and terrified me in equal measures.

The book deals with the creation of these convincing AI avatars that help you connect with your loved ones who have died. It’s a controversial subject in this book and in the world. What intrigues you about the idea and what scares you?

The idea is so potentially endearing and intoxicating for heartbroken, lonely people who want to reconnect with these souls they’ve lost in their lives. AI has the partial potential to do that. It can mimic the voice and look almost perfectly, and it can mimic some of the thoughts and ideas and temperament and personality. But it’s not the same thing, obviously. I understand the temptation and how powerful that could be. But the risks are huge because you’re talking to a machine, much as it might look and sound like the person you want to be talking to.

There’s a chilling story that’s making headlines right now about a teen who developed an intense relationship with ChatGPT, and his parents say that he was coached on methods of self-harm. Now, the parents are suing and demanding more guardrails. What protections would you like to see when it comes to AI?

One of the subplots of this story is when the protagonist is convinced he is dying, has looked into medical assistance with suicide, he actually starts discussing the issue with one of the avatars.

WATCH | Parents allege ChatGPT coached son on self-harm:

Sixteen-year-old Adam Raine died by suicide in April. Now his parents are suing OpenAI and CEO Sam Altman because its chatbot allegedly ‘provided detailed suicide instructions to a minor.’

This novel touches a lot on my deep feelings about guardrails. There are some issues that AI should never get involved in discussing, and to me it should be an absolute conversation ender. When it comes to harming yourself, harming others, using dangerous weapons, building dangerous weapons, all of that should be an automatic switch-off of the conversation.

You are an ER doctor. What role does AI play in the medical world?

A lot. Nowadays a lot of doctors are using AI to transcribe conversation, to get the highlights of histories and physicals, which is something people have been doing in medicine for millennia. But AI does it very well and very efficiently. I don’t use it personally that way, but it has huge potential. I, myself, use it as a reference to get dosage of medications, to get updates on rare illnesses that I haven’t seen in a long time. It’s incredibly efficient and quick. So as a companion, as a tool, it’s very helpful.

LISTEN | Should you be asking AI for medical advice?

The Dose: What should I know about asking ChatGPT for health advice?

As a growing number of Canadians use AI, physician groups like the Ontario Medical Association are warning against using it for medical help. Family physician Dr. Danielle Martin explains how AI chatbots can be useful, but risky when it comes to personal medical advice.

The danger is people are using AI to self-diagnose. And that’s just as dangerous as using Google to self-diagnose because if you can’t contextualize it, if you can’t apply human judgment, you can go astray really quickly.

Besides the AI element of this book, there is, as in many of your books, a really strong sense of medical intrigue that is solidly grounded in your knowledge as a doctor.

Thank you. I mean, it talks about a very devastating degenerative neurological disease, ALS, and the way people cope with the diagnosis. I see that in the emergency department. I’ve experienced it in my personal life, but much more I see it professionally. I really wanted to authentically portray the impact it could have on someone and their family going through that.

I truly believe this is one of the best mysteries I’ve ever written in terms of plot and story and the characters. But of course, the medical thing has always been my gimmick. I do that much less so in this book. I mean, it’s the first book I’ve really written where none of the main characters are doctors or nurses or in health care at all. But there certainly is that medical aspect.

I think my years in emergency medicine have given me a front-row seat in the theatre of humanity and I get access to stories and personal lives and intimacy with people that never happen anywhere outside the emergency department. It really enhances my writing and my storytelling.