September 11, 2025

By Karan Singh

In a recent discussion on the All-In Podcast, Elon Musk provided some additional technical specifications of Tesla’s next-generation FSD chip, AI5. While we’ve already heard a lot about AI5, these new numbers are staggering, pointing to a huge leap in performance that dwarfs typical generational improvements between chipsets in the industry.

AI5 Specs

Elon described AI5 as having a 40x improvement in some metrics over the already powerful HW4 chip, framing AI5 as an absolute monster piece of hardware, which will eventually serve as the foundation for advancements in FSD and robotics.

AI5 isn’t just an incremental update; it’s an architectural step-change. Tesla has learned a lot since developing HW3 and HW4 and now has a better understanding of what it needs to solve FSD. AI5 will address the hardware bottlenecks Tesla is currently facing with HW4.

During the podcast, Musk revealed that AI5 will have 8x more raw compute than HW4 and 9x more memory. Since HW4 includes 16GB of RAM, that points to Tesla putting up to 144GB of memory in AI5. AI is extremely memory-hungry, and we’ve heard for a while now that Tesla has been facing memory constraints on HW4, so it’s not surprising that it’s increasing the amount of RAM drastically.

Musk also revealed that the memory bandwidth in AI5 will be improved by 5x over HW4.
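The arithmetic behind these figures is simple enough to sketch. The snippet below takes the 16GB HW4 baseline and the multipliers Musk quoted at face value. The constant and function names are illustrative, and the result is an implied figure, not a confirmed AI5 spec.

```python
# Multipliers quoted for AI5 relative to HW4, taken at face value.
HW4_MEMORY_GB = 16
AI5_MULTIPLIERS = {"raw_compute": 8, "memory": 9, "memory_bandwidth": 5}


def implied_ai5_memory_gb(hw4_memory_gb: int = HW4_MEMORY_GB) -> int:
    """Scale the HW4 memory capacity by the quoted 9x memory multiplier."""
    return hw4_memory_gb * AI5_MULTIPLIERS["memory"]


def compute_per_bandwidth_gain() -> float:
    """How much faster raw compute grows than memory bandwidth (8x / 5x)."""
    return AI5_MULTIPLIERS["raw_compute"] / AI5_MULTIPLIERS["memory_bandwidth"]


if __name__ == "__main__":
    print(f"Implied AI5 memory: {implied_ai5_memory_gb()} GB")
    print(f"Compute grows {compute_per_bandwidth_gain():.1f}x faster than bandwidth")
```

If the multipliers hold, raw compute would grow about 1.6x faster than memory bandwidth, which may be one reason making individual operations cheaper on-chip matters as much as adding raw throughput.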

NEWS: Elon Musk says Tesla’s AI5 chip will be up to 40X better than AI4 in some metrics, and 8x better in raw compute. 9x more memory.

“I am confident that the AI4 chips currently in the cars will achieve self-driving safety at least 2-3x that of a human, maybe 10x.”


— Sawyer Merritt (@SawyerMerritt) September 10, 2025

Other Improvements

These specs represent incredible gains on their own, but the true genius of the chip lies in its specialized design. The 40x figure comes from how the chip handles specific, computationally intensive operations that are bottlenecks in the current system.

For example, a key mathematical function called “softmax” will run natively in fewer steps on AI5, and the chip will be able to dynamically handle mixed precision models much more efficiently. This type of specialized optimization is only possible when the teams designing the hardware and the software are working in complete lockstep.
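To see why softmax is worth accelerating, it helps to look at what a standard, numerically stable softmax does: several passes over the data (a max, an exponentiation, a sum, and a division). The Python sketch below is a generic textbook version, not Tesla’s implementation; how AI5 runs softmax in hardware hasn’t been described. It also includes a toy illustration of mixed precision, using the half-precision (`'e'`) format of Python’s `struct` module to round values to 16-bit floats while accumulating in a wider format. The helper names are hypothetical.

```python
import math
import struct


def softmax(logits):
    """Numerically stable softmax: four passes over the input."""
    m = max(logits)                           # pass 1: find the max
    exps = [math.exp(x - m) for x in logits]  # pass 2: exponentiate shifted values
    total = sum(exps)                         # pass 3: sum
    return [e / total for e in exps]          # pass 4: normalize


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16_accumulator(values):
    """Store AND accumulate in fp16: the running total is rounded every step."""
    acc = 0.0
    for v in values:
        acc = to_fp16(acc + to_fp16(v))
    return acc


def sum_mixed_precision(values):
    """Store inputs in fp16 but accumulate in a wide (64-bit) float."""
    acc = 0.0
    for v in values:
        acc += to_fp16(v)
    return acc


if __name__ == "__main__":
    print(softmax([2.0, 1.0, 0.1]))
    ones = [1.0] * 4096
    print(sum_fp16_accumulator(ones))  # 2048.0: fp16 cannot represent 2049
    print(sum_mixed_precision(ones))   # 4096.0
```

The second demo shows why low-precision storage is usually paired with wider accumulators: summing 4,096 ones entirely in fp16 stalls at 2,048, because 2,049 isn’t representable in half precision and rounds back down. The article doesn’t describe how AI5 mixes precisions dynamically.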

A New Order of Magnitude

AI5 is expected to enable the next generation of FSD software. While Tesla has previously stated that they’ll be able to achieve Unsupervised FSD on hardware 4, we’re wondering if it’ll actually be achieved on AI5 first and then backported to HW4.

Once AI5 is released, Tesla will no longer be limited by compute or memory, much as when it moved past HW3. After Tesla began training and building models exclusively for HW4, it was able to drastically increase model sizes and parameter counts, since it no longer needed to support HW3.

While Tesla still intends to achieve Unsupervised FSD on HW4 or offer a free upgrade, Elon has stated that AI5’s improvements will result in an order of magnitude increase in the quality and safety of FSD.

This powerful new chip is what will run future builds of FSD, including even larger models than the upcoming 10x parameter model intended for HW4.

Even with the current hardware, Elon remains confident that FSD will achieve a safety level that is two to three times better than that of a human, and with further optimization, potentially even ten times better.

Designing Purpose-built Chips

Elon emphasized that these massive, targeted gains are part of recent changes to Tesla’s approach to chip design and FSD. Now, Tesla is co-designing its hardware and software simultaneously, rather than buying off-the-shelf chips for training. This means that Tesla’s AI team can identify the exact mathematical operations and data pathways that are slowing down their neural networks, and work with the silicon team to design a chip that is purpose-built to eliminate those specific bottlenecks.
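The co-design loop described here starts with measurement: finding which operations dominate a network’s runtime. Below is a minimal, hypothetical Python sketch of that first step, timing each stage of a toy pipeline and ranking them slowest first. The stage names and workloads are illustrative stand-ins, not Tesla’s tooling or models.

```python
import math
import time


def profile_ops(ops, repeats=5):
    """Time each named operation and return (name, seconds) sorted slowest first."""
    timings = {}
    for name, fn in ops.items():
        start = time.perf_counter()
        for _ in range(repeats):
            fn()
        timings[name] = (time.perf_counter() - start) / repeats
    return sorted(timings.items(), key=lambda item: item[1], reverse=True)


# Hypothetical stand-ins for stages of a network (not real model code).
DATA = [i * 0.001 for i in range(50_000)]
TOY_OPS = {
    "elementwise_add": lambda: [x + 1.0 for x in DATA],
    "exp_heavy": lambda: [math.exp(x) for x in DATA],
    "reduction": lambda: sum(DATA),
}

if __name__ == "__main__":
    for name, seconds in profile_ops(TOY_OPS):
        print(f"{name:16s} {seconds * 1e3:.3f} ms")
```

In this kind of workflow, the ranking rather than the absolute timings is what would point a silicon team toward an operation worth building dedicated hardware for.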

This first-principles approach to chip design is a core competitive advantage that allows Tesla to achieve performance gains that are simply not possible for competitors relying on third-party hardware.

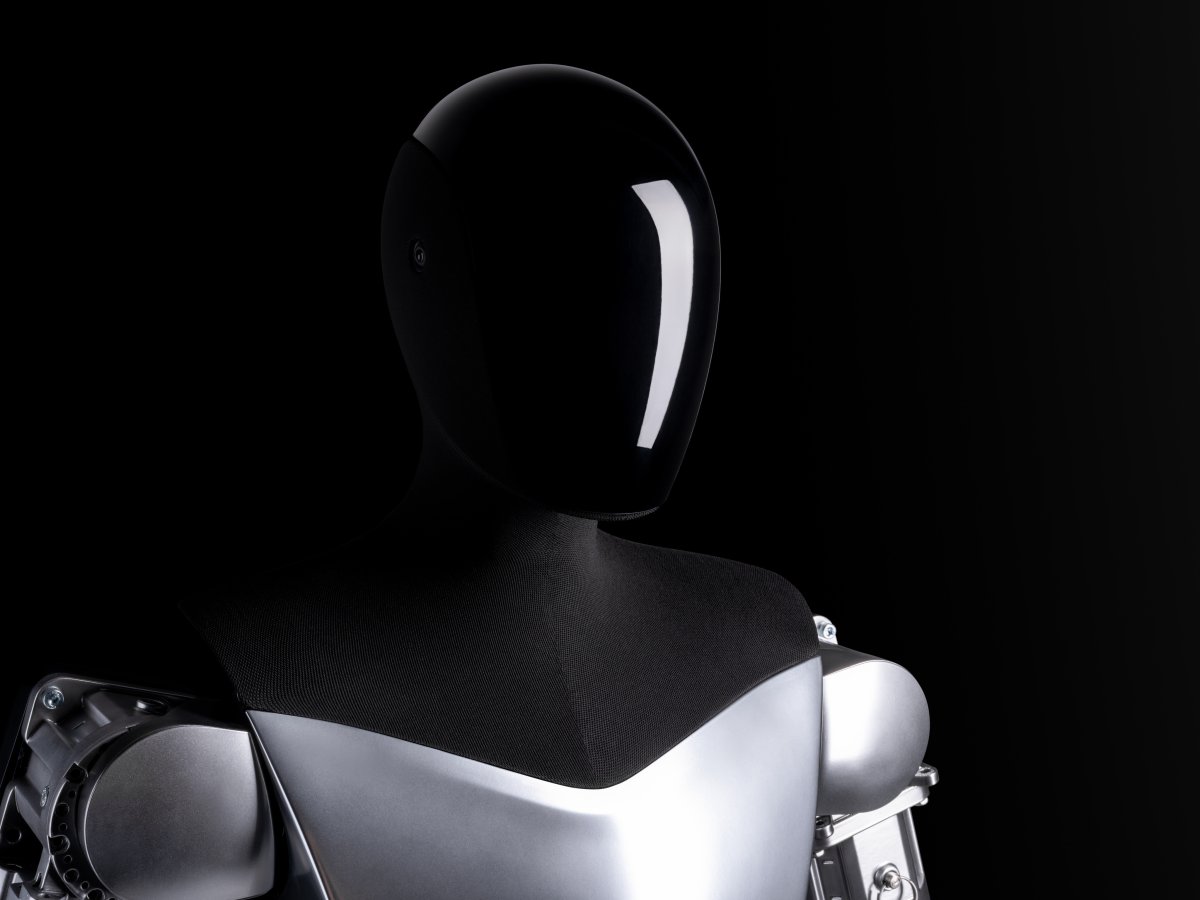

The Bigger Picture: Optimus and AGI

While the immediate impact of AI5 will be on FSD, its significance extends to Tesla’s most ambitious future project: the Optimus humanoid robot. Elon is dedicating the majority of his time and effort to Optimus, which he believes will be the greatest product in the history of humanity.

Building a robot with a capable AI mind and human-like dexterity requires an immense amount of efficient, in-robot computing. The AI chip in Optimus could account for $5,000 to $6,000, or even more, of its eventual $20,000 to $25,000 production cost, and a powerful, efficient chip like AI5 could be the key.
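Taken at face value, those estimates put the chip at roughly 20% to 30% of the robot’s production cost. A quick check, pairing the low chip estimate with the high total cost and vice versa (the function name is illustrative):

```python
def cost_share_range(chip_cost, total_cost):
    """Return the (min, max) fraction of total cost taken by the chip.

    Both arguments are (low, high) dollar estimates.
    """
    chip_low, chip_high = chip_cost
    total_low, total_high = total_cost
    return chip_low / total_high, chip_high / total_low


if __name__ == "__main__":
    low, high = cost_share_range((5_000, 6_000), (20_000, 25_000))
    print(f"Chip share of cost: {low:.0%} to {high:.0%}")  # 20% to 30%
```

Since the chip could cost “even more,” the upper figure could be higher still.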

The relentless push for more compute with less waste is all part of Elon’s broader roadmap for the future of artificial intelligence. In the same interview, he made his most aggressive prediction yet on the timeline for Artificial General Intelligence (AGI). He stated he believes AI could be smarter than any single human at anything as soon as next year, and by 2030, AI will likely be smarter than the sum of all humans.

The entire interview is available on the All-In Podcast.


September 11, 2025

By Karan Singh

In a welcome but undocumented change in software update 2025.32.2, Tesla is rolling out a visual upgrade that will be a pleasant surprise for many long-time owners.

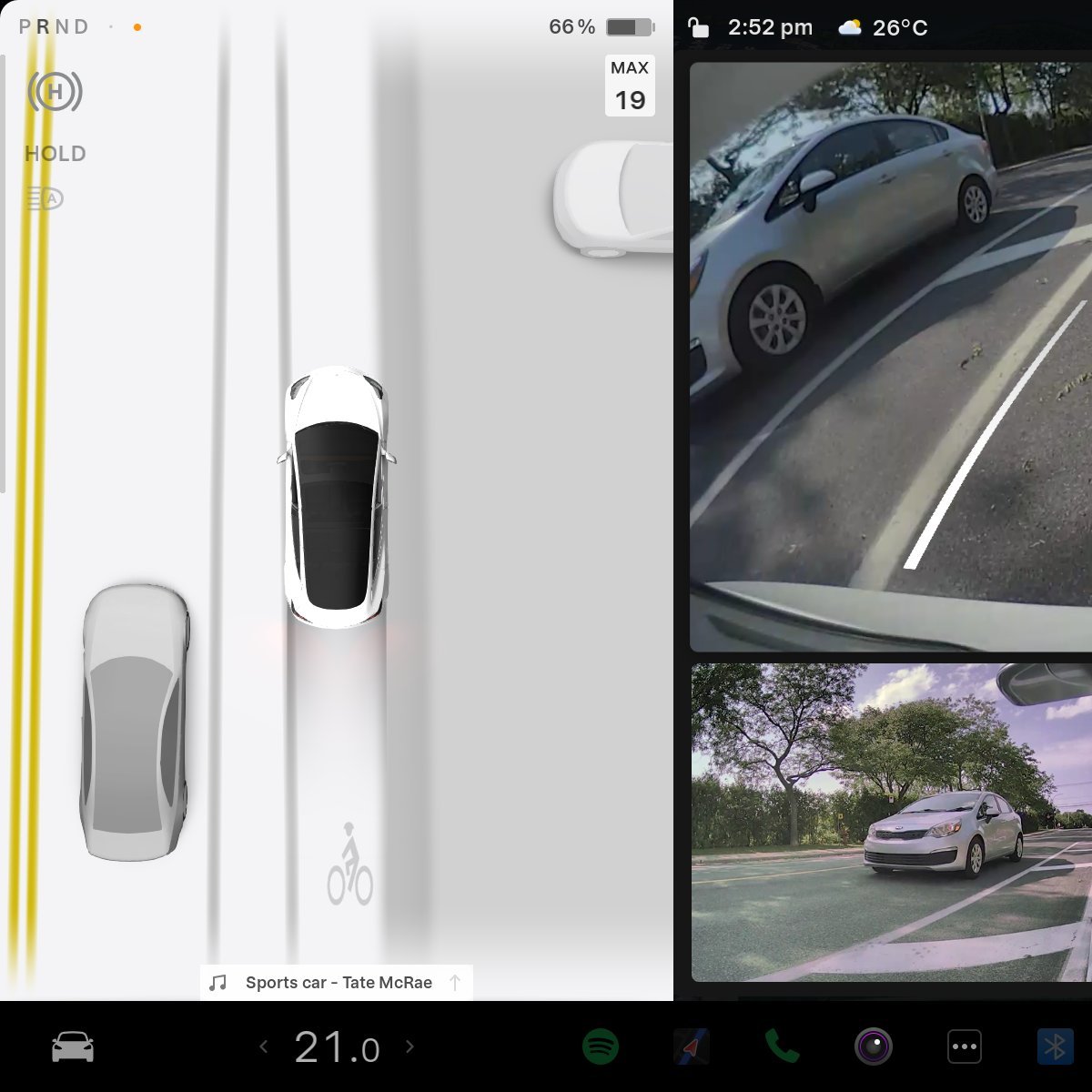

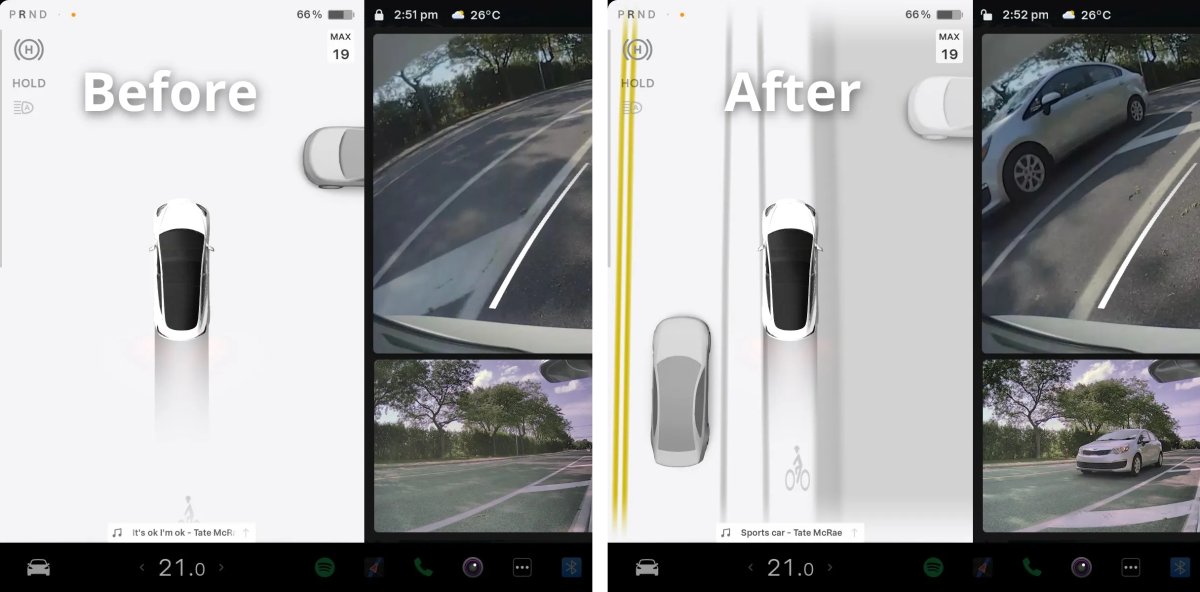

According to TheBeatYT_evil, older Tesla vehicles equipped with the Intel Atom (MCU 2) processor and ultrasonic sensors (USS) now retain the full, rich FSD visualization when shifting into reverse.

This subtle quality-of-life improvement makes the driving experience just a little bit better.

Improved Visualization

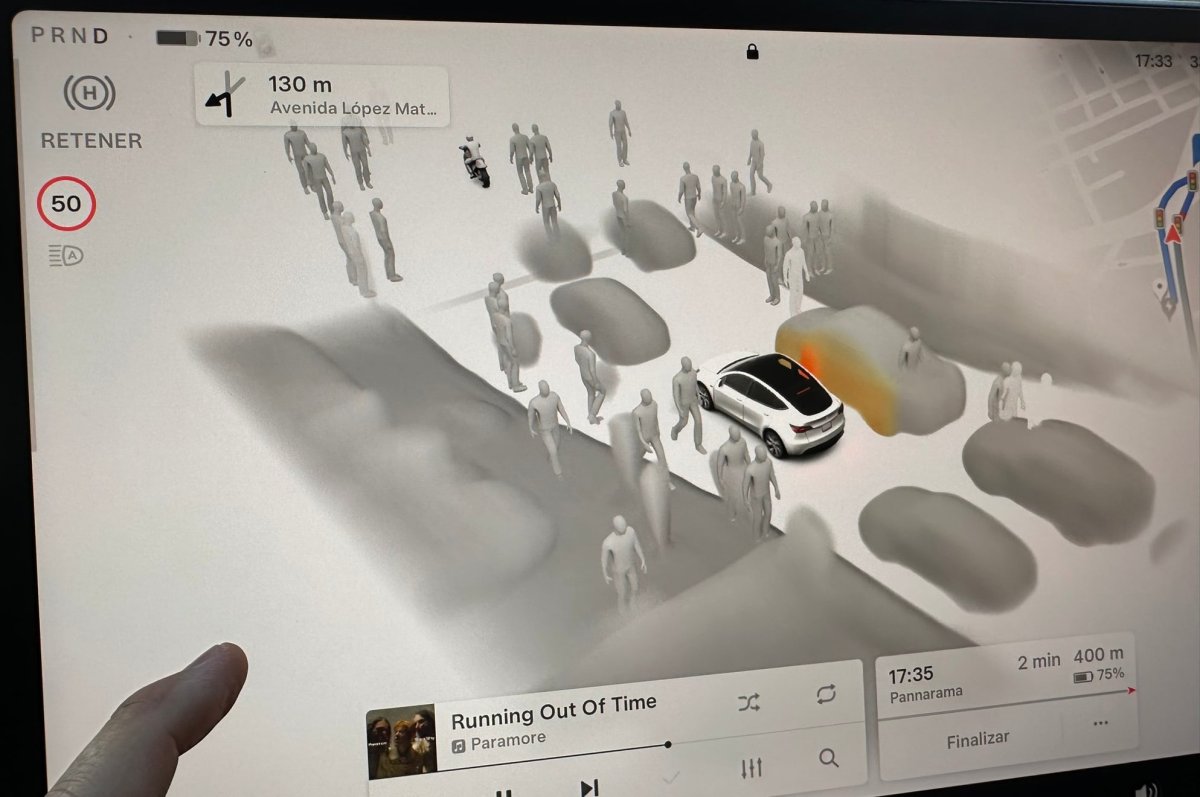

Newer AMD-based vehicles switch to Tesla’s High-Fidelity Park Assist visualization when driving under 6 mph in forward or reverse, but MCU 2 vehicles don’t support these custom visualizations, which are rendered on the fly.

When Intel vehicles drive forward, the screen displays the detailed, vector-space visualization generated by FSD, showing the car’s surroundings. However, when shifting to reverse, the system would revert to the older, more basic Autopilot visuals, with simple grey lines and less detailed object rendering.

This new update eliminates that transition. The FSD visualization, with its full rendering of the space around your vehicle, now persists regardless of which gear the car is in, so you’ll be able to see curbs, vehicles, and other FSD-rendered objects while backing up as well.

While reversing, the vehicle typically displays an overhead view, meaning that other objects aren’t usually displayed since the visualization focuses on the vehicle. This is likely why this issue has gone unnoticed for so long.
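The behavior described above can be summarized as a small decision function. This is a hypothetical Python model of what owners report, not Tesla’s code. The enum and parameter names are made up, it covers only the MCU 2 vehicles with ultrasonic sensors described here, and the assumption that AMD vehicles show the FSD visualization above 6 mph is ours.

```python
from enum import Enum


class Mcu(Enum):
    INTEL_MCU2 = "intel"
    AMD = "amd"


class Viz(Enum):
    HIGH_FIDELITY_PARK_ASSIST = "hi-fi park assist"
    FSD = "fsd"
    BASIC_AUTOPILOT = "basic autopilot"


def visualization(mcu: Mcu, gear: str, speed_mph: float, has_update: bool) -> Viz:
    """Hypothetical model of which visualization is shown.

    gear is "D" (forward) or "R" (reverse); has_update means 2025.32.2 or later.
    """
    if mcu is Mcu.AMD:
        # AMD vehicles use High-Fidelity Park Assist below 6 mph in either gear.
        # Assumption: they show the FSD visualization otherwise.
        return Viz.HIGH_FIDELITY_PARK_ASSIST if speed_mph < 6 else Viz.FSD
    # Intel (MCU 2) vehicles: driving forward already used the FSD visualization.
    if gear == "D":
        return Viz.FSD
    # Reverse: older builds fell back to basic Autopilot visuals;
    # the update keeps the FSD visualization instead.
    return Viz.FSD if has_update else Viz.BASIC_AUTOPILOT


if __name__ == "__main__":
    for update in (False, True):
        print(update, visualization(Mcu.INTEL_MCU2, "R", 3, has_update=update))
```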

Still Improving Intel

The most significant part of the update isn’t just the feature itself, but the fact that Tesla is still actively pushing visualization updates and changes to older vehicles in the fleet. Having full reverse visualizations on older vehicles with the Intel MCU is a great change for millions of owners, and a sign that Tesla is still committed to supporting older hardware.

Tesla is continuing to find ways to optimize its software to run on the less powerful hardware of previous-generation vehicles, and that’s a good sign for FSD in the future as well, regardless of the type of hardware you have.

While this feature has gone undocumented in the official release notes, we’ve added it to ours, along with several other undocumented changes. If you notice any other undocumented changes, please let us know.

September 10, 2025

By Karan Singh

Marc Benioff, the co-founder and CEO of Salesforce, posted a new video of Tesla’s Optimus, hailing it as the dawn of the physical “Agentforce” revolution and a productivity game changer.

The post from Marc Benioff is an example of how the business world, even the software-focused part of it, is beginning to see the immense potential of humanoid robots in corporate and commercial environments. Even where a robot wouldn’t add value to a company’s core business, it’s easy to see how a polished version of Optimus could be useful to almost any business.

Optimus Improvements

There are a few aspects that make this video interesting. Musk stated back in July that Tesla was integrating Grok into Optimus, and this is our first time seeing that in action. You can see how it really makes Optimus come to life — even if it’s a bit robotic (ba-dum-tss!).

While Optimus is a little clunky and requires more physical space to move in the video, it’s easy to see how quickly Tesla is iterating on Optimus.

A lot of what we’ve seen from Optimus lately, such as the popcorn vendor at the Tesla Diner, has been tele-operated: someone wearing a suit filled with sensors can see what Optimus is seeing, and the sensors on the tele-operator tell Optimus how to move.

While it’s still impressive, this video offers a rawer look at the current state of Optimus. Another striking feature in the video is Optimus’ hands: while they look a lot more human-like, they don’t appear to be functional. Musk recently stated that Tesla is designing version 3 of Optimus, but he clarified that this video shows Optimus 2.5, not the upcoming third generation.

Elon’s Tesla Optimus 🤖🔥 is here! Dawn of the physical Agentforce revolution, tackling human work for $200K–$500K. Productivity game-changer! Congrats @elonmusk, and thank you for always being so kind to me! 🚀 #Tesla #Optimus

— Marc Benioff (@Benioff) September 3, 2025

Valuing the Work, Not the Robot

One of the most intriguing parts of Benioff’s post was his valuation of Optimus’ contribution. He described Optimus as taking on $200K to $500K worth of work. This isn’t speculation about Optimus’ potential pricing, which Elon has previously suggested could be as low as $20,000 once it hits mass production, but rather an enterprise-level valuation of the labor that Optimus can perform annually. Obviously, it’s not there yet, but this is the first time we’re seeing Grok integrated into Optimus, which makes the robot immediately more useful.

From a business perspective, this reframes Optimus entirely. It isn’t just a machine; it’s a productivity asset whose value is measured by the cost of the skilled human work it can automate or augment. For a company like Salesforce, which is focused on business efficiency, this high return on investment is a crucial metric.
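A back-of-the-envelope version of that ROI argument, using only the figures in this article: $200K to $500K of annual labor value against a possible $20,000 price. The sketch ignores maintenance, energy, and financing costs, which the article doesn’t estimate, so treat the ratios as optimistic upper bounds. The function names are illustrative.

```python
def annual_value_to_price(annual_value: float, price: float) -> float:
    """Ratio of one year's labor value to the robot's purchase price."""
    return annual_value / price


def payback_days(annual_value: float, price: float) -> float:
    """Days of work needed for the labor value to equal the price.

    Ignores operating costs (an assumption; the article gives none).
    """
    return price * 365 / annual_value


if __name__ == "__main__":
    PRICE = 20_000  # Elon's suggested mass-production price
    for value in (200_000, 500_000):
        print(f"${value:,}/yr: {annual_value_to_price(value, PRICE):.0f}x the price, "
              f"pays back in ~{payback_days(value, PRICE):.1f} days")
```

Even at the low end of Benioff’s range, one year of work would be worth about ten times the suggested price, which is the kind of return a company like Salesforce looks for.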

Benioff’s valuation suggests he sees Optimus as a tool capable of performing high-value tasks that would otherwise require a significant investment. The future will have Optimus take over tasks that are managed by humans today, and humans will either perform work that Optimus is not capable of or they’ll manage and use Optimus as a tool to be even more productive.

The “Agentforce” Vision

Benioff’s phrase “physical Agentforce” is another interesting factor. Agentforce is Salesforce’s own platform for AI agents, and in the world of AI, an agent is a piece of software that can autonomously perform tasks. By calling Optimus a physical Agentforce, Benioff is suggesting a future where these software agents are given bodies to interact with and perform functions in the real, physical world.

This vision aligns perfectly with the content of the video shared. The footage doesn’t show Optimus on a factory line, but instead navigating a human-centric office environment. The robot is seen responding to queries, helping to locate items, and assisting with simple tasks. While Optimus’ movements are still stiff and cautious, the demonstration is clearly focused on its future potential as an interactive assistant in a corporate or commercial setting. One of the first applications for this type of agent could be in a retail or restaurant setting, where robots manage everyday tasks such as delivering food or taking orders, while humans perform more specialized tasks that require human-level attention and understanding.

This high-profile endorsement from one of the most respected leaders in the tech industry is a massive vote of confidence in Tesla’s approach to physical AI and robotics. It’s a sign that the business world is watching the Optimus program not just with simple curiosity but is now beginning to calculate its transformative potential.