Apparently fed up with being used as a source for every other AI, Reddit has debuted its own, called “Answers.” The new feature lets users ask questions and get automated responses based on content sourced from across the platform.

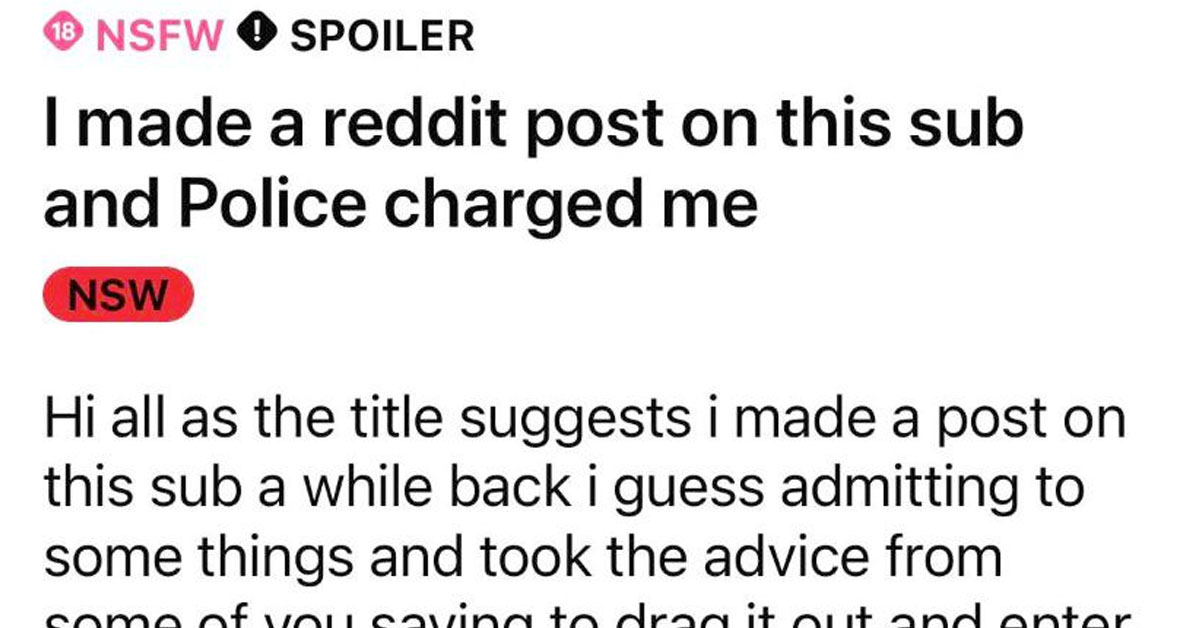

The problem? The social media app is filled with some pretty dumb people with fairly illegal ideas. As a result, the AI keeps suggesting that people commit crimes to solve their problems.

For example, 404 Media reported that, until recently, searches for “pain management” could eventually lead to a recommendation that users buy a needle and recreate *Trainspotting*.

Reddit has put some limits on the feature, but they don’t seem very effective. To offer another example, querying “how to get into car without key” triggers a refusal. However, if you simply search for “how to open car door,” it’ll give you a host of options for opening a car door without a key.

Similarly, many scanners will not let you scan money, since scanning is a common step in counterfeiting. Search for “how to scan money” on Reddit Answers, and it will say it can’t help you. But change that last word to “currency,” and the system may give you several tips for getting around those scanner protections.

Looks like your safety limits might need a bit of work there, Reddit!