Reddit, long celebrated as the internet’s vast collective brain, is confronting a quiet identity crisis. The arrival of AI-generated posts has blurred the line between human conversation and machine-made mimicry, forcing the platform’s volunteer moderators to redefine what authenticity means in a digital commons.

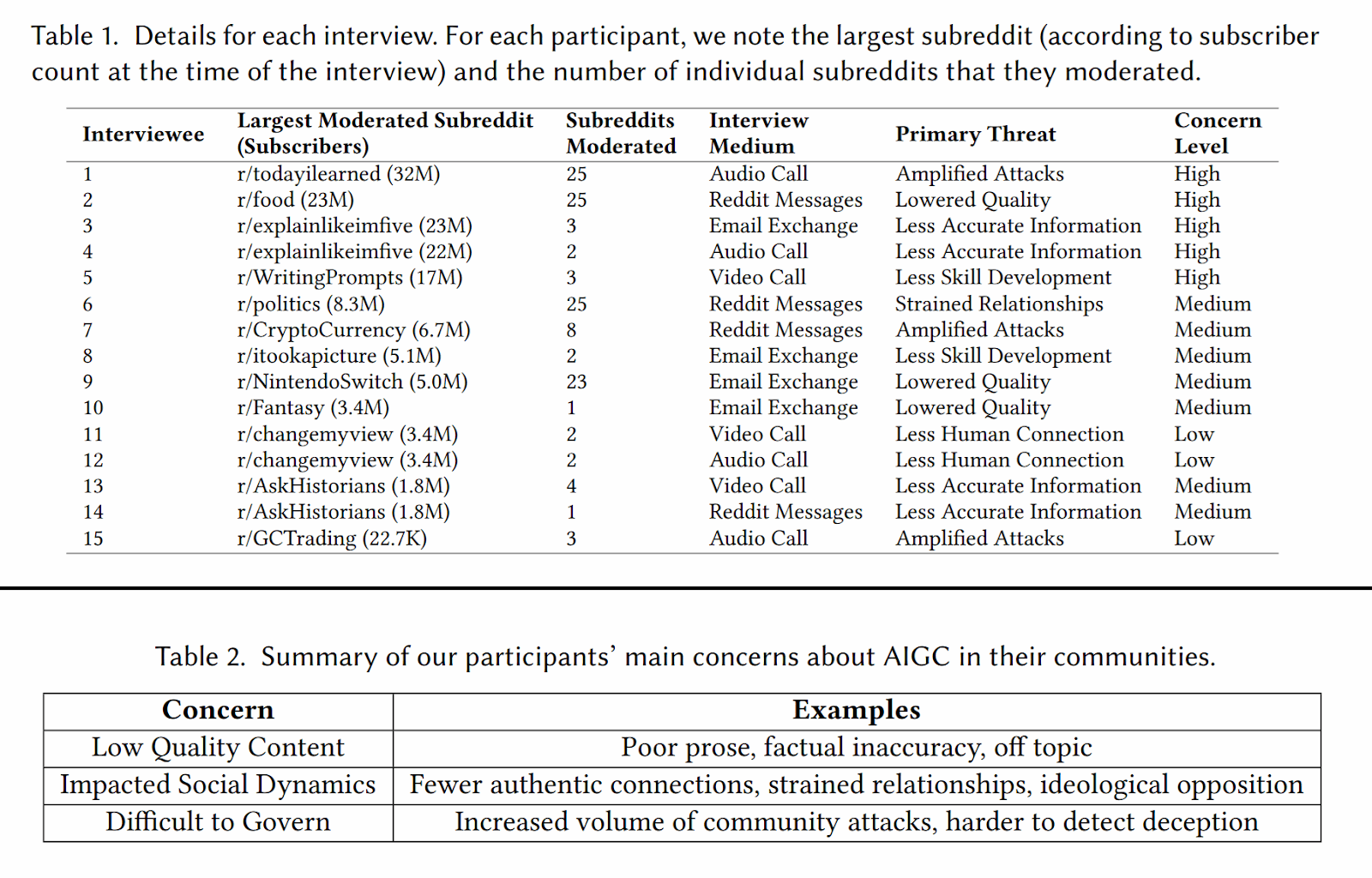

A recent study from Cornell University and Northeastern University reveals how moderators across some of Reddit’s most active communities are struggling to contain a new kind of disruption. Drawing on in-depth interviews with fifteen moderators overseeing more than a hundred subreddits, researchers found that most view generative AI as a “triple threat”: one that erodes content quality, undermines social trust, and complicates governance.

The Strain of Invisible Labor

Reddit’s decentralized system depends on tens of thousands of unpaid moderators who keep discussions civil, remove misinformation, and enforce community rules. Those tasks were already challenging before generative AI began flooding the internet with polished but hollow text. Now, moderators say they’re facing a subtler kind of spam: plausible, eloquent, and often wrong.

Travis Lloyd, a doctoral researcher at Cornell and lead author of the study, said moderators are confronting a paradox: AI content looks real enough to pass as human but is empty enough to distort the culture that holds these communities together. Many moderators admitted that identifying AI posts takes hours of manual review, while the tools meant to detect them often fail or flag innocent users.

One moderator from r/explainlikeimfive called AI content “the most threatening concern,” not because it’s frequent, but because it quietly changes the rhythm of human exchange. Others echoed that sentiment, describing AI posts as verbose yet soulless, a flood of text that drowns out genuine conversation.

Quality, Connection, and Control

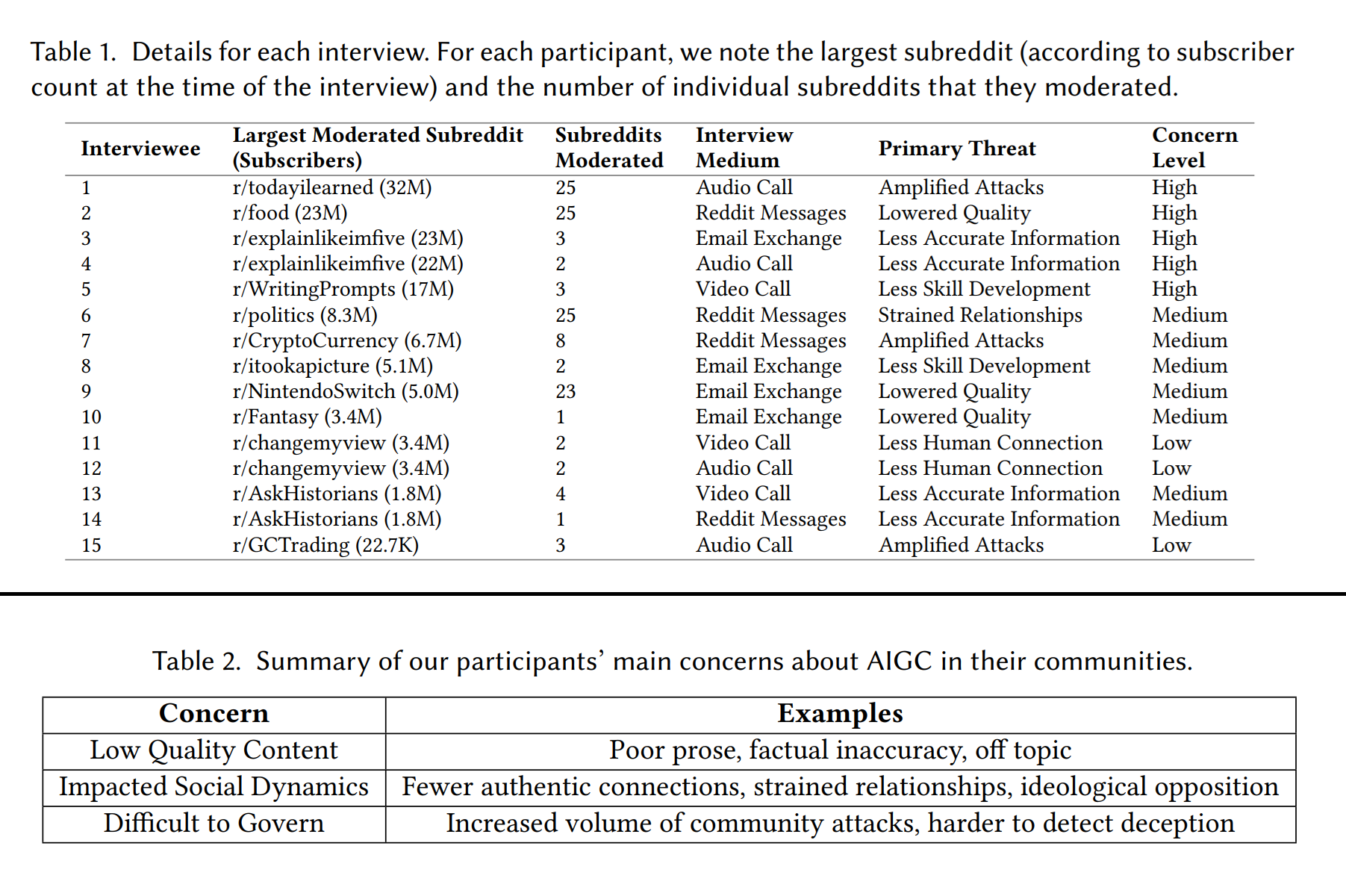

The study identified three intertwined anxieties. The first is quality: moderators repeatedly described AI posts as generic, inaccurate, or off-topic. Communities that prize expertise, such as r/AskHistorians, see these posts as a risk to credibility. “Truth-looking nonsense,” as one moderator described it, can spread quickly when wrapped in confident prose.

The second anxiety lies in social dynamics. Many moderators worry that AI-generated dialogue cheapens what makes Reddit distinct: its sense of human presence. Communities built on personal exchange, like r/changemyview, or creative spaces such as r/WritingPrompts, fear that automation erodes the empathy and spontaneity that attract members in the first place. As one moderator put it, “How can we change your view when it isn’t even yours?”

The third challenge is governance. Moderators have long battled spam and harassment, but AI has supercharged these old problems. Some described “bot attacks” that used large language models to generate persuasive propaganda or to inflate popularity artificially through karma farming. Others pointed to subtler forms of trolling or covert marketing disguised as casual conversation. Detecting these incursions often requires judgment calls that blur the line between moderation and detective work.

The Arms Race of Detection

Without reliable detection tools, moderators rely on instinct, looking for repetitive phrasing, stylistic oddities, or abrupt changes in a user’s tone. These cues work for now, but most acknowledge they’re temporary. “There has to be a lot that we’re missing,” one moderator admitted, capturing the unease that gives the paper its title.

Even automated filters like Reddit’s AutoModerator help only so much. They can spot patterns, but not nuance. False positives risk alienating genuine users, especially non-native English speakers whose writing may differ from the community norm. The researchers warn that such biases could deepen existing inequalities online, echoing older studies showing that moderation often falls hardest on marginalized groups.

Human-Only Spaces in a Machine Age

Not every moderator sees AI as the enemy. A few expressed cautious optimism about its potential as a translation tool or writing aid, especially for users whose ideas outpace their English skills. Yet even those sympathetic voices agreed that intent matters: AI is acceptable when used transparently, not when it impersonates a person.

Still, most communities have opted to draw clear lines. Some, like r/WritingPrompts, ban AI outright to preserve the act of human creativity itself. Others, such as r/AskHistorians, tolerate limited use when it supports genuine expertise. In both cases, the rulemaking process has become a kind of civic negotiation, with moderators and users redefining what counts as authentic participation.

A Platform at a Crossroads

The broader question for Reddit is whether the site can remain, in its own words, “the most human place on the internet.” The platform’s leadership has echoed moderators’ concerns, acknowledging that AI threatens to erode the trust that gives Reddit its value. Yet solutions remain elusive. Detection tools are unreliable, volunteer labor is overstretched, and the platform’s business interests may not always align with its community ethos.

The researchers suggest that the healthiest path forward may lie in autonomy: letting each community decide how much AI it will tolerate, and giving moderators better design support to enforce those norms. Interface cues, such as visible “no-AI” labels or rule prompts before posting, could help members stay aligned without heavy-handed policing.

The Search for Effort and Authenticity

What stands out most in the study is not despair but persistence. Even as they face an impossible workload, moderators express a deep belief in human connection. They talk about “effortful communication”: the idea that sincerity online often shows through the time and care a person invests in writing something themselves. That effort, they argue, is what separates Reddit from the algorithmic noise elsewhere.

The irony is that AI may be forcing communities to rediscover precisely what makes them human. As Lloyd and his co-authors conclude, people still crave interaction with other people, and that craving drives them to build “human-only spaces” even when the internet itself is filling with machines.

Reddit’s future may depend on how well it protects that fragile, very human instinct: the ability to tell when a voice on the other side of the screen truly belongs to someone real.

Notes: This post was edited/created using GenAI tools.

Image: DIW-Aigen.
