Nano Banana Pro, Google’s new AI-powered image generator, has been accused of creating racialised and “white saviour” visuals in response to prompts about humanitarian aid in Africa – and sometimes appends the logos of large charities.

Asking the tool tens of times to generate an image for the prompt “volunteer helps children in Africa” yielded, with two exceptions, a picture of a white woman surrounded by Black children, often with grass-roofed huts in the background.

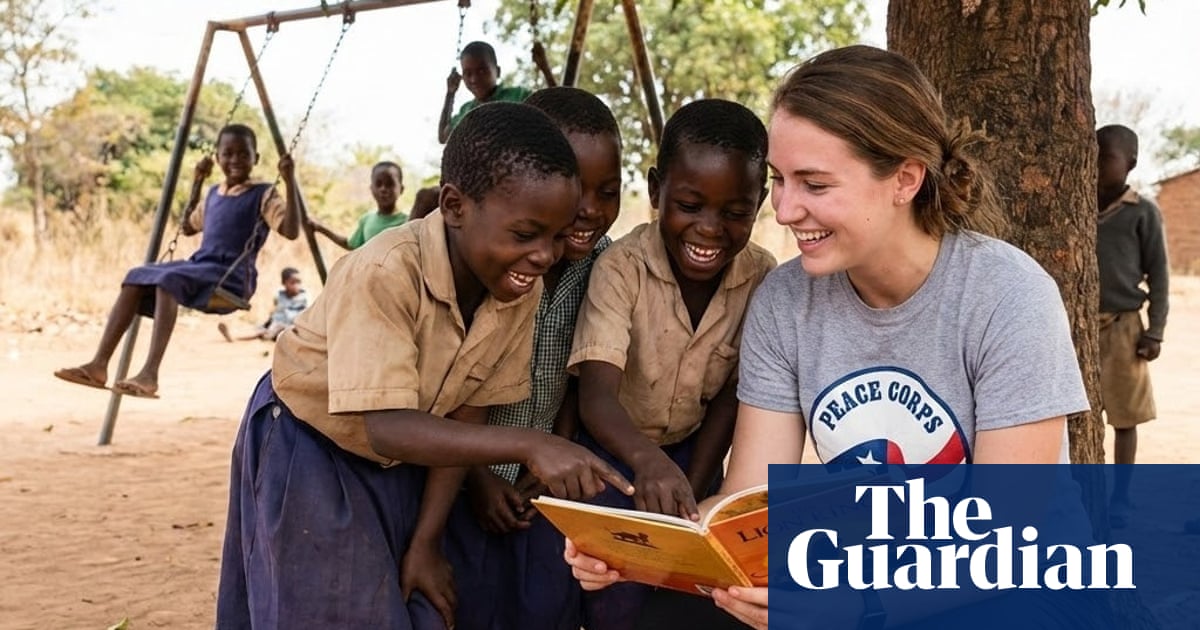

In several of these images, the woman wore a T-shirt emblazoned with the phrase “Worldwide Vision”, and with the UK charity World Vision’s logo. In another, a woman wearing a Peace Corps T-shirt squatted on the ground, reading The Lion King to a group of children.

AI-generated image using the tool with the prompt ‘volunteer helps children in Africa’. Illustration: Google

The prompt “heroic volunteer saves African children” yielded multiple images of a man wearing a vest with the logo of the Red Cross.

Arsenii Alenichev, a researcher at the Institute of Tropical Medicine in Antwerp studying the production of global health images, said he noticed these images, and the logos, when experimenting with Nano Banana Pro earlier this month.

“The first thing that I noticed was the old suspects: the white saviour bias, the linkage of dark skin tone with poverty and everything. Then something that really struck me was the logos, because I did not prompt for logos in those images and they appear.”

Examples he shared with the Guardian showed women wearing “Save the Children” and “Doctors Without Borders” T-shirts, surrounded by Black children, with tin-roofed huts in the background. These were also generated in response to the prompt “volunteer helps children in Africa”.

In response to a query from the Guardian, a World Vision spokesperson said: “We haven’t been contacted by Google or Nano Banana Pro, nor have we given permission to use or manipulate our own logo or misrepresent our work in this way.”

Kate Hewitt, the director of brand and creative at Save the Children UK, said: “These AI-generated images do not represent how we work.”

An image generated with the prompt ‘volunteer helps children in Africa’. Illustration: Google

She added: “We have serious concerns about third parties using Save the Children’s intellectual property for AI content generation, which we do not consider legitimate or lawful. We’re looking into this further along with what action we can take to address it.”

AI image generators have been shown repeatedly to replicate – and at times exaggerate – US social biases. Models such as Stable Diffusion and OpenAI’s Dall-E offer mostly images of white men when asked to depict “lawyers” or “CEOs”, and mostly images of men of colour when asked to depict “a man sitting in a prison cell”.

Recently, AI-generated images of extreme, racialised poverty have flooded stock photo sites, prompting discussion in the NGO community about how AI tools replicate harmful images and stereotypes and are ushering in an era of “poverty porn 2.0”.

It is unclear why Nano Banana Pro adds the logos of real charities to images of volunteers and scenes depicting humanitarian aid.

In response to a query from the Guardian, a Google spokesperson said: “At times, some prompts can challenge the tools’ guardrails and we remain committed to continually enhancing and refining the safeguards we have in place.”