This marked the first time a Chinese developer publicly validated the feasibility of using only Huawei chips to train AI models with MoE architecture, which has become widely adopted because of its ability to deliver high performance with fewer computational resources.

The TeleChat3 models – ranging from 105 billion to trillions of parameters – were trained on Huawei’s Ascend 910B chips and its open-source deep learning AI framework MindSpore, according to a technical paper published last month by China Telecom’s Institute of Artificial Intelligence (TeleAI).

The Huawei stack was able to meet the “severe demands” of training large-scale MoE models across many different sizes, TeleAI researchers said.

“These contributions collectively address critical bottlenecks in frontier-scale model training, establishing a mature full-stack solution tailored to domestic computational ecosystems,” they said.

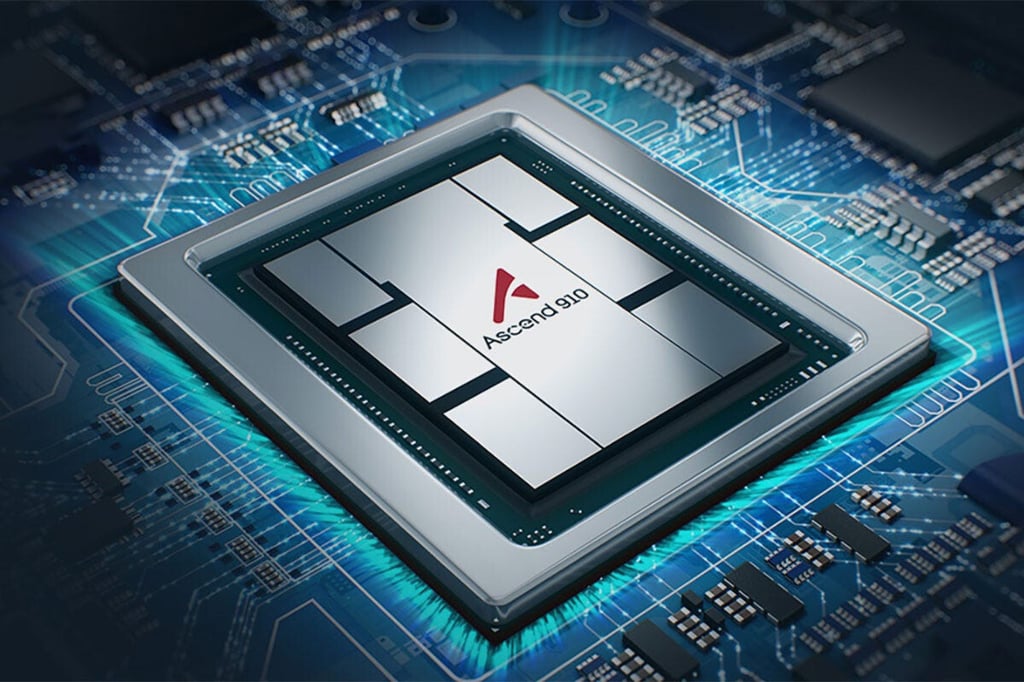

The initiative by China Telecom, one of the world’s largest fixed-line and mobile network operators, underscored growing efforts by a number of US-blacklisted Chinese firms – including Huawei and iFlytek – to train AI models using domestically designed semiconductors.

Huawei Technologies’ Ascend 910B chip. Photo: Handout