Quantum annealing offers a potential route to solving complex optimisation problems, but current hardware limitations pose significant challenges to scalability and reliability. Seon-Geun Jeong, Mai Dinh Cong, and colleagues from Pusan National University, alongside Dae-Il Noh from Korea Quantum Computing Co., Ltd and Quoc-Viet Pham from Trinity College Dublin, investigate how the process of embedding logical variables onto physical qubits introduces noise and impacts performance. Their work establishes a crucial connection between embedding structure and hardware noise, developing a mathematical framework that predicts how errors accumulate in longer qubit chains. The team’s analysis, validated by experimental results from a Zephyr topology-based processor, reveals a clear scaling rule for adjusting chain strength to maintain reliability, and provides a fundamental understanding of noise amplification in these systems, ultimately offering guidance for optimising future quantum annealing devices.

Quantum Annealing, Noise and Practical Limitations

This research provides a comprehensive exploration of quantum annealing, focusing on the challenges of noise, embedding, and optimization in real-world quantum annealers like those produced by D-Wave. The work blends theoretical background with experimental observations and practical considerations for effectively utilizing these machines. A central goal is to understand and mitigate factors limiting performance, and to develop techniques for improving their ability to solve complex optimization problems. This research targets scientists and practitioners in quantum computing and optimization. Quantum annealers are susceptible to various sources of noise, including thermal fluctuations and control errors, which can disrupt the annealing process and lead to suboptimal solutions.

Scientists investigated several approaches to mitigating noise, including techniques to reduce its impact without full quantum error correction, monitoring and controlling the annealer’s temperature to minimize thermal noise, and using idle qubits to characterize the noise environment. Careful benchmarking is crucial for assessing the performance of quantum annealers and comparing them to classical algorithms, with metrics such as the chain break fraction used to evaluate performance. The research also highlights the importance of formulating optimization problems in a way that is well-suited to quantum annealing, which usually means mapping the problem onto a Quadratic Unconstrained Binary Optimization (QUBO) or Ising model. The primary focus of this work is on D-Wave quantum annealers, including processors with the Chimera and Pegasus topologies and the newer Zephyr topology.
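To make the QUBO-to-Ising mapping concrete, here is a minimal sketch in plain Python. It uses the standard substitution x = (1 + s) / 2 between binary variables x and spins s. The function names and the small three-variable instance are illustrative assumptions, not taken from the paper.

```python
from itertools import product


def qubo_to_ising(Q):
    """Convert a QUBO dict {(i, j): weight} into Ising terms (h, J, offset).

    Uses x = (1 + s) / 2, so the QUBO energy of x equals the Ising
    energy of s for every assignment.
    """
    h, J, offset = {}, {}, 0.0
    for (i, j), w in Q.items():
        if i == j:
            # Linear term: w * x_i = w/2 + (w/2) * s_i
            h[i] = h.get(i, 0.0) + w / 2
            offset += w / 2
        else:
            # Quadratic term: w * x_i * x_j = w/4 * (1 + s_i + s_j + s_i * s_j)
            J[(i, j)] = J.get((i, j), 0.0) + w / 4
            h[i] = h.get(i, 0.0) + w / 4
            h[j] = h.get(j, 0.0) + w / 4
            offset += w / 4
    return h, J, offset


def qubo_energy(Q, x):
    """Energy of a binary assignment x (sequence of 0/1 values)."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def ising_energy(h, J, offset, s):
    """Energy of a spin assignment s (sequence of -1/+1 values)."""
    linear = sum(bias * s[i] for i, bias in h.items())
    quadratic = sum(c * s[i] * s[j] for (i, j), c in J.items())
    return linear + quadratic + offset


# Hypothetical 3-variable instance, chosen only for illustration.
Q = {(0, 0): -1.0, (1, 1): -1.0, (2, 2): 2.0, (0, 1): 2.0, (1, 2): -3.0}
h, J, offset = qubo_to_ising(Q)

# Check that both formulations agree on every assignment.
for x in product((0, 1), repeat=3):
    s = [2 * bit - 1 for bit in x]
    assert abs(qubo_energy(Q, x) - ising_energy(h, J, offset, s)) < 1e-12
```

The two forms describe the same problem, so the choice between them is mostly one of convenience. The quadratic terms in J are what the embedding must realise with physical couplers.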

Researchers utilized QUBO and Ising models to represent optimization problems, and compared results with classical optimization solvers like Gurobi and Python-MIP. The research demonstrates that embedding overhead is a significant bottleneck, noise is a critical limiting factor, benchmarking is crucial for accurate assessment, and problem formulation significantly impacts performance. While quantum annealing holds promise, it is not a universal solution and is best suited for specific types of problems.

Chain Length Scales with Logical Variables

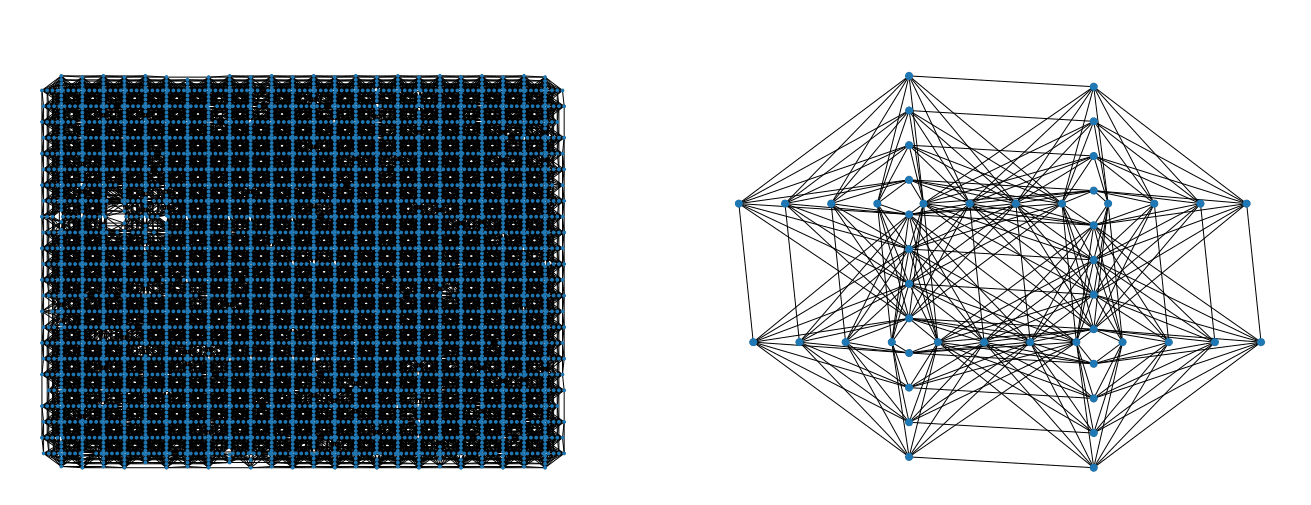

This study pioneers a detailed investigation into noise accumulation within quantum annealers, specifically focusing on the impact of embedding logical variables onto physical qubits. Researchers systematically explored how chain length, arising from this embedding process, influences the reliability of computations performed on the D-Wave Advantage2_system1.6 QPU. The team generated random QUBO instances of varying size and embedded them onto the quantum processing unit, meticulously measuring the average chain length as a function of the number of logical variables. This analysis revealed a near-perfectly linear relationship, demonstrating that chain length increases predictably with each additional logical variable.
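The measurement described above can be sketched roughly as follows. The sketch assumes fully connected QUBO instances with weights drawn uniformly from [-1, 1], and an embedding given as a dict that maps each logical variable to its list of physical qubits (the format returned by embedding tools such as minorminer). Both assumptions, and all function names, are illustrative. The article does not specify the authors' instance generator or embedding procedure.

```python
import random


def random_qubo(n, seed=None):
    """Random fully connected QUBO on n variables (assumed weight range)."""
    rng = random.Random(seed)
    Q = {}
    for i in range(n):
        Q[(i, i)] = rng.uniform(-1.0, 1.0)
        for j in range(i + 1, n):
            Q[(i, j)] = rng.uniform(-1.0, 1.0)
    return Q


def mean_chain_length(embedding):
    """Average number of physical qubits per logical variable."""
    return sum(len(chain) for chain in embedding.values()) / len(embedding)


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x
```

In use, one would embed instances of increasing size, record `mean_chain_length` for each, and pass the sizes and averages to `fit_line` to estimate the growth rate of chain length per added logical variable.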

Building on this structural understanding, scientists developed a mathematical framework connecting embedding-induced overhead with hardware noise, employing a Gaussian control error model. They derived equations for chain break probability and chain break fraction, establishing how noise scales with embedding size and how chain strength should be adjusted to maintain reliability. Experiments involved systematically varying annealing time and chain strength while meticulously measuring the observed mean chain break fraction. The team then compared these experimental observations with predictions generated by their noise model, achieving close agreement across a range of problem sizes.
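The article does not reproduce the paper's equations, so the following is only a toy model of the idea, not the authors' derivation. It assumes each qubit in a chain contributes an independent zero-mean Gaussian control error with standard deviation `sigma`, and that a chain breaks when the accumulated error exceeds the effective coupling `eta * chain_strength`. The names `sigma` and `eta` echo the fitted parameters mentioned in the text, but their exact role here is an assumption.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # zero mean, unit variance


def chain_break_probability(chain_length, chain_strength, sigma, eta=1.0):
    """Toy break probability for one chain.

    Independent errors add in variance, so the accumulated error has
    standard deviation sigma * sqrt(chain_length). The chain is taken
    to break when its magnitude exceeds eta * chain_strength.
    """
    threshold = eta * chain_strength / (sigma * sqrt(chain_length))
    return 2.0 * (1.0 - _STD_NORMAL.cdf(threshold))


def required_chain_strength(chain_length, target_p, sigma, eta=1.0):
    """Chain strength that keeps the toy break probability at target_p."""
    z = _STD_NORMAL.inv_cdf(1.0 - target_p / 2.0)
    return z * sigma * sqrt(chain_length) / eta
```

Under these assumptions the required chain strength grows with the square root of the chain length, which is one simple way a sublinear scaling rule can arise. Correlated errors would break the independence assumption and change the growth rate.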

To validate the model’s robustness, researchers conducted experiments at different annealing times, while maintaining a fixed chain strength. Results consistently demonstrated that the predicted chain break fraction curves closely followed observed data, indicating the embedding-aware noise model remains stable under different annealing schedules. Fitted parameters, including control error standard deviations and a coupling effectiveness factor, remained within a narrow range across these schedules, confirming that control errors dominate chain breaks while the overall chain break fraction level is modulated by annealing time. The team quantified model accuracy using the sum of squared errors, achieving values that further validate the model’s predictive power.

Embedding Structure Dictates Quantum Annealer Reliability

Scientists have developed a new noise model for quantum annealing that connects the growth of chain length in clique embeddings with chain break statistics, offering a pathway to improved device reliability and scalability. The work establishes a direct link between structural embedding properties and quantifiable chain break statistics, moving beyond purely empirical analyses of quantum annealers. Researchers derived equations for chain break probability and chain break fraction, demonstrating how embedding overhead directly impacts the reliability of quantum annealing solutions. Experiments conducted on the D-Wave Advantage2 processor, utilizing the Zephyr topology, confirm the accuracy of these theoretical predictions.

The team measured chain break fraction, a key metric connecting embedding overhead to practical solution reliability, and found close agreement between theoretical models and hardware observations. Specifically, the research establishes how variance accumulates within these embedded chains, directly impacting the probability of chain breakage. Furthermore, scientists established a theoretical scaling rule dictating how chain strength should increase with embedding size to maintain reliability, and hardware experiments broadly confirmed this rule. The measured growth was slightly steeper than predicted but remained sublinear, which points to correlated hardware noise as a contributing factor. This work provides quantitative guidance for embedding-aware parameter tuning strategies and forward-looking scalability analysis of future quantum annealers, offering a foundation for designing more robust and efficient quantum computing systems. The team’s analysis predicts that chain length will grow linearly with the problem size, and the derived scaling laws provide a means to mitigate the resulting noise amplification.
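For readers unfamiliar with the metric, the chain break fraction can be computed from raw hardware samples along these lines. A chain counts as broken when the physical qubits representing one logical variable do not all agree. The data layout (samples as dicts from qubit to spin, embedding as a dict from logical variable to qubit list) and the function names are assumptions made for illustration.

```python
def chain_break_fraction(sample, embedding):
    """Fraction of chains whose physical qubits disagree in one sample."""
    broken = sum(
        1
        for chain in embedding.values()
        if len({sample[q] for q in chain}) > 1
    )
    return broken / len(embedding)


def mean_chain_break_fraction(samples, embedding):
    """Average chain break fraction over a collection of samples."""
    total = sum(chain_break_fraction(s, embedding) for s in samples)
    return total / len(samples)


# Hypothetical embedding: two logical variables on five physical qubits.
embedding = {"a": [0, 1], "b": [2, 3, 4]}
samples = [
    {0: 1, 1: 1, 2: -1, 3: -1, 4: -1},  # both chains intact
    {0: 1, 1: -1, 2: 1, 3: 1, 4: 1},    # chain "a" is broken
]
print(mean_chain_break_fraction(samples, embedding))
```

Averaging over many samples at each problem size gives the mean chain break fraction that the study compares against its model.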

Future research will focus on expanding the model to incorporate other physical noise channels, such as thermal excitations and decoherence, and on exploring non-Gaussian error distributions, with the ultimate aim of creating more accurate hardware-aware quantum annealing simulators.