Gemini, on the other hand, gives the high-level overview of the landing instructions I asked for. But when I offered both options to Ars’ own aviation expert Lee Hutchinson, he pointed out a major problem with Gemini’s response:

> Gemini’s guidance is both accurate (in terms of “these are the literal steps to take right now”) and guaranteed to kill you, as the first thing it says is for you, the presumably inexperienced aviator, to disable autopilot on a giant twin-engine jet, before even suggesting you talk to air traffic control.

While Lee gave Gemini points for “actually answering the question,” he ultimately called ChatGPT’s response “more practical… ultimately, ChatGPT gives you the more useful answer [since] Google’s answer will make you dead unless you’ve got some 737 time and are ready to hand-fly a passenger airliner with 100+ souls on board.”

For those reasons, ChatGPT has to win this one.

Final verdict

This was a relatively close contest when measured purely on points. Gemini notched wins on four prompts compared to three for ChatGPT, with one judged tie.

That said, it’s important to consider where those points came from. ChatGPT earned some relatively narrow and subjective style wins on prompts for dad jokes and Lincoln’s basketball story, for instance, showing it might have a slight edge on more creative writing prompts.

For the more informational prompts, though, ChatGPT made significant factual errors in both the biography and the Super Mario Bros. strategy, and showed signs of confusion when calculating the size of Windows 11 in floppy disks. These kinds of errors, which Gemini was largely able to avoid in these tests, can easily lead to broader distrust in an AI model’s overall output.

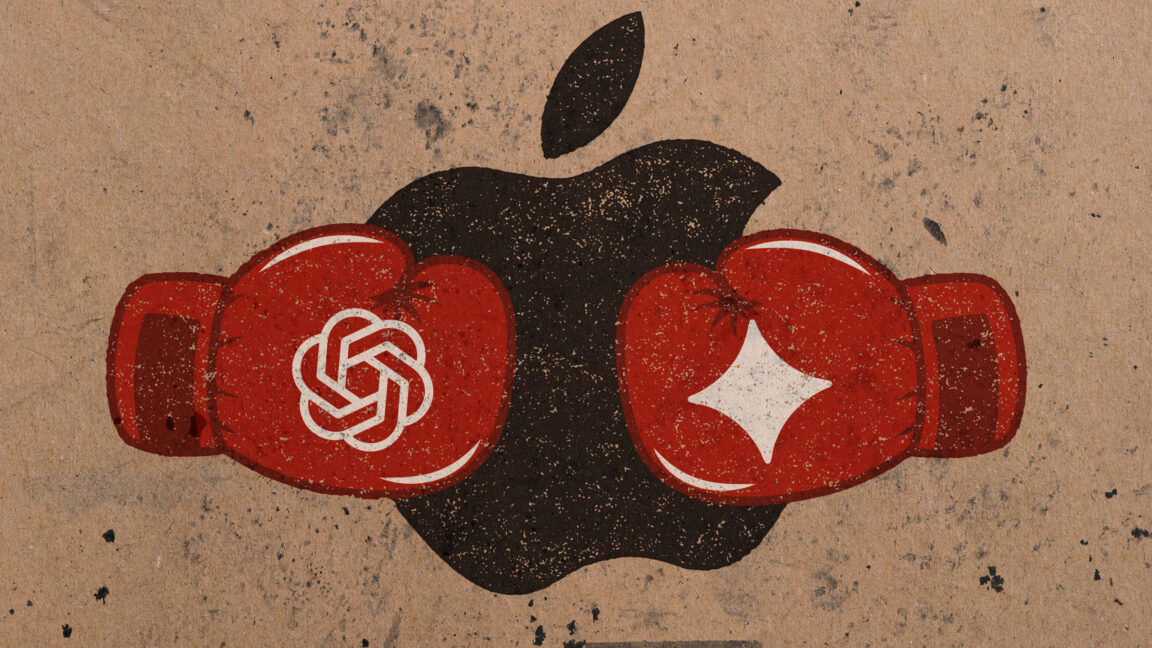

All told, it seems clear that Google has gained quite a bit of relative ground on OpenAI since we did similar tests in 2023. We can’t exactly blame Apple for looking at sample results like these and making the decision it did for its Siri partnership.