Machine Learning and Deep Learning are mentioned just as often.

And now, Generative AI seems to dominate nearly every technology conversation.

For many professionals outside the AI field, this vocabulary can be confusing. These terms are often used interchangeably, sometimes mixed together, and sometimes presented as competing technologies.

If you have ever asked yourself:

What exactly is AI?

How are Machine Learning and Deep Learning connected?

What makes Generative AI different?

This article is for you 😉

The objective here is clarity — not simplification through approximation, but accurate explanation in plain language. No technical background is required for the rest of the article.

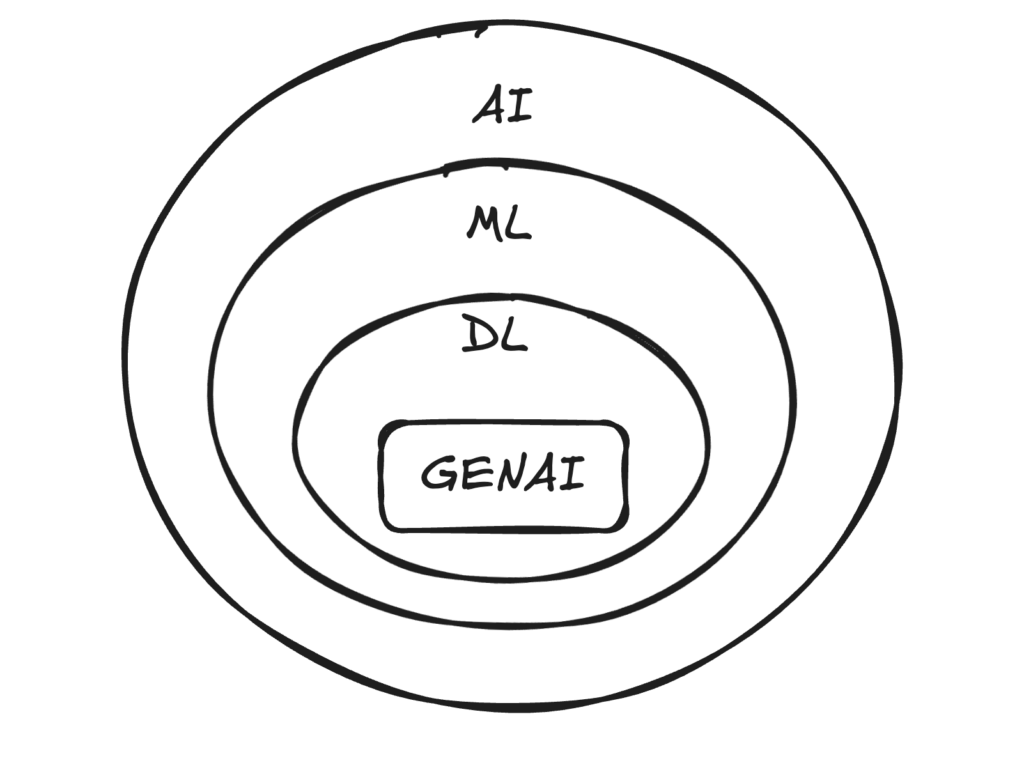

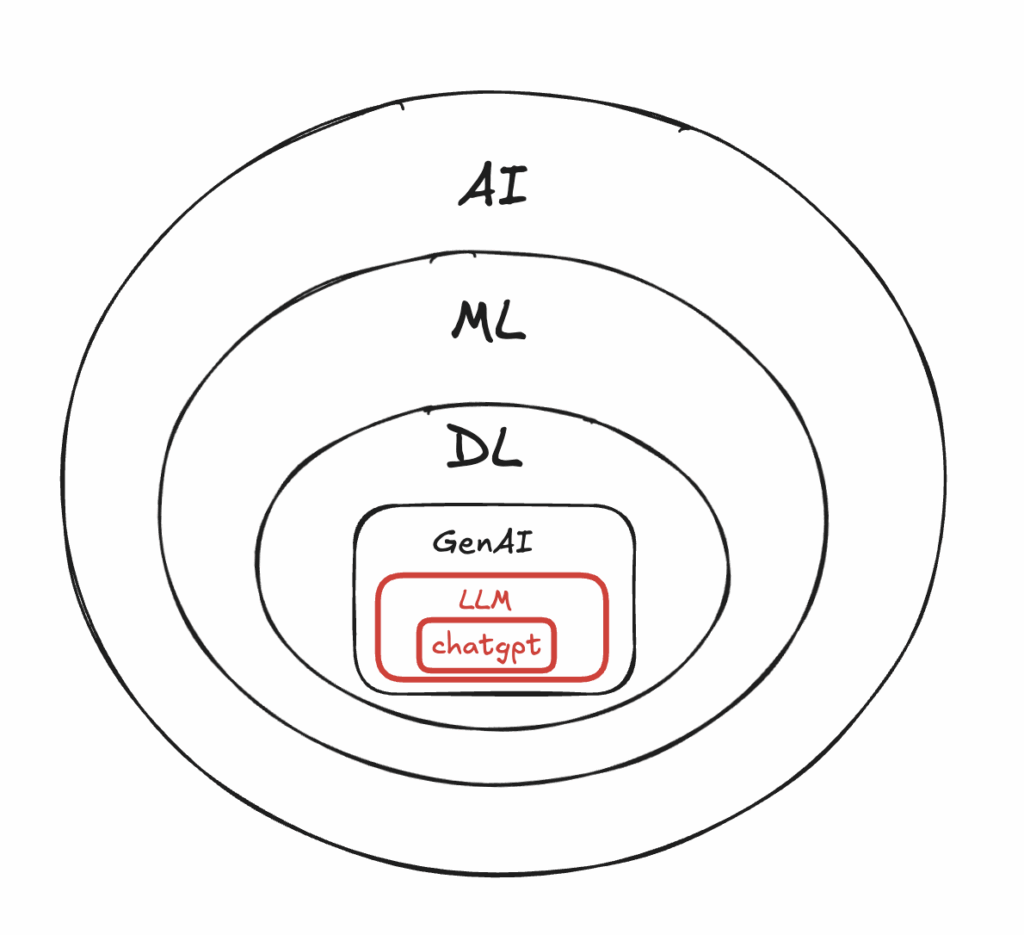

Figure 1 — AI Ecosystem (created by the author using Excalidraw)


The key idea: the Matryoshka doll

A useful way to understand the relationship between Artificial Intelligence, Machine Learning, Deep Learning, and Generative AI is to imagine Matryoshka dolls.

Each concept contains the next one inside it:

Nothing replaces what came before,

Each layer builds upon the previous one.

Let’s open them one by one.

Artificial Intelligence: the outer shell

Artificial Intelligence (AI) is the broadest definition.

At its core, AI refers to systems designed to perform tasks that typically require human intelligence. In practice, AI includes systems that can:

1. Make decisions. Example: A navigation system choosing the fastest route based on real-time traffic conditions.

2. Draw conclusions. Example: A system deciding whether to approve or reject a loan application based on multiple factors.

3. Recognize patterns. Example: Detecting fraudulent credit card transactions by identifying unusual spending behavior.

4. Predict outcomes. Example: Estimating future energy consumption or product demand.

Rule-based AI: intelligence written by humans

In the early decades of AI, particularly in the 1970s and 1980s, systems were primarily rule-based. In other words, humans explicitly wrote the logic. The computer did not learn; it executed predefined instructions.

-> A rule looked like this in human natural language: “If a house has at least three bedrooms and is located in a good neighborhood, then its price should be around €500,000.”

-> In programming terms, the same logic is written as code and might look like this: IF bedrooms ≥ 3 AND neighborhood = “good” THEN price ≈ 500000

This was considered Artificial Intelligence because human reasoning was encoded and executed entirely by a machine.
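The rule above can be written as a small Python function. This is a minimal sketch: the function name `rule_based_price` is hypothetical, and returning `None` for houses the rule does not cover is an assumption chosen to make one point visible, namely that the system has no answer outside the rules a human wrote.

```python
from typing import Optional


def rule_based_price(bedrooms: int, neighborhood: str) -> Optional[float]:
    """Hypothetical rule-based "AI": a human wrote both the condition and the answer."""
    if bedrooms >= 3 and neighborhood == "good":
        return 500_000.0  # price fixed by the rule's author, not learned from data
    return None  # assumption: no rule matches, so the system has no answer


print(rule_based_price(4, "good"))  # 500000.0
print(rule_based_price(2, "good"))  # None
```

Handling a two-bedroom flat, a new neighborhood or a shift in the market means a person has to edit this function by hand.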

Why rule-based AI was limited

Rule-based systems work well only in controlled environments.

Real-world conditions are not controlled. To stay with our real estate example:

markets evolve,

contexts change,

exceptions multiply.

The system cannot adapt unless a human rewrites the rules.

This limitation led to the next layer.

Machine Learning: letting data speak

Machine Learning (ML) is a subset of Artificial Intelligence.

The key shift is simple but profound:

Instead of telling the computer what the rules are, we let the system learn them directly from examples.

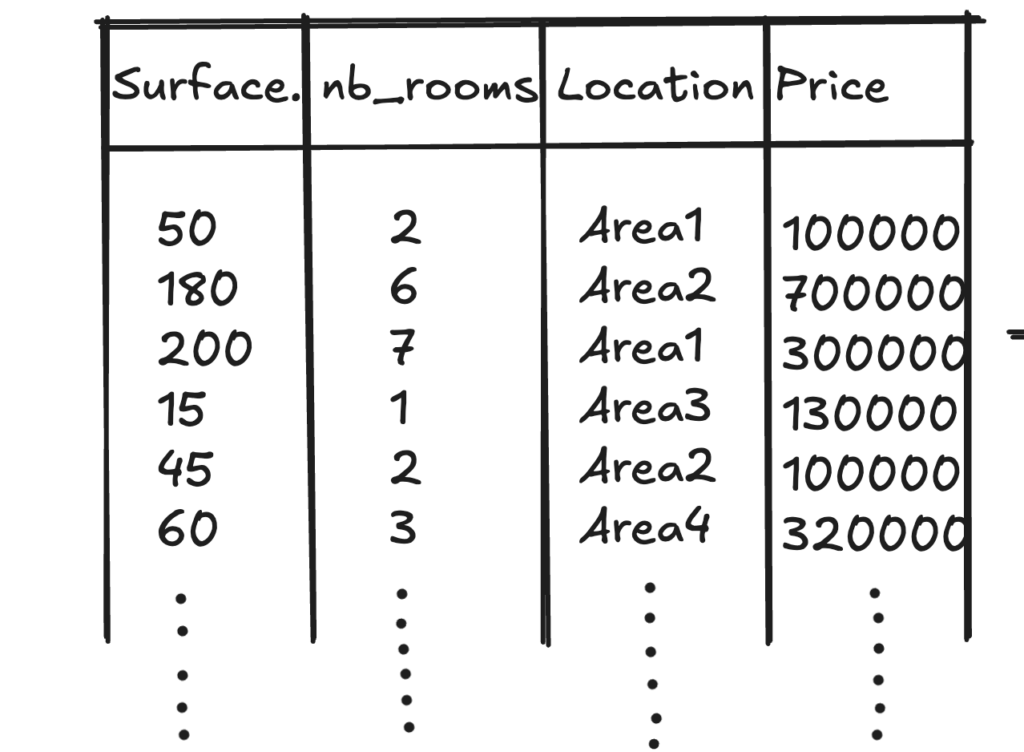

-> Let’s return to the house price example. Instead of writing rules, we collect data:

surface area,

number of rooms,

location,

historical sale prices.

Table 1 — Example of Data (created by the author using Excalidraw)


Thousands, sometimes millions, of past examples.

This data is provided as training data to a machine learning model.

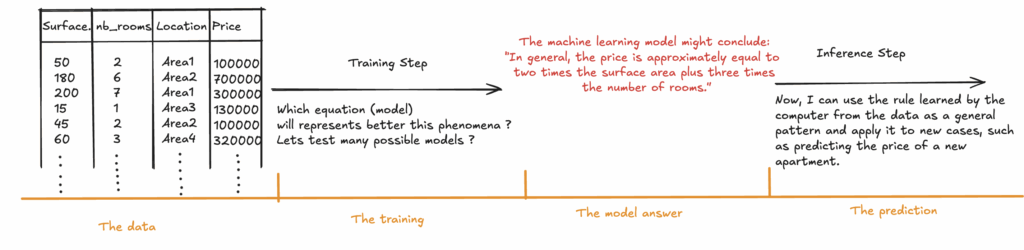

Figure 2 — Machine Learning Pipeline (created by the author using Excalidraw)


But what does “training a model” using data actually mean?

Training is not a black box. We begin by choosing a mathematical model — essentially an equation — that could describe the relationship between inputs (surface, location, etc.) and output (price).

We do not test just one equation. We test many candidate equations, and each candidate is called a model.

A very simplified example might look like:

price = 2 × surface + 3 × location

The model adjusts its parameters (here, the numbers 2 and 3) by comparing its predicted prices with the real prices across many examples.
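To make “adjusting parameters” concrete, here is a minimal sketch in Python. It assumes a handful of hypothetical houses whose prices were generated from the simplified formula above (arbitrary units, with location expressed as a score), and it adjusts the two parameters with plain gradient descent on the mean squared error. Gradient descent is one common way to perform this adjustment, used here as an illustration; it is not necessarily the method behind any particular tool.

```python
# Hypothetical training data: (surface, location score, real price), arbitrary units.
# Prices were generated as 2 * surface + 3 * location so we can check the result.
houses = [
    (1.0, 2.0, 8.0),
    (2.0, 1.0, 7.0),
    (3.0, 3.0, 15.0),
    (4.0, 2.0, 14.0),
    (5.0, 5.0, 25.0),
]


def train(data, learning_rate=0.02, epochs=2000):
    w_surface, w_location = 0.0, 0.0  # start from an uninformed model
    n = len(data)
    for _ in range(epochs):
        grad_s = grad_l = 0.0
        for surface, location, real_price in data:
            predicted = w_surface * surface + w_location * location
            error = predicted - real_price  # compare the prediction with reality
            # Gradient of the mean squared error with respect to each parameter
            grad_s += 2 * error * surface / n
            grad_l += 2 * error * location / n
        # Nudge each parameter in the direction that reduces the error
        w_surface -= learning_rate * grad_s
        w_location -= learning_rate * grad_l
    return w_surface, w_location


w_s, w_l = train(houses)
print(f"price ≈ {w_s:.2f} × surface + {w_l:.2f} × location")
```

Starting from zero, the loop repeatedly compares predictions with real prices and nudges both numbers; on this data it ends up very close to 2 and 3, the values that generated the prices.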

No human could manually analyze hundreds of thousands of houses at once. A machine can.

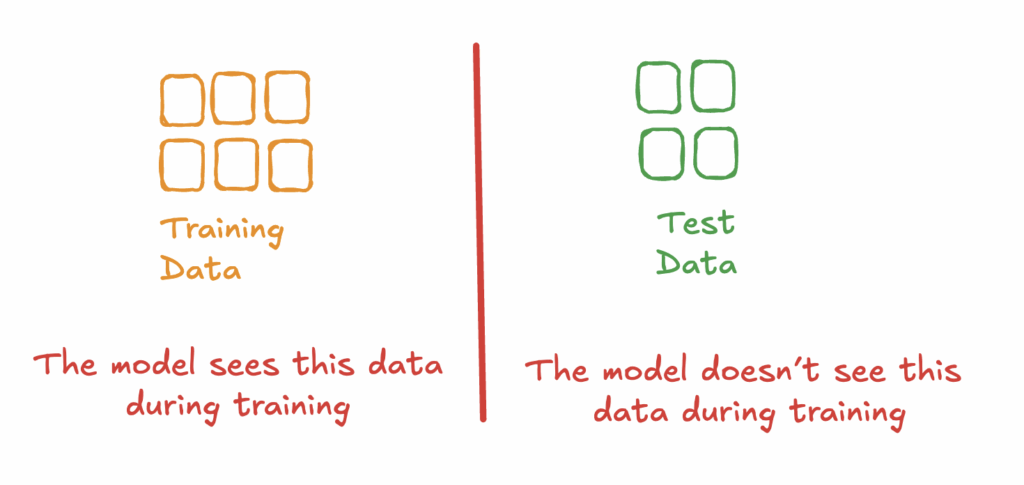

How do we know a model works?

Before adopting a model — that is, the equation that best represents the phenomenon we are studying — we evaluate it.

Part of the data is intentionally hidden. This is known as test data.

The model:

Never sees this data during training,

Must make predictions on it afterward.

Predictions are then compared to reality.

Figure 3 — Train/Test Data (created by the author using Excalidraw)


If performance is good on unseen data, the model is useful.

If not, it is discarded and another model is tried.

This evaluation step is essential.
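Holding out test data and scoring the model on it can be sketched as follows. Everything here is an assumption for illustration: a hypothetical dataset (prices of roughly 4 €k per m² plus random noise), an 80/20 split, a one-parameter model fitted by least squares, and mean absolute error as the score. Real projects choose each of these to suit the problem.

```python
import random

# Hypothetical dataset: (surface in m², price in €k), about 4 €k per m² plus noise.
rng = random.Random(42)
data = [(s, 4.0 * s + rng.uniform(-20, 20)) for s in range(30, 130, 5)]

rng.shuffle(data)
split = int(0.8 * len(data))
train_set, test_set = data[:split], data[split:]  # test set stays hidden during training

# "Training": least-squares slope for the one-parameter model price = w × surface
w = sum(s * p for s, p in train_set) / sum(s * s for s, _ in train_set)

# Evaluation: predict prices of unseen houses and compare them with reality
mae = sum(abs(w * s - p) for s, p in test_set) / len(test_set)
print(f"learned ≈ {w:.2f} €k/m², mean absolute error on test set ≈ {mae:.1f} €k")
```

The model never sees `test_set` while computing `w`, so the error printed at the end estimates how it would behave on houses it has not encountered.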

Machine learning excels at tasks humans struggle with:

Analyzing large volumes of data,

Detecting subtle patterns,

Generalizing from past examples.

Examples of applications:

Healthcare

-> disease risk prediction,

-> analysis of medical images.

Industry

-> predicting equipment failures,

-> optimizing production processes.

Consumer products

-> recommendation systems,

-> fraud detection.

The limits of traditional machine learning

Nevertheless, traditional Machine Learning has important limitations. It works very well with structured data:

tables,

numerical values,

clearly defined variables.

However, it struggles with types of data that humans handle naturally, such as images, text, and audio.

The reason for this limitation is fundamental: computers only understand numbers.

Computers do not understand images, sounds, or words the way humans do.

They only understand numbers.

When working with images, text, or audio, these data must first be transformed into numerical representations.

For example, an image is converted into a matrix of numbers, where each value corresponds to pixel information such as color intensity. Only after this conversion can a machine learning model process the data.

This transformation step is mandatory.
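The conversion in Figure 4 can be sketched in a few lines of Python. The sketch assumes a tiny hypothetical 2×3 color image stored as (red, green, blue) triples with values from 0 to 255, a common convention, and splits it into one matrix of numbers per color channel.

```python
# A hypothetical 2×3 color image: each pixel is an (R, G, B) triple in 0–255.
image = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255)],        # red, green, blue pixels
    [(255, 255, 255), (128, 128, 128), (0, 0, 0)],  # white, grey, black pixels
]


def to_channel_matrices(img):
    """Split the image into three matrices of numbers: red, green and blue."""
    return [[[pixel[c] for pixel in row] for row in img] for c in range(3)]


red, green, blue = to_channel_matrices(image)
for name, matrix in zip(("red", "green", "blue"), (red, green, blue)):
    print(name, matrix)
```

This prints `red [[255, 0, 0], [255, 128, 0]]`, `green [[0, 255, 0], [255, 128, 0]]` and `blue [[0, 0, 255], [255, 128, 0]]`: the picture has become three grids of numbers a model can process.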

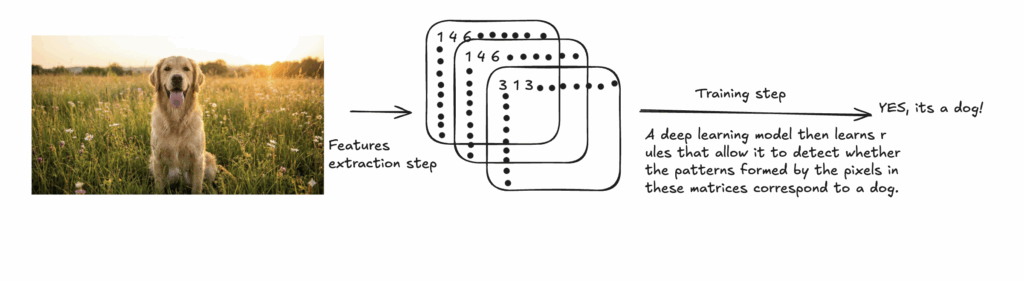

Figure 4 — Converting an Image to Matrices (created by the author using Excalidraw)


Feature extraction: the traditional approach

Before the rise of deep learning, this transformation relied heavily on manual feature engineering.

Engineers had to decide in advance which characteristics might be useful:

edges or shapes for images,

keywords or word frequencies for text,

spectral components for audio.

This process, known as feature extraction, was:

time-consuming,

fragile,

strongly dependent on human intuition.

Small changes in the data often required redesigning the features from scratch.
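A hand-crafted text feature extractor might look like the following minimal sketch. The vocabulary is a hypothetical list an engineer would pick in advance, and the features are simple word counts, one of the approaches listed above.

```python
from collections import Counter

# Hypothetical vocabulary chosen by hand: this is the "manual" part of the process.
VOCABULARY = ["spacious", "bright", "noisy", "garden"]


def extract_features(text: str) -> list[int]:
    """Turn a listing description into a fixed-length vector of word counts."""
    words = Counter(word.strip(".,!?").lower() for word in text.split())
    return [words[term] for term in VOCABULARY]


print(extract_features("Bright, spacious flat. Very bright living room."))  # [1, 2, 0, 0]
```

A description that says “sunny” instead of “bright” scores zero on that feature: the model only sees what the engineer thought to count, which is exactly the fragility described above.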

Why deep learning was needed

The limitations of manual feature extraction in complex settings were a key motivation for the development of Deep Learning. (I’m not covering the more technical motivations in this article; my goal is to give you a clear understanding of the big picture.)

Deep Learning does not eliminate the need for numerical data.

Instead, it changes how features are obtained.

Rather than relying on hand-crafted features designed by humans, deep learning models learn useful representations directly from raw data.

This marks a structural shift.

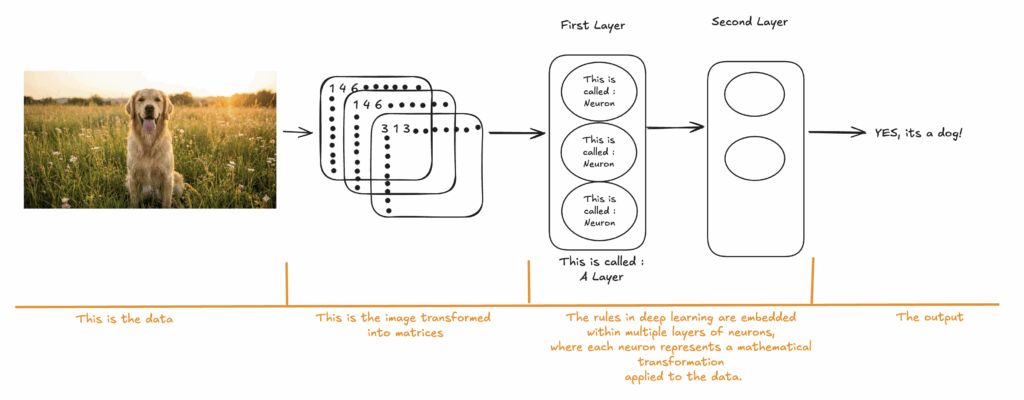

Deep Learning: the structural shift

Deep Learning is still Machine Learning. The learning process is the same:

-> data,

-> training,

-> evaluation.

What changes is what we call the architecture of the model.

Deep learning relies on neural networks with many layers.

Layers as progressive representations

Figure 5 — Deep Learning Pipeline (created by the author using Excalidraw)


Each layer in a deep learning model applies a mathematical transformation to its input and passes the result to the next layer.

These layers can be understood as progressive representations of the data.

In the case of image recognition:

Early layers detect simple patterns such as edges and contrasts,

intermediate layers combine these patterns into shapes and textures,

later layers capture higher-level concepts such as faces, objects, or animals.

The model does not “see” images the way humans do.

It learns a hierarchy of numerical representations that make accurate predictions possible.

Instead of being told explicitly which features to use, the model learns them directly from the data.

This ability to automatically learn representations is what makes deep learning effective for complex, unstructured data (see the representation above).
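The idea of layers passing transformed numbers to one another can be sketched in plain Python. This is a toy that assumes fully connected layers with a ReLU activation (a common choice) and hand-picked hypothetical weights; real image models usually rely on convolutional layers with millions of parameters, and those weights are learned during training rather than written by hand.

```python
def dense_layer(inputs, weights, biases):
    """One layer: a weighted sum of the inputs plus a bias, then ReLU."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU keeps positives, zeroes negatives
    return outputs


# Hypothetical hand-picked weights; in a real network these are learned.
layers = [
    ([[0.5, -1.0, 0.25], [1.0, 1.0, 0.0]], [0.0, -0.5]),  # 3 inputs -> 2 values
    ([[2.0, -1.0]], [0.1]),                              # 2 values -> 1 output
]

x = [1.0, 0.5, 2.0]  # e.g. three pixel intensities
for weights, biases in layers:
    x = dense_layer(x, weights, biases)  # each layer's output feeds the next layer
    print(x)
```

The loop prints `[0.5, 1.0]` and then `[0.1]`: each layer re-describes the previous layer's numbers, which is the “progressive representation” idea in miniature.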

And once this level of understanding is reached, an important shift becomes possible.

Up to this point, deep learning models have mainly been used to analyze existing data.

They are trained to:

recognize what is present in an image,

understand the structure of a text,

classify or predict outcomes based on learned patterns.

In short, they help answer the question: What is this?

But learning rich representations of data naturally raises a new question:

If a model has learned how data is structured, could it also produce new data that follows the same structure?

This question is the foundation of Generative AI.

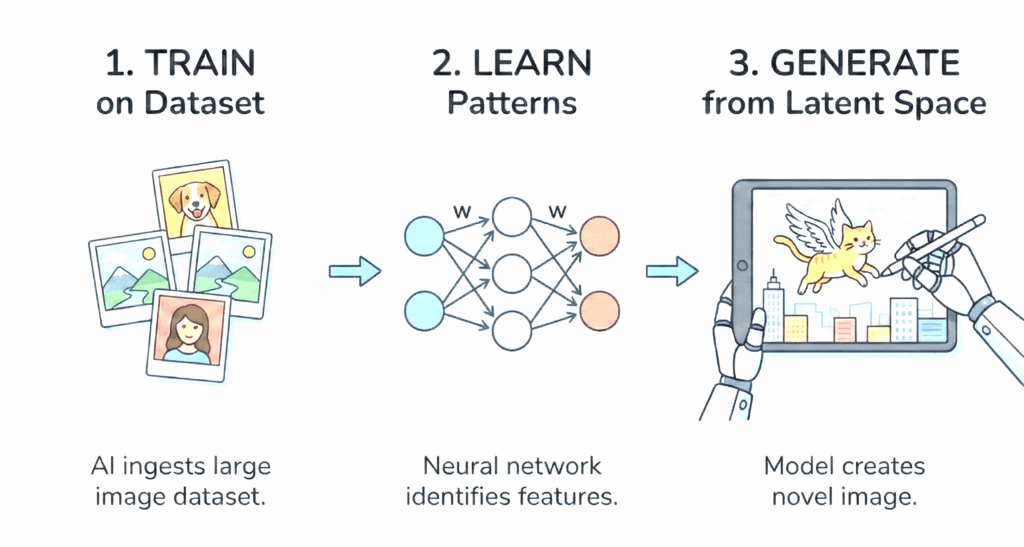

Generative AI: from analysis to creation

Figure 6 — GenAI Pipeline (created by the author using Gemini3)


Generative AI does not replace deep learning. It builds directly on top of it.

The same deep neural networks that learned to recognize patterns can now be trained with a different objective: generation.

Instead of focusing only on classification or prediction, generative models learn how data is produced, step by step.

As a result, they are able to create new content that is coherent and realistic.

A concrete example

Consider the prompt:

“Describe a luxury apartment in Paris.”

The model does not retrieve an existing description.

Instead:

It starts from the prompt,

predicts the most likely next word,

then the next one,

and continues this process sequentially.

Each prediction depends on:

What has already been generated,

The original prompt,

And the patterns learned from large amounts of data.

The final text is new — it has never existed before — yet it feels natural because it follows the same structure as similar texts seen during training.
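The word-by-word loop described above can be sketched with a deliberately tiny model. This toy rests on several assumptions: the corpus is hypothetical, the model is a bigram counter that looks only at the previous word (real models condition on the whole prompt and everything generated so far), and it always picks the single most likely word, whereas real systems often sample among likely candidates.

```python
from collections import Counter, defaultdict

# Tiny hypothetical "training data".
corpus = (
    "a bright apartment in paris . "
    "a luxury apartment in paris . "
    "a luxury apartment with a view ."
).split()

# "Training": count which word follows which (a bigram model).
next_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_counts[current][following] += 1


def generate(prompt: str, max_words: int = 8) -> str:
    words = prompt.split()
    for _ in range(max_words):
        candidates = next_counts.get(words[-1])
        if not candidates:
            break  # the model has never seen this word, so it stops
        next_word = candidates.most_common(1)[0][0]  # most likely next word
        words.append(next_word)  # the new word becomes part of the context
        if next_word == ".":
            break
    return " ".join(words)


print(generate("a luxury"))  # a luxury apartment in paris .
```

The sentence it produces is assembled one prediction at a time from learned patterns rather than retrieved whole, even though with such a small corpus it happens to match a training line.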

The same principle across data types

This mechanism is not limited to text. The same generative principle applies to:

images, by generating pixel values,

audio, by generating sound signals over time,

video, by generating sequences of images,

code, by generating syntactically and logically consistent programs.

Large models trained this way on broad, diverse data are often called foundation models, because a single trained model can be adapted to many different tasks.

Why Generative AI feels different today

Artificial Intelligence, Machine Learning, and Deep Learning have existed for many years.

What makes Generative AI feel like a turning point is not only improved performance, but how humans interact with AI.

In the past, working with advanced AI required:

technical interfaces,

programming knowledge,

infrastructure and model management.

Today, interaction happens primarily through:

natural language,

simple instructions,

conversation.

Users no longer need to specify how to do something.

They can simply describe what they want.

This shift dramatically reduces the barrier to entry and allows AI to integrate directly into everyday workflows across a wide range of professions.

Putting everything together

These concepts are not competing technologies. They form a coherent progression:

Artificial Intelligence defines the goal: intelligent systems.

Machine Learning enables systems to learn from data.

Deep Learning allows learning from complex, unstructured information.

Generative AI uses this understanding to create new content.

Seen this way, Generative AI is not a sudden break from the past.

It is the natural continuation of everything that came before.

Once this structure is clear, AI terminology stops being confusing and becomes a coherent story.

But have we finished? Almost.

Figure 7 — The Complete AI Ecosystem in 2025 (created by the author using Excalidraw)


At this point, we’ve covered the core AI ecosystem: artificial intelligence, machine learning, deep learning, and generative AI — and how they naturally build on one another.

If you are reading this article, there is a good chance you already use tools like ChatGPT in your daily life. I won’t go much deeper here — this deserves an article of its own.

However, there is one important final idea worth remembering.

Earlier, we said that Generative AI is a continuation of Deep Learning, specialized in learning patterns well enough to generate new data that follows those same patterns.

That is true — but when it comes to language, the patterns involved are far more complex.

Human language is not just a sequence of words. It is structured by grammar, syntax, semantics, context, and long-range dependencies. Capturing these relationships required a major evolution in deep learning architectures.

From Deep Learning to Large Language Models

To handle language at this level of complexity, new deep learning architectures emerged. Large models built on these architectures and trained on massive amounts of text are known as Large Language Models (LLMs).

Instead of trying to understand the full meaning of a sentence all at once, LLMs learn language in a very particular way:

They learn to predict the next word (or token) given everything that comes before it.

This might sound simple, but when trained on massive amounts of text, this objective forces the model to internalize:

grammar rules,

sentence structure,

writing style,

facts,

and even elements of reasoning.

By repeating this process billions of times, the model learns an implicit representation of how language works.
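The next-word objective itself is easy to state in code. The sketch below shows how one sentence turns into several training examples, each pairing everything seen so far with the word that comes next. The sentence is hypothetical, and splitting on spaces is a simplification: real LLM tokenizers split text into sub-word tokens.

```python
def next_token_examples(tokens):
    """Every prefix of the text becomes a context; the token after it is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


# Hypothetical sentence, split on spaces for simplicity.
sentence = "the apartment has a view".split()
for context, target in next_token_examples(sentence):
    print(" ".join(context), "->", target)
```

This prints four examples, from `the -> apartment` up to `the apartment has a -> view`. Training on billions of such pairs is what pushes the model to absorb grammar, style and facts.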

From these Large Language Models, conversational systems such as ChatGPT are built — combining language generation with instruction-following, dialogue, and alignment techniques.

The illustration above shows this idea visually: generation happens one word at a time, each step conditioned on what was generated before.

The final big picture

Nothing you see today came out of nowhere.

ChatGPT is not a separate technology. It is the visible result of a long progression:

Artificial Intelligence set the goal.

Machine Learning made learning from data possible.

Deep Learning enabled learning from complex, unstructured data.

Generative AI made creation possible.

Large Language Models brought language into this framework.

I hope this article was helpful. And now, you’re no longer lost in tech conversations — even at your end-of-year family gatherings 🙂

If you enjoyed this article, feel free to follow me on LinkedIn for more honest insights about AI, Data Science, and careers.

👉 LinkedIn: Sabrine Bendimerad

👉 Medium: https://medium.com/@sabrine.bendimerad1