We keep seeing the same thing over and over again in the AI racket, and people keep reacting to it like it is a new or surprising idea. So we will say it very clearly here one more time: The only companies that are making money – meaning additional gross and operating profits, and sometimes meaning “material revenue” as the US Securities and Exchange Commission uses that term – on the GenAI boom right now are Nvidia and Taiwan Semiconductor Manufacturing Co.

Everybody else in the AI system and model supply chain, as far as we can tell, is either losing money hand over fist or diluting their profits by expanding into AI, which is what financial people call “operating profit accretive but operating margin dilutive.” Broadcom, for all of its might and prowess in networking and other switchery in the datacenter, where it thankfully has very good operating margins, is no exception. And so, one after the other, chip suppliers like Broadcom, as well as the OEMs and ODMs higher up in the value chain, have decided to go after the GenAI business and dilute their profits rather than be left behind.

It really is the only choice, and thankfully for Broadcom, it bought Symantec, CA, and VMware, which gives it highly profitable legacy enterprise software that is very sticky in the datacenter and can therefore counterbalance its AI aspirations and the skinnier margins this market imposes. The alternative was for Broadcom to find itself on the outside of the GenAI boom, getting nothing and risking the loss of a substantial portion of its chip sales into datacenter products.

So while people are jaw-boning about how Broadcom missed Wall Street’s expectations for AI-related revenues in the final quarter of fiscal 2025, we do not think that is the issue. This AI business has a backlog of $73 billion over the next six quarters, and Hock Tan, Broadcom’s chief executive officer, was clear that even more orders will come in between now and the end of that backlog term. What was really driven home is that Broadcom is being coaxed into being an AI system integrator, that this, too, will further dilute its profits, and that there is no escaping this. If big clients – hyperscalers, cloud builders, and model builders – want their chip helper to deliver full systems, with that super-low price, that one ass to kick when things are late, and that one throat to choke when stuff doesn’t work, then Broadcom has to deliver them or those clients will go elsewhere.

And so, without asking for it, Broadcom is now an ODM, which means it has the responsibility of an OEM but at a much larger scale with even lower profits.

Strange world we are building, isn’t it? Perhaps Supermicro should merge with Marvell and get it over with. (You heard it here first.)

One of the four Broadcom XPU customers was already buying rackscale machines built by the company, and now the fifth XPU customer is going straight to buying rackscale machinery, which includes a slew of Broadcom chips but obviously also a lot of stuff that comes from the outside.

Of that $73 billion in AI backlog, the XPUs represent around $53 billion, and we think the Tomahawk 6 switch ASICs are a very big portion of the remaining $20 billion or so. Tan said that Tomahawk 6 is the fastest-ramping switch ASIC in Broadcom’s history, and that is saying a lot. That AI order book also includes DSPs, lasers, PCI-Express switches, and probably a smattering of other things like storage controllers.
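A quick back-of-the-envelope split of that backlog, using only the rounded figures Tan gave, looks like this in Python. The variable and function names are ours, and the $53 billion XPU figure is approximate, so treat the remainder as a rough number rather than a Broadcom disclosure.

```python
# Figures from the text, in billions of US dollars (both are rounded)
AI_BACKLOG = 73.0    # total AI backlog over the next six quarters
XPU_BACKLOG = 53.0   # approximate XPU share of that backlog

def non_xpu_backlog(total: float, xpu: float) -> float:
    """Hypothetical helper: backlog left for switches, DSPs, lasers, PCI-Express switches, etc."""
    return total - xpu

rest = non_xpu_backlog(AI_BACKLOG, XPU_BACKLOG)
print(f"Non-XPU AI backlog: ~${rest:.0f} billion ({rest / AI_BACKLOG:.0%} of the total)")
```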

By the way, Tan confirmed that it was indeed model builder Anthropic that had placed an order with Broadcom to get around $10 billion of Google TPU racks a few months back, and said further that Anthropic had just placed a new order for another $11 billion in TPU racks for delivery in late 2026. As for the fifth customer, which we do not believe is OpenAI even though Broadcom is partnering with that model builder, the order is for $1 billion worth of XPU systems of unknown design, delivered in 2026. OpenAI’s deal with Broadcom runs from 2027 through 2029 (not 2026 as the press release said, as Tan clarified on the call with Wall Street) and, we believe, is meant to help shepherd 10 gigawatts of some kind of capacity to OpenAI. (For all we know, OpenAI wants to use Google TPUs as well.) And, for the record, Tan confirmed that this OpenAI deal is “different from the XPU program we are developing with them,” by which we think he means the development and manufacturing of OpenAI’s homegrown “Titan” inference XPU.

Not to get all Appalachian on y’all, but that’s a lot of lean spring bear meat to eat and not a lot of fat to cut it with. But it is eat or be eaten out there in GenAI Land, so get out your knives like Broadcom has. And, by the way, that CA and VMware base can be fattened up with higher prices as needed. . . . And you can bet Hock Tan’s last dollar that this will happen if the overall company margins require it.

With that all said, let’s get to the Broadcom Q4 F2025 numbers.

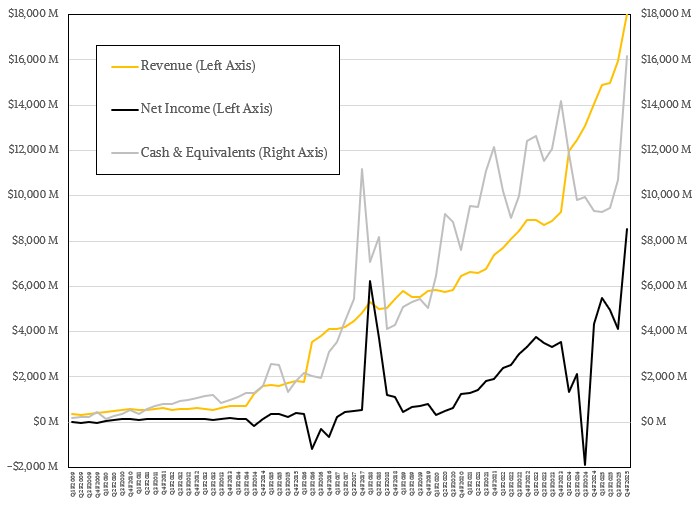

In the quarter ended on November 2, Broadcom had a little over $18 billion in sales, up 28.2 percent year on year and up 12.9 percent sequentially. Operating income rose by 62.3 percent to $7.51 billion, and net income rose by 97 percent to $8.52 billion, thanks in part to a $1.65 billion tax gain that Broadcom has kept in its back pocket.

For the full year, Broadcom had $63.89 billion in sales, up 23.9 percent, and net income of $23.13 billion, up 3.9X and representing 36.2 percent of revenues. This is amazing, truly. It isn’t magic, it is just what happens when you put together a whole bunch of little monopolies to give you the ability to face the even harsher reality of the modern chip market.
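For those who want to check the math, here is a small Python sketch that computes the net margin and backs out approximate fiscal 2024 figures from the growth rates. The helper names are hypothetical, and because the growth rates are rounded, the implied prior-year values are estimates, not reported numbers.

```python
def share(part: float, whole: float) -> float:
    """Return part as a fraction of whole."""
    return part / whole

def implied_prior(current: float, growth_pct: float) -> float:
    """Back out last year's value from this year's value and a percent growth rate."""
    return current / (1 + growth_pct / 100)

# Full-year fiscal 2025 figures from the text, in billions of US dollars
revenue = 63.89
net_income = 23.13

print(f"Net margin: {share(net_income, revenue):.1%}")
# Approximate only: 23.9 percent and 3.9X are rounded figures
print(f"Implied fiscal 2024 revenue: ~${implied_prior(revenue, 23.9):.2f} billion")
print(f"Implied fiscal 2024 net income: ~${net_income / 3.9:.2f} billion")
```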

Broadcom ended the quarter with $16.18 billion in cash and $65.14 billion in debts, the latter trending downward ever so slowly and the former growing fast.

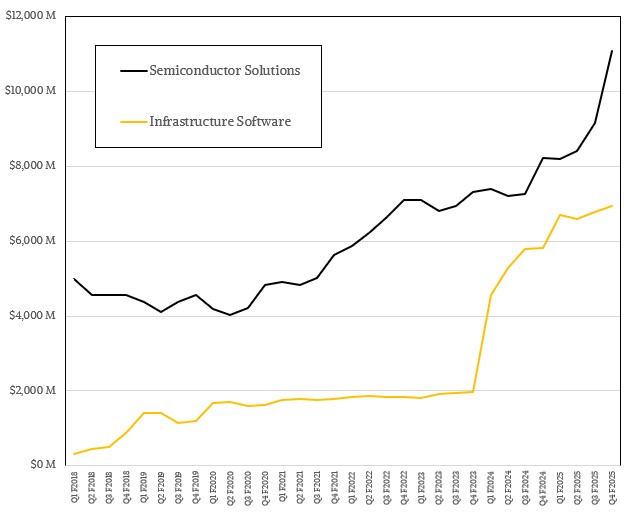

The Infrastructure Software group that has been built up over the past decade as a profit bulwark had $6.94 billion in sales, up 19.2 percent, with operating income of $5.42 billion, up 29.1 percent and representing 78 percent of revenues. For the year, the Broadcom software conglomerate had just over $27 billion in sales, up 25.9 percent, and $20.76 billion in operating income, up 48.5 percent and accounting for 76.8 percent of revenues. That is some mainframe-class profitability, and it stands to reason because CA is literally mainframe software and VMware is the modern mainframe software stack in a Kubernetes world.

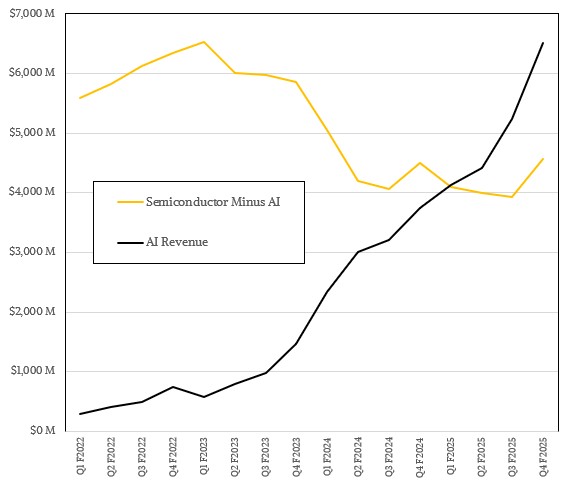

In the final quarter of fiscal 2025, the Semiconductor Solutions group at Broadcom had $11.07 billion in sales, up 34.5 percent, with operating income of $6.53 billion, up 41.7 percent. For the year, the chip side of the Broadcom house had $36.86 billion in revenues, up 22.5 percent, with operating income of just a hair over $23 billion, up 37.6 percent and representing 62.5 percent of revenues. This is still a very healthy business given that margin-diluting XPU business.
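Here is a minimal Python sketch that computes Q4 operating margins for the two groups from the figures above and checks that their revenues add up to the quarterly total. The `Segment` class is our own illustrative construct, not anything Broadcom publishes.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    name: str
    revenue: float           # billions of US dollars
    operating_income: float  # billions of US dollars

    @property
    def operating_margin(self) -> float:
        """Operating income as a fraction of revenue."""
        return self.operating_income / self.revenue

# Q4 fiscal 2025 segment figures from the text
segments = [
    Segment("Infrastructure Software", 6.94, 5.42),
    Segment("Semiconductor Solutions", 11.07, 6.53),
]

for s in segments:
    print(f"{s.name}: {s.operating_margin:.1%} operating margin")

# The two groups should add up to the "little over $18 billion" quarterly total
total_revenue = sum(s.revenue for s in segments)
print(f"Combined revenue: ${total_revenue:.2f} billion")
```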

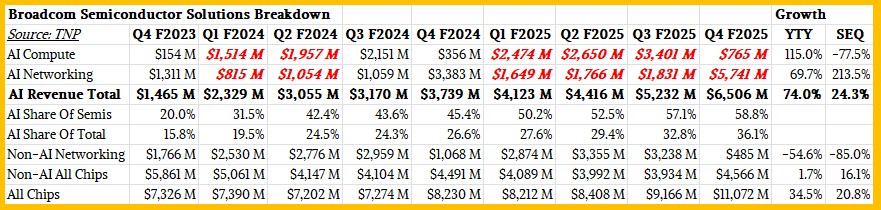

In the quarter, AI XPU sales were modest, around $765 million in our model, but up 2.2X from a year ago. Most of the AI sales came from AI networking, which we think hit $5.74 billion thanks in large part to the 102.4 Tb/sec Tomahawk 6 rollout, with some help from the Jericho 4 rollout as well. That is a 3.1X sequential boost in AI networking revenue. Add it up, and AI drove $6.51 billion in revenues, up 74 percent year on year.
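Working in millions of dollars keeps the rounding honest. This sketch adds up our model’s two AI pieces and backs out the year-ago AI figure from the 74 percent growth rate; the XPU and networking split is our estimate, not Broadcom’s, and the year-ago value is only approximate.

```python
# Q4 fiscal 2025 AI revenue split, in millions of US dollars (our model's estimates)
ai_xpu = 765
ai_networking = 5_740

ai_total = ai_xpu + ai_networking          # 6,505, which rounds to the $6.51 billion in the text
networking_share = ai_networking / ai_total

# Back out the year-ago AI total from the stated 74 percent growth (approximate)
year_ago_ai = ai_total / 1.74

print(f"AI revenue: ${ai_total:,} million")
print(f"Networking share of AI revenue: {networking_share:.1%}")
print(f"Implied Q4 F2024 AI revenue: ~${year_ago_ai:,.0f} million")
```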

Here’s the data behind our model:

Looking ahead to Q1 F2026, Broadcom expects AI chip revenues to double year on year to $8.2 billion, with non-AI chips down a bit to $4.1 billion due to seasonality in the wireless chip business. Broadcom forecasts infrastructure software revenue of about $6.8 billion. Add it up, and Broadcom is expecting around $19.1 billion in revenues for Q1, up 28 percent year on year.
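The guidance arithmetic is simple enough to sketch in a few lines of Python, again in millions of dollars. The dictionary keys are our labels, and the implied year-ago figures assume the rounded “double” and “28 percent” growth rates are exact, which they are not quite.

```python
# Broadcom's Q1 fiscal 2026 guidance as described in the text, in millions of US dollars
guidance = {
    "AI semiconductors": 8_200,
    "non-AI semiconductors": 4_100,
    "infrastructure software": 6_800,
}

q1_total = sum(guidance.values())   # 19,100, the "around $19.1 billion"

# "Double" year on year implies the year-ago AI figure was roughly half
implied_year_ago_ai = guidance["AI semiconductors"] / 2
# "Up 28 percent" implies the year-ago quarterly total (approximate, since 28 is rounded)
implied_year_ago_total = q1_total / 1.28

print(f"Q1 F2026 guidance: ${q1_total:,} million")
print(f"Implied Q1 F2025 AI revenue: ~${implied_year_ago_ai:,.0f} million")
print(f"Implied Q1 F2025 total revenue: ~${implied_year_ago_total:,.0f} million")
```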
