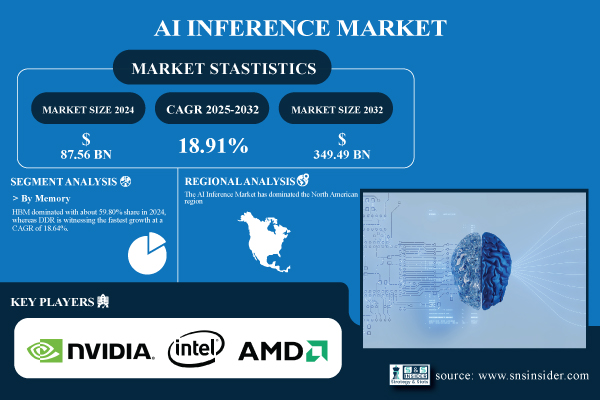

Austin, Sept. 24, 2025 (GLOBE NEWSWIRE) — The AI Inference Market Size was valued at USD 87.56 Billion in 2024 and is expected to reach USD 349.49 Billion by 2032 and grow at a CAGR of 18.91% over the forecast period 2025-2032.

The expansion of the AI inference market is largely fueled by the rising demand for near real-time processing and low-latency AI applications across various sectors, including healthcare, automotive, finance, and retail. As organizations increasingly adopt Generative AI, natural language processing (NLP), and computer vision solutions, the demand for robust inference mechanisms that facilitate accurate and rapid outputs to enhance results is rising. Advancements in GPU, NPU, and high-bandwidth memory (HBM) have provided enterprises with essential components for scaling solutions to complex and intensive AI workloads.

Download PDF Sample of AI Inference Market @ https://www.snsinsider.com/sample-request/8378

Key Players:

NVIDIAIntelAMDGoogle (TPU)BroadcomHuaweiAlibaba (MetaX)Cambricon TechnologiesPositronMediaTekInspur SystemsDell TechnologiesHewlett Packard Enterprise (HPE)LenovoIBMGigaByte TechnologyH3C TechnologiesLambda LabsQualcommXilinx

AI Inference Market Report Scope:

Report AttributesDetailsMarket Size in 2024USD 87.56 BillionMarket Size by 2032USD 349.49 BillionCAGRCAGR of 18.91% From 2025 to 2032Base Year2024Forecast Period2025-2032Historical Data2021-2023Report Scope & CoverageMarket Size, Segments Analysis, Competitive Landscape, Regional Analysis, DROC & SWOT Analysis, Forecast OutlookKey Segments• By Compute (GPU, CPU, FPGA, NPU, Others)

• By Memory (DDR, HBM)

• By Deployment (Cloud, On-Premise, Edge)

• By Application (Generative AI, Machine Learning, Natural Language Processing, Computer Vision)Customization ScopeAvailable upon requestPricingAvailable upon request

If You Need Any Customization on AI Inference Market Report, Inquire Now @ https://www.snsinsider.com/enquiry/8378

Segmentation Analysis:

By Memory, HBM Dominated the Market in 2024

High-Bandwidth Memory (HBM) occupies majority of AI Inference Market share as it provides high data throughput to memory-demanding AI tasks including GPUs and Data Center Accelerators. The fastest growing type of memory is DDR memory, owing to its low price and adoption in processors for edge devices, mobile platforms and consumer electronics.

By Compute, GPU Led the Markey in 2024 with the Largest Share

In 2024 GPU inference platforms are leading in the AI Inference Market as they easily used to tackle high-performance parallel processing making them an exciting choice for sophisticated AI workloads including machine learning, computer vision and generative AI workloads. NPU segment is also one of the fastest-growing segments owing to the growth of edge AI applications, smartphones and IoT devices.

By Deployment, the Market was Dominated by the Cloud Segment in 2024

Cloud deployment owns the major part of AI Inference Market share in 2024, due to scalability, centralized management, and integration with other enterprise AI applications. The edge segment is anticipated to experience rapid growth due to increasing demands for real-time, low-latency inference in smartphones, IoT sensors, autonomous vehicles, and smart cameras.

By Application, Machine Learning Holds Largest Share in the Market in 2024

Machine Learning (ML) continues to be the largest application segment in the AI Inference Market, owing to extensive adoption of predictive analytics, recommendation engines, and process automation across industries. Generative AI is the fastest-growing category of application owing to the surge in content generation, the adoption of AI assistants, such as ChatGPT, and creative automation solutions.

In 2024, North America Dominated the AI Inference Market; Asia Pacific is Projected to Witness the Fastest Growth in the Market

The AI Inference Market has dominated the North American region owing to the presence of large technology companies, leading semiconductor manufacturing and an established AI research ecosystem. The increasing adoption of AI technologies in countries, such as China, Japan, South Korea, and India is resulting in AI inference becoming the fastest-growing market in the Asia Pacific region.

Recent Developments:

In 2024, NVIDIA unveiled the H200 AI chip and Blackwell platform to enhance large-scale AI inference and generative AI capabilities.In April 2024, Intel launched the Gaudi 3 AI chip and Jaguar Shores processor to accelerate AI model training and inference efficiency.

Buy Full Research Report on AI Inference Market 2025-2032 @ https://www.snsinsider.com/checkout/8378

Exclusive Sections of the Report (The USPs):

PRICING & REVENUE BENCHMARKS – helps you compare pricing trends across AI chips, accelerators, and cloud AI services while analyzing revenue distribution by deployment models such as cloud, edge, and on-premise.OPERATIONAL & PERFORMANCE METRICS – helps you understand user adoption across regions, latency and throughput benchmarks, and sector-specific utilization trends shaping AI inference efficiency.INVESTMENT & FINANCING LANDSCAPE – helps you track venture capital flows, private equity activity, and M&A deals driving consolidation and innovation in the AI inference ecosystem.INFRASTRUCTURE & EXPANSION TRENDS – helps you evaluate data center growth, edge AI device deployments, and cloud platform expansion strategies across global markets.COMPETITIVE LANDSCAPE – helps you benchmark key players by pricing strategies, performance optimization, product innovations, and regional market penetration.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company’s aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.