Broadcom this week announced that it had signed an agreement to supply $10 billion worth of AI data center hardware to an undisclosed customer. The hardware includes custom-designed AI accelerators tailored for workloads specific to the client, as well as other gear from the company. It is widely rumored that the customer is OpenAI, which reportedly intends to use the AI processors for inference workloads, and the sheer scale of the order could amount to several million AI processors.

As always, Broadcom isn’t tipping its hand on the mystery customer. “Last quarter, one of these prospects released production orders to Broadcom, and we have accordingly characterized them as a qualified customer for XPUs and, in fact, have secured over $10 billion of orders of AI racks based on our XPUs,” said Hock Tan, President and CEO of Broadcom, during the company’s earnings conference call.

Hardware is ready

While the $10 billion number is a big deal, there is a lot more in the announcement. Broadcom stated the customer is now ‘qualified,’ confirming that the chip and possibly the associated platform passed customer validation. That means that whoever this client is, they are happy with the functionality and performance (and so the design has been locked). Meanwhile, the phrase ‘released production orders’ means the customer moved from evaluation or prototyping to full-scale commercial procurement, though Broadcom will recognize revenue at a later point, possibly once the hardware is delivered or deployed.

Broadcom does not reveal the names of its customers, but it said it would deliver ‘racks based on its XPUs’ in its fiscal Q3 2026, which ends in early August 2026. Although the company calls its products ‘racks,’ these are not racks supplied by companies like Dell. What Broadcom intends to deliver to its undisclosed client is custom AI accelerators (XPUs) and networking chips, along with reference AI rack platforms that integrate these components. These are not full systems, but building blocks for large customers to assemble their own AI infrastructure at scale.

On time for 2026 deployment

Assuming that Broadcom delivers its hardware to the undisclosed client in June or July 2026, that client will be able to deploy it within a few months (i.e., in fall 2026), depending on various factors. Such a timeframe aligns with reports suggesting that OpenAI’s first deployment of its custom AI processors, developed in collaboration with Broadcom, is expected between late 2026 and early 2027.

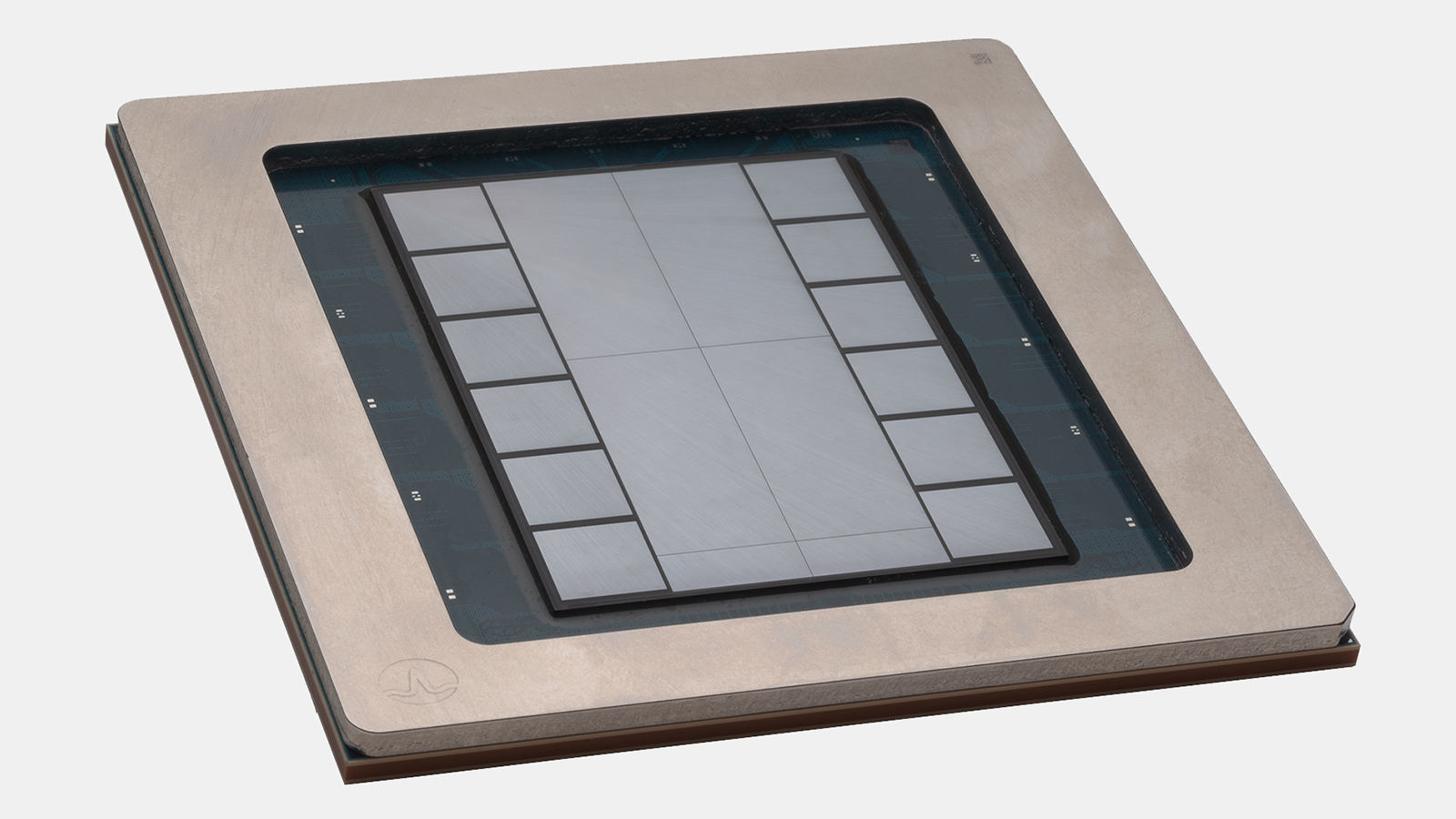

OpenAI’s custom AI processor is expected to utilize a systolic array architecture — a grid of identical processing elements (PEs) optimized for matrix and/or vector operations, arranged in rows and columns — a design similar to that of other AI accelerators. The chip will reportedly feature HBM memory, though it is unclear whether it will adopt HBM3E or HBM4, and is likely to be manufactured on TSMC’s N3-series (3nm-class) process technology.

A massive deal

A $10 billion hardware investment is a massive commitment for OpenAI (especially given the fact that it will be delivered over a single quarter) and places it firmly in hyperscaler territory. For context, Meta’s entire CapEx for 2025 is $72 billion, and the lion’s share of that is reportedly assigned to AI hardware. AWS and Microsoft are also investing tens of billions of dollars in AI hardware every year.

Assuming average costs of $5,000 to $10,000 per accelerator, the $10 billion could translate to 1–2 million XPUs, likely distributed across thousands of racks and tens of thousands of nodes. That scale rivals or exceeds many of today’s largest AI inference clusters (including OpenAI’s own gargantuan cluster), which will put OpenAI into a very competitive position going forward.
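The back-of-the-envelope math behind that range is easy to reproduce. The short Python sketch below is only an illustration: the function name `xpu_count` is made up for this example, and it assumes the entire $10 billion goes to accelerators, which overstates the count because the order also covers networking chips and rack platforms.

```python
def xpu_count(budget_usd: int, unit_cost_usd: int) -> int:
    """Whole accelerators purchasable if the entire budget went to XPUs.

    Hypothetical helper for illustration; real pricing is not public.
    """
    return budget_usd // unit_cost_usd


BUDGET_USD = 10_000_000_000  # the reported $10 billion order

# Assumed per-accelerator price range taken from the estimate above.
for unit_cost in (5_000, 10_000):
    print(f"${unit_cost:,} per XPU -> {xpu_count(BUDGET_USD, unit_cost):,} XPUs")
```

At $5,000 apiece the budget covers 2,000,000 XPUs, and at $10,000 it covers 1,000,000, which is where the 1–2 million figure comes from.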

OpenAI, which has historically run its workloads on Microsoft Azure using AMD or Nvidia GPUs, now appears to be shifting toward in-house infrastructure with custom Broadcom silicon, a strategic move that is, of course, aimed at cost control and inference optimization. As an added bonus, $10 billion not only buys a million (or two) custom AI accelerators, but can also give OpenAI quite some leverage when negotiating prices with AMD and Nvidia. That’s assuming, of course, that the $10 billion deal with Broadcom was indeed signed by OpenAI. Notably, there is no official confirmation as of yet.
