Every quarter, Nvidia CEO Jensen Huang is asked about the growing number of custom ASICs encroaching on his AI empire, and each time he downplays the threat, arguing that GPUs offer superior programmability in a rapidly changing environment.

That hasn’t stopped chip designer SiFive from releasing RISC-V-based core designs for use in everything from IoT devices to high-end AI accelerators, including Google’s Tensor Processing Units (TPUs) and Tenstorrent’s Blackhole accelerators.

Last summer, SiFive revealed that its RISC-V-based core designs power chips from five of the “Magnificent 7” companies – those include Alphabet, Amazon, Apple, Meta, Microsoft, Nvidia, and Tesla. And while we’re sure many of those don’t involve AI, it’s understandable why analysts keep asking Huang about custom ASICs.

While many of you will be familiar with SiFive for its HiFive RISC-V development boards, the company's main business is designing and licensing core IP, similar to Brit chip designer Arm Holdings. These intellectual property (IP) offerings are what we're discussing here.

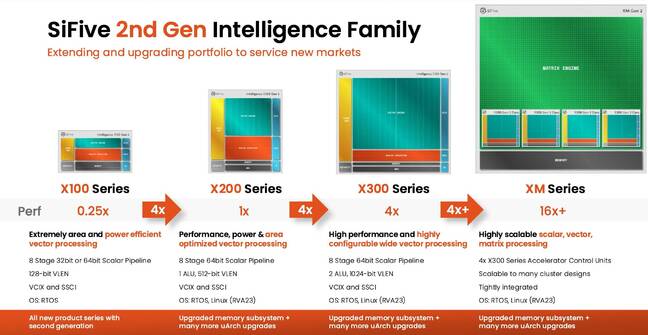

This week at the AI Infra Summit, the RISC-V chip designer revealed its second generation of Intelligence cores, including new designs aimed at edge AI applications like robotics sensing, as well as upgraded versions of its X200 and X300 Series, and XM Series accelerator.

Here's a quick overview of SiFive's second-gen Intelligence core lineup.

All of these designs are based on an eight-stage dual-issue in-order superscalar processor architecture, which is the long way of saying they aren’t designed for use in a general-purpose CPU like the one powering whatever device you’re reading this on.

Instead, SiFive’s Intelligence line is intended to serve as an accelerator control unit (ACU) for keeping its customers’ tensor cores and matrix multiply-accumulate units (MAC) from starving for data.

The idea is that rather than investing resources to design something custom for the job, SiFive customers can license its Intelligence family instead. This is what Google, Tenstorrent and a number of tech titans have apparently done.

The X100-class makes its debut

SiFive’s two newest Intelligence cores, the X160 and X180, are aimed at low-power applications like IoT devices, drones, and robotics. The X180 is a 64-bit core, while the X160 is based on the 32-bit RV32I instruction set architecture.

SiFive's two newest Intelligence cores are nearly identical, with the lower-end of the two using a simpler 32-bit instruction set.

Customers can arrange them in clusters of up to four (although a chip could have multiple four-core clusters on it), and they support 128-bit wide vector registers and feature a 64-bit wide data path.

This allows them to contend with many modern data types, including INT8 and BF16, which is kind of important when they're attached to an accelerator designed to run models at those precisions.
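
To put that 128-bit figure in perspective, here's a back-of-the-envelope Python sketch of how many elements of each type fit in a single vector register. It assumes each element occupies its nominal width and ignores RISC-V's register grouping, so treat it as arithmetic rather than a description of how SiFive's cores schedule work.

```python
# Sketch: elements of each data type that fit in one vector register.
# Assumes the X160/X180's 128-bit vector registers quoted by SiFive and the
# nominal bit width of each format; register grouping (LMUL) is ignored.

VLEN_BITS = 128  # vector register width quoted for the X160/X180

ELEMENT_BITS = {
    "INT8": 8,
    "BF16": 16,
    "FP32": 32,
}


def elements_per_register(vlen_bits: int, element_bits: int) -> int:
    """Number of elements of a given width packed into one vector register."""
    return vlen_bits // element_bits


for name, bits in ELEMENT_BITS.items():
    print(f"{name}: {elements_per_register(VLEN_BITS, bits)} elements per register")
```

In other words, each 128-bit register holds 16 INT8 values or eight BF16 values at a time.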

How these cores communicate with those accelerators has changed a little in SiFive's second generation. In addition to its Vector Coprocessor Interface Extension (VCIX), which provides high-bandwidth access to the CPU core's vector registers, the chip designer's second-gen parts now feature SiFive's Scalar Coprocessor Interface (SSCI), which provides custom RISC-V instructions that give accelerators direct access to the CPU's registers.

X200, X300 Gen 2

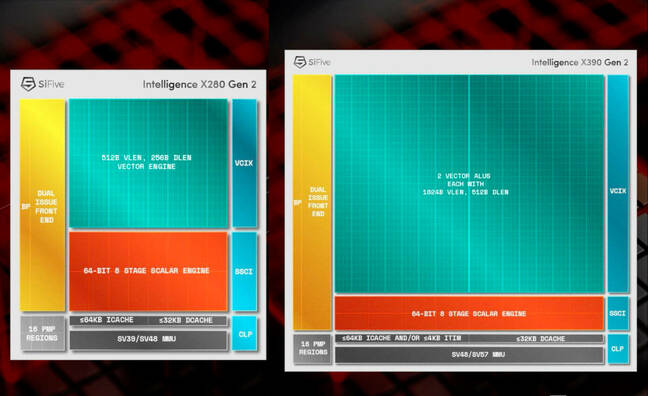

Alongside the new cores, SiFive is also rolling out updated versions of its X280 and X390 family of processor cores.

Compared to the X280 Gen 2, SiFive's updated X390 cores have much fatter vector ALUs.

Like the X100-class, both cores feature eight-stage dual-issue in-order execution pipelines and can be arranged in clusters of one, two, or four cores depending on your application. Like last-gen, the X280 and X390 Gen 2 cores boast support for 512-bit and 1024-bit wide vector registers, respectively.
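
For a sense of what those widths mean in practice, the sketch below uses two rules from the RISC-V Vector (RVV) specification: there are 32 architectural vector registers, and the most elements a single instruction can touch is VLMAX = LMUL × VLEN / SEW, where LMUL groups up to eight registers together. It computes architectural state only. SiFive hasn't detailed the physical register files, and we're assuming the quoted widths correspond to VLEN.

```python
# Sketch: what 512-bit and 1024-bit vector registers imply under the RISC-V
# Vector (RVV) spec. ASSUMPTION: the widths SiFive quotes are the cores' VLEN.

NUM_VECTOR_REGS = 32  # v0-v31, per the RVV specification


def register_file_bytes(vlen_bits: int) -> int:
    """Total architectural vector register state, in bytes, for a given VLEN."""
    return NUM_VECTOR_REGS * vlen_bits // 8


def vlmax(vlen_bits: int, sew_bits: int, lmul: int = 1) -> int:
    """Max elements one vector instruction can process: LMUL * VLEN / SEW."""
    return lmul * vlen_bits // sew_bits


for core, vlen in (("X280 Gen 2", 512), ("X390 Gen 2", 1024)):
    print(
        f"{core}: {register_file_bytes(vlen)} bytes of vector state, "
        f"{vlmax(vlen, 16)} BF16 elements per register, "
        f"{vlmax(vlen, 16, lmul=8)} with LMUL=8"
    )
```

Doubling VLEN doubles both figures, which is why the X390 is the one SiFive pitches for heavier lifting.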

As you may recall, Google used SiFive’s X280 cores to manage the matrix multiplication units (MXUs) in its Tensor Processing Units back in 2022.

One key difference is that, for the second gen of the cores, SiFive has moved to the RVA23 profile and added hardware support for BF16 and OCP's MXFP8 and MXFP4 micro-scaling data types. The latter is gaining considerable attention as of late, as it's how OpenAI opted to release its open-weight gpt-oss models.
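
For readers wondering what "micro-scaling" actually means, here's a minimal Python sketch of MXFP4 quantization based on our reading of the OCP Microscaling (MX) v1.0 spec: blocks of 32 values share a single power-of-two scale, and each element is stored as a 4-bit E2M1 float. It's illustrative only. It ignores the E8M0 scale's range limits and NaN handling, resolves rounding ties toward the smaller magnitude rather than following the spec's rules exactly, and says nothing about how SiFive's hardware handles the format.

```python
import math

# Magnitudes representable by FP4 E2M1, the element format used by MXFP4.
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_EMAX = 2     # exponent of the largest E2M1 value (6.0 = 1.5 * 2**2)
BLOCK_SIZE = 32   # MX blocks share one scale across 32 elements


def quantize_block(block):
    """Return (shared_exponent, fp4_values) for one block of floats."""
    if len(block) != BLOCK_SIZE:
        raise ValueError(f"expected {BLOCK_SIZE} values, got {len(block)}")
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    # Shared power-of-two scale (real MX data stores this exponent as E8M0).
    shared_exp = math.floor(math.log2(amax)) - E2M1_EMAX
    scale = 2.0 ** shared_exp
    quantized = []
    for v in block:
        mag = abs(v) / scale
        # Nearest representable E2M1 magnitude; anything above 6.0 saturates.
        nearest = min(E2M1_VALUES, key=lambda q: abs(q - mag))
        quantized.append(math.copysign(nearest, v))
    return shared_exp, quantized


def dequantize_block(shared_exp, values):
    """Reconstruct approximate floats from a shared exponent and FP4 values."""
    return [q * 2.0 ** shared_exp for q in values]


block = [1.0, -2.7, 0.3, 7.0] + [0.0] * 28
exp, q = quantize_block(block)
print(exp, q[:4])  # 0 [1.0, -3.0, 0.5, 6.0]
```

Note how 7.0 gets clipped to 6.0 and -2.7 rounds to -3.0: four bits per value plus one shared exponent per 32 is a big memory saving, but it comes at a real cost in precision.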

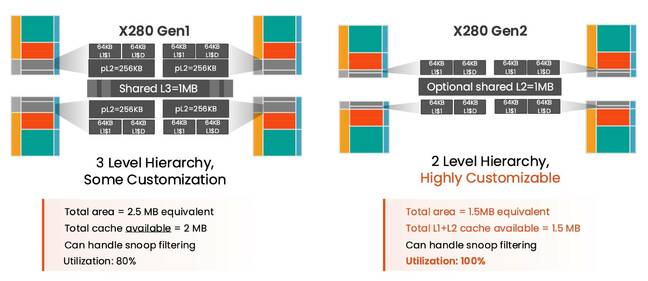

SiFive has also optimized the X280 Gen 2’s cache hierarchy, moving from a three-level arrangement – L1, L2, and shared L3 – to a simpler and more customizable one that ditches the L3 for up to 1MB of shared L2 per core cluster. The company says this new arrangement boosts utilization and saves on die area.

Compared to last gen, the new X280 cores have a much simpler cache hierarchy with up to 1MB of shared L2 per core cluster.

In terms of performance, SiFive says its larger X390 Gen 2 now boasts 4x the compute and 32x the data throughput of the original X280, allowing for up to 1TB/s of data movement in the four-core cluster configuration.
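
Here's a back-of-the-envelope sketch of what 1TB/s means per core. Several assumptions go into it: we read 1TB/s as 10^12 bytes per second, split it evenly across the four cores, and borrow the 2GHz clock from SiFive's XM figures, since SiFive hasn't said the X390 cores themselves run at that speed.

```python
# Back-of-the-envelope: per-core share of 1TB/s in a four-core X390 Gen 2
# cluster. ASSUMPTIONS: 1TB/s = 10**12 bytes/s, an even split across cores,
# and a hypothetical 2GHz core clock borrowed from SiFive's XM figures.

CLUSTER_BYTES_PER_S = 1e12
CORES_PER_CLUSTER = 4
ASSUMED_CLOCK_HZ = 2e9        # hypothetical, see note above
VECTOR_REG_BYTES = 1024 // 8  # one 1,024-bit X390 vector register

per_core = CLUSTER_BYTES_PER_S / CORES_PER_CLUSTER
bytes_per_cycle = per_core / ASSUMED_CLOCK_HZ

print(f"Per core: {per_core / 1e9:.0f} GB/s")
print(
    f"Per cycle: {bytes_per_cycle:.0f} bytes "
    f"(~{bytes_per_cycle / VECTOR_REG_BYTES:.2f} vector registers)"
)
```

Under those assumptions, each core would be moving roughly one full 1,024-bit vector register's worth of data every clock cycle.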

SiFive is positioning the X390 both as a standalone AI accelerator core, presumably leaning heavily on its massive vector registers, and as an ACU, a role that also benefits from the SSCI we mentioned earlier.

Don’t forget SiFive’s take-and-bake AI accelerators

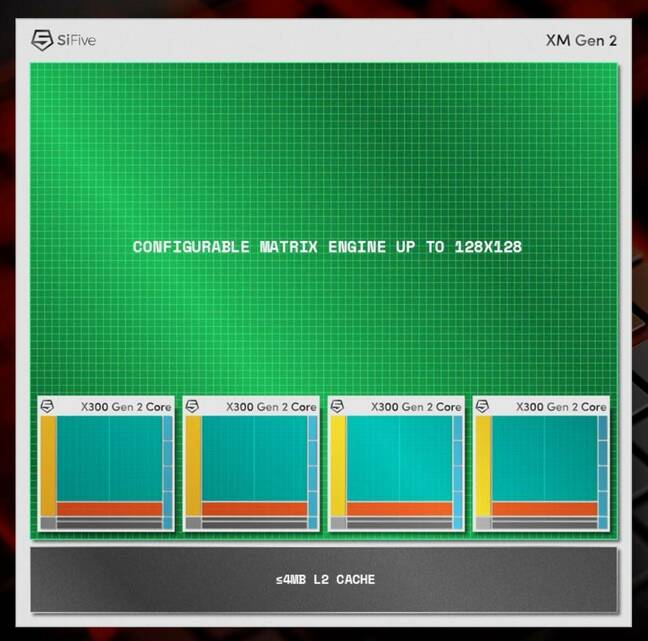

Finally, SiFive has also upgraded the XM family of take-and-bake AI accelerator IP to use its X390 Gen 2 cores. We first looked at SiFive's XM products, which serve as a blueprint for building a scalable AI accelerator, last summer.

SiFive's take 'n' bake AI accelerator designs have been upgraded to make use of its second-gen X300 cores.

SiFive’s Gen 2 XM clusters now combine its in-house matrix math engine with four of its updated X390 cores. According to SiFive, each XM cluster can deliver 64 teraFLOPS of FP8 performance at 2GHz and can be scaled up to support chips with more than 4 petaFLOPS of AI performance.
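
SiFive's numbers invite a little arithmetic. The sketch below works out how many XM clusters it takes to pass 4 petaFLOPS and how much FP8 work each cluster has to retire per clock. The MAC figure assumes the common convention of counting one multiply-accumulate as two FLOPs; SiFive hasn't said how it counts.

```python
import math

# Figures quoted by SiFive for the Gen 2 XM cluster.
TFLOPS_PER_CLUSTER = 64   # FP8 teraFLOPS per cluster
CLOCK_HZ = 2e9            # at 2GHz
TARGET_PFLOPS = 4         # "more than 4 petaFLOPS" per chip

# Minimum number of clusters needed to reach the 4 petaFLOPS mark.
clusters_needed = math.ceil(TARGET_PFLOPS * 1000 / TFLOPS_PER_CLUSTER)

# FP8 operations each cluster must retire every clock cycle.
flops_per_cycle = TFLOPS_PER_CLUSTER * 1e12 / CLOCK_HZ

# ASSUMPTION: one multiply-accumulate counted as two FLOPs.
macs_per_cycle = flops_per_cycle / 2

print(f"Clusters for {TARGET_PFLOPS} PFLOPS: {clusters_needed}")
print(f"FP8 FLOPs per cycle per cluster: {flops_per_cycle:,.0f}")
print(f"Implied FP8 MACs per cycle per cluster: {macs_per_cycle:,.0f}")
```

Since 4,000 divided by 64 is 62.5, a chip needs at least 63 clusters to get there; a tidier 64-cluster design would land at 4,096 teraFLOPS.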

All of these CPU core and accelerator designs are now available to license, with the first customer silicon based on them expected to hit the market sometime in Q2 2026. ®