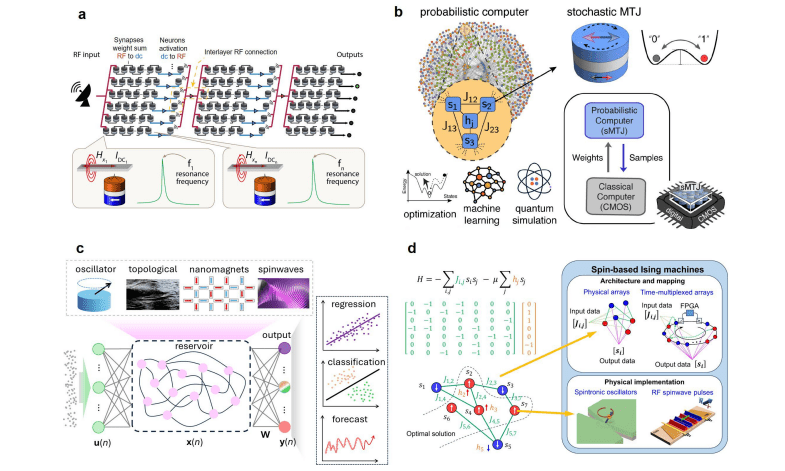

Spin-based computing represents a promising pathway towards faster, more energy-efficient data processing, and a comprehensive review of recent progress in this field now clarifies its potential. Hidekazu Kurebayashi from University College London, Giovanni Finocchio from the University of Messina, and Karin Everschor-Sitte from the University of Duisburg-Essen, alongside Jack C. Gartside and colleagues, present a detailed analysis of how manipulating electron spin can create innovative computational architectures. Their work explores the development of fundamental building blocks, including spin-based neurons and probabilistic bits, and examines broader systems like reservoir computing and magnetic Ising machines. By connecting computing performance directly to the physical properties of these devices, the team highlights the significant opportunities and remaining challenges in realising the full potential of spin-based computing for future technologies.

Physical Systems as Recurrent Neural Networks

Researchers are investigating Physical Reservoir Computing, a machine learning approach that implements recurrent neural networks with physical systems rather than in software. The approach promises gains in energy efficiency and speed, and it can exploit the complex dynamics that physical systems already exhibit. The review surveys physical systems suitable for building such reservoirs, assesses their computational capabilities, and covers applications including time series prediction and pattern recognition. In Reservoir Computing, a fixed recurrent network, the reservoir, maps input data into a higher-dimensional state space. The reservoir itself is never trained. Only a simple readout layer, typically linear, learns to map these states to the desired outputs.
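
The following sketch makes the division of labour concrete with a minimal software echo state network. It assumes a tanh reservoir with random Gaussian weights and stands in for a physical reservoir; it is not any system from the review. The function names (`make_reservoir`, `run_reservoir`, `train_readout`) and all parameter values are illustrative choices. Only `w_out` is fitted. The recurrent weights `W` and input weights `W_in` stay fixed, as they would in hardware.

```python
import numpy as np

def make_reservoir(n_nodes=100, spectral_radius=0.9, input_scale=0.5, seed=0):
    """Fixed random recurrent and input weights; these are never trained."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius,
    # a common heuristic for obtaining fading memory.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    W_in = rng.uniform(-input_scale, input_scale, size=n_nodes)
    return W, W_in

def run_reservoir(W, W_in, u):
    """Drive the reservoir with a scalar input sequence u and record every state."""
    x = np.zeros(W.shape[0])
    states = np.empty((len(u), W.shape[0]))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + W_in * u_t)
        states[t] = x
    return states

def add_bias(states):
    return np.hstack([states, np.ones((len(states), 1))])

def train_readout(states, targets, ridge=1e-6):
    """Ridge regression: the only trained part of the whole system."""
    X = add_bias(states)
    return np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ targets)

def nmse(pred, target):
    return np.mean((pred - target) ** 2) / np.var(target)

# Task: one-step-ahead prediction of a quasi-periodic signal.
t = np.arange(2000)
u = np.sin(0.2 * t) + 0.5 * np.sin(0.311 * t)
W, W_in = make_reservoir()
states = run_reservoir(W, W_in, u[:-1])  # state at step t has seen u[0..t]
targets = u[1:]                          # ...and should predict u[t+1]
washout, split = 100, 1500               # discard the initial transient
w_out = train_readout(states[washout:split], targets[washout:split])
pred = add_bias(states[split:]) @ w_out
test_nmse = nmse(pred, targets[split:])
print(f"test NMSE: {test_nmse:.2e}")
```

In a physical implementation, `run_reservoir` would be replaced by driving the device and measuring its node responses. The ridge-regression readout would stay the same.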

The systems under investigation are diverse, ranging in scale from a few to thousands of physical nodes. Key metrics for evaluating them include kernel rank and generalization rank. Kernel rank measures how richly the reservoir separates different inputs. Generalization rank measures how little the reservoir separates inputs that differ only by small perturbations, and therefore its robustness; a lower generalization rank is better. Information Processing Capacity assesses how well linear combinations of the reservoir's states can reconstruct linear and nonlinear functions of past inputs. The work concludes that Physical Reservoir Computing is a promising route to energy-efficient, high-performance machine learning systems, but that standardized metrics and benchmarks are needed for effective comparison.
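
The three metrics can be estimated on a simulated reservoir, as sketched below. The sketch assumes an illustrative tanh network of the usual echo-state kind, defined here so the block stands alone. It counts singular values above 1% of the largest as the numerical rank. It computes only two terms of Information Processing Capacity: a linear target u(t-1) and a nonlinear one, the second Legendre polynomial of u(t-1). The thresholds, stream lengths and function names are hypothetical, and published studies differ in these details. The capacities here are in-sample estimates, so they are slightly optimistic.

```python
import numpy as np

def make_reservoir(n_nodes=50, spectral_radius=0.9, input_scale=0.5, seed=1):
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_nodes, n_nodes))
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    W_in = rng.uniform(-input_scale, input_scale, size=n_nodes)
    return W, W_in

def run_reservoir(W, W_in, u):
    x = np.zeros(W.shape[0])
    states = np.empty((len(u), W.shape[0]))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + W_in * u_t)
        states[t] = x
    return states

def effective_rank(M, rel_tol=1e-2):
    """Number of singular values above rel_tol times the largest one."""
    s = np.linalg.svd(M, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))

def final_states(W, W_in, streams):
    """One column per input stream: the reservoir state after the whole stream."""
    return np.column_stack([run_reservoir(W, W_in, u)[-1] for u in streams])

def capacity(states, target, ridge=1e-8):
    """1 - NMSE of the best linear reconstruction (in-sample)."""
    X = np.hstack([states, np.ones((len(states), 1))])
    w = np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ target)
    return 1 - np.mean((X @ w - target) ** 2) / np.var(target)

rng = np.random.default_rng(0)
W, W_in = make_reservoir()
m, length = 50, 30

# Kernel rank: unrelated input streams should give many independent states.
kernel = effective_rank(final_states(W, W_in, rng.uniform(-1, 1, size=(m, length))))

# Generalization rank: tiny perturbations of ONE stream should give near-identical states.
base = rng.uniform(-1, 1, size=length)
noisy = base + 1e-3 * rng.normal(size=(m, length))
generalization = effective_rank(final_states(W, W_in, noisy))

# Two capacity terms: linear u(t-1) and nonlinear P2(u(t-1)) = (3u^2 - 1) / 2.
u = rng.uniform(-1, 1, size=3000)
S = run_reservoir(W, W_in, u)[100:]  # drop a 100-step washout
u_prev = u[99:-1]                    # u(t-1) aligned with S
c_linear = capacity(S, u_prev)
c_quadratic = capacity(S, 0.5 * (3 * u_prev ** 2 - 1))
print(kernel, generalization, round(c_linear, 3), round(c_quadratic, 3))
```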

Stochastic Magnetic Tunnel Junctions for Probabilistic Computing

Scientists are developing a new form of computing based on probabilistic bits, or p-bits, built from stochastic magnetic tunnel junctions (s-MTJs). These devices harness randomness as a computational resource, mirroring a concept originally proposed for simulating probabilistic systems. These p-bits have been used to emulate the transverse field Ising model and applied to tasks such as combinatorial optimization and machine learning. Device work has focused on engineering s-MTJs to optimize their stochastic behavior and key performance metrics. Experiments have shown random telegraph noise on the nanosecond timescale. This fluctuation time sets how quickly fresh random samples can be generated, so it directly influences computation speed.
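
A common behavioural model of a p-bit outputs m = sgn(tanh(I) - r). Here I is the input and r is drawn uniformly from [-1, 1], so the average output is tanh(I). The sketch below checks that relation numerically. It assumes this textbook model rather than any specific device. In s-MTJ hardware, r would come from the junction's telegraph noise, whereas here NumPy's random generator stands in for it. `pbit` is a hypothetical name.

```python
import numpy as np

def pbit(I, rng):
    """Hypothetical behavioural p-bit: +1 with probability (1 + tanh(I)) / 2, else -1."""
    r = rng.uniform(-1.0, 1.0, size=np.shape(I))  # stand-in for device noise
    return np.where(np.tanh(I) > r, 1, -1)

rng = np.random.default_rng(0)
inputs = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
n_samples = 200_000
# Repeat each input across many independent draws of r.
samples = pbit(np.repeat(inputs[:, None], n_samples, axis=1), rng)
means = samples.mean(axis=1)
for I, mean in zip(inputs, means):
    print(f"I = {I:+.1f}: <m> = {mean:+.3f}, tanh(I) = {np.tanh(I):+.3f}")
```

Each independent sample requires the junction to have fluctuated at least once. The device's fluctuation time therefore bounds how many such samples per second a hardware p-bit can deliver.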

Designs such as synthetic antiferromagnetic structures and double free-layer configurations have been explored to enhance robustness and minimize sensitivity to external fields and temperature. Proof-of-concept systems have grown from eight p-bits for integer factorization to 80 p-bits for solving travelling salesman problems. To overcome limits on p-bit count and random number generation speed, researchers have turned to software simulations, digital circuit emulations, and heterogeneous s-MTJ/CMOS systems. Electrical coupling of multiple s-MTJs has been investigated for compact and energy-efficient implementations. Alternative p-bit constructions have been developed to achieve arbitrary switching probabilities.
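
Combinatorial optimization with p-bits typically couples them through an Ising energy. The p-bits are then updated one at a time while the effective inverse temperature `beta` is raised. The sketch below applies this scheme to Max-Cut on a small graph, a stand-in for larger problems such as the travelling salesman demonstrations, and checks the answer by brute force. The graph, the annealing schedule `betas` and the function names are assumptions for illustration. Hardware p-bit systems update in parallel or through clocked circuits, and this serial loop does not model that.

```python
import itertools
import numpy as np

def energy(J, h, m):
    """Ising energy E = -1/2 m.J.m - h.m (J symmetric with zero diagonal)."""
    return -0.5 * m @ J @ m - h @ m

def cut_value(A, m):
    """Number of edges whose endpoints carry opposite spins."""
    return 0.25 * np.sum(A * (1 - np.outer(m, m)))

def pbit_anneal(J, h, betas, rng):
    """Sequential p-bit updates while slowly raising beta; keeps the best state seen."""
    n = len(h)
    m = rng.choice([-1, 1], size=n)
    best_m, best_E = m.copy(), energy(J, h, m)
    for beta in betas:
        for i in rng.permutation(n):
            I_i = beta * (J[i] @ m + h[i])  # local field seen by p-bit i
            m[i] = 1 if np.tanh(I_i) > rng.uniform(-1, 1) else -1
        E = energy(J, h, m)
        if E < best_E:
            best_m, best_E = m.copy(), E
    return best_m

# Max-Cut on a triangular prism: antiferromagnetic couplings J = -A, no bias.
edges = [(0, 1), (1, 2), (2, 0), (3, 4), (4, 5), (5, 3), (0, 3), (1, 4), (2, 5)]
n = 6
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
J, h = -A, np.zeros(n)

rng = np.random.default_rng(0)
betas = np.linspace(0.1, 3.0, 200)
m_best = pbit_anneal(J, h, betas, rng)
found = cut_value(A, m_best)
optimum = max(cut_value(A, np.array(s)) for s in itertools.product([-1, 1], repeat=n))
print(f"p-bit cut = {found:.0f}, brute-force optimum = {optimum:.0f}")
```

With J = -A, the energy equals the number of edges minus twice the cut. Minimizing it is therefore the same as maximizing the cut.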

Physical Reservoirs Successfully Perform Machine Learning Tasks

Significant advances are being made in integrating magnetic and spintronic elements into computational architectures such as reservoir computing and magnetic Ising machines. Experiments demonstrate that physical reservoirs can perform machine learning tasks including signal transformation and the classification of handwritten and spoken digits. Statistical analysis is used to visualize how results on these tasks correlate with task-independent metrics. Comparisons of reservoir performance show that simulated reservoirs consistently outperform experimental systems because they are free of experimental noise and drift, which highlights the importance of reproducibility.
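
The effect of noise can be illustrated with the sine-to-square-wave transformation, a common signal-transformation benchmark. The sketch below trains the same linear readout on a simulated tanh reservoir twice. The first run uses the exact states. The second adds Gaussian noise to every measured state, a hypothetical stand-in for experimental read-out noise; drift is not modelled. The noise level, network size, signal period and function names are illustrative assumptions.

```python
import numpy as np

def make_reservoir(n_nodes=100, spectral_radius=0.9, input_scale=0.5, seed=0):
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_nodes, n_nodes))
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    W_in = rng.uniform(-input_scale, input_scale, size=n_nodes)
    return W, W_in

def run_reservoir(W, W_in, u):
    x = np.zeros(W.shape[0])
    states = np.empty((len(u), W.shape[0]))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + W_in * u_t)
        states[t] = x
    return states

def fit_and_score(S_train, y_train, S_test, y_test, ridge=1e-6):
    """Train a ridge readout on the training split; return NMSE on the test split."""
    Xtr = np.hstack([S_train, np.ones((len(S_train), 1))])
    Xte = np.hstack([S_test, np.ones((len(S_test), 1))])
    w = np.linalg.solve(Xtr.T @ Xtr + ridge * np.eye(Xtr.shape[1]), Xtr.T @ y_train)
    return np.mean((Xte @ w - y_test) ** 2) / np.var(y_test)

t = np.arange(3000)
u = np.sin(2 * np.pi * t / 25 + 0.1)  # phase offset keeps sin(.) away from exactly 0
y = np.where(u >= 0, 1.0, -1.0)       # square-wave target
W, W_in = make_reservoir()
states = run_reservoir(W, W_in, u)[100:]  # drop a 100-step washout
y = y[100:]
split = 2000

rng = np.random.default_rng(1)
results = {}
for label, std in [("simulated (noise-free)", 0.0), ("noisy readout", 0.05)]:
    measured = states + std * rng.normal(size=states.shape)
    results[label] = fit_and_score(measured[:split], y[:split], measured[split:], y[split:])
    print(f"{label}: test NMSE = {results[label]:.3e}")
```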

An analysis of the time delays used to enhance nonlinearity shows that even a linear system probed by white noise can yield high nonlinearity. Benchmarks of magnetic Ising machines focus on metrics relevant to solving complex optimization problems, including the total number of spins and the coupling connectivity. For oscillator-based Ising machines, the time-to-solution is governed by the amplitude relaxation frequency, which allows amplitude and phase to change on nanosecond timescales. In time-multiplexed spin-wave systems, the time for a single solution scales with the delay of the spin-wave delay line and depends logarithmically on system size. Energy consumption estimates account for the power drawn by both the magnetic devices and the supporting electronics, with potential for lower consumption thanks to passive spin coupling.
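
Oscillator-based Ising machines are often modelled by phase equations. Coupled oscillators are pushed toward phases 0 or π by second-harmonic injection locking, and the resulting phase pattern encodes the Ising spins. The sketch below integrates such a phase-only model for Max-Cut on a 4-cycle. It then converts repeated runs into a time-to-solution using the widely used definition TTS = t_run * ln(1 - 0.99) / ln(1 - p), where p is the per-run success probability. This is a toy built on several assumptions. Amplitude dynamics are omitted, and time is measured in units of 1/K rather than nanoseconds. The coupling strength `K`, the injection ramp `ks_max` and the function names are all hypothetical.

```python
import numpy as np

def oim_run(J, rng, K=1.0, ks_max=0.8, dt=0.02, steps=1500):
    """Euler-integrate one run of the phase model and return spins of +/-1."""
    n = J.shape[0]
    phi = rng.uniform(0.0, 2 * np.pi, size=n)
    for k in range(steps):
        ks = ks_max * min(1.0, 2.0 * k / steps)  # ramp injection locking, then hold
        diff = phi[:, None] - phi[None, :]       # diff[i, j] = phi_i - phi_j
        # Gradient descent on -K sum_{i<j} J_ij cos(phi_i - phi_j) - (ks/2) sum_i cos(2 phi_i)
        dphi = -K * np.sum(J * np.sin(diff), axis=1) - ks * np.sin(2 * phi)
        phi = phi + dt * dphi
    return np.where(np.cos(phi) >= 0, 1, -1)

def cut_value(A, m):
    return 0.25 * np.sum(A * (1 - np.outer(m, m)))

def time_to_solution(t_run, p_success, target=0.99):
    """Run length times the number of runs needed to succeed with probability `target`."""
    if p_success >= target:
        return t_run
    if p_success == 0:
        return float("inf")
    return t_run * np.log(1 - target) / np.log(1 - p_success)

# Max-Cut on a 4-cycle, whose optimum cuts all 4 edges; couplings J = -A.
A = np.array([[0, 1, 0, 1],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [1, 0, 1, 0]], dtype=float)
J = -A
rng = np.random.default_rng(0)
n_runs, dt, steps = 20, 0.02, 1500
cuts = [cut_value(A, oim_run(J, rng, dt=dt, steps=steps)) for _ in range(n_runs)]
p_success = np.mean(np.array(cuts) == 4)
tts = time_to_solution(dt * steps, p_success)
print(f"success probability = {p_success:.2f}, TTS(99%) = {tts:.1f} (units of 1/K)")
```

Keeping `ks_max` below `K` is a design choice for this toy. On this small graph it leaves the optimal cut as the only stable binarized state.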

Spin Computation, Low Power, and Architectures

This review charts significant advances in spin-based computing, exploring how the properties of electron spin can be harnessed for energy-efficient, high-performance computation. It surveys approaches ranging from fundamental components such as spintronic neurons and probabilistic bits to larger architectures such as reservoir computing and magnetic Ising machines, and it establishes clear links between physical properties and computational performance. Certain nanoscale oscillator implementations, specifically spin Hall and spin-transfer nano-oscillators, exhibit low power consumption, in the milliwatt range. While acknowledging the considerable progress, the authors highlight key challenges that remain before widespread implementation.

A central challenge is the co-integration of spintronic devices with existing CMOS technology. This requires identifying competitive functionalities, solving interconnect scaling, and finding energy-efficient coupling mechanisms. Future research directions include replacing external software components with hardware and developing efficient on-chip time-multiplexing techniques to reduce circuit complexity. Standardized manufacturing protocols, simulation tools tailored to the physical properties of spintronic devices, and metrics that account for the energy cost of peripheral circuitry will be crucial for accelerating this development.
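
Time multiplexing replaces many physical nodes with one nonlinear node. That node's response is sampled in successive time slots, called virtual nodes, and a delay line supplies the feedback. The sketch below assumes a simplified discrete model. An input mask spreads each input over the slots, and each virtual node receives the previous value of its neighbouring slot, which is what happens when the delay is one slot longer than the input period. It omits the inertial coupling between adjacent slots present in real devices. The mask, `eta`, `gamma` and the function names are illustrative assumptions. The score is 1 - NMSE for linearly recalling the input from `delay` steps earlier.

```python
import numpy as np

def delay_reservoir(u, n_virtual=50, eta=0.8, gamma=0.2, seed=0):
    """One nonlinear node, time-multiplexed into n_virtual virtual nodes."""
    rng = np.random.default_rng(seed)
    mask = rng.choice([-1.0, 1.0], size=n_virtual)  # input mask, one value per slot
    delay_line = np.zeros(n_virtual)                # node outputs from the previous period
    states = np.empty((len(u), n_virtual))
    for n, u_n in enumerate(u):
        new = np.empty(n_virtual)
        for i in range(n_virtual):                  # the SAME node is reused in every slot
            fed_back = delay_line[i - 1]            # slot 0 wraps around to the last slot
            new[i] = np.tanh(eta * fed_back + gamma * mask[i] * u_n)
        delay_line = new
        states[n] = new
    return states

def recall_score(states, u, delay, washout=100, split=2000, ridge=1e-6):
    """1 - NMSE of a ridge readout reconstructing u(n - delay), on held-out data."""
    X = np.hstack([states, np.ones((len(states), 1))])[washout:]
    y = u[washout - delay: len(u) - delay]
    Xtr, ytr, Xte, yte = X[:split], y[:split], X[split:], y[split:]
    w = np.linalg.solve(Xtr.T @ Xtr + ridge * np.eye(X.shape[1]), Xtr.T @ ytr)
    return 1 - np.mean((Xte @ w - yte) ** 2) / np.var(yte)

rng = np.random.default_rng(1)
u = rng.uniform(-1, 1, size=3000)
states = delay_reservoir(u)
score_1 = recall_score(states, u, delay=1)
score_5 = recall_score(states, u, delay=5)
print(f"recall u(n-1): {score_1:.3f}, recall u(n-5): {score_5:.3f}")
```

The inner loop is written serially to mirror the single physical node. Here each slot depends only on the previous period, so the same computation could be vectorised in software.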