Open any newspaper and you will find at least a few articles on artificial intelligence (AI). Everyone wants in, from investors and entrepreneurs to end users, but this colossal revolution may have reached a structural inflection point. AI success no longer depends only on language models, software, investment or semiconductors; it will increasingly depend on how much electricity we can bring online quickly and sustainably.

As hyperscalers, chipmakers, and AI-first firms compete to build the computational capacity of tomorrow’s economy, they face an “uncomfortable truth”: the energy infrastructure required to power this tech revolution may not exist at the scale or speed Silicon Valley expects.

The acceleration in AI infrastructure investment

The numbers are staggering. Data centres consumed about 1.5 per cent of global electricity in 2024, according to the International Energy Agency (IEA), and demand continues to grow at double-digit rates. The IEA expects data centres to use 945 terawatt-hours (TWh) in 2030, roughly equivalent to Japan’s annual electricity consumption. In the U.S., data-centre electricity use increased from about 58 TWh in 2014 to 176 TWh in 2023, with projections suggesting 325–580 TWh by 2028. Globally, Morgan Stanley analysts expect nearly $3 trillion of cumulative investment in data centre infrastructure by 2028, including hyperscale and AI training facilities, yet all of that capacity depends on sufficient electricity supply and grid capacity.

Financial markets are racing to support this expansion with long-term capacity contracts, private equity, equipment-backed lending, and structured securitizations of future power or compute revenue streams. These structures enable rapid scaling but also create systemic risks that seem to be overlooked. Investors are betting that today’s generation of AI hardware will remain economically valuable long enough to justify large capital allocations, a bet that depends on both technology performance and energy abundance.

Mega-projects such as “Stargate,” led by OpenAI with partners including Microsoft, Oracle, SoftBank and investors from the UAE, demonstrate the tension between technological ambition and reality. Early-phase deployments are capital-intensive, while later capacity depends on regulatory approvals, energy contracts, transmission upgrades and environmental compliance. The mismatch between contractual financial commitments and the delivery of power capacity illustrates the scale of the infrastructure challenge facing the AI industry.

The Real Bottleneck: Power infrastructure and location strategy

The constraint facing AI infrastructure isn’t fibre-optic capacity or available land; it’s electrical power. U.S. Interior Secretary Doug Burgum spoke about this at a recent industry conference: “To win the AI arms race against China, we’ve got to figure out how to build these artificial intelligence factories close to where the power is produced, and just skip the years of trying to get permitting for pipelines and transmission lines.”

This represents a fundamental shift in data centre development strategy. The next generation of AI facilities will be built where power is available, not where land is cheap, like the mega-project led by Elon Musk in Memphis known as Colossus 2. Amazon has already signed deals with Dominion Energy near the North Anna nuclear power station in Virginia and expanded partnerships with Talen Energy at the Susquehanna nuclear plant. Sites within transmission distance of existing nuclear facilities are becoming far more valuable, while traditional site selection criteria are being replaced by energy supply reliability.

The timing mismatch compounds the challenge. Data centres take two to three years to build, while power system upgrades require approximately eight years. According to a CoStar analysis, data centres will account for up to 60 per cent of total power load growth through 2030, creating a structural supply-demand imbalance that forces developers either to delay projects or to locate near existing power plants, regardless of other site characteristics.

Community resistance adds another layer of complexity. Data Center Watch estimates $64 billion in data centre projects were blocked or delayed over a recent two-year period, with 142 activist groups across 24 states organizing against development. Northern Virginia, the U.S.’s largest data center market, hosts 42 separate activist groups opposing projects. Concerns range from water consumption and utility cost increases to noise pollution and loss of open space. The result: sites with existing power capacity and community support have become far more valuable than sites with merely favourable zoning and available land.

OpenAI has signed approximately $1 trillion worth of contracts for computing power to support its AI operations, including agreements with AMD, Nvidia, Oracle and CoreWeave. These agreements aim to give OpenAI access to more than 20 gigawatts of computing capacity by 2035, roughly the output of 20 nuclear reactors. OpenAI’s investments are aimed at improving and scaling services like ChatGPT, but the financial commitments far exceed its revenue. Although OpenAI has raised $47 billion in venture funding and $4 billion in debt and is valued at $500 billion, the company is expected to lose $10 billion this year. Experts question its financial sustainability, noting its high capital intensity and uncertain path to profitability.

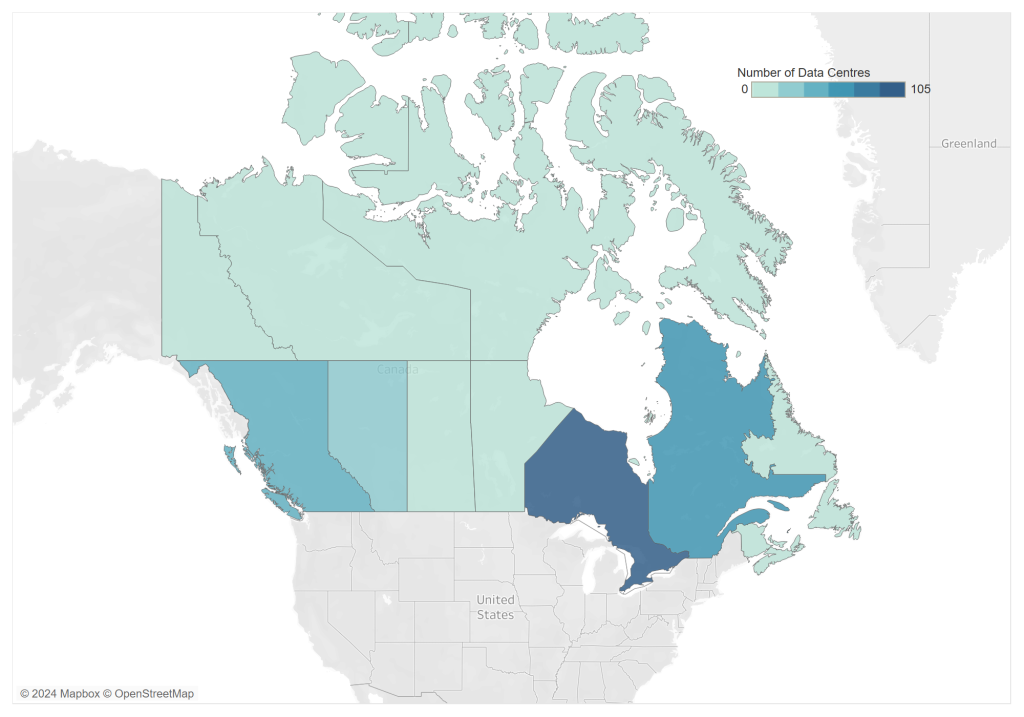

This map illustrates the concentration of data centres in each Canadian province. The darkest shade indicates the highest number of data centres and the lightest shade the fewest. Ontario has the most, followed by Quebec and British Columbia. Source: Data Center Map – Canada Data Centers

The AI energy demand surge and climate considerations

A new report from global risk firm DNV projects that power demand from AI-driven data centres will rise tenfold by 2030. The U.S. and Canada are expected to be the primary drivers of this surge in energy consumption. Despite this significant increase, DNV states that AI will likely still account for less than three per cent of global electricity use by 2040, less than sectors such as electric vehicle charging and building cooling. The report is based on an analysis of more than 50 global data centre energy estimates and places DNV on the more conservative side of projections.

BloombergNEF projects that U.S. data-centre power demand will at least double by 2035, rising from almost 35 gigawatts in 2024 to 78 gigawatts. Energy consumption growth will be even steeper, with average hourly electricity demand nearly tripling, from 16 gigawatt-hours in 2024 to 49 gigawatt-hours by 2035.

The climate externalities depend on how this energy demand is met. Many technology companies have committed to powering their projects with clean energy such as solar, leading to substantial investments in renewable energy projects and nuclear power partnerships. These commitments could significantly accelerate clean energy deployment. However, the rush to build AI infrastructure creates pressure to use whatever power is at hand, including more carbon-intensive sources. The tension between speed of deployment and emissions reduction goals further complicates energy planning, particularly in regions with ambitious climate targets.

The Canadian Context: Digital ambition meets grid reality

In Canada, this disconnect between digital ambition and physical infrastructure could become a serious challenge. Ottawa has championed AI as a strategic growth sector, releasing the world’s first national AI strategy in 2017 and following up with $2.4 billion in the 2024 federal budget, plus an additional $2 billion Canadian Sovereign AI Compute Strategy in December 2024. These investments have positioned Canada as a global leader, with world-renowned research clusters in Montreal, Toronto and Edmonton. Yet the country’s power planning hasn’t caught up with its technology ambitions. Hydro-Québec expects a 4.1 TWh increase in data centre demand from 2023 to 2032, while Ontario’s Independent Electricity System Operator lists data centre growth as a key driver of expected demand increases. Alberta’s grid operator has received numerous large-scale data centre proposals around Calgary and Edmonton, representing concentrated electricity demands that challenge existing infrastructure capacity.

Canada has abundant clean hydroelectric resources and potential for nuclear expansion that could power AI infrastructure sustainably. But tapping that potential requires close coordination between federal AI strategy, provincial energy planning and utility infrastructure investment. The environmental stakes are high: Canada aims to achieve net-zero emissions by 2050 and to reduce emissions by 40–45 per cent below 2005 levels by 2030. Adding significant new electricity demand from AI infrastructure, even if powered by renewable energy, competes with the electrification of transportation, heating and industrial processes that is essential to meeting these climate targets. Without a coordinated strategy, Canada risks seeing its $4.4 billion in AI investments constrained by energy infrastructure limitations, while potentially slowing progress toward its clean-economy goals.

See some similarities with the 1990s Internet boom and bust?

The current AI infrastructure boom is reminiscent of the fibre-optic expansion of the 1990s. At that time, large-scale investments were made in internet infrastructure such as data centres, fibre-optic cables and other equipment. Many of these investments were made without a full understanding of future demand and were driven by fear of missing out, leading to overcapacity, financial losses and, ultimately, the bursting of the dot-com bubble.

Similarly, the rapid scaling of AI infrastructure today is being driven by overly optimistic projections of future demand. While AI can revolutionize entire industries, the current pace of investment may be outpacing actual demand for AI services. Companies could end up with heavy investments in underutilized infrastructure, resulting in financial stress and potential market corrections.

To put it bluntly, the planned $3 trillion investment in AI infrastructure is as much a bet on energy and grid capacity as it is on machine learning innovation. Success will depend on an unlikely degree of coordination between technology firms, utilities, financial markets and governments. Failure could result not only in financial losses but in a fundamental recalibration of expectations about AI’s role in the global economy. The next five years will determine whether AI fulfills its transformative promise or runs up against the limits of the energy systems on which it depends.

Andrea Zanon is an Environmental, Social and Governance (ESG) strategy and resiliency advisor who has advised ministers of finance and more than 100 global corporations on how to build more resilient countries and societies.

Featured image credit: Getty Images