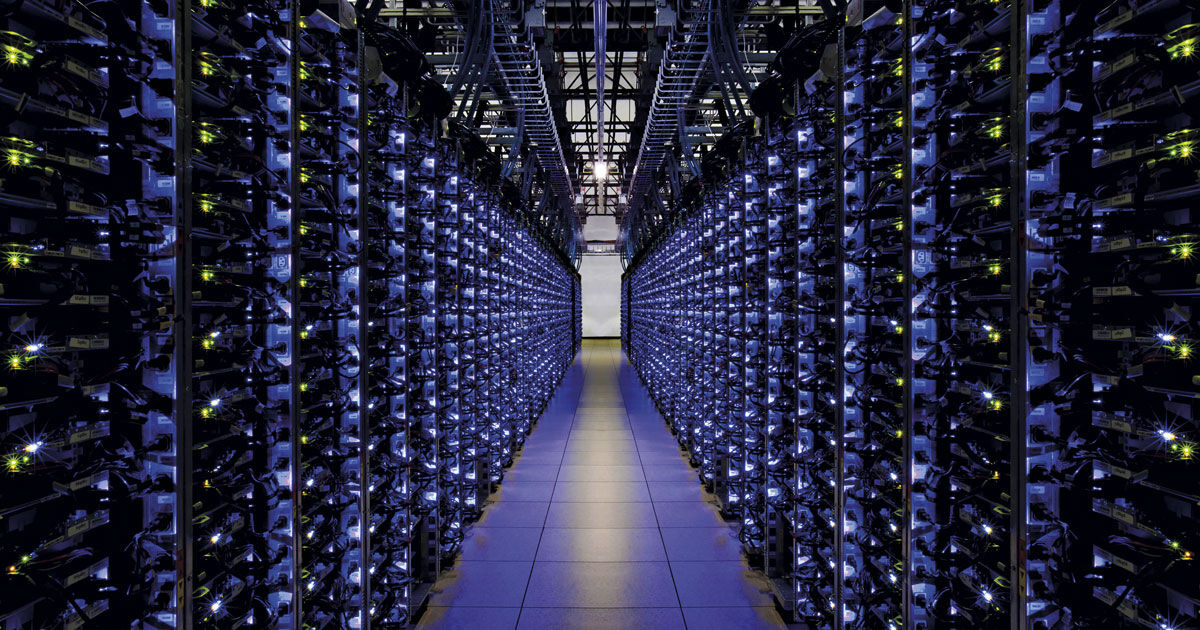

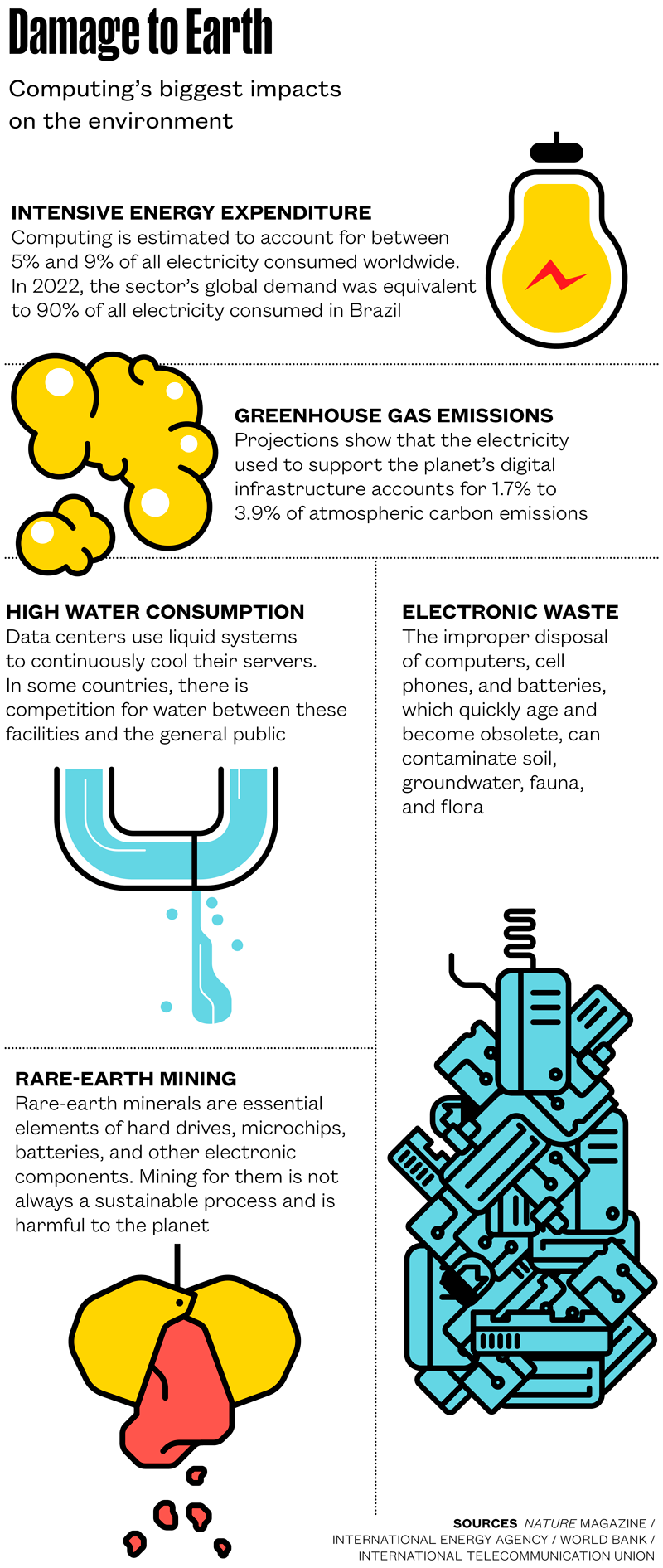

Computers, an essential part of modern life, are now everywhere. It is difficult to imagine a world without digital technology such as the internet, social media, video streaming services, artificial intelligence (AI), and the vast range of apps we use every day. Governments, organizations, and companies across all sectors increasingly rely on information and communication technologies (ICT). The rise in computational demand, however, is having an impact on the environment. The ICT sector is estimated to account for 5% to 9% of total electricity consumption worldwide, and the International Energy Agency (IEA) has warned of a major upward trend in this demand. According to the agency, the combined electricity consumption of data centers (facilities with enormous data storage and processing capacity), AI, and cryptocurrencies could double between 2022, when it amounted to 460 terawatt-hours (TWh), and 2026. For comparison, Brazil consumed 508 TWh of electricity in 2022.

“The use of energy is inherent to computing,” says Sarajane Marques Peres, a computer scientist from the School of Arts, Sciences, and Humanities at the University of São Paulo (EACH-USP) and a researcher at the C4AI Artificial Intelligence Center, funded by FAPESP and IBM. Individually, she points out, a computer does not require a large volume of energy, but thousands of machines together or many computers with powerful processors working 24 hours a day, seven days a week, represent a high demand.

“Every digital activity, such as browsing the internet, accessing social media, participating in video conferences, and sending photos to friends, ultimately has an effect on the environment,” says computer scientist Thais Batista, president of the Brazilian Computing Society (SBC) and a professor at the Department of Computer Science of the Federal University of Rio Grande do Norte (UFRN).

The energy supplied to data centers is used not only to operate the servers, but also to power cooling systems. “Because they are performing numerical processing nonstop, the computers warm up, emit heat, and need to be cooled to a reasonably low temperature,” explains Marcelo Finger, a computer scientist from USP’s Institute of Mathematics and Statistics (IME). “The level of damage to the environment depends on the sources of this energy,” says Peres, referring to the emission of carbon dioxide (CO₂) when fossil fuels are burned to generate the electricity used.
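Peres's point can be written as a simple product: operational emissions equal the energy consumed multiplied by the carbon intensity of the electricity supply. The sketch below applies that product to one hypothetical workload; the grid categories and their gram-per-kilowatt-hour values are illustrative assumptions, not measured figures for any real grid.

```python
# Hypothetical grid carbon intensities, in grams of CO2 per kilowatt-hour.
# These are illustrative placeholders, not measured values for any real grid.
GRID_INTENSITY_G_PER_KWH = {
    "mostly_hydro": 100.0,
    "mixed": 400.0,
    "mostly_coal": 900.0,
}


def emissions_tonnes(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational CO2 emissions in tonnes = energy used x carbon intensity of the supply."""
    return energy_kwh * intensity_g_per_kwh / 1_000_000  # grams -> tonnes


# The same hypothetical workload: 1 GWh (1,000,000 kWh) per year.
annual_energy_kwh = 1_000_000.0
results = {
    grid: emissions_tonnes(annual_energy_kwh, g)
    for grid, g in GRID_INTENSITY_G_PER_KWH.items()
}
for grid, tonnes in results.items():
    print(f"{grid}: {tonnes:.0f} t CO2 per year")
```

The computation is identical in every case; only the energy source changes, and with it the emissions, by a factor of nine in this made-up example.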

The World Bank and the International Telecommunication Union, a United Nations (UN) agency, estimate that the computing sector accounts for at least 1.7% of all global greenhouse gas emissions. “Other studies warn of an even higher number, around 4%,” says computer scientist Emilio de Camargo Francesquini, from the Center for Mathematics, Computing, and Cognition at the Federal University of ABC (UFABC).

Alexandre Affonso / Revista Pesquisa FAPESP

Google, Microsoft, Apple, Amazon, and other big tech companies have committed to zero carbon emissions by 2030, but according to experts interviewed for this report, there is no evidence that such an objective is achievable. In 2023, the most recent year with available data, emissions from these companies grew, primarily as a result of advances in AI systems, the training and use of which require a lot of processing power—and therefore a lot of electricity.

Rising energy consumption and carbon emissions are not the only cause for concern. The heavy use of water in data center cooling systems and the emission of heat into the environment are raising another red flag. “Water consumption is a more recent concern, since most large data centers use liquid cooling for their enormous equipment,” says Álvaro Luiz Fazenda of the Institute of Science and Technology at the Federal University of São Paulo (UNIFESP), São José dos Campos campus. One solution is to use non-potable water sources for cooling.

Ecosystems are also feeling the pressure of the often unsustainable exploitation of rare earth elements and other minerals—such as silicon, copper, and lithium—used to produce hard drives, microchips, and batteries, and the disposal of computers, cell phones, and other electronic devices that quickly become obsolete. “Information and communication technologies are among the largest consumers of energy and natural resources, in addition to leading the way in the generation of electronic waste containing hazardous substances,” summarizes Francesquini.

Seeking to tackle the problem, a new field of study known as green or sustainable computing has been growing in Brazil and across the world. “It refers to the practices, techniques, and procedures applied to the manufacture, use, and disposal of computer systems with the aim of minimizing their environmental impact,” explains the UFABC researcher.

Several approaches have been proposed to achieve this goal: increasing the energy efficiency of hardware and software so that they perform the same operations while consuming less energy; designing more durable, repairable, and recyclable systems to reduce the generation of electronic waste; and prioritizing sustainable materials in the production and use of computing devices, as well as renewable energy sources in data centers.

Aerial view of a Tesla data center in Shanghai, China, which opened in 2021 (VCG / VCG via Getty Images)

The impact of AI

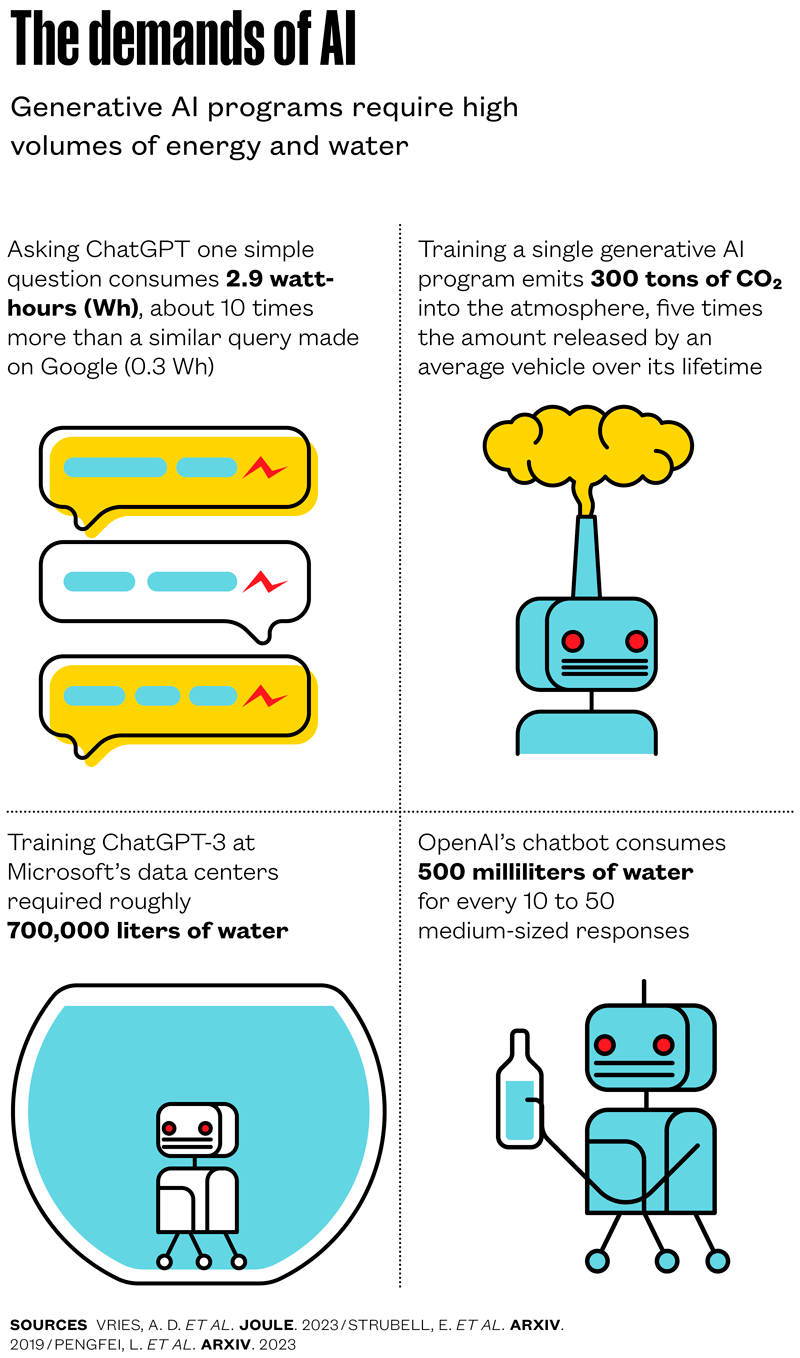

An increasing body of research suggests that the explosion of AI, both generative (such as large language models, or LLMs, like ChatGPT) and predictive, together with its associated infrastructure, is a major cause of the sector’s growing environmental footprint. Every prompt given to an AI chatbot triggers an enormous number of calculations (billions for every word a large model generates) as the model decides on the most appropriate words to present in its response. This requires intense processing power.
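To give a sense of scale, a common back-of-the-envelope rule is that a language model performs roughly two floating-point operations (one multiplication and one addition) per parameter for each token it generates. The sketch below applies that rule of thumb to an assumed model with 175 billion parameters (the size of GPT-3) and an assumed 500-token answer; it ignores attention overhead, batching, and hardware efficiency, so it is an order-of-magnitude estimate only.

```python
def approx_inference_flops(num_parameters: float, tokens_generated: int) -> float:
    """Rule-of-thumb estimate: about 2 floating-point operations per model
    parameter for each generated token. Ignores attention cost, batching,
    and hardware details, so treat the result as an order of magnitude."""
    return 2.0 * num_parameters * tokens_generated


# Assumptions: a GPT-3-sized model (175 billion parameters) writing a 500-token answer.
flops = approx_inference_flops(175e9, 500)
print(f"{flops:.2e} floating-point operations for one answer")
```

Each token alone costs about 350 billion operations under these assumptions, which is why a single chatbot reply keeps powerful processors busy.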

A study published in Joule by Dutch researchers in October 2023 estimated that asking ChatGPT one simple question consumes about 3 watt-hours (Wh), almost 10 times more energy than a comparable Google search. This may seem like a small amount, but it becomes significant considering that the chatbot has more than 400 million weekly active users, who submit more than one billion queries per day.
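Multiplying the per-query estimate by the daily query volume shows why a few watt-hours matter. The sketch below uses the figures quoted above; the 0.3 Wh value for a search is an assumption derived from the "almost 10 times" comparison, and because the text says "more than one billion queries", the totals are lower bounds.

```python
WH_PER_CHATGPT_QUERY = 3.0       # estimate quoted in the text (Joule, 2023)
WH_PER_GOOGLE_SEARCH = 0.3       # assumption: roughly one tenth, per "almost 10 times"
QUERIES_PER_DAY = 1_000_000_000  # "more than one billion queries per day" (a lower bound)

daily_wh = WH_PER_CHATGPT_QUERY * QUERIES_PER_DAY
daily_gwh = daily_wh / 1e9            # 1 GWh = 1e9 Wh
annual_twh = daily_wh * 365 / 1e12    # 1 TWh = 1e12 Wh
extra_vs_search_gwh = (WH_PER_CHATGPT_QUERY - WH_PER_GOOGLE_SEARCH) * QUERIES_PER_DAY / 1e9

print(f"Per day: {daily_gwh:.1f} GWh")
print(f"Per year: {annual_twh:.1f} TWh")
print(f"Extra per day compared with the same number of searches: {extra_vs_search_gwh:.1f} GWh")
```

Under these assumptions, chatbot queries alone would use about 3 GWh per day, or roughly 1.1 TWh per year.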

Another study, by scientists from the University of California, Riverside, and the University of Texas at Arlington, assessed the water footprint of AI tools. They estimated that training GPT-3 at a Microsoft data center in the USA could consume up to 700,000 liters of clean water. In operation, the model consumes the equivalent of a 500-milliliter bottle of water for every 10 to 50 medium-length responses, depending on when and where the calculations are performed.

The same study, shared on the arXiv platform in April 2023, estimated that in 2027, the global water demand of servers running AI programs will be between 4.2 billion and 6.6 billion cubic meters, equivalent to half of the UK’s entire water consumption in 2023. “This is concerning, as freshwater scarcity has become one of the most pressing challenges,” the authors wrote. “To respond to the global water challenges, AI can, and also must, take social responsibility and lead by example by addressing its own water footprint.”

An employee examines electronic waste at a recycling facility in Massachusetts, USA (Zoran Milich / Getty Images)

Researchers at the Chinese startup DeepSeek have also sought to reduce the energy consumption of AI systems. The DeepSeek-V3 chatbot, launched at the end of January, surprised many with performance comparable to that of models from OpenAI and Google, at a substantially lower cost.

“DeepSeek shows that it is possible to develop high-quality AI using fewer computational resources and less energy,” says EACH-USP computer scientist Daniel de Angelis Cordeiro. “Investing in research into more efficient algorithms and better management of the computational resources used in the training and inference stages could help create a more sustainable AI.”

According to a scientific article published by DeepSeek’s developers in January, they reduced consumption by improving the load-balancing algorithms used in the training stage, which made better use of computational resources without harming performance. They also used mixed-precision algorithms to optimize processing and communication during training. Load-balancing algorithms distribute a computational task among different servers, while mixed-precision algorithms carry out part of the calculations with fewer bits, which speeds up processing and reduces memory usage while preserving the accuracy of the results.

“The open-source platform is based on a large language model and generally follows the typical structure and optimization techniques already adopted by other models, such as ChatGPT,” says electrical engineer Fabio Gagliardi Cozman, director of C4AI and a professor at USP’s Polytechnic School. “This is not a total revolution in the area, but it is a good example of analyzing the steps needed to develop the models, removing some of the stages, and emphasizing the use of quality data, particularly data obtained from other models,” he says, noting that a great effort went into reducing the memory and data-transmission requirements of the training process. “All of this combined led to a significant reduction in the resources used by the team.”
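The idea behind the load balancing described above, spreading work so that no server sits idle while another is overloaded, can be illustrated with a classic greedy heuristic: sort tasks from largest to smallest and always give the next one to the least-loaded server. This is a generic textbook technique (longest processing time first), not DeepSeek's actual algorithm, and the task costs are hypothetical.

```python
import heapq


def balance(task_costs: list[float], num_servers: int) -> list[list[float]]:
    """Greedy 'longest processing time first' scheduling: sort tasks from
    largest to smallest and give each one to the currently least-loaded server."""
    heap = [(0.0, s) for s in range(num_servers)]  # (current load, server id)
    assignment: list[list[float]] = [[] for _ in range(num_servers)]
    for cost in sorted(task_costs, reverse=True):
        load, server = heapq.heappop(heap)          # least-loaded server
        assignment[server].append(cost)
        heapq.heappush(heap, (load + cost, server))
    return assignment


tasks = [7, 5, 4, 4, 3, 3, 2, 2]  # hypothetical task costs (e.g., seconds of GPU time)
plan = balance(tasks, 3)
loads = [sum(p) for p in plan]

# Naive comparison: deal tasks out in their original order, one server after another.
round_robin = [sum(tasks[i::3]) for i in range(3)]

print("balanced loads:", loads)
print("round-robin loads:", round_robin)
```

With balancing, all three servers finish at the same time; with the naive split, the job takes longer and one server sits idle for much of it while still drawing power.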

A lithium mine in the Jequitinhonha Valley, Minas Gerais: mining the minerals used to produce computers and batteries impacts the environment (Dado Galdieri / Bloomberg via Getty Images)

Approximate calculations

One proposed strategy for reducing the environmental impact of AI systems, and of computing as a whole, is an approach known as approximate computing, in which algorithmic techniques or dedicated hardware (circuits built for a single function) produce less precise but still acceptable solutions to computational tasks. Because less computation is needed to reach an approximate result, less energy is spent.
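One well-known algorithmic technique in approximate computing, though not one named in the text, is loop perforation: a loop deliberately skips some of its iterations, trading a small loss of accuracy for less work. The sketch below estimates the mean of a hypothetical, slowly varying signal while processing only every fourth value; the assumption is that energy falls roughly in proportion to the work skipped.

```python
def exact_mean(values: list[float]) -> float:
    return sum(values) / len(values)


def perforated_mean(values: list[float], stride: int) -> float:
    """Loop perforation: process only every `stride`-th element, doing roughly
    1/stride of the work in exchange for an approximate answer."""
    sample = values[::stride]
    return sum(sample) / len(sample)


# Hypothetical smooth data, e.g. a slowly varying sensor signal.
data = [20.0 + 0.001 * i for i in range(100_000)]
exact = exact_mean(data)
approx = perforated_mean(data, stride=4)  # about 25% of the additions
rel_error = abs(approx - exact) / exact
print(f"exact={exact:.4f} approx={approx:.4f} relative error={rel_error:.1e}")
```

For smooth data like this, skipping three quarters of the loop changes the answer only in the fifth significant digit; for noisy or irregular data the error would be larger, which is why deciding where to approximate matters.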

“Several algorithmic techniques can be applied, including reduced numerical precision, which uses values and arithmetic operations with fewer decimal places to represent real numbers,” says Fazenda, from UNIFESP. Instead of representing a number with a precision of 20 decimal places, for example, only the first 10 are considered. “This can cause unwanted rounding and discrepancies in results, compromising accuracy. However, if these discrepancies are insignificant, it is possible to improve performance and save energy, since operations with reduced precision demand less computational power,” he says.
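The rounding effect Fazenda describes can be reproduced with Python's standard `decimal` module, which lets a calculation keep a chosen number of significant digits (a close analogue of the decimal places mentioned above). The sketch sums the harmonic series 1 + 1/2 + ... + 1/n at 20 and at 10 digits; the choice of series and of n is an arbitrary illustration.

```python
from decimal import Decimal, localcontext


def harmonic_sum(n: int, digits: int) -> Decimal:
    """Sum 1/1 + 1/2 + ... + 1/n, rounding every intermediate result
    to `digits` significant digits."""
    with localcontext() as ctx:
        ctx.prec = digits
        total = Decimal(0)
        for k in range(1, n + 1):
            total += Decimal(1) / Decimal(k)
        return total


precise = harmonic_sum(10_000, digits=20)
reduced = harmonic_sum(10_000, digits=10)
print("20 digits:", precise)
print("10 digits:", reduced)
print("discrepancy:", abs(precise - reduced))
```

The two results differ only far to the right of the decimal point. Whether a discrepancy of that size is acceptable depends on the application, which is exactly the judgment the researchers describe.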

One of Fazenda’s studies sought to understand how a reduction in data accuracy would affect the quality of weather and climate simulations, and consequently of forecasting models. “The algorithms used in these simulations are highly demanding in terms of computing resources, and therefore also in terms of energy. And they require precise representations of the simulated data, resulting in the use of high numerical precision, with 64-bit variables,” explains the researcher. “We wanted to evaluate the potential gain in time and energy provided by lower processing requirements by reducing numerical precision from 64 to 16 bits.”

The study, detailed in the proceedings of the 11th São Paulo Regional School on High-Performance Computing (ERAD-SP), held in 2020, was based on a systematic review of the topic. The research team aimed to identify which parts of a numerical weather and climate forecasting model could benefit from energy-saving methods and in which parts these methods would be detrimental. Preliminary results suggested that the techniques could be used to good effect, according to a summary published in the proceedings of the International Colloquium on Energy-Efficient and Sustainable Distributed Systems, held at UFRN in 2024.
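The trade-off in moving from 64-bit to 16-bit variables can be seen with Python's standard `struct` module, which can store a value as a 64-bit double (`'d'`), a 32-bit float (`'f'`), or a 16-bit half-precision float (`'e'`) and read it back. The temperature value and the grid size below are hypothetical; this is not code from the study.

```python
import struct


def round_trip(x: float, fmt: str) -> float:
    """Store x in the given IEEE 754 format and read it back:
    'd' = 64-bit double, 'f' = 32-bit single, 'e' = 16-bit half precision."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


temperature_k = 287.15123456789  # hypothetical value from one simulation grid cell
for fmt, bits in (("d", 64), ("f", 32), ("e", 16)):
    stored = round_trip(temperature_k, fmt)
    print(f"{bits}-bit: stored={stored!r}, bytes per value={struct.calcsize(fmt)}, "
          f"error={abs(stored - temperature_k):.2e}")

# Memory for a hypothetical grid of 10 million values.
n_values = 10_000_000
print(f"64-bit: {n_values * 8 / 1e6:.0f} MB, 16-bit: {n_values * 2 / 1e6:.0f} MB")
```

At 16 bits, each value takes a quarter of the memory, but near 287 the format can only represent steps of 0.25, so this temperature is stored as 287.25. That error is harmless in some parts of a model and unacceptable in others, which is what the study set out to map.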

Approximate computing can also be applied to hardware accelerators used in video decoding. Accelerators are specialized circuits or processors optimized to perform specific tasks more efficiently while consuming less energy. In this case, the result would be a video with some incorrect pixels, but nothing that would affect the viewer’s overall perception. Processing this way would consume less power than if a highly accurate decoder were to be used.
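One common way to quantify "a few incorrect pixels" is the peak signal-to-noise ratio (PSNR) between the exact and the approximate frame; this metric is not mentioned in the text, and values above roughly 40 dB are generally regarded as visually very close to the original. The sketch builds a hypothetical 100 x 100 grayscale frame and simulates an approximate decoder that gets 1 pixel in 100 slightly wrong.

```python
import math


def psnr(reference: list[int], approximate: list[int], max_value: int = 255) -> float:
    """Peak signal-to-noise ratio (dB) between two 8-bit frames; higher means closer."""
    mse = sum((r - a) ** 2 for r, a in zip(reference, approximate)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames
    return 10 * math.log10(max_value ** 2 / mse)


# Hypothetical 8-bit grayscale frame of 100 x 100 pixels, flattened row by row.
frame = [(x * 7 + y * 3) % 256 for y in range(100) for x in range(100)]

# Simulated approximate decoder: 1 pixel in 100 comes out up to 10 levels too bright.
approx = [min(p + 10, 255) if i % 100 == 0 else p for i, p in enumerate(frame)]

print(f"PSNR = {psnr(frame, approx):.1f} dB")
```

In this made-up case the PSNR stays well above 40 dB, consistent with the idea that small, scattered errors need not affect the viewer's overall perception.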

“The challenge of approximate computing is to incorporate these techniques into processors and different applications, clearly separating what can be approximated from what must be precise,” says Lucas Wanner, a computer scientist from the Institute of Computing at the University of Campinas (IC-UNICAMP) whose research focuses on the energy efficiency of hardware and software, with an emphasis on high-performance computing and approximate computing.

Another approach to green computing is to produce more energy-efficient equipment. “Several groups in Brazil and overseas are working on new types of hardware that offer the same computational performance but consume less energy,” says IME-USP computer scientist Fabio Kon.

Alexandre Affonso / Revista Pesquisa FAPESP

Low-power processors were the subject of a study by Francesquini’s group at UFABC. “We examined the behavior of a specific application from the oil and gas exploration industry on four different hardware architectures,” he explains. The results, published in the Journal of Parallel and Distributed Computing in February 2015, revealed an apparent contradiction: the best approach is often to accept slightly slower execution of the program, still within the established constraints, in order to reduce energy consumption. In other cases, it may be necessary to increase power consumption to ensure an adequate execution time. “Depending on the application, the hardware available, and the limitations involved, it may not be possible to simultaneously minimize both energy expenditure and execution time,” he says.
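The apparent contradiction follows from the fact that energy is power multiplied by time. The sketch below picks, among hypothetical hardware configurations, the one with the lowest energy that still meets a deadline; the power and time figures are invented for illustration and are not the study's measurements.

```python
from dataclasses import dataclass


@dataclass
class Config:
    name: str
    power_w: float  # average power draw while running (watts)
    time_s: float   # execution time for the job (seconds)

    @property
    def energy_j(self) -> float:
        return self.power_w * self.time_s  # energy (joules) = power x time


# Hypothetical measurements for the same job on different hardware settings.
configs = [
    Config("fast, high power", power_w=200.0, time_s=50.0),   # 10,000 J
    Config("medium", power_w=120.0, time_s=70.0),             #  8,400 J
    Config("slow, low power", power_w=60.0, time_s=150.0),    #  9,000 J
]


def best_within_deadline(options: list[Config], deadline_s: float) -> Config:
    """Pick the lowest-energy configuration that still meets the deadline."""
    feasible = [c for c in options if c.time_s <= deadline_s]
    if not feasible:
        raise ValueError("no configuration meets the deadline")
    return min(feasible, key=lambda c: c.energy_j)


print(best_within_deadline(configs, deadline_s=100).name)
print(best_within_deadline(configs, deadline_s=60).name)
```

With a loose deadline, the slower "medium" setting saves energy; with a tight one, only the high-power setting qualifies. The lowest-power option is not the most frugal either, because it runs so long that its total energy exceeds the medium setting's.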

In another investigation exploring new architectures and hardware models, Wanner’s group applied an energy-saving technique known as duty cycling, which is simple and effective but challenging to implement in practice. Duty cycling means turning off parts of a computer’s circuitry when they are not being used. “This involves making small modifications to the hardware so that chosen parts can be selectively slowed down, by reducing their operating frequency, or completely turned off by disconnecting them from the power source,” explains the UNICAMP researcher. All computing systems today incorporate some variation of this technique. Laptop screens, for example, turn off after a few minutes of inactivity, while keyboards and mice go into standby when not in use and “wake up” when needed.

“For dedicated circuits, as our research indicates, the challenge is to wake up the system at the right time without negatively affecting the quality of the results for the application. Another obstacle is that duty cycling needs to occur autonomously, since there is no user to indicate when the system should be active and when it can ‘sleep’ like there is when somebody is using a personal computer.” The study was published in IEEE Embedded Systems Letters in June 2024.
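A circuit that is on for a fraction D of the time draws on average D x P_on + (1 - D) x P_off. The harder question Wanner raises is when to switch off and on autonomously. The sketch below simulates one classic, simple policy (not the technique from the cited paper): after each job the circuit stays powered for a fixed idle timeout, then sleeps; a job that finds it asleep pays a wake-up delay. All arrival times, latencies, and power figures are hypothetical, and sleep power is assumed negligible.

```python
import math


def simulate(arrivals: list[float], service_s: float, timeout_s: float,
             wake_s: float, p_on_w: float, p_off_w: float) -> tuple[float, float]:
    """Idle-timeout duty cycling. After each job the circuit stays powered for
    `timeout_s`; if no job arrives in that window it powers down. A job that finds
    it asleep waits `wake_s` (spent at full power) before starting.
    Returns (total energy in joules, total added delay in seconds)."""
    energy_j = 0.0
    total_delay_s = 0.0
    t = 0.0        # time at which the circuit becomes free
    awake = False  # the circuit starts asleep
    for arrival in sorted(arrivals):
        if arrival > t:  # idle gap before this job
            gap = arrival - t
            if awake:
                on_time = min(gap, timeout_s)  # powered until the timer expires
                energy_j += on_time * p_on_w + (gap - on_time) * p_off_w
                awake = gap <= timeout_s
            else:
                energy_j += gap * p_off_w
            t = arrival
        start = t
        if not awake:  # wake-up penalty in time and energy
            energy_j += wake_s * p_on_w
            start += wake_s
            awake = True
        total_delay_s += start - arrival  # queueing plus wake-up delay
        energy_j += service_s * p_on_w
        t = start + service_s
    return energy_j, total_delay_s


arrivals = [0.0, 2.5, 10.0]  # hypothetical job arrival times (s)
for timeout in (0.0, 2.0, math.inf):
    energy, delay = simulate(arrivals, service_s=1.0, timeout_s=timeout,
                             wake_s=0.5, p_on_w=1.0, p_off_w=0.0)
    print(f"timeout={timeout}: energy={energy:.1f} J, added delay={delay:.1f} s")
```

Sleeping immediately uses the least energy but delays jobs most; never sleeping is fastest but most wasteful. Choosing the timeout well without a user's guidance is the autonomy problem the researchers describe.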

Cooling system at a Google data center: the blue pipes supply cold water and the red pipes return heated liquid to be cooled (Google)

Nonbinding recommendations

It is not only academia and industry that are concerned about the impacts of computing on the planet. International organizations and governments are also taking action. According to the United Nations Environment Programme (UNEP), more than 190 nations have already adopted nonbinding recommendations—meaning they are not mandatory—on the ethical use of AI that also cover issues related to the environment. The USA and the European Union have passed legislation to address the problem.

In a technical note, UNEP spoke of the need to establish standardized procedures to measure the environmental impacts of AI, given the shortage of reliable data on the issue. It also recommended that governments create regulations requiring companies in the sector to disclose the environmental consequences of AI products and services.

“Nonbinding recommendations will not be enough to regulate the rise of data centers and energy consumption by AI worldwide,” says social scientist João Paulo Cândia Veiga of the Institute of International Relations (IRI) at USP, who also does research at C4AI. “Decisions involving future regulation will be taken at the domestic level, probably based on international guidelines, such as those issued by the IEA and other organizations.”

The story above was published with the title “The environmental impact of the digital world” in issue 349 of March 2025.

Projects

1. Artificial Intelligence Center (nº 19/07665-4); Grant Mechanism Engineering Research Centers; Cooperation agreement IBM Brazil; Principal Investigator Fabio Gagliardi Cozman (USP); Investment R$12,426,244.72.

2. CCD – Carbon Neutral Cities (nº 24/01115-0); Grant Mechanism Science and Development Center; Principal Investigator Liedi Legi Bariani Bernucci (USP); Investment R$3,347,593.43.

3. INCT 2014 – The internet of the future (nº 14/50937-1); Grant Mechanism Thematic Project; Principal Investigator Fabio Kon (USP); Investment R$2,121,398.08.

4. Trends in high-performance computing, from resource management to new computer architectures (nº 19/26702-8); Grant Mechanism Thematic Project; Principal Investigator Alfredo Goldman vel Lejbman (USP); Investment R$2,746,855.25.

5. EcoSustain: Data science and computing for the environment (nº 23/00811-0); Grant Mechanism Thematic Project; Principal Investigator Antonio Jorge Gomes Abelém (UFPA); Investment R$3,999,467.22.

Scientific articles

VRIES, A. D. et al. The growing energy footprint of artificial intelligence. Joule. Vol. 7, pp. 2191–94. Oct. 18, 2023.

FREITAG, C. et al. The real climate and transformative impact of ICT: A critique of estimates, trends, and regulations. Patterns. Vol. 2, 100340. Sept. 10, 2021.

STRUBELL, E. et al. Energy and policy considerations for Deep Learning in NLP. arXiv. June 5, 2019.

LI, P. et al. Making AI less “thirsty”: Uncovering and addressing the secret water footprint of AI models. arXiv. Apr. 6, 2023.

GUO, D. et al. DeepSeek-R1: Incentivizing reasoning capability in LLMs via reinforcement learning. arXiv. Jan. 25, 2025.

SUDO, M. A. & FAZENDA, Á. L. A review on approximate computing applied to meteorological forecast models using software-based techniques. Anais da XI Escola Regional de Alto Desempenho de São Paulo (ERAD-SP). 2020.

FRANCESQUINI, E. et al. On the energy efficiency and performance of irregular application executions on multicore, NUMA and manycore platforms. Journal of Parallel and Distributed Computing. Vol. 76, pp. 32–48. Feb. 2015.

CASTRO, L. et al. Exploring dynamic duty cycling for energy efficiency in coherent DSP ASIC. IEEE Embedded Systems Letters. Vol. 16, no. 2, pp. 202–5. June 2024.

Reports

Electricity 2024: Analysis and forecast 2026. International Energy Agency (IEA). Jan. 2024.

Environmental Report 2024. Google. July 2024.