Arm has lifted the lid on its latest mobile platform, comprising new CPU and GPU designs plus rearchitected interconnect and memory management logic, all optimized with a coming wave of AI-enabled smartphones in mind.

The UK-based chip designer has for several years been moving towards more integrated solutions rather than just offering cores, and this year’s Lumex compute subsystem (CSS) is the latest evolution of that philosophy.

As with each generation, Arm manages to squeeze more performance and power efficiency out of its designs, claiming an average 15 percent step up in CPU and 20 percent in GPU, while saving 15 percent on power.

The key focus with Lumex is on Arm’s Scalable Matrix Extension 2 (SME2) in the CPU cluster, which the firm is pushing as the preferred route for AI acceleration, and on overall system-level optimizations to boost scalability for devices capable of running AI models.

Stefan Rosinger, Senior Director for CPUs, said that SME2 delivers AI acceleration that is “an order of magnitude better” than before, and that its advantage in a mobile device is that it uses less power and finishes calculations more quickly.

According to Arm, we can expect to see Lumex implemented in smartphones and other devices later this year or early next year. It has been crafted with 3nm manufacturing in mind, and the firm says it expects chips produced by its licensees will run at upwards of 4 GHz clock speeds.

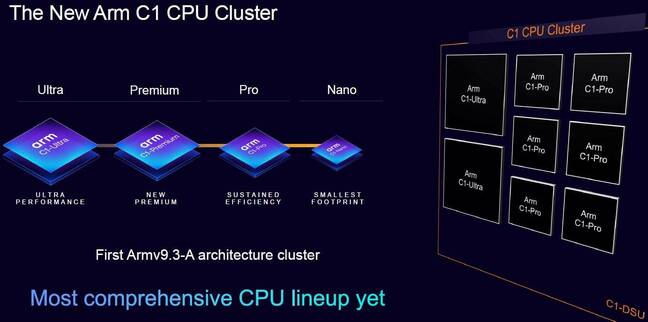

In a rare outbreak of common sense, all the cores in the Lumex CPU cluster are designated C1, with the maximum performance core design labelled the C1-Ultra.

Arm’s slide detailing its new C1 CPU cluster – Click to enlarge

Most smartphone chips now feature a mix of core types, with one or two performance cores for the demanding work, coupled with more power-optimized cores to handle other tasks. This started out as big.LITTLE many years ago.

With Lumex, Arm has given chip designers no fewer than four core types to choose from, adding C1-Premium as the next step down, followed by C1-Pro and finally C1-Nano as the smallest footprint core with the greatest power efficiency.

Chips aimed at flagship phones will likely see two C1-Ultra cores combined with six C1-Pro cores, Arm believes, while “sub-flagship” silicon might use a mix of two C1-Premium and six C1-Pro, and the mainstream could be served by a mix of four Pro and four Nano.

In the GPU department, the Lumex platform has the Mali G1, and like the CPU cores, it comes in grades: Mali G1-Ultra, Mali G1-Premium, and Mali G1-Pro. The differences between these three tiers are in the number of shader cores, with Pro having one to five, Premium six to nine, and Ultra 10 cores or more.
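The tiering comes down to a simple threshold rule on shader core count. A minimal sketch in Python, using only the ranges quoted above; the function name `mali_g1_tier` is purely illustrative and is not anything Arm ships:

```python
def mali_g1_tier(shader_cores: int) -> str:
    """Map a shader core count to the Mali G1 tier quoted in the article.

    Hypothetical helper for illustration only: Pro is 1-5 cores,
    Premium is 6-9 cores, Ultra is 10 cores or more.
    """
    if shader_cores < 1:
        raise ValueError("a GPU needs at least one shader core")
    if shader_cores <= 5:
        return "Mali G1-Pro"
    if shader_cores <= 9:
        return "Mali G1-Premium"
    return "Mali G1-Ultra"


if __name__ == "__main__":
    # Print the tier at each boundary of the quoted ranges.
    for cores in (1, 5, 6, 9, 10, 14):
        print(cores, mali_g1_tier(cores))
```

Note that the rule only captures core counts; it says nothing about other differences between the tiers, such as the ray tracing hardware.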

Mali G1-Ultra is also the only tier with Arm’s redesigned Ray Tracing Unit (RTU), claimed to offer 40 percent higher performance than last year’s Immortalis-G925, plus higher quality for games.

The new GPU design is also claimed to accelerate in-game AI with support for half-precision (FP16) matrix multiplication, which Arm claims improves tensor processing while reducing memory bandwidth and lowering power consumption.
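The memory bandwidth part of that claim is easy to illustrate with back-of-the-envelope arithmetic, assuming the comparison is against single-precision (FP32) data, which Arm's claim as reported does not specify. The sketch below simply counts the bytes held by the operands and result of one matrix multiplication; the matrix dimensions and the function name `matmul_operand_bytes` are made up for illustration, and nothing here measures a real Mali GPU:

```python
import struct

# Storage size of one element, taken from Python's own format codes:
# "e" is IEEE 754 half precision, "f" is single precision.
FP16_BYTES = struct.calcsize("<e")
FP32_BYTES = struct.calcsize("<f")


def matmul_operand_bytes(m: int, k: int, n: int, bytes_per_element: int) -> int:
    """Bytes occupied by A (m x k), B (k x n) and the result C (m x n).

    Illustrative only: ignores caching, tiling and any wider accumulators.
    """
    elements = m * k + k * n + m * n
    return elements * bytes_per_element


if __name__ == "__main__":
    # Hypothetical layer size, chosen only to make the numbers concrete.
    m, k, n = 256, 1024, 256
    fp32 = matmul_operand_bytes(m, k, n, FP32_BYTES)
    fp16 = matmul_operand_bytes(m, k, n, FP16_BYTES)
    print(f"FP32 operands: {fp32} bytes")
    print(f"FP16 operands: {fp16} bytes ({fp16 / fp32:.0%} of FP32)")
```

Halving the element size halves the data that has to move for the same matrix shapes, which is where bandwidth and power savings would come from; the real-world gain depends on the workload and the hardware.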

Arm has already given us a taste of the neural accelerator hardware it is bringing to its phone GPUs next year, but that is not part of the Mali G1-Ultra in Lumex this year.

What the complete compute subsystem does include is a new purpose-built System Interconnect (SI) and System Memory Management Unit (SMMU), designed to cope with the demands that running AI models on smartphones is likely to bring.

The System Interconnect has been redesigned with what Arm calls a channelized architecture that can give quality of service (QoS) priority to different traffic, while the System MMU has been optimized to reduce latency by up to 75 percent, the firm says.

It isn’t just about hardware of course, and Arm says it has been working behind the scenes to ensure that the various developer frameworks support the optimizations coming in its latest platforms.

Its KleidiAI libraries have been integrated with frameworks such as PyTorch, Llama, LiteRT and ONNX to enable support for SME2 acceleration when running AI workloads.

Of course, Arm believes that AI processing should be kept on the CPU, since “it’s the only compute unit in the mobile market you can rely on to be present in every mobile phone,” according to Geraint North, Arm Fellow in AI and Developer Platforms.

“As you start to move to GPUs and NPUs (neural processing units), you end up doing different work for different handsets,” he explained, as vendors may have opted for different GPUs and NPUs in their smartphone silicon.

This is a logical viewpoint, but it’s not one that everyone necessarily agrees with. Analyst firm Gartner explicitly defines a GenAI smartphone as a device equipped with a built-in neural engine or neural processing unit (NPU) capable of running small language models.

It proclaims that premium smartphones and basic smartphones (under $350) will fit this description, with only “utility smartphones” not expected to have NPU capabilities.

This will suit Qualcomm, no doubt, which features an integrated NPU in its smartphone chips and showed off a 7 billion parameter large language model running on an Android phone at last year’s MWC show.

Bob O’Donnell, President and Chief Analyst at TECHnalysis Research, said that Arm’s approach makes sense given where the market is right now.

“First, because of the wide range of different NPU architectures available and the lack of standardization, few software developers are actually using the NPUs for their AI applications. Instead, they’re defaulting to the CPU and GPU, and with the latest SME2 instructions and logic from Arm, they’re going to help accelerate those functions,” he told The Register.

“Second, many of Arm’s partners are choosing to differentiate with their own NPU designs and yet another option from Arm could actually make that NPU confusion worse. Hopefully we’ll start to see some standardized means for leveraging different NPU architectures soon so that NPUs can start being used more frequently, but I’m concerned that could still be several years away.”

Arm’s silicon licensees will have to place their bets now – will Arm’s approach with SME2 in the CPU prove more popular, or will smartphone makers and the buying public want a built-in NPU? ®