Researchers have demonstrated a new optical computing method that performs complex tensor operations in a single pass of light. The advance could reshape how modern AI systems process data and ease the growing strain on conventional digital hardware.

Tensor operations drive nearly every AI task today. GPUs handle them well, but the surge in data has exposed limits in speed, power efficiency and scalability.

This pressure pushed an international team led by Dr. Yufeng Zhang of Aalto University to look beyond electronic circuits.

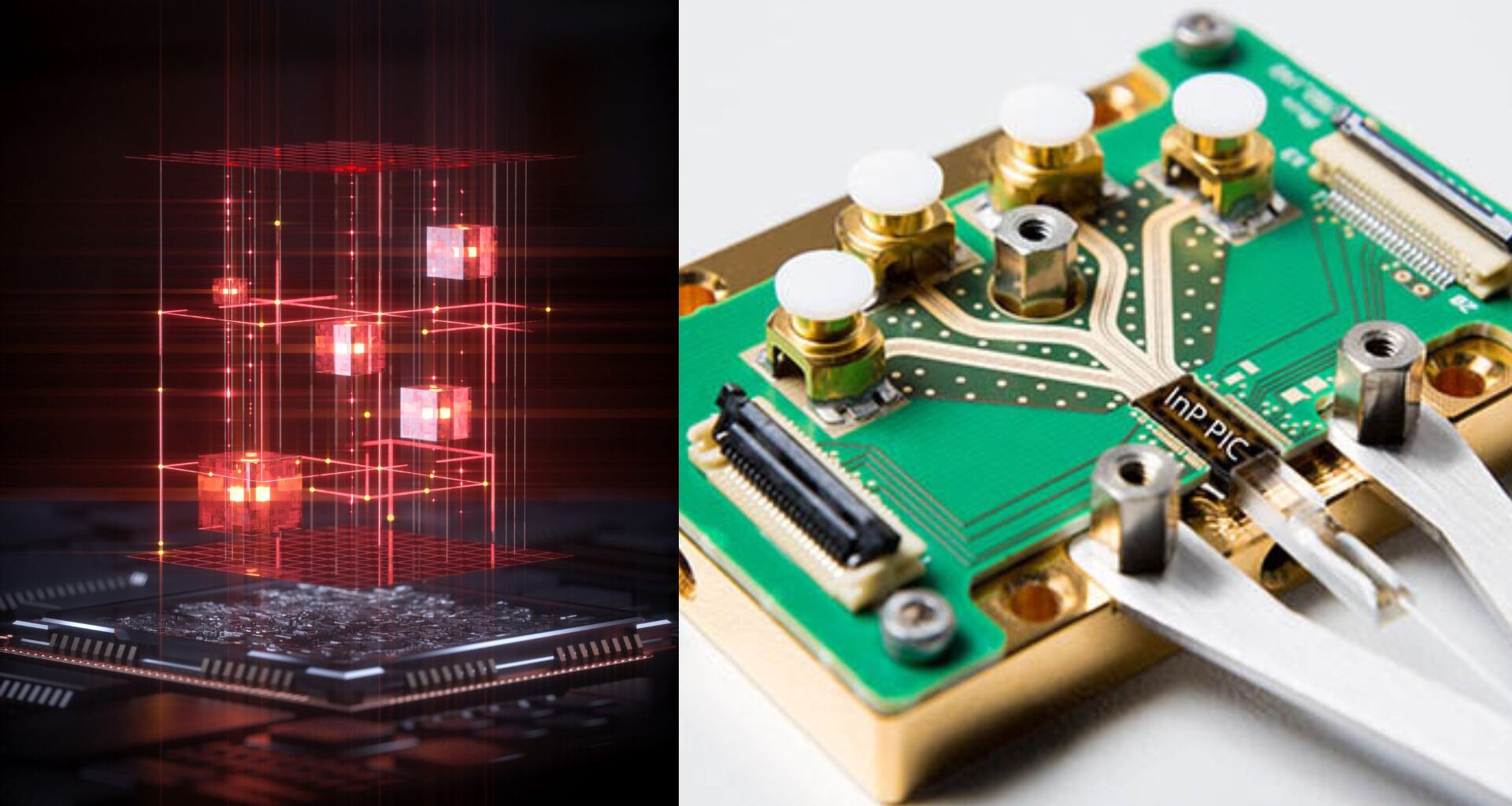

The group has developed “single-shot tensor computing,” a technique that uses the physical properties of light to process data. Light waves carry information in two such properties: amplitude and phase.

The team encoded digital data into these properties and let the waves interact as they propagated. That interaction carries out the same kinds of mathematical operations that deep learning systems rely on.
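To make the idea concrete, here is a minimal Python sketch of a generic linear optical model. It assumes, as a simplification rather than a description of the team's setup, that each input value is encoded as a complex field whose amplitude holds the magnitude and whose phase holds the sign, and that a fixed optical element mixes the fields according to a complex transfer matrix. Because overlapping waves add coherently, one pass through such an element amounts to a matrix-vector product. The names `encode`, `propagate` and `intensity` are hypothetical.

```python
import cmath
import math


def encode(values, phases):
    """Encode each value as a complex field amplitude * e^(i * phase)."""
    return [a * cmath.exp(1j * p) for a, p in zip(values, phases)]


def propagate(transfer, field):
    """Hypothetical linear optical element: each output field is the
    coherent sum of all input fields, weighted by complex transmission
    coefficients. Mathematically this is a matrix-vector product."""
    return [sum(t * e for t, e in zip(row, field)) for row in transfer]


def intensity(field):
    """A photodetector measures |E|^2 rather than the complex field."""
    return [abs(e) ** 2 for e in field]


# Two inputs: 1.0 at phase 0, and 0.5 at phase pi (which acts like -0.5).
x = encode([1.0, 0.5], [0.0, math.pi])
W = [[1, 1],
     [1, -1]]  # illustrative transfer matrix, not taken from the study
y = propagate(W, x)  # approximately [0.5, 1.5], with rounding-level imaginary parts
print([round(v.real, 6) for v in y], [round(i, 6) for i in intensity(y)])
```

The phase of π makes the second input behave as -0.5, so it is subtracted in the first output and added in the second. Real detectors record intensity rather than the complex field, so a practical system needs some way to read out signed results; the sketch does not model that step.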

“Our method performs the same kinds of operations that today’s GPUs handle, like convolutions and attention layers, but does them all at the speed of light,” says Dr. Zhang.
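Convolutions fit this picture because a convolution is itself a linear operation: it can be rewritten as a single multiplication by a banded (Toeplitz) matrix, which is the kind of operation one linear pass can perform. The Python sketch below illustrates only that equivalence, not how the team implements convolutions optically. The helpers `conv_matrix`, `matvec` and `direct_conv` are hypothetical, and the sketch uses the cross-correlation convention common in deep learning libraries.

```python
def conv_matrix(kernel, n):
    """Build the (n - k + 1) x n banded matrix whose product with a
    length-n signal equals a 'valid' 1D convolution with the kernel
    (cross-correlation convention: the kernel is not flipped)."""
    k = len(kernel)
    rows = []
    for i in range(n - k + 1):
        row = [0.0] * n
        for j, w in enumerate(kernel):
            row[i + j] = w  # kernel slides one position per output row
        rows.append(row)
    return rows


def matvec(m, v):
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def direct_conv(signal, kernel):
    """Reference sliding-window computation for comparison."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


signal = [1.0, 2.0, 3.0, 4.0, 5.0]
kernel = [1.0, 0.0, -1.0]  # simple difference filter
y_matrix = matvec(conv_matrix(kernel, len(signal)), signal)
y_direct = direct_conv(signal, kernel)
print(y_matrix, y_direct)  # both [-2.0, -2.0, -2.0]
```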

He adds that the system avoids electronic switching because the optical operations unfold naturally during propagation.

Multi-wavelength approach expands capability

The researchers pushed this method further by adding multiple wavelengths of light. Each wavelength behaves like its own computational channel, which lets the system process higher-order tensor operations in parallel.
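One way to picture the multi-wavelength scheme, assuming as a simplification that every wavelength passes through the same linear element without crosstalk, is that each wavelength carries its own input vector. Stacking those vectors as columns turns a matrix-vector product into a matrix-matrix product, a higher-order operation, completed in one pass. In the Python sketch below the loop stands in for channels that would physically propagate at the same time; `multi_wavelength_pass` is a hypothetical name.

```python
def matvec(m, v):
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """Plain matrix-matrix product, used only to check the result."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def multi_wavelength_pass(transfer, channels):
    """Hypothetical model: each wavelength carries its own input vector
    through the same linear element, with no crosstalk between
    wavelengths. The loop simulates channels that, physically, would
    propagate simultaneously. Returns one output vector per wavelength."""
    return [matvec(transfer, x) for x in channels]


W = [[1, 2],
     [3, 4]]          # illustrative transfer matrix
channels = [[1, 1],   # input vector on wavelength 0
            [2, -1]]  # input vector on wavelength 1
outputs = multi_wavelength_pass(W, channels)

# Stacking the per-wavelength inputs as columns gives a matrix X;
# the stacked outputs equal the matrix-matrix product W @ X.
X = [list(col) for col in zip(*channels)]
stacked = [list(col) for col in zip(*outputs)]
print(stacked == matmul(W, X))  # True
```

In a real device the element's response usually varies somewhat with wavelength, so each channel would see its own transfer matrix; the sketch ignores that.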

Zhang compares the process to running a customs facility where every parcel goes through many checks. “Imagine you’re a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins,” he explains.

He goes on to describe merging the parcels and the machines into a single step. The team says this mirrors how their “optical hooks” connect each input to the correct output in a single operation.
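One possible reading of the "merge everything into one step" analogy (our interpretation, not the team's description of its optical hooks) is that a chain of linear operations can be folded into a single matrix ahead of time, so one pass does the work of several sequential stages. The three stages in the Python sketch below (`scale`, `swap`, `mix`) are arbitrary, hypothetical examples.

```python
def matvec(m, v):
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """Plain matrix-matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


# Three hypothetical "machines", each a linear stage applied in order.
scale = [[2, 0], [0, 3]]  # stage 1: scale each entry
swap = [[0, 1], [1, 0]]   # stage 2: exchange the two entries
mix = [[1, 1], [0, 1]]    # stage 3: add the second entry to the first

x = [1, 2]

# Sequential: pass the data through each stage one after another.
step_by_step = matvec(mix, matvec(swap, matvec(scale, x)))

# Merged: fold the stages into one matrix in advance (the first stage
# applied is the rightmost factor), then apply it in a single step.
merged = matmul(mix, matmul(swap, scale))
single_shot = matvec(merged, x)

print(step_by_step, single_shot)  # [8, 2] [8, 2]
```

The folding works only because every stage is linear; a nonlinear step such as an activation function cannot be absorbed into the matrix this way.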

Another advantage is simplicity. The interactions happen passively, with no external control circuitry managing the computation. This reduces power consumption and makes the system easier to integrate.

Professor Zhipei Sun, who leads Aalto’s Photonics Group, says the method works on many optical platforms. “In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption,” he says.

Path to industry use

The team expects the technology to reach commercial hardware within a few years. Dr. Zhang says the goal is to deploy the method on platforms built by major companies, and he estimates integration within three to five years.

The researchers argue that such systems could speed up AI workloads in fields that rely on real-time processing, including imaging, large language models and scientific simulations. Optical methods also promise lower energy use, an increasingly pressing concern as AI models grow.

“This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields,” Zhang concludes.

The study is published in the journal Nature Photonics.