If you are looking for someone to blame, it's your friendly neighbourhood chatbot. Yes, artificial intelligence is eating your PC upgrade.

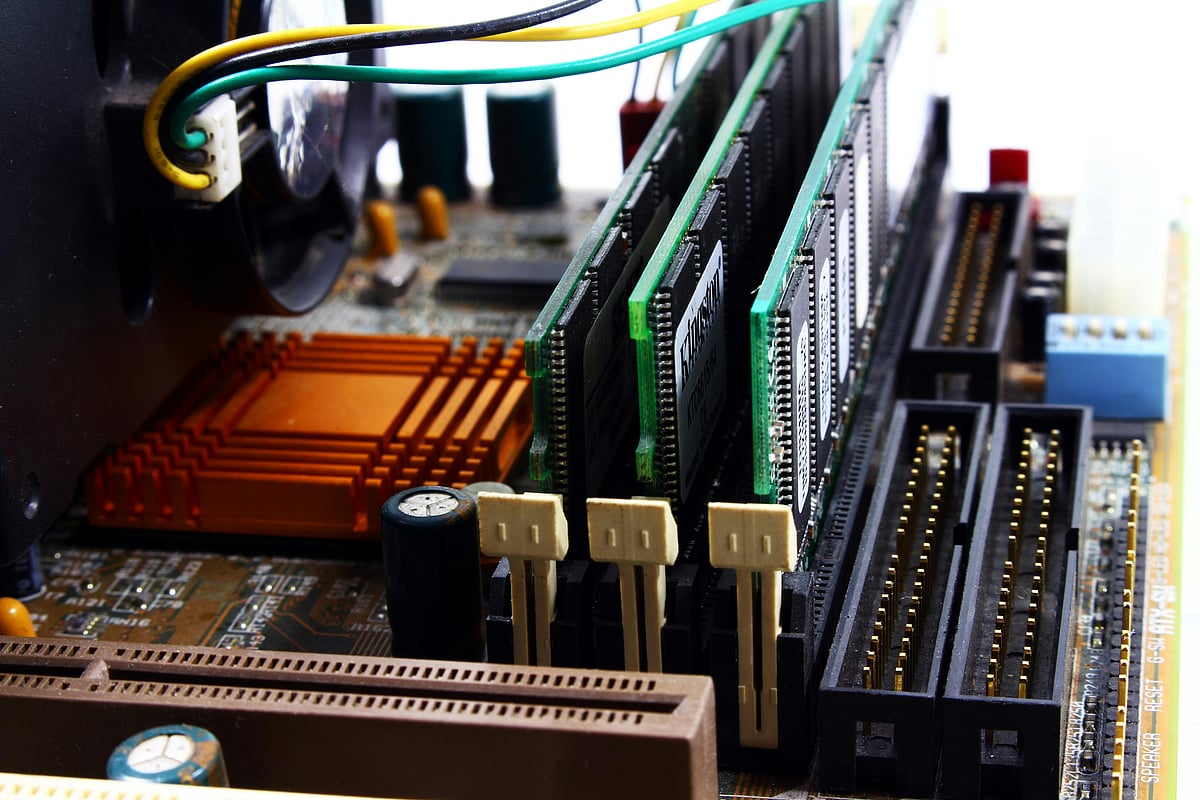

Companies that make memory chips for your gaming rig – Samsung, SK Hynix and Micron – have realised something important. They can sell their standard DDR5 RAM to you, an ordinary consumer, or they can retool entire factories to make High Bandwidth Memory (HBM) for Nvidia's AI super-chips and sell it to companies like Microsoft or Google for what is effectively infinite money.

Guess which one they picked?

As it turns out, training a large language model to write bad poetry requires a staggering amount of memory. So the manufacturing lines that used to churn out consumer DRAM are now busy making memory for data centres. Supply chains are now in disarray, and the result is the good old gap between supply and demand.

We spoke to Fissal Oubida, General Manager (India, Middle East, Africa & CIS) at Lexar Co., who explained how AI is driving the ongoing global RAM shortage.

“The rise in AI-based applications in data centres, consumer devices, and enterprise applications has increased the demand for high-performance memory. With AI models becoming larger and more compute-intensive, the availability of DRAM components is becoming more constrained; hence, costs are increasing continuously,” he said.

Rajan Arora, a Partner at Forvis Mazars who is well versed in enterprise technology and supply chains, says the sudden AI boom, coupled with a fragile tech supply chain, has caught vendors off guard.

“The recent RAM shortage is really a story about AI colliding with fragile supply chains. AI workloads are memory-hungry, and the sudden spike in RAM demand caught many vendors off guard,” he said.