The internet is almost certainly the most complex machine humanity has ever created. You might not notice, because most of the time the internet and services like the Web work without a hitch, but under the hood an enormous number of things all have to work together seamlessly.

What makes it even more mind-blowing is that many of the fundamental technologies underpinning the internet came about almost by accident, or at least without anyone planning for the scale they would eventually have to handle. Here are some key examples.

4

Open Standards Beat Proprietary Systems

Plenty of private companies have tried to close off and privatize the internet. Think of services like AOL and CompuServe, which built walled gardens meant to serve as a user's entire online experience.

However, the protocols that make the internet work, such as TCP/IP, SMTP, and HTTP, weren't handed down by corporations. They were published as RFCs (Requests for Comments), which anyone could contribute to or critique. RFCs capture the opinions and comments of many members of the technical community: some from industry, some from government, some from academia, and so on.

For example, RFC 761 outlined the proposal for TCP and, after input from outside stakeholders, was superseded by RFC 793. This community-driven approach produced the open standards that make the open internet and the Web possible.

Since anyone can, for example, run their own server and connect it to the internet using these community-developed rules, which are later adopted as open standards, it's no surprise that the likes of AOL never managed to confine people to their own little corner of the world. You might argue that platforms like Facebook have succeeded where AOL failed, but nothing actually stops a Facebook user from simply visiting any other destination on the web!
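To make the "anyone can run their own server" point concrete, here is a minimal sketch (not production code) of a toy HTTP/1.1 server and client written with nothing but raw TCP sockets from Python's standard library. Everything runs on your own machine over the loopback address; the function names `serve_once` and `fetch` and the response text are made up for this illustration. The point is that the message format comes from the public HTTP specification, not from any company's toolkit.

```python
import socket
import threading


def serve_once(server_sock: socket.socket) -> None:
    """Accept one connection and answer it with a hand-written HTTP response."""
    conn, _addr = server_sock.accept()
    with conn:
        request = b""
        # Read until the blank line (CRLF CRLF) that ends the request headers.
        while b"\r\n\r\n" not in request:
            chunk = conn.recv(1024)
            if not chunk:
                break
            request += chunk
        body = b"Hello from a home-made server\n"
        # Status line, headers, blank line, then the body, as the spec lays out.
        headers = (
            "HTTP/1.1 200 OK\r\n"
            "Content-Type: text/plain\r\n"
            f"Content-Length: {len(body)}\r\n"
            "Connection: close\r\n"
            "\r\n"
        )
        conn.sendall(headers.encode("ascii") + body)


def fetch(host: str, port: int, path: str = "/") -> bytes:
    """Send a raw HTTP/1.1 GET request and return the full response bytes."""
    with socket.create_connection((host, port)) as sock:
        request = (
            f"GET {path} HTTP/1.1\r\n"
            f"Host: {host}:{port}\r\n"
            "Connection: close\r\n"
            "\r\n"
        )
        sock.sendall(request.encode("ascii"))
        chunks = []
        # The server closes the connection when it is done, so read until EOF.
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
        return b"".join(chunks)


if __name__ == "__main__":
    # Port 0 asks the operating system for any free port on the loopback interface.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]

    worker = threading.Thread(target=serve_once, args=(server,))
    worker.start()
    reply = fetch("127.0.0.1", port)
    worker.join()
    server.close()

    print(reply.decode("ascii").splitlines()[0])  # HTTP/1.1 200 OK
```

The example deliberately skips most of what a real HTTP implementation has to handle (keep-alive, chunked transfer encoding, error responses), but any other client or server that follows the same published rules could talk to it.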

3

The Rise of Open Source Software

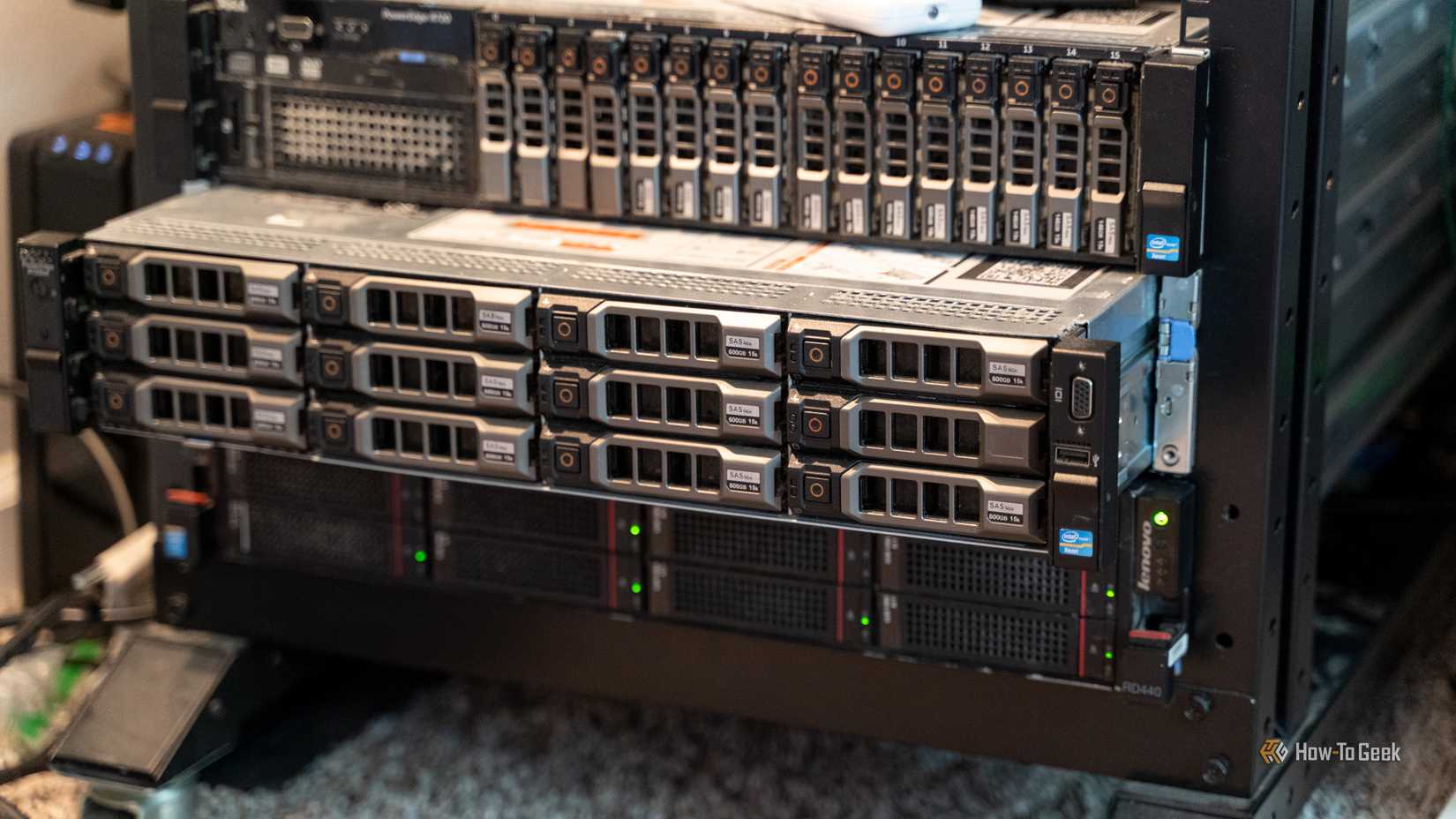

Lucas Gouveia/How-To Geek | thenatchdl/Shutterstock

Open-source software is the bedrock of internet technology, and a lot of it started out as hobby projects by bedroom coders. Linus Torvalds created the Linux kernel because he thought MS-DOS sucked and he wanted a free UNIX-like OS for his new i386 PC, so he went ahead and built it. Today, most of the servers on the internet run Linux.

The beauty of open-source software is that anyone with the skills can contribute, and a small project can quickly grow into essential software that millions of people rely on. There are pros and cons to open-source software, but there's no denying that it has helped keep the internet free and open, and it offers a path to technology stacks that could meet the whole world's tech needs even if all proprietary software suddenly disappeared overnight. Like the mythical hydra, whenever one open-source project falters, it simply sprouts new heads in the form of forks.

2

Open Documentation and Knowledge Sharing

Think about how much of the information you read on the internet is free. You could argue that this began with the RFC culture mentioned above, which popularized the idea of open documents anyone could comment on and contribute to, but it was hobbyists on the internet, people not paid to write a single word, who really ran with it.

Of course, some of it, like Linux man pages, is documentation that someone simply had to write, but there are also video game guides, blogs, wikis, forums, and so much more. There's a reason AI companies point their machine learning systems at the contents of Reddit: smart people with little desire to profit from their knowledge keep giving it away for free.

Yet it's this open, collaborative sharing of information that has helped the internet stand the test of time. At one point, people thought the internet would be a one-way street, with large companies feeding you information from professional websites, but hobbyists fueled by pure passion (and a dash of neurodivergence) proved to be a much more powerful force.

1

Preservation Through Obsession

There's a saying that "the internet is forever," which could not be more wrong. When the servers go down and the hard drives die, that information is gone. Sometimes information is lost simply because no one knows how to read the format it was recorded in anymore.

The creators and custodians of content and information on the web often seem unwilling or unable to preserve what they’ve made as soon as it no longer brings in any money, but that hasn’t stopped hobbyists from slurping up everything they can and preserving it for posterity.

One of the most obvious examples is the Internet Archive and its alternatives. Then there are sites that host abandonware, which sits in a legal grey area, and peer-to-peer networks that store anything you can think of, most often illegally, across the hard drives of millions of contributors.

There's a pretty good chance that one day this obsession with backing up every little thing that's ever been on the internet will save some essential piece of source code or an application, or lead to the recovery of lost media.

In fact, I wouldn't be surprised if something a random geek saved on their hard drive turns out to be how we defeat a future alien invasion someday. Then who's the weird one?