“Nudified” images of children created by Elon Musk’s Grok AI are probably not prosecutable as child sex abuse imagery, one of the country’s leading experts on online child abuse has said.

Mick Moran, who spent 34 years as a garda primarily involved in investigating child abuse material, said that, “law or no law”, there is no reason for a programme such as Grok to be able to generate images of people without clothes on.

However, he said the images being produced by Grok probably do not meet the definition of child sexual abuse material (CSAM) under the Child Trafficking and Pornography Act, 1998.

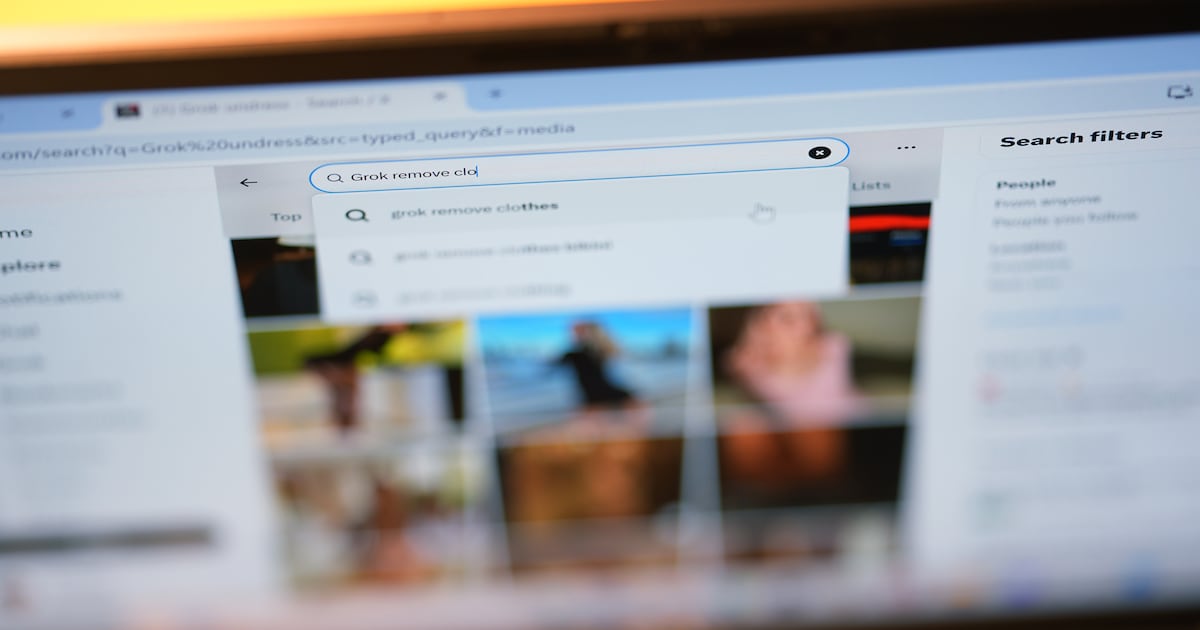

Last month Grok, the artificial intelligence chatbot that is based on the social network X, was given the ability to alter images of people, including children, to remove their clothing.

This has led to accusations that the platform is enabling paedophilia and is producing CSAM and non-consensual intimate images on an industrial scale.

Genevieve Oh, a social media researcher, has estimated that the AI is creating 6,700 sexually suggestive images an hour. A number of Ministers and high-profile politicians have deleted their X accounts in response and there have been calls for Mr Musk’s company to be investigated by gardaí.

Mick Moran, a former garda who is chief executive of Hotline, a Government- and industry-funded company that removes instances of child sexual abuse material on the internet. Photo: Bryan O’Brien / The Irish Times

Mr Moran, who is the chief executive of Hotline, a Government- and industry-funded company that removes instances of CSAM on the internet, pointed to the definition of “child pornography” under section 2 of the 1998 Act.

This defines illegal material as representations of children “engaged in explicit sexual activity”, children witnessing such activity, or material “whose dominant characteristic is the depiction, for a sexual purpose, of the genital or anal region of a child”.

The images being generated by Grok do not meet this definition from a legal perspective, Mr Moran said on Sunday.

“When I’m talking about CSAM, I’m talking about child pornography as defined under section 2 of the Act,” he said.

“If it is a picture of a child in underwear, under the law it is not CSAM. There must be a sexual aspect or a focus on the genital areas,” said Mr Moran, who during his career with the Garda was seconded to Interpol for 11 years where he helped lead child protection efforts internationally.

This is the case even if the images are generated by child abusers for a sexual purpose, he said.

The law is drafted in such a way as not to criminalise “regular family photographs” that may feature children in a state of undress, he said.

He said none of the mainstream AI platforms will generate CSAM as it is defined under Irish law.

However, Mr Moran said “nudified” images created by Grok without the subject’s consent may form part of a crime under the Harassment, Harmful Communications and Related Offences Act 2020.

The Act, commonly known as Coco’s law, criminalises the sharing of non-consensual intimate images “with intent to do harm”.

Mr Moran said that, regardless of the current law, he sees “no legitimate reason” for “nudification technology”.

The former garda also warned of the dangers of people using their own AI tools to convert images of children into sexually explicit material for their own use or onward distribution.

Custom AI tools, with similar capabilities to Grok and other mainstream platforms, are freely available for download, he said.

“They’re essentially a machine which will produce whatever might be inside your head. It’s like the ever-lasting bottle of Guinness for an alcoholic.”


The Irish Council for Civil Liberties and Digital Rights Ireland have written to Garda Commissioner Justin Kelly urging him to open an investigation into Grok.

It is not clear whether such an investigation is under way. However, in a statement, the Garda urged anyone who encounters CSAM online or is a victim of non-consensual intimate image sharing to make a criminal complaint.