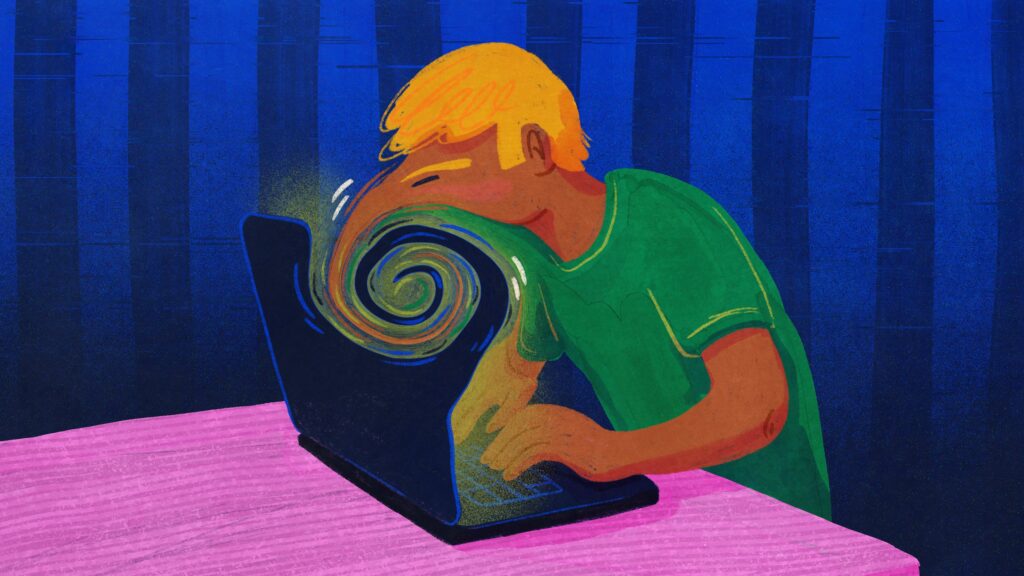

Messianic delusions. Paranoia. Suicide. Claims that AI chatbots may be “triggering” mental illness and psychotic episodes, even in seemingly healthy people with no prior history of these conditions, have popped up in media reports and Reddit posts in recent months. The parents of a teenage boy sued OpenAI last week, alleging ChatGPT encouraged him to end his life.

These anecdotes, especially when paired with sparse clinical research, have left psychologists, psychiatrists, and other mental health providers scrambling to better understand this emerging phenomenon.

STAT talked with a half-dozen medical professionals to understand what they are seeing in their clinics. Is this a new psychiatric condition, likely to appear in the next edition of the diagnostic manual? Or is this something else? Most likely, it’s the latter, they said.
