If you’ve ever had a pet, you’ve probably had a moment where you looked at them and thought, “I wonder what’s going on in that stupid, furry, little, adorable brain of theirs.” Well, good news: you can keep wondering, because these smart glasses I saw at CES 2026 almost for sure won’t reveal anything.

Introducing the Syncglasses G2 from a company called Chmu Technology. They are, as I mentioned before, smart glasses, and they have displays, speakers, and cameras. It’s not the hardware that has me writing these words right now, though; it’s the (purported) features. The Syncglasses G2 bill themselves as “the world’s first AI glasses with non-contact health monitoring,” and among their capabilities is, I kid you not, something called “pet translation.” Imagine my surprise while flipping through a brochure for the Syncglasses G2 and seeing this.

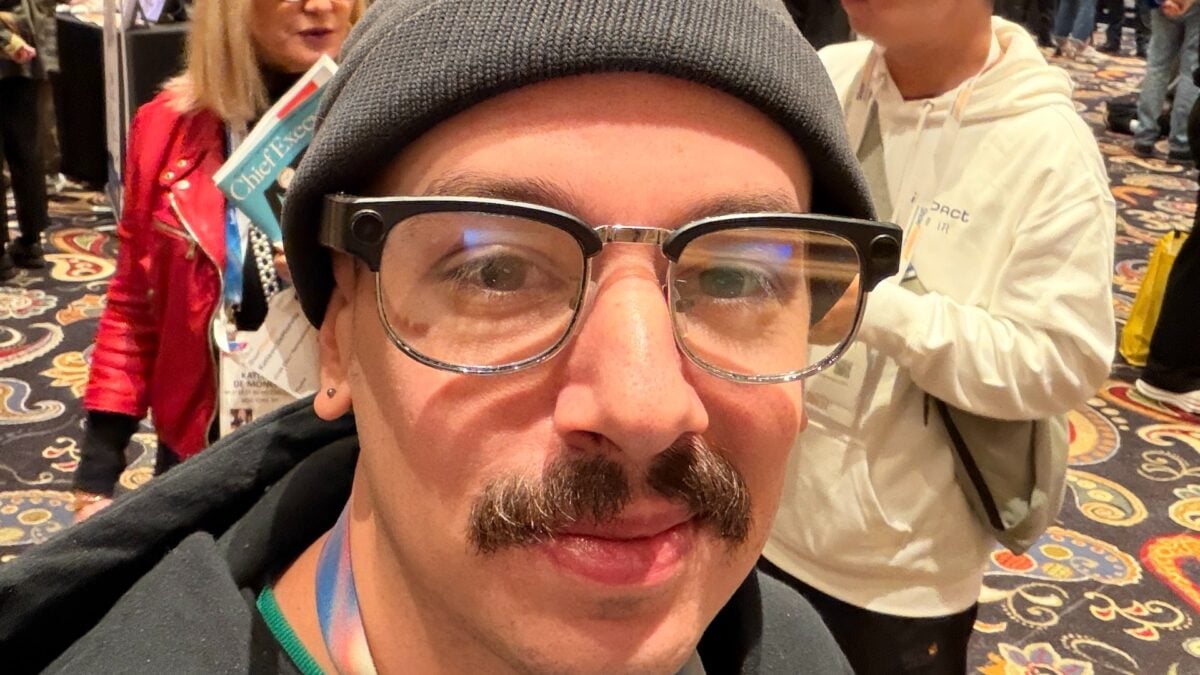

© James Pero / Gizmodo


After seeing this quirky little picture showing “pet translation,” I incredulously questioned a representative for Chmu Technology by looking him in the eyes and uttering simply, “pet translation?!” He informed me that the Syncglasses G2 can allegedly use AI to understand pet behavior. To achieve this groundbreaking advancement in pet tech, Chmu Technology apparently bought data on animals, including videos, and then trained an AI to analyze it. Sure, okay!

There were no pets in the room for me to converse with, so I was shown a video demo of how the feature works that was almost certainly generated by AI. In this illuminating video, a (probably) AI cat mills around its (probably) AI litter box that is for some reason in the middle of the (probably) AI room until the glasses tell their (probably) AI wearer that they should… probably change the litter. Could you divine that the litter needs to be emptied by just looking at it? Most likely, but using AI is just so much more… AI.

If you read that and thought to yourself, “Sounds sus,” well, I’m right there with ya, fam. I’m no biologist, but I think truly understanding animal behavior is just slightly more complex than training an AI on videos scraped from TikTok of people’s pets doing random stuff. But hey, this is the AI age we live in.

I don’t mean to dismiss applications for AI in helping us do stuff, or computer vision for smart glasses that actually could be very helpful for people with low vision. But I’d be lying if I said I wasn’t tired of the deluge of AI in gadgets that don’t seem to really know how or when to use it. But hey, it’s CES after all, and if nothing else, stuff like this is good for telling us what the future almost certainly will not look like.

Gizmodo is on the ground in Las Vegas all week bringing you everything you need to know about the tech unveiled at CES 2026.