Israeli company Lightricks has open-sourced its 19-billion-parameter model LTX-2. The system generates synchronized audio-video content from text descriptions and claims to be faster than competitors.

According to the technical report, the model generates up to 20 seconds of video with synchronized stereo audio from a single text prompt. This includes lip-synced speech, background sounds, foley effects, and music matched to each scene. The full version of LTX-2 reaches 4K resolution at up to 50 frames per second, Lightricks says.

The researchers argue that existing approaches to audiovisual generation are fundamentally flawed. Many systems work sequentially – first generating video, then adding audio, or vice versa. These decoupled pipelines can’t capture the true joint distribution of both modalities. While lip synchronization depends primarily on audio, the acoustic environment is shaped by visual context. Only a unified model can handle these bidirectional dependencies.

Why asymmetric architecture matters for audio-video generation

LTX-2 runs on an asymmetric dual-stream transformer with 19 billion parameters total. The video stream gets 14 billion parameters – significantly more capacity than the audio stream’s 5 billion. According to the researchers, this split reflects the different information density of each modality.

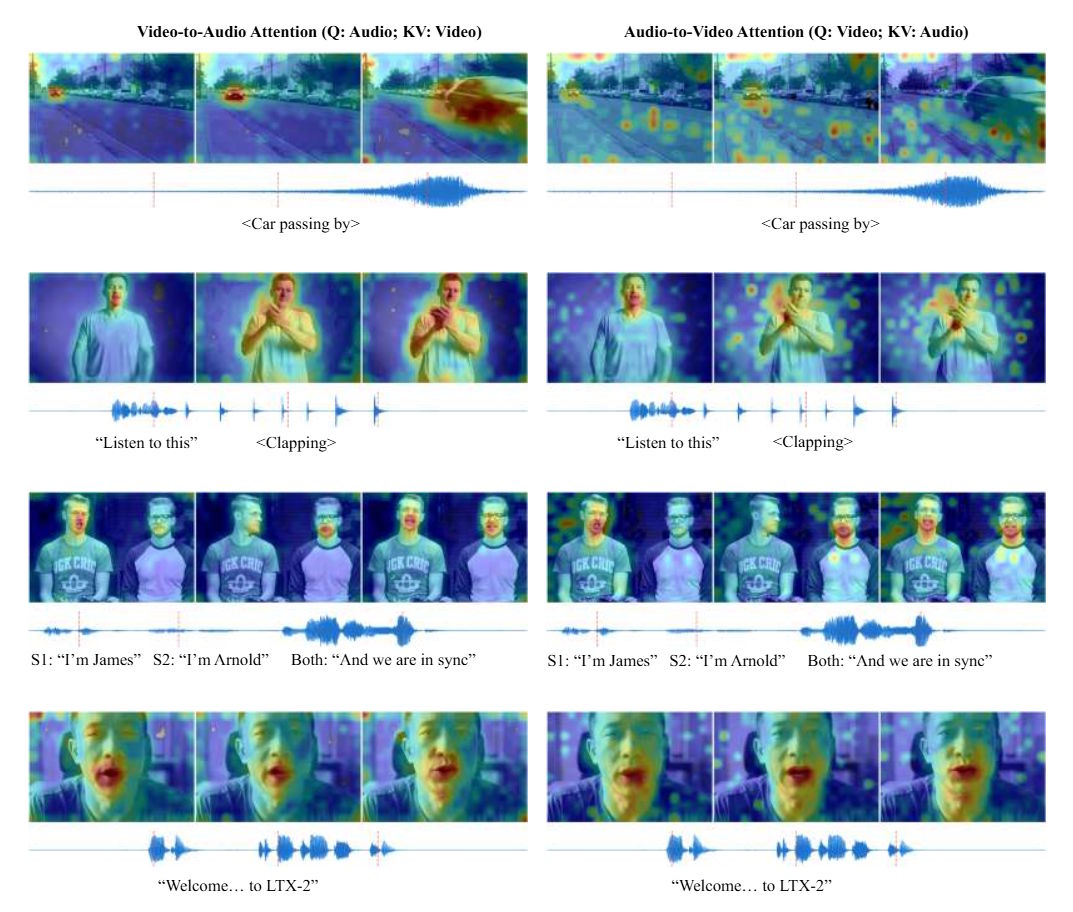

Both streams use separate variational autoencoders for their respective modalities. This decoupling enables modality-specific position encodings: 3D rotary position embeddings (RoPE) for the video’s spatial-temporal structure, and one-dimensional embeddings for audio’s purely temporal dimension. Bidirectional cross-attention layers connect both streams, precisely linking visual events like an object hitting the ground with corresponding sounds.

The cross-attention maps show how LTX-2 links visual and audio elements.

The cross-attention maps show how LTX-2 links visual and audio elements.

For text understanding, LTX-2 uses Gemma3-12B as a multilingual encoder. Instead of querying just the language model’s final layer, the system taps all decoder layers and combines their information. The model also uses “thinking tokens” – additional placeholders in the input sequence that give it more room to process complex prompts before generation begins.

Speed gains put LTX-2 ahead of competitors

LTX-2 shows significant advantages in inference speed, according to benchmarks. On an Nvidia H100 GPU, the model needs 1.22 seconds per step for 121 frames at 720p resolution. The comparable Wan2.2-14B, which generates video only without audio, takes 22.30 seconds. That makes LTX-2 18 times faster, according to Lightricks.

The maximum video length of 20 seconds also beats the competition: Google’s Veo 3 manages 12 seconds, OpenAI’s Sora 2 reaches 16 seconds, and Character.AI’s open-source model Ovi hits 10 seconds. In human preference studies, LTX-2 “significantly outperforms” open-source alternatives like Ovi and achieves results comparable to proprietary models like Veo 3 and Sora 2.

The researchers acknowledge several limitations, though. Quality varies by language – speech synthesis can be less precise for underrepresented languages or dialects. In scenes with multiple speakers, the model occasionally assigns spoken content to the wrong characters. Sequences over 20 seconds can suffer from temporal drift and degraded synchronization.

Open source release challenges closed API approach

Lightricks explains its decision to open-source the model as a critique of the current market. “I just like don’t see how you can achieve it with closed APIs,” says Lightricks founder Zeev Farbman in the announcement video about the promises of current video generation models. The industry is stuck in a gap: on one hand, you can create impressive results, but on the other, you’re far from the level of control professionals need.

The company also takes an explicitly ethical stance. “Artificial intelligence can augment human creativity and human intelligence. What concerns me is that someone else will own my augmentation,” Farbman continues. The goal is to run AI on your own hardware, on your own terms, and make ethical decisions with a broad community of creators rather than outsourcing them to a select group with its own interests.

Beyond the model weights, the release includes a distilled version, several LoRA adapters, and a modular training framework with multi-GPU support. The model is optimized for Nvidia’s RTX ecosystem and runs on consumer GPUs like the RTX 5090 as well as enterprise systems. Model weights and code are available on GitHub and Hugging Face, and there’s a demo on the company’s content platform after free registration.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive “AI Radar” Frontier Report 6× per year, access to comments, and our complete archive.