A recent study published in Communications Psychology (a Nature group journal) revealed a surprising twist: responses generated by artificial intelligence (AI) were often perceived as more compassionate and empathetic than those from human mental health professionals, even when participants knew they were interacting with an AI (Chaudhry et al., 2024). This finding opens new avenues for mental health care, especially in India, where stigma continues to deter many from seeking conventional therapy.

Mental health (Image by Freepik)

Although awareness about mental health is growing, many individuals in India remain reluctant to seek professional support. A 2021 UNICEF-Gallup survey found that only 41% of Indian adolescents were open to seeking help for mental stress, underscoring persistent stigma and limited access to care (UNICEF & Gallup, 2021). In many families, mental health challenges are still perceived as personal failings or sources of shame, leaving numerous students to face anxiety, depression, and burnout in silence.

NCRB data

India’s education system is notoriously competitive. Entrance exams such as JEE, NEET, UPSC, and CUET often determine students’ academic and career futures, creating immense psychological strain. While some institutions have begun adopting a more holistic admissions approach—considering extracurricular activities, leadership, and emotional intelligence—test scores remain the primary criterion.

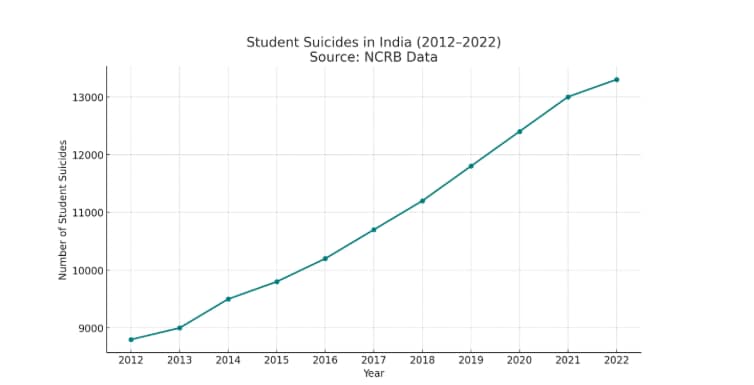

The consequences are severe. According to the National Crime Records Bureau (NCRB), over 13,000 student suicides were recorded in 2021 alone—an average of more than one every hour (NCRB, 2021). Mental health services in educational institutions remain inadequate, further exacerbating this crisis.

Within this high-pressure context, AI tools like ChatGPT have emerged as unexpected sources of comfort. Accessible 24/7 and providing anonymous, nonjudgmental interaction, these platforms create safe spaces for individuals hesitant to seek traditional therapy due to fear or shame.

While AI-powered tools hold great promise, implementing them effectively in India’s diverse socio-cultural landscape poses challenges. Limited internet access and digital literacy in rural areas may restrict AI therapy’s reach, exacerbating existing urban-rural mental health disparities. Additionally, AI algorithms can inherit biases from training data, potentially affecting cultural sensitivity and response appropriateness. Ensuring transparency, ethical safeguards, and continuous human oversight is essential to prevent unintended harm and maintain trust among users.

AI therapists present distinct advantages compared to their human counterparts. Unlike human therapists who may sometimes exhibit judgment, AI systems provide non-judgmental support. While human therapists have limited availability, AI-powered therapy is accessible around the clock. Cost barriers prevalent in traditional therapy are minimised, as AI therapy is typically low-cost or free. Furthermore, human therapists may experience emotional fatigue that affects the quality of care, whereas AI systems maintain consistent attentiveness. Finally, while privacy risks exist in human therapy due to varying confidentiality practices, AI platforms generally maintain controlled and secure data environments.

One reason AI therapy often feels more compassionate is that AI models are trained on extensive datasets of supportive, empathetic language derived from counselling transcripts, therapeutic literature, and social conversations. This enables AI to consistently generate responses that validate emotions, use comforting phrases, and avoid bias. Unlike humans, AI does not experience fatigue, stress, or distraction, allowing it to maintain a patient and attentive tone throughout every interaction. Moreover, AI can tailor its language to reflect cultural sensitivity and individual contexts when properly trained, making interactions feel personalised and understanding. This combination of consistent empathy, non-judgmental listening, and adaptability helps AI bridge the emotional gap many individuals feel with human therapists.

Research published in JMIR Mental Health (2024) found that third-party evaluators frequently rated AI responses as more compassionate than those of human therapists in text-based counselling (Zhou et al., 2024). Sometimes, simply being heard without judgment brings significant relief to struggling students.

Beyond measurable compassion ratings, it is worthwhile to examine the linguistic qualities that contribute to AI’s perceived empathy.

Phrases such as “I understand that this is difficult” or “It’s okay to feel that way” are commonly used by AI models like ChatGPT. These statements provide a sense of empathy, safety, and nonjudgment that many human therapists may unintentionally miss due to time constraints, personal biases, or emotional fatigue. Understanding these specific language patterns may help human therapists adopt some of AI’s linguistic strengths to bridge the empathy gap and improve mental health counselling across both human and AI platforms.

AI is not a substitute for trained mental health professionals, especially in cases involving trauma or severe mental health disorders. However, it can serve as a valuable first step, offering preliminary assessments, coping strategies, and emotional support to break the silence.

Initiatives like Kerala’s Jeevani Mental Health Programme demonstrate how technology can complement human care, particularly in educational environments where counselling resources remain scarce (Government of Kerala, 2022).

To actualise this hybrid potential, policymakers and educational institutions must prioritise expanding digital infrastructure and training mental health professionals to collaborate with AI tools. Integrating AI-based assessments into existing counselling services can effectively triage students, ensuring those at risk receive timely human intervention. Furthermore, establishing clear guidelines around data privacy, algorithmic transparency, and cultural customisation will help scale AI adoption responsibly. By fostering public-private partnerships and investing in awareness campaigns, India can create an inclusive ecosystem that destigmatises mental health and leverages AI innovation for lasting impact.

While AI may never fully replicate human empathy, it has the potential to normalise mental health discussions in a society still burdened by stigma. For many Indian students caught in an unforgiving academic system, having a space to express themselves without fear of judgment could mark the beginning of healing. As India progresses, combining AI’s accessibility with human expertise may offer a pragmatic, compassionate solution for supporting young people weighed down by invisible societal pressures.

This article is authored by Kushal Sachdeva, founder and CEO, Protect Paws NGO. He is a writer and a passionate learner of emerging technologies.