A group of researchers from Apple and Tel Aviv University figured out a way to speed up AI-based text-to-speech generation without sacrificing intelligibility. Here’s how they did it.

An interesting new approach to generating speech faster

In a new paper titled “Principled Coarse-Grained Acceptance for Speculative Decoding in Speech,” Apple researchers detail a way to speed up speech generation from text.

While there are currently multiple approaches to generating speech from text, the researchers focused on autoregressive text-to-speech models, which generate speech tokens one at a time.

If you’ve ever looked up how most large language models work, you’re probably familiar with autoregressive models, which predict the next token based on all the tokens that came before.

Autoregressive speech generation works in a generally similar way, except that the tokens represent audio chunks rather than words or characters.
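As a rough illustration of that one-token-at-a-time loop, here is a minimal Python sketch. The `toy_next_token_probs` function and the vocabulary size are hypothetical stand-ins for a real speech model, which would be a neural network scoring thousands of acoustic tokens.

```python
import random

VOCAB_SIZE = 8  # hypothetical number of distinct acoustic tokens


def toy_next_token_probs(history):
    """Hypothetical stand-in for a speech model: maps the token history
    to a probability distribution over the next acoustic token."""
    # Seeding from the history makes this toy "model" deterministic.
    rng = random.Random(31 * len(history) + sum(history))
    weights = [rng.random() for _ in range(VOCAB_SIZE)]
    total = sum(weights)
    return [w / total for w in weights]


def generate(prompt_tokens, num_new_tokens):
    """Generate tokens one at a time, each conditioned on all earlier ones."""
    tokens = list(prompt_tokens)
    for _ in range(num_new_tokens):
        probs = toy_next_token_probs(tokens)
        # Greedy choice: take the most likely next token.
        next_token = max(range(VOCAB_SIZE), key=lambda t: probs[t])
        tokens.append(next_token)
    return tokens


print(generate([1, 2], num_new_tokens=5))
```

Each new token needs its own model call, and each call has to wait for the previous one, which is why this loop becomes the slow part of generation.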

And while this approach works, generating tokens one at a time creates a processing bottleneck. Speculative decoding, in which a small model drafts tokens for a larger model to verify, is a common way around it for text models. For speech, though, it runs into a problem, as Apple’s researchers explain:

“However, for speech LLMs that generate acoustic tokens, exact token matching is overly restrictive: many discrete tokens are acoustically or semantically interchangeable, reducing acceptance rates and limiting speedups.”

In other words, standard speculative decoding can be too strict for speech models, often rejecting drafted tokens that would be good enough, simply because they don’t match the exact token the larger model expects. This, in turn, slows everything down.
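To make that strictness concrete, here is a minimal Python sketch of exact-match verification. It is a greedy simplification (real speculative decoding compares probabilities rather than single picks), and the token IDs are made up for illustration.

```python
def verify_exact(draft_tokens, target_tokens):
    """Accept draft tokens only while they exactly match the target's picks.

    target_tokens[i] is assumed to be the larger model's choice at
    position i, given the draft tokens before it.
    """
    accepted = []
    for drafted, expected in zip(draft_tokens, target_tokens):
        if drafted != expected:
            break  # first mismatch: discard this token and everything after it
        accepted.append(drafted)
    return accepted


# Hypothetical token IDs: suppose 7 and 8 sound nearly identical.
draft = [3, 7, 12, 4]
target = [3, 8, 12, 4]
print(verify_exact(draft, target))  # [3]
```

Even though only one drafted token differs, and only slightly, three of the four drafted tokens are thrown away.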

Enter Principled Coarse-Graining (PCG)

In a nutshell, Apple’s solution is based on the premise that many different tokens can produce nearly identical sounds.

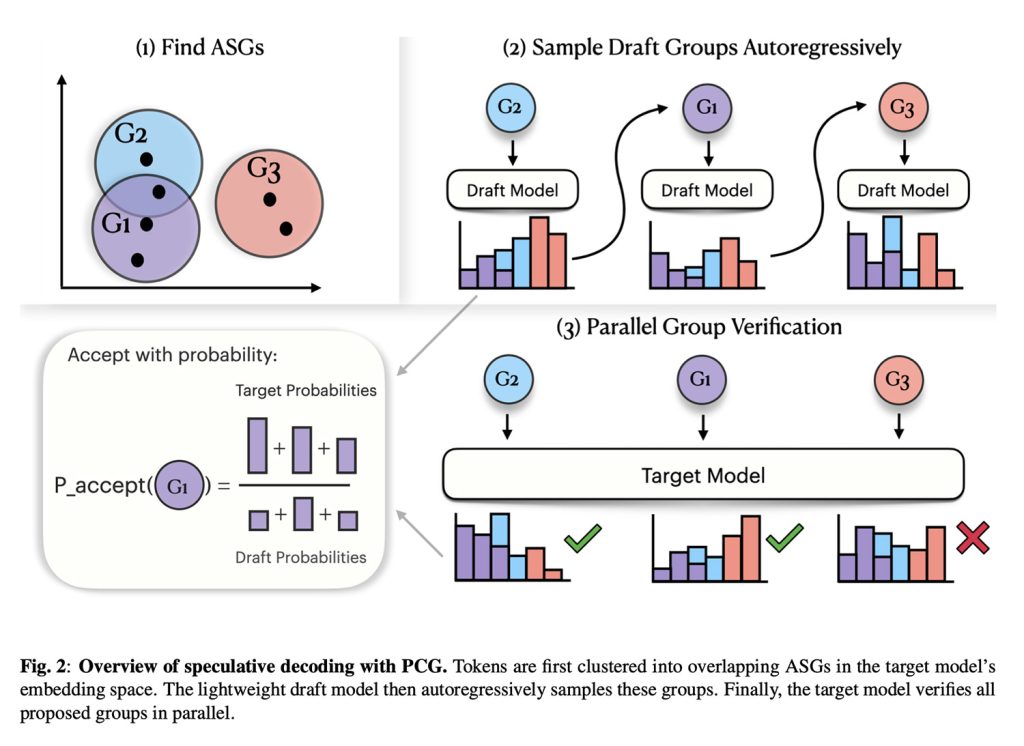

With that in mind, Apple groups speech tokens that sound similar, creating a more flexible verification step.

Put another way, rather than treating every possible sound as completely distinct, Apple’s approach allows the model to accept a token that belongs to the same general “acoustic similarity” group.
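The article does not spell out how the groups are built, so the following is only a sketch of one plausible approach: assume each token has an embedding vector, and put tokens whose embeddings are very similar into the same group. The embeddings, the cosine threshold, and the greedy grouping rule are all assumptions made for illustration, not the paper's procedure.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def group_tokens(embeddings, threshold=0.95):
    """Greedy grouping: a token joins the first group whose representative
    (its first member) is at least `threshold` similar, else starts a new one."""
    representatives = []  # one embedding per group
    token_to_group = {}
    for token_id, emb in enumerate(embeddings):
        for group_id, rep in enumerate(representatives):
            if cosine(emb, rep) >= threshold:
                token_to_group[token_id] = group_id
                break
        else:
            token_to_group[token_id] = len(representatives)
            representatives.append(emb)
    return token_to_group


# Hypothetical 2-D embeddings for four tokens.
embeddings = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0], [0.02, 0.98]]
print(group_tokens(embeddings))  # {0: 0, 1: 0, 2: 1, 3: 1}
```

The output is a lookup table from token ID to group ID, which is all a verification step would need at decoding time.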

PCG relies on two models: a smaller draft model that quickly proposes speech tokens, and a larger judge model that checks whether those tokens fall into the right acoustic group before accepting them.
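A minimal Python sketch of that group-level check might look like the following. It is a greedy simplification under stated assumptions: the `token_to_group` table and token IDs are hypothetical, and the paper's actual acceptance rule may well operate on probabilities rather than on single picks.

```python
def verify_by_group(draft_tokens, judge_tokens, token_to_group):
    """Accept a drafted token if it shares a group with the judge's pick.

    judge_tokens[i] is assumed to be the judge model's choice at
    position i, given the draft tokens before it.
    """
    accepted = []
    for drafted, expected in zip(draft_tokens, judge_tokens):
        if token_to_group[drafted] != token_to_group[expected]:
            break  # different acoustic group: reject from here on
        accepted.append(drafted)
    return accepted


# Hypothetical table: tokens 7 and 8 belong to the same acoustic group.
token_to_group = {3: 0, 7: 1, 8: 1, 12: 2, 4: 3}

draft = [3, 7, 12, 4]
judge = [3, 8, 12, 4]
print(verify_by_group(draft, judge, token_to_group))  # [3, 7, 12, 4]
```

With an exact-match check, the same draft would have been cut off at the second token. Here, all four drafted tokens survive because 7 and 8 count as interchangeable.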

The result is a framework that adapts the concepts of speculative decoding (SD) to LLMs that generate acoustic tokens, which in turn accelerates speech generation while ensuring intelligibility.

And speaking of results, the researchers show that PCG increased speech generation speed by about 40%, a significant improvement, given that applying standard speculative decoding to speech models barely improved speed at all.
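Why does the acceptance rate matter so much for speed? Under the usual simplifying assumption in speculative decoding analyses, that each drafted token is accepted independently with the same probability, the expected number of tokens produced per pass of the large model has a simple closed form. The sketch below computes it in Python. The acceptance rates and draft length are illustrative values, not figures from the paper.

```python
def expected_tokens_per_step(acceptance_rate, draft_length):
    """Expected tokens produced per large-model pass, assuming each of
    `draft_length` drafted tokens is accepted independently with
    probability `acceptance_rate`: (1 - a^(n+1)) / (1 - a)."""
    a = acceptance_rate
    if a >= 1.0:
        # Every drafted token is accepted, plus one token from the large model.
        return draft_length + 1
    return (1 - a ** (draft_length + 1)) / (1 - a)


# Illustrative acceptance rates with 4 drafted tokens per step.
for rate in (0.2, 0.5, 0.8):
    print(rate, round(expected_tokens_per_step(rate, 4), 3))
```

When few drafted tokens are accepted, each expensive pass yields barely more than one token, so the drafting overhead eats most of the gain. Raising the acceptance rate is what turns speculative decoding into a real speedup, although the wall-clock gain also depends on how cheap the draft model is.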

At the same time, PCG kept word error rates lower than prior speed-focused methods, preserved speaker similarity, and beat those methods on naturalness, with a score of 4.09 (a standard 1–5 human rating of how natural the speech sounds).

In one stress test (an ablation on intra-group token substitution), the researchers replaced 91.4% of speech tokens with alternatives from the same acoustic group, and the audio still held up, with only a 0.007 increase in word error rate and a 0.027 drop in speaker similarity.
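The general shape of such a substitution test can be sketched in a few lines of Python. This is an illustration only: the group table and token sequence are hypothetical, and the sketch stops at swapping tokens, whereas the real experiment would go on to synthesize audio and measure word error rate and speaker similarity.

```python
import random


def substitute_within_groups(tokens, token_to_group, rng):
    """Replace each token with a different token from the same group.

    Tokens that are alone in their group are left unchanged.
    Returns the new sequence and the fraction of tokens replaced.
    """
    group_members = {}
    for tok, grp in token_to_group.items():
        group_members.setdefault(grp, []).append(tok)

    substituted = []
    replaced = 0
    for tok in tokens:
        alternatives = [t for t in group_members[token_to_group[tok]] if t != tok]
        if alternatives:
            substituted.append(rng.choice(alternatives))
            replaced += 1
        else:
            substituted.append(tok)
    return substituted, replaced / len(tokens)


# Hypothetical groups: {0, 1}, {2, 3}, and token 4 on its own.
token_to_group = {0: 0, 1: 0, 2: 1, 3: 1, 4: 2}
new_tokens, fraction = substitute_within_groups(
    [0, 2, 4, 1, 3], token_to_group, random.Random(0)
)
print(new_tokens, fraction)  # [1, 3, 4, 0, 2] 0.8
```

If the groups really do capture acoustic similarity, audio decoded from the substituted sequence should sound close to the original, which is what the reported numbers suggest.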

What PCG could mean in practice

While the study doesn’t discuss what its findings could mean in practice for Apple products and platforms, this approach could be relevant for future voice features that need to balance speed, quality, and efficiency.

Importantly, this approach is a decoding-time change. In other words, it can be applied to existing speech models at inference time, without retraining the target model or changing its architecture.

Moreover, PCG requires minimal additional resources (only about 37MB of memory to store the acoustic similarity groups), making it practical for deployment on devices with limited memory.

To find out more about PCG, including in-depth technical details on datasets and additional context on the evaluation methods, see the full paper, “Principled Coarse-Grained Acceptance for Speculative Decoding in Speech.”
