AI chatbots offering legal advice to parents on how to get support for children with special needs are operating without professional oversight, The Times has learnt.

Experts have raised serious concerns over the accuracy, accountability and regulation of artificial intelligence tools that are providing guidance to families.

One tool is AskEllie, an AI-powered “legal assistant” created by Oliver Lee, a father of two neurodiverse boys. He has no formal legal training.

Promoted as a free resource to help families navigate the complex special educational needs and disabilities (Send) system, AskEllie offers guidance on securing vital support, including legally binding education, health and care plans (EHCPs).

According to its website, the generative AI model is “trained on UK Send law and government guidance” and aims to help parents interpret legal documents and understand their rights. It carries a disclaimer urging users to consult qualified professionals and acknowledging that errors may occur.

Lee said the tool was not “competing with experts but filling a gap”, giving parents access to support where “none exists”.

However, a review of the bot’s responses has prompted concern among experts, who say it gives generic advice in one of the most complex areas of education law. In one test scenario, The Times asked the bot about the support available for a child who had anxiety, a condition that may not necessarily require an EHCP.

Demand for EHCPs is at a record high. Applications are rising and the system is buckling under the pressure. Ministers have said the present model is financially unsustainable. Reforms are expected this autumn.

In the test scenario, AskEllie recommended applying for an EHCP in the first instance and then shared a template letter. When asked whether behavioural examples from outside school could strengthen the case, it provided nine sample scenarios suitable for direct inclusion in an application.

“At a family gathering, my child became overwhelmed with the number of people and retreated to a quiet room,” one example read. “They struggled to interact with relatives and ended up feeling isolated, which affects their social development.”

While such examples may resonate with parents, Rob Price, a specialist in Send and education law, cautioned that packaged AI content risked undermining the case-by-case nature of the law.

The HCB solicitor said: “Send law is extremely complex and nuanced, so you’ve got to be careful with trusting what an AI chatbot says and how accurate it is to your particular circumstances.”

Price said specific legal guidance could not reliably be replicated by an AI tool, adding that doing so got “into really dangerous territory”.

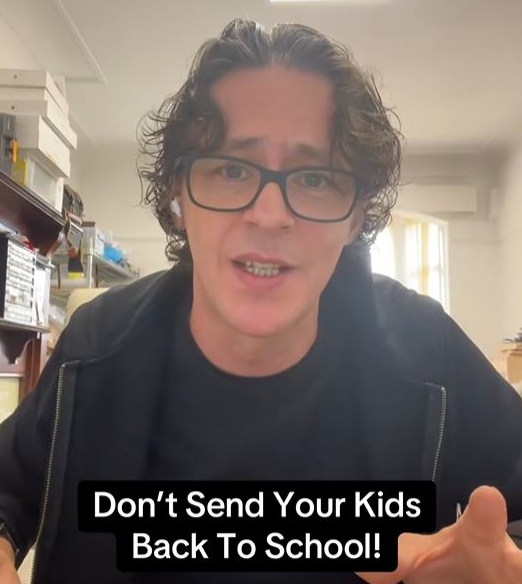

AskEllie has proven especially popular on TikTok, where it has attracted more than 24,000 followers, and Lee has been nominated for a National Diversity Award for his work. On the social media platform, AskEllie has pinned a video titled “Don’t Send Your Kids Back to School!” that has been viewed more than half a million times.

The one-minute post, published last month, addresses false rumours circulating on social media that schools are implementing lockdown procedures in response to threats of violence from migrants. The video confirms there is “no official evidence” to support the rumours, but the context is only explained fully in a separate video further down the feed.

The pinned video has drawn more than 700 comments, which cite further confusion about the rumours and include accusations of “scaremongering”.

Lee defended the video and its headline, saying it aimed to counter widespread misinformation circulating at the time.

Price said the growing reliance on social media and AI tools reflected “a symptom of a broken system in urgent need of reform”.

Given the complexity of securing appropriate support, he said it was “unsurprising” that parents turned to unregulated resources, even though these often lacked the qualified advice such matters required.

Contact, the national Send charity, said AI tools often gave inaccurate or misleading advice, sometimes wrongly attributed to it. It said it had heard from parents misinformed by AI summaries surfaced in search results and stressed that such tools could strip away vital context.

Independent Provider of Special Education Advice, England’s leading Send legal charity, said AI tools were “not yet reliable in interpreting and applying legal definitions and principles” in Send law.

Lee said he “take[s] the responsibility of building this platform seriously,” adding: “If AskEllie ever gave wrong or dangerous advice, I’d want to know and fix it.” He explained that AskEllie was “not a company or commercial product” but something he built “entirely on my own as a parent trying to help other parents”.

He added: “I don’t charge for the site. I make no money from it. There are no ads, no data collection and no financial motive. It exists because families are struggling.”

The Department for Education said: “Parents seeking advice about support for their child should do so through official channels, to ensure information is reliable … We’re already taking action to reform the broken Send system this government inherited and make sure that evidence-based support is available at the earliest stage, from significant investment in places for children with Send, to improved teacher training, to embedding Send leads in our ‘best start family hubs’ in every local area.”