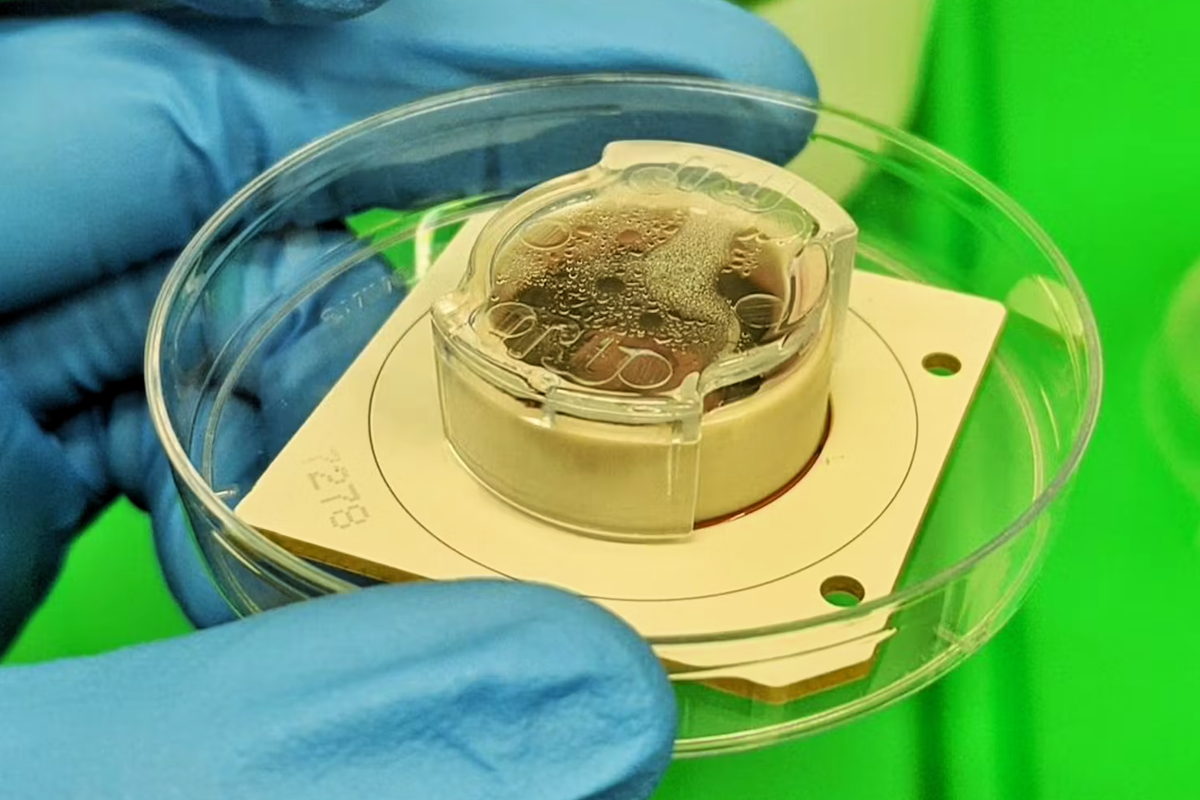

In 2021, a dish of living human brain cells figured out how to play the 1970s arcade game Pong. It took just five minutes for the collection of neurons, called DishBrain, to learn how to move the paddle and hit the ball, marking the first time that a lab-grown neural network had ever completed a goal-oriented task.

In a study published the following year, researchers at the Australian startup Cortical Labs said the breakthrough not only offered new insights into how the brain works, but could provide the platform for a new era of ultra-intelligent biological computers capable of thinking like a human.

Cortical Labs referred to it not as artificial intelligence, but actual intelligence. By combining real neurons with hardware, biocomputers have the potential to solve general tasks that current AI systems struggle with, while also requiring just a fraction of the energy.

Hundreds of millions of years of evolution have made the human brain extremely energy efficient. The 86 billion neurons of an average brain require just 20 watts of power to function – roughly the same amount as an LED bulb.

By contrast, the inefficient architecture of current artificial intelligence systems means that even simple tasks require massive amounts of power. Facial recognition, for example, takes an AI system thousands of times more energy than a human needs to recognise a face.

Global energy consumption for AI has risen tenfold since OpenAI launched ChatGPT, according to estimates from Deloitte (ClearView AI)

One week before the DishBrain study was published in the journal Neuron, OpenAI launched ChatGPT. The AI chatbot quickly became a phenomenon, breaking user growth records and shifting the entire tech industry’s focus towards artificial intelligence.

Global energy consumption for AI has since risen tenfold, according to estimates from Deloitte, with that growth rate expected to continue until at least 2030.

This week, OpenAI announced a landmark AI infrastructure deal with chip maker Nvidia, which will finance the first multi-gigawatt data centres to power artificial intelligence. Nvidia founder and CEO Jensen Huang described it as “the biggest AI infrastructure project in history”.

The first part of the investment will be used to build 10 gigawatts of computing power for AI. That is equivalent to Canada’s entire data centre capacity, which covers everything from online banking and business operations to social media and streaming.

In July, Meta founder Mark Zuckerberg announced that his company will also be spending hundreds of billions of dollars to build vast AI data centres to pursue “superintelligence” – AI that can outsmart any human at any task.

The first facility, named Prometheus, will cover the same area as Manhattan. Projects on this scale come at not only a massive financial cost, but also an environmental one. A recent study by the World Resources Institute estimated that AI infrastructure will consume up to 1.7 trillion gallons of freshwater annually by 2030.

The power requirements could also set back green energy initiatives, as companies force polluting power stations to stay online to meet the growing demand. OpenAI boss Sam Altman has claimed that a new power source will be necessary to keep pace with AI development.

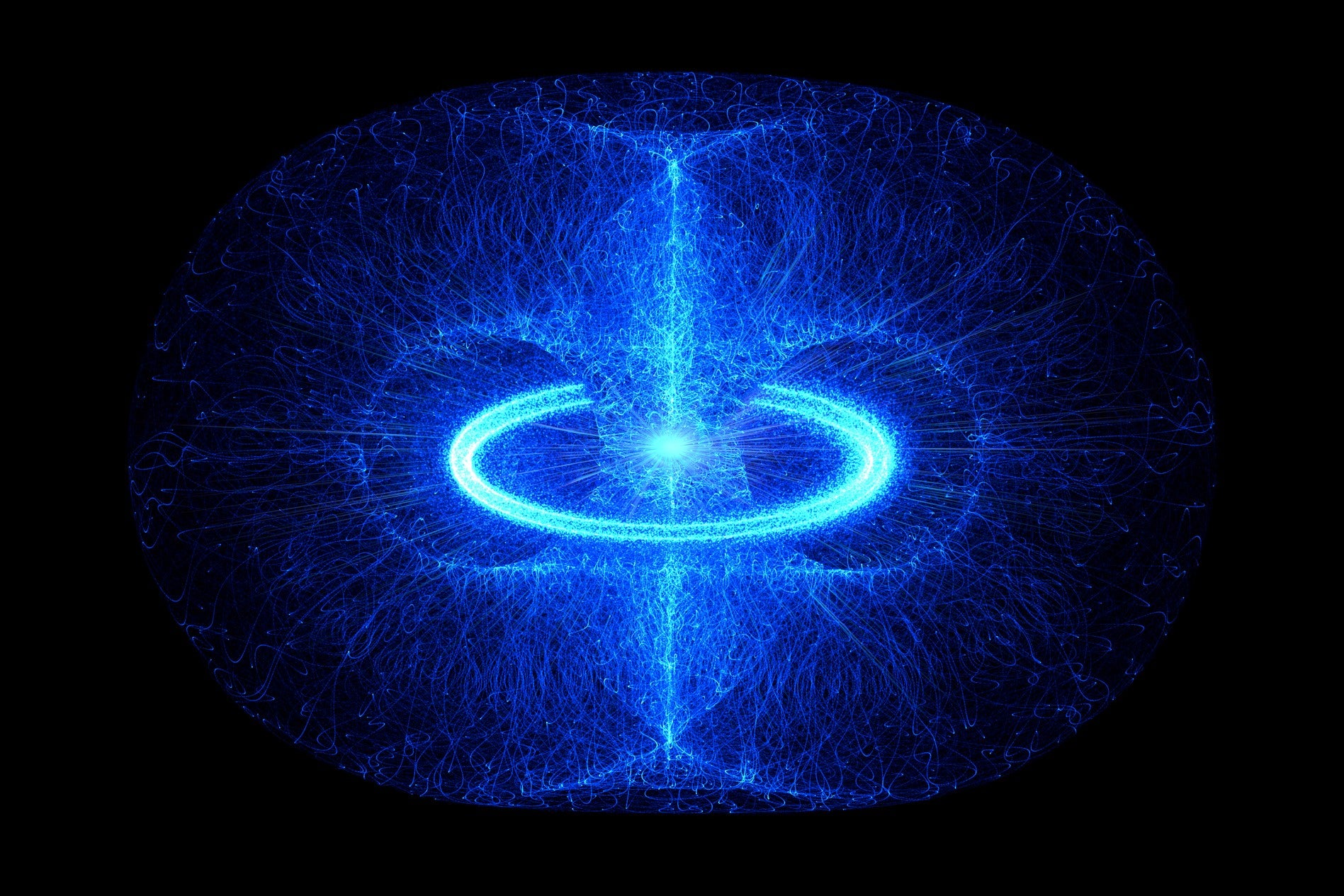

Speaking on a podcast last year, Altman suggested that nuclear fusion, which mimics the natural reactions that occur within the Sun to produce near-limitless energy, could be one solution.

“Energy is the hardest part,” he said. “Building data centres is also hard, the supply chain is hard, and then of course, fabricating enough chips is hard. But we’re going to want an amount of compute that’s just hard to reason about right now.”

The tech billionaire has invested hundreds of millions of dollars into the technology; however, it could be decades before its potential is ever realised.

Hot plasma within a nuclear fusion reaction needs to be kept stable in order to generate energy (Getty/iStock)

The alternative to seeking out huge amounts of power would be to break from the status quo of silicon-based technology. Replacing digital processors with living ones made of human neurons would cause energy demands to plummet.

“Living neurons are one million times more energy efficient than silicon,” Dr Ewelina Kurtys, a scientist at the biocomputing startup FinalSpark, told The Independent. “Apart from possible improvements in AI model generalisation, we could also reduce greenhouse emissions without sacrificing technological progress.”

Dr Kurtys acknowledges that there are still some major hurdles to overcome before biocomputers can replace conventional computers. There is still no way to program them, and there is no formal framework describing how neurons encode and process information.

“Contrary to digital computers, biocomputers are [a] real black box,” she said. “For this reason, we need a lot of experimentation to make them work. But if we find the way to control those black boxes, they can become truly powerful tools for computing.”

Dr Kurtys estimates that it will take 10 years for biocomputing systems to be realised on a commercial scale.

Cortical Labs, the company behind the Pong-playing petri dish, has said that another problem with its system is that the neurons live for only a few months in a liquid supply of nutrients. Once they die, they need to be replaced, and with no way to transfer memory, the new cells have to start learning from the beginning.

Earlier this year, the startup unveiled the world’s first commercial biological computer that runs on living human brain cells. Billed as a “body in a box”, the $35,000 CL1 machine is currently only available to researchers and still a long way off from being used to power real-world applications.

“It’s the foundation for the next stage of innovation,” said Cortical founder and chief executive Dr Hon Weng Chong. “The real impact and the real implications will come from every researcher, academic or innovator that builds on top of it.”