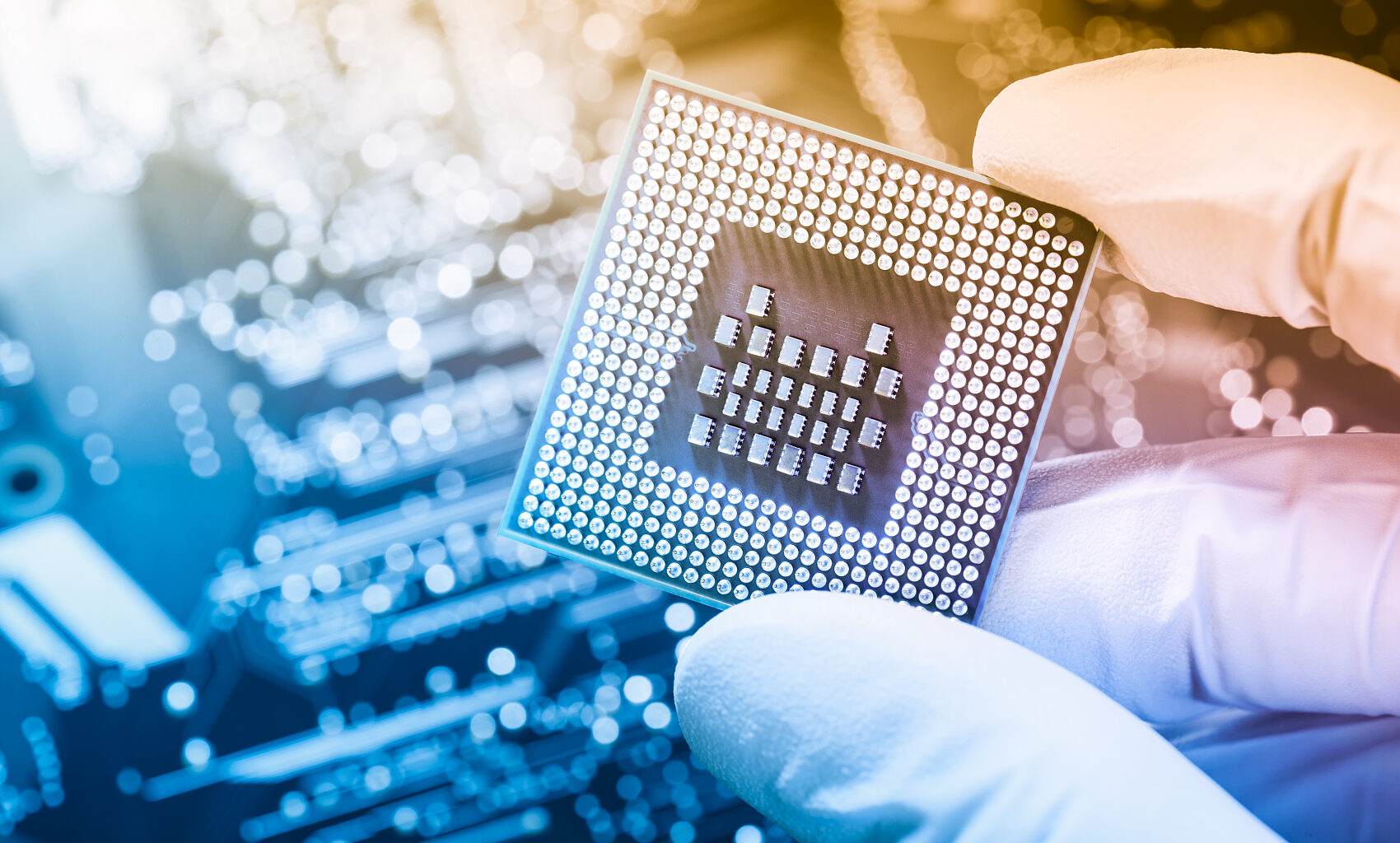

The computational power revolution drives industrial synergy.

Core Conclusion: The computing power revolution leads to industrial resonance, with structural opportunities spanning the entire value chain.

In Q3 2025, the semiconductor and AI industries are experiencing a triple resonance of ‘accelerated technological iteration, demand structure upgrades, and increased capital expenditures.’ In the semiconductor sector, technological barriers in equipment are strengthening, manufacturing capacity is tilting toward advanced processes, and demand for HBM (High Bandwidth Memory) is surging. In the AI sector, inference demand is shifting from ‘quantitative change’ to ‘qualitative change,’ with agent-based AI driving a hundredfold increase in computational needs. Cloud vendors’ total capital expenditure is expected to exceed USD 360 billion in 2025, with growth projected to surpass 30% in 2026.

From an investment logic perspective, the core industry contradiction has shifted from ‘supply constraints’ to ‘demand stratification.’ Short-term focus should be on AI chip iterations and geopolitical policies, while long-term investments should target firms offering ‘technological barriers + demand resilience.’ It is recommended to allocate ‘mid-to-high single-digit percentages’ to semiconductor leaders and AI computing power chain assets, employing a ‘pyramid accumulation + inverted pyramid selling’ strategy to manage volatility and capture long-term value.

Semiconductor Sector: Technological barriers build moats, demand differentiation reveals opportunities.

As the ‘physical foundation’ of AI computing power, this quarter’s various sub-sectors of semiconductors exhibit characteristics of ‘leaders taking the lead, niche areas booming,’ driven by technological iteration and demand upgrades.

(I) Equipment End: ASML Holding Monopolizes High-End Markets, with Technological Iteration Solidifying Long-Term Advantages

ASML Holding, the global leader in lithography systems, reported Q2 revenue of EUR 7.69 billion (up 23% year-over-year, exceeding expectations of EUR 7.51 billion), with a gross margin of 53.7% (up 2.2 percentage points year-over-year). However, its Q3 revenue guidance of EUR 7.4-7.9 billion fell short of expectations (EUR 8.21 billion), and management warned that macroeconomic and geopolitical factors may weigh on growth prospects in 2026.
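The beat and the guidance miss can be put on the same scale with a few lines of arithmetic. The sketch below is illustrative only: the helper names `midpoint` and `surprise_pct` are hypothetical, and comparing the guidance midpoint with the consensus figure is an assumption about how the shortfall is measured, not a method stated in this article.

```python
# Illustrative arithmetic on the ASML figures quoted above (EUR billions).
# Helper names are hypothetical; the midpoint comparison is an assumption.

def midpoint(low: float, high: float) -> float:
    """Midpoint of a guidance range."""
    return (low + high) / 2.0

def surprise_pct(actual: float, expected: float) -> float:
    """Percentage gap between a reported (or guided) figure and expectations."""
    if expected == 0:
        raise ValueError("expected must be non-zero")
    return (actual - expected) / expected * 100.0

q2_revenue_surprise = surprise_pct(7.69, 7.51)   # Q2 revenue vs. expectations
q3_guide_mid = midpoint(7.4, 7.9)                # midpoint of Q3 guidance
q3_guide_gap = surprise_pct(q3_guide_mid, 8.21)  # guidance midpoint vs. expectations

print(f"Q2 revenue surprise: {q2_revenue_surprise:+.1f}%")
print(f"Q3 guidance midpoint: EUR {q3_guide_mid:.2f} bn")
print(f"Q3 guidance vs. expectations: {q3_guide_gap:+.1f}%")
```

On these inputs the Q2 beat is roughly 2.4%, while the Q3 guidance midpoint sits about 6.8% below expectations, which is why the guidance outweighed the quarter's result.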

Technically, the shipment of the first next-generation high-NA EUV system (TWINSCAN EXE:5200B) in Q2 solidified its monopoly on 2nm and below process nodes. Annual R&D investment exceeding EUR 3 billion (2024 data) underpins its share of over 70% of the global EUV market. Regionally, China accounts for more than 25% of revenue, with the CEO stating that ‘the impact of U.S. tariffs on China is less than expected,’ highlighting the symbiotic relationship of ‘collaboration and competition’ within the supply chain.

(II) Manufacturing End: Taiwan Semiconductor Leads in Advanced Process Nodes, Balancing Capacity Expansion with Short-Term Profitability

Taiwan Semiconductor reported a net profit of NT$398.3 billion in Q2 (up 61% year-on-year, surpassing expectations of NT$376.42 billion). High-performance computing revenue increased by 14% quarter-on-quarter, prompting an upward revision of the 2025 revenue growth forecast to 30% (from 25%).

In terms of technology and capacity planning, mass production of the 2nm process is expected in H2 2025, with 30% of capacity located in Arizona, USA. The second wafer fab in Japan and the plant in Dresden, Germany are progressing smoothly as part of a forward-looking strategy to mitigate geopolitical risks and meet global AI compute demand. In the short term, Q3 gross margin guidance of 55.5%-57.5% (a midpoint of 56.5%, below expectations of 57.2%) reflects the impact of New Taiwan Dollar appreciation and margin dilution from overseas plants (initially 2-3 percentage points annually). However, the long-term gross margin target remains above 53%, reflecting the logic of ‘short-term concessions for long-term market share.’

(III) Memory End: Surging HBM Demand, SK Hynix Leads Structural Growth

The memory segment experienced ‘volume and price increases’ in Q2, with SK Hynix emerging as the key beneficiary. Q2 revenue reached KRW 22.23 trillion (up 35% year-on-year, up 26% quarter-on-quarter), and operating profit hit KRW 9.21 trillion (up 68% year-on-year), setting a new quarterly record. DRAM contributed 77% of revenue, driven by robust HBM demand. The company maintains its plan to double HBM sales in 2025, with the M15X wafer fab scheduled to commence operations in Q4 2025, supplying HBM from 2026 onward.

In the market landscape, the global HBM market is dominated by SK Hynix, Micron, and Samsung. SK Hynix held over 40% of the HBM market share in Q2 (according to Counterpoint data), leveraging its technological advantages in 321-layer NAND and HBM3E, underscoring that ‘the speed of technological iteration determines corporate survival.’

(IV) Design End: NVIDIA Monopolizes AI Chips, AMD Differentiates Through Competition

AI chip design exhibits a ‘one superpower, multiple strong players’ structure. NVIDIA’s Q2 data center business revenue hit $41.1 billion (up 56% year-on-year), with the GB300 NVL72 system achieving 10x higher energy efficiency and 10x improved inference performance compared to Hopper. Major forecasts predict the 2028 AI accelerator Total Addressable Market (TAM) will reach $563 billion, with NVIDIA maintaining over 85% market share. Its CUDA ecosystem serves as a core competitive advantage or ‘moat.’

AMD focuses on differentiated competition in inference scenarios, raising the average selling price of the MI350 AI chip to $25,000 (up 67% year-on-year). HSBC assesses it as ‘competitive with NVIDIA’s B200,’ with AI chip sales projected to reach $15.1 billion in 2026 (significantly higher than the previous estimate of $9.6 billion), showcasing the wisdom of ‘differentiated competition.’

AI Sector: Intensified Investment in Computing Power Infrastructure, Demand Structure Undergoing an ‘Inference Revolution’

In Q3 2025, the AI industry is shifting from being ‘training-driven’ to ‘dual-driven by training and inference,’ with the proliferation of agent-based AI driving a hundredfold increase in computational demand. Cloud providers are continuously increasing capital expenditures to offer ‘reliable infrastructure support.’

(I) Computing Power Infrastructure: Record-high Capital Expenditures by Cloud Providers, Strong Growth Outlook for 2026

In 2025, the combined capital expenditures of the five major cloud providers will exceed $360 billion, representing a 45% increase from 2024: Google raised its 2025 capital expenditure forecast to $85 billion (from $75 billion), with Q2 spending reaching $22.45 billion (a year-over-year increase of 70%), and 2026 growth expected at 30%; Meta revised its 2025 forecast to $66-72 billion (from $60-65 billion), signaling over $100 billion in 2026; Microsoft’s FY2025 figure reached $88.2 billion, with FY2026 Q1 projected to exceed $30 billion.

Structurally, 70% is allocated to AI servers and data centers, 20% to networking equipment (such as NVIDIA’s Spectrum-X Ethernet), and 10% to software ecosystems, ensuring that computing power is ‘both abundant and high-quality,’ avoiding the inefficiencies of ‘prioritizing hardware over software.’
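As a rough illustration of what these percentages mean in dollars, the sketch below applies the 70/20/10 split to the USD 360 billion floor and backs out the 2024 base implied by 45% growth. All inputs are the round figures quoted above; the variable and function names are hypothetical, and because the source figures are floors ("exceed", "surpass"), the outputs are lower bounds rather than forecasts.

```python
# Illustrative arithmetic only; inputs are the round figures quoted above (USD bn).
CAPEX_2025_BN = 360.0   # stated floor for combined 2025 capex
GROWTH_2025 = 0.45      # stated increase over 2024
GROWTH_2026 = 0.30      # stated floor for 2026 growth
ALLOCATION = {
    "ai_servers_and_data_centers": 0.70,
    "networking_equipment": 0.20,
    "software_ecosystem": 0.10,
}

def implied_prior_year(current: float, growth: float) -> float:
    """Back out last year's level from this year's level and its growth rate."""
    return current / (1.0 + growth)

def split_budget(total: float, weights: dict) -> dict:
    """Split a total across buckets; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return {name: total * w for name, w in weights.items()}

capex_2024 = implied_prior_year(CAPEX_2025_BN, GROWTH_2025)
capex_2026 = CAPEX_2025_BN * (1.0 + GROWTH_2026)
buckets = split_budget(CAPEX_2025_BN, ALLOCATION)

print(f"Implied 2024 capex: about USD {capex_2024:.0f} bn")
print(f"2026 capex at 30% growth: about USD {capex_2026:.0f} bn")
for name, amount in buckets.items():
    print(f"{name}: about USD {amount:.0f} bn")
```

On these inputs the implied 2024 base is about USD 248 billion, 2026 spending would be at least USD 468 billion, and the 2025 buckets come to roughly USD 252 billion, 72 billion, and 36 billion.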

(II) Demand Structure: Explosive Growth in Inference Demand, Agent-based AI Initiates a ‘Qualitative Leap in Computing Power’

Inference computing has achieved a breakthrough in scale. Jensen Huang, CEO of NVIDIA, noted that computational demand for agent-based AI is 100-1000 times greater than traditional chatbots (depending on task complexity); Meta’s AI glasses generate over 1,000 daily inference requests per device, a tenfold increase compared to traditional mobile apps, marking a ‘functional revolution’ rather than mere quantitative growth.

Demand stratification is evident: The EU plans to invest €20 billion in building 20 AI factories, with NVIDIA’s sovereign AI revenue forecasted to exceed $20 billion in 2025 (doubling year-over-year); on the enterprise side, Microsoft Copilot has over 800 million monthly active users, covering 70% of Fortune 100 companies, providing long-term stable growth driven by ‘government + enterprise’ dual demand.

(III) Application Deployment: From ‘Lab’ to ‘Industry,’ AI Reshapes Traditional Sectors

Q3 saw AI applications penetrate deeper into industries. Sales of Meta’s Ray-Ban AI glasses accelerated, with plans to launch Oakley sports models, covering ‘daily + professional scenarios’; WhatsApp Business Edition is under testing, enabling enterprises to reach 1.5 billion daily active users, with US ‘click-to-message’ revenue growing 40% year-over-year. Microsoft’s Dragon Copilot in healthcare recorded 13 million patient-doctor interactions in Q2 (a sevenfold year-over-year increase), saving 100,000 hours; manufacturing clients using Azure AI optimized production lines, improving equipment utilization by 20%, demonstrating the core value of ‘AI-enabled cost reduction and efficiency improvement.’

(IV) Enterprise-Level AI: Palantir’s Cross-Domain Penetration and the Synergistic Revolution of Data and AI

Palantir’s (PLTR) AIP platform has emerged as a game-changer. In manufacturing, a five-year extension of its partnership with Lear Corporation supports cross-departmental collaboration across Lear’s global factories, saving over USD 30 million by 2025. In healthcare, collaboration with TeleTracking is building a hospital ‘digital nervous system’ that enables AI-driven resource allocation; pilot hospitals are expected to reduce emergency waiting times by over 20%.

Financially, Q3 revenue guidance stands at USD 1.083-1.087 billion (a year-over-year increase of 50%). U.S. commercial revenue growth is projected to exceed 85%, with a Rule of 40 score reaching 94% (far surpassing the industry benchmark of 40%), demonstrating the monetization logic of ‘breaking data silos + anchoring use cases.’
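The Rule of 40 is commonly computed as year-over-year revenue growth plus a profit margin, both in percentage points, with 40 as the benchmark. The article does not state which margin definition or which period's growth lies behind the 94% score, so the sketch below is a hedged illustration: the function names are hypothetical, and pairing the 94% score with the 50% guided growth rate is an assumption made only to show the arithmetic.

```python
# Minimal Rule of 40 sketch. The pairing of the 94% score with the 50% guided
# growth rate is an illustrative assumption, not a figure from the article.

RULE_OF_40_BENCHMARK = 40.0

def rule_of_40(revenue_growth_pct: float, profit_margin_pct: float) -> float:
    """Score = revenue growth (%) + profit margin (%)."""
    return revenue_growth_pct + profit_margin_pct

def implied_margin(score_pct: float, revenue_growth_pct: float) -> float:
    """Margin that a given score implies at a given growth rate."""
    return score_pct - revenue_growth_pct

score = 94.0            # score quoted above
guided_growth = 50.0    # Q3 year-over-year growth quoted above (assumed pairing)

margin = implied_margin(score, guided_growth)
excess = score - RULE_OF_40_BENCHMARK

print(f"Implied margin at {guided_growth:.0f}% growth: {margin:.0f} points")
print(f"Score exceeds the benchmark by {excess:.0f} points")
```

Under that assumption the score would correspond to a margin of about 44 points, and it clears the 40% benchmark by 54 points either way.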

(V) AI Advertising Ecosystem: AppLovin’s Closed-Loop Empowerment and Technological Reconstruction of Ad Efficiency

AppLovin (APP) leverages the AXON 2.0 machine learning engine to build an ecosystem loop: on the demand side, AppDiscovery analyzes billions of data points for precise user targeting for advertisers; on the supply side, MAX aggregates traffic from over 80,000 developers, matched via ALX programmatic trading. Q3 revenue guidance stands at USD 1.32-1.34 billion, with adjusted EBITDA margin exceeding 81%. Case studies show Lion Studios utilized its AI tools to complete 100 experiments in five weeks, increasing global installations by 20%; cross-border e-commerce clients reduced customer acquisition costs by 35%, achieving ‘precise irrigation’ in advertising.

2030 Outlook for the Three Major AI Giants

(I) NVIDIA: AI Factories Leading the Computational Ecosystem Revolution

NVIDIA’s 2030 strategy focuses on ‘AI Factories.’ Jensen Huang stated that annual global AI infrastructure spending will reach USD 3-4 trillion (an upward revision). Each 1-gigawatt AI factory requires 500,000 GPUs, with NVIDIA’s content accounting for 60%-70% of the spend, or roughly USD 35 billion in hardware demand per factory. Susquehanna forecasts NVIDIA will maintain a 67% market share in AI GPUs by 2030. Strategically, NVIDIA plans to connect global data centers with Spectrum-XGS Ethernet and to collaborate with OpenAI on deploying 10 gigawatts of data centers, betting on its GPU energy efficiency advantage as it transitions from a hardware provider to a rule-setter in computational power.
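The per-gigawatt figures scale directly to the 10-gigawatt deployment mentioned above. The sketch below does that arithmetic under one loudly stated assumption: it reads the 60%-70% figure as the share of a factory's total cost represented by the USD 35 billion of hardware, which is an interpretation of an ambiguous sentence rather than a confirmed breakdown. The function name `factory_estimate` is hypothetical.

```python
# Illustrative scaling of the per-gigawatt figures quoted above.
# ASSUMPTION: USD 35 bn per gigawatt is 60%-70% of a factory's total cost.

GPUS_PER_GW = 500_000
HARDWARE_PER_GW_BN = 35.0
SHARE_LOW, SHARE_HIGH = 0.60, 0.70

def factory_estimate(gigawatts: float) -> dict:
    """GPU count, hardware demand, and implied total cost range for a build-out."""
    if gigawatts <= 0:
        raise ValueError("gigawatts must be positive")
    hardware_bn = gigawatts * HARDWARE_PER_GW_BN
    return {
        "gpus": int(gigawatts * GPUS_PER_GW),
        "hardware_bn": hardware_bn,
        # A higher share means a smaller total cost, so the bounds swap.
        "total_cost_low_bn": hardware_bn / SHARE_HIGH,
        "total_cost_high_bn": hardware_bn / SHARE_LOW,
    }

estimate = factory_estimate(10)
print(f"GPUs: {estimate['gpus']:,}")
print(f"Hardware demand: USD {estimate['hardware_bn']:.0f} bn")
print(f"Implied total cost: USD {estimate['total_cost_low_bn']:.0f}-"
      f"{estimate['total_cost_high_bn']:.0f} bn")
```

On this reading, 10 gigawatts corresponds to about 5 million GPUs and USD 350 billion of hardware, within a total build cost of roughly USD 500-583 billion.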

(II) Broadcom: Breakthroughs in AI Customization and Network Synergy

Broadcom’s 2030 focus is on ‘customized AI computing + ultra-high-speed networking.’ CEO Hock Tan has set a fiscal 2030 AI revenue target of USD 120 billion (six times the USD 20 billion of 2025, and tied to executive incentives). Strategically, customized ASIC chips will be supplied to seven core LLM creators, while enterprise-level inference needs will be handled by general-purpose GPUs. On the networking front, Ethernet will serve as the standard for AI clusters, with bandwidth demand reaching 100 terabits by 2030. Optical solutions are planned for deployment in 2026-2027, positioning Broadcom as a ‘key enabler’ of cloud giants’ AI infrastructure.

(III) Oracle: AI Cloud Ecosystem and Dominance in the Inference Market

Oracle has set its sights on dominating ‘AI cloud infrastructure + the inference market’ by 2030. Management explicitly stated a revenue target of $144 billion for OCI (Oracle Cloud Infrastructure) in fiscal year 2030 (eight times the $18 billion of 2025), with the share of AI-related revenue expected to rise to 60%. Strategically, Oracle is leveraging over $450 billion in remaining performance obligations (RPO) with partners like OpenAI to secure the training market, while betting that the inference market will surpass the training market in size. By utilizing AI database vectorization technology, it aims to integrate enterprise data with public models. Supporting measures include cumulative capital expenditures of $405 billion between 2026 and 2030 to build liquid-cooled data centers and global computing nodes. Despite short-term operating profit margins declining from 44% to 38%, Oracle remains committed to a ‘scale-first’ strategy, transforming into a core provider of enterprise-grade AI computing power.
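The Broadcom and Oracle targets can be compared on an annualized basis. The sketch below computes the end-to-start multiple and the implied compound annual growth rate (CAGR), assuming five compounding years from fiscal 2025 to fiscal 2030 and treating 2026-2030 as five capex years; both period counts are assumptions about how the fiscal years line up, and the function names are hypothetical.

```python
# Illustrative arithmetic on the 2030 targets quoted above (USD bn).
# ASSUMPTION: five compounding years between fiscal 2025 and fiscal 2030.

def multiple(start: float, end: float) -> float:
    """End value as a multiple of the start value."""
    if start <= 0:
        raise ValueError("start must be positive")
    return end / start

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate over the given number of years."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0

YEARS = 5
TARGETS = {
    "Broadcom AI revenue": (20.0, 120.0),
    "Oracle OCI revenue": (18.0, 144.0),
}

results = {
    name: (multiple(start, end), cagr(start, end, YEARS))
    for name, (start, end) in TARGETS.items()
}
avg_annual_capex = 405.0 / 5   # Oracle cumulative capex, 2026-2030 inclusive

for name, (mult, rate) in results.items():
    print(f"{name}: {mult:.0f}x, implied CAGR about {rate:.1%}")
print(f"Oracle average annual capex: about USD {avg_annual_capex:.0f} bn")
```

The targets work out to 6x and 8x the 2025 base (increases of 500% and 700%), or implied growth of roughly 43% and 52% a year, with Oracle's capex averaging about USD 81 billion annually.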

Investment Logic and Strategy: Anchoring Long-Term Certainty While Adapting Tactics to Volatility

(I) Core Investment Logic: Locking Targets Through Three Dimensions of Certainty

Certainty of Technical Barriers: Select ‘irreplaceable’ leaders, such as ASML Holding (with over 90% market share in EUV), Taiwan Semiconductor (with over 60% market share in advanced process foundry), and NVIDIA (with over 80% market share in AI chips). Technical barriers are the core of risk resistance.

Certainty of Demand Resilience: Focus on the ‘AI computing chain + industrial AI,’ such as SK Hynix (HBM demand up 100% YoY), CRWV (AI computing lease RPO exceeding $30 billion), and Microsoft (Azure AI growth rate of 39%). Demand serves as the growth engine.

Certainty of Cash Flow: Prioritize companies with stable free cash flow, such as Taiwan Semiconductor ($13.5 billion in free cash flow in Q2) and Microsoft (over $80 billion in FY25). Cash flow acts as a ‘safety net’ in a high-interest-rate environment.

(II) Specific Operational Strategies: Pyramid Buying and Inverted Pyramid Selling

Pyramid Buying: For semiconductor leaders (e.g., NVIDIA, Taiwan Semiconductor), add two shares when the stock price drops 2%, four shares at 4%, and six shares at 6%. This ‘buy more as it falls’ approach averages costs while maintaining position control without overexposure to one-sided bets.
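A minimal sketch of the buying ladder shows how the average cost moves as tranches fill. It assumes, since the text does not specify, that drops are measured from a fixed reference price and that each tranche fills exactly at its trigger price; the names `Tranche`, `pyramid_buy`, and `average_cost` are hypothetical.

```python
# Sketch of the 2%/4%/6% buying ladder described above.
# ASSUMPTIONS: drops are measured from a fixed reference price, and each
# tranche fills exactly at its trigger price (no slippage or fees).
from dataclasses import dataclass

@dataclass(frozen=True)
class Tranche:
    drop_pct: float   # decline from the reference price that triggers the buy
    shares: int       # shares added at that level

LADDER = (Tranche(2.0, 2), Tranche(4.0, 4), Tranche(6.0, 6))

def pyramid_buy(reference_price: float, lowest_price: float, ladder=LADDER):
    """Return (fill_price, shares) for every tranche the decline has triggered."""
    fills = []
    for tranche in ladder:
        trigger = reference_price * (1.0 - tranche.drop_pct / 100.0)
        if lowest_price <= trigger:
            fills.append((trigger, tranche.shares))
    return fills

def average_cost(fills):
    """Share-weighted average fill price, or None if nothing was bought."""
    total_shares = sum(shares for _, shares in fills)
    if total_shares == 0:
        return None
    return sum(price * shares for price, shares in fills) / total_shares

fills = pyramid_buy(reference_price=100.0, lowest_price=93.0)
print(fills)
print(f"Average cost: {average_cost(fills):.2f}")
```

With a reference price of 100 and a low of 93, all three tranches fill (12 shares in total) at an average cost of about 95.33, below the simple average of the three trigger prices because the largest tranche is bought lowest.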

Inverted Pyramid Selling: Sell 10% of holdings when the stock rises 30% from its bottom, 20% at 40%, and 30% at 50%. Gradually lock in profits without aiming to ‘sell at the peak,’ ensuring realized gains.
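The selling ladder can be sketched the same way. Two assumptions are made here because the text leaves them open: each percentage is taken as a fraction of the initial holding (not of the remaining position), and the bottom price is treated as known, which in practice is only clear in hindsight. The names `SELL_LADDER` and `inverted_pyramid_sell` are hypothetical.

```python
# Sketch of the 30%/40%/50% selling ladder described above.
# ASSUMPTIONS: sell fractions apply to the initial holding, the bottom price
# is known, and each sale fills exactly at its trigger price.

SELL_LADDER = ((30.0, 0.10), (40.0, 0.20), (50.0, 0.30))  # (rise %, fraction sold)

def inverted_pyramid_sell(bottom_price: float, peak_price: float,
                          initial_shares: float, ladder=SELL_LADDER):
    """Return (fill_price, shares_sold) for every rung the rally has reached."""
    sales = []
    for rise_pct, fraction in ladder:
        trigger = bottom_price * (1.0 + rise_pct / 100.0)
        if peak_price >= trigger:
            sales.append((trigger, initial_shares * fraction))
    return sales

sales = inverted_pyramid_sell(bottom_price=100.0, peak_price=155.0,
                              initial_shares=1000)
shares_sold = sum(qty for _, qty in sales)
proceeds = sum(price * qty for price, qty in sales)
remaining = 1000 - shares_sold

print(f"Sold {shares_sold:.0f} shares for {proceeds:,.0f}; {remaining:.0f} remain")
```

Under these assumptions a full run through the ladder sells 60% of the position and leaves 40% invested, so the approach locks in gains in stages while keeping exposure to any further upside.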

Risk Alert: Beware of Three Major Uncertainties

Geopolitical risks: The US may escalate chip export controls on China, casting doubt over shipments of products like H20; the EU’s Digital Markets Act (DMA) could impact Meta and Google’s revenue in Europe (which accounts for over 20%), requiring ongoing policy monitoring.

Valuation divergence risks: NVIDIA’s expected PE ratio for 2026 stands at 35x (higher than the semiconductor industry average of 25x); compute-leasing targets like CRWV trade at 8x P/S (versus an industry median of 5x). High valuations require robust earnings support, with potential corrections if expectations are not met.
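The size of these valuation gaps can be made concrete with two simple ratios: the premium over the benchmark multiple, and the price change that would follow if the multiple fell to the benchmark with earnings or sales unchanged. The sketch below is a simplified illustration with hypothetical function names; full convergence to the benchmark is an assumed scenario, not a forecast.

```python
# Illustrative arithmetic on the multiples quoted above.
# ASSUMPTION: the de-rating case holds earnings (or sales) constant and lets
# the multiple fall all the way to the benchmark.

def premium_pct(multiple: float, benchmark: float) -> float:
    """Premium of a multiple over its benchmark, in percent. This is also the
    fundamental growth needed to reach the benchmark at an unchanged price."""
    return (multiple / benchmark - 1.0) * 100.0

def derating_pct(multiple: float, benchmark: float) -> float:
    """Price change if the multiple compresses to the benchmark, in percent."""
    return (benchmark / multiple - 1.0) * 100.0

CASES = {
    "NVIDIA 2026 P/E": (35.0, 25.0),
    "CRWV P/S": (8.0, 5.0),
}

summary = {
    name: (premium_pct(m, b), derating_pct(m, b))
    for name, (m, b) in CASES.items()
}

for name, (premium, derating) in summary.items():
    print(f"{name}: premium {premium:.0f}%, de-rating impact {derating:.1f}%")
```

On these figures the premiums are 40% and 60%, and a full de-rating with flat fundamentals would imply declines of roughly 29% and 38%, which is the scale of correction the warning above refers to.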

Capacity bottleneck risks: Taiwan Semiconductor’s CoWoS capacity is projected to face a 20% shortfall in 2025, while ASML Holding’s High-NA EUV capacity is not expected to catch up with demand until 2026. Cloud vendors’ data centers are constrained by power and land availability, causing delays in some projects that could limit compute power expansion.

Conclusion: The Long-Term Perspective on the Compute Revolution

In Q3 2025, the semiconductor and AI industries are entering a critical phase of ‘quantitative change to qualitative change.’ Semiconductor technology provides the ‘physical foundation’ for AI, while AI demand fuels ‘growth momentum’ for semiconductors, creating a mutually reinforcing ecosystem. Investment should adopt a ‘long-term perspective,’ looking past short-term fluctuations and shifts in geopolitical policy, anchoring value in technological barriers and managing risk through disciplined strategies. Technological iteration and demand upgrades are the enduring trends; short-term volatility is transient. Only by controlling greed and fear, using a margin of safety as a shield and long-term value as a spear, can one capture returns in the compute revolution.

Note: The companies mentioned in this article are for case analysis only and do not constitute any investment recommendation. The market carries risks; investment should be approached with caution, and decisions must be made based on independent assessment.