OpenAI launched the latest iteration of its artificial intelligence-powered video generator on Tuesday, adding a social feed that allows people to share their realistic videos.

Within hours of Sora 2’s release, though, many of the videos populating the feed and spilling over to older social media platforms depicted copyrighted characters in compromising situations as well as graphic scenes of violence and racism. OpenAI’s own terms of service for Sora as well as ChatGPT’s image or text generation prohibit content that “promotes violence” or, more broadly, “causes harm”.

In prompts and clips reviewed by the Guardian, Sora generated several videos of bomb and mass-shooting scares, with panicked people screaming and running across college campuses and in crowded places like New York’s Grand Central Station. Other prompts created scenes from war zones in Gaza and Myanmar, where children fabricated by AI spoke about their homes being burned. One video with the prompt “Ethiopia footage civil war news style” had a reporter in a bulletproof vest speaking into a microphone saying the government and rebel forces were exchanging fire in residential neighborhoods. Another video, created with only the prompt “Charlottesville rally”, showed a Black protester in a gas mask, helmet and goggles yelling: “You will not replace us” – a white supremacist slogan.

OpenAI Sora 2 generated video 1

The video generator is invite-only and not yet available to the general public. Even so, in the three days since its limited release, it has skyrocketed to the No 1 spot in Apple’s App Store, beating out OpenAI’s own ChatGPT.

“It’s been epic to see what the collective creativity of humanity is capable of so far,” Bill Peebles, the head of Sora, posted on X on Friday. “We’re sending more invite codes soon, I promise!”

The Sora app gives a glimpse into a near future where separating truth from fiction could become increasingly difficult, should the videos spread widely beyond the AI-only feed, as they have begun to. Misinformation researchers say that such lifelike scenes could obscure the truth and that these AI videos could be used for fraud, bullying and intimidation.

“It has no fidelity to history, it has no relationship to the truth,” said Joan Donovan, an assistant professor at Boston University who studies media manipulation and misinformation. “When cruel people get their hands on tools like this, they will use them for hate, harassment and incitement.”

Slop engine or ‘ChatGPT for creativity’?

OpenAI’s CEO Sam Altman described the launch of Sora 2 as “really great”, saying in a blog post that “this feels to many of us like the ‘ChatGPT for creativity’ moment, and it feels fun and new”.

Altman admitted to “some trepidation”, acknowledging how social media can be addictive and used for bullying and that AI video generation can create what’s known as “slop”, a slew of repetitive, low-quality videos that can overwhelm a platform.

“The team has put great care and thought into trying to figure out how to make a delightful product that doesn’t fall into that trap,” Altman wrote. He said OpenAI had also put in place mitigations on using someone’s likeness and safeguards for disturbing or illegal content. For example, the app refused to make a video of Donald Trump and Vladimir Putin sharing cotton candy.

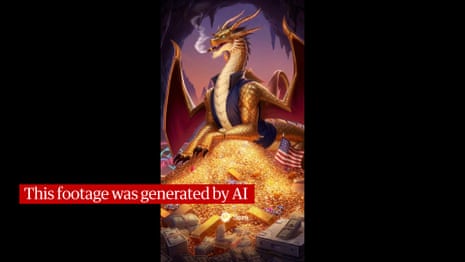

OpenAI Sora 2 generated video 2

In the three days since Sora’s launch, however, many of these videos have already made their way elsewhere online. Drew Harwell, a reporter for the Washington Post, created a video of Altman himself as a second world war military leader. Harwell also said he was able to make videos with “ragebait, fake crimes and women splattered with white goo”.

Sora’s feed is full of videos of copyrighted characters from shows like SpongeBob SquarePants, South Park and Rick and Morty. The app had no trouble generating videos of Pikachu raising tariffs on China, stealing roses from the White House Rose Garden or participating in a Black Lives Matter protest alongside SpongeBob, who, in another video, declared and planned a war on the United States. In a video documented by 404 Media, SpongeBob was dressed like Adolf Hitler.

Paramount, Warner Bros and Pokémon Co did not return requests for comment.

David Karpf, an associate professor at George Washington University’s School of Media and Public Affairs, said he had viewed videos of copyrighted characters promoting cryptocurrency scams. He said it’s clear OpenAI’s safeguards and mitigations for Sora aren’t working.

OpenAI Sora 2 generated video 4

“The guardrails are not real if people are already creating copyrighted characters promoting fake crypto scams,” Karpf said. “In 2022, [the tech companies] would have made a big deal about how they were hiring content moderators … In 2025, this is the year that tech companies have decided they don’t give a shit.”

Copyright, copycat

Shortly before OpenAI released Sora 2, the company reached out to talent agencies and studios, alerting them that if they didn’t want their copyrighted material replicated by the video generator, they would have to opt out, according to a report by the Wall Street Journal.

OpenAI told the Guardian that content owners can flag copyright infringement using a “copyright disputes form”, but that individual artists or studios cannot have a blanket opt-out. Varun Shetty, OpenAI’s head of media partnerships, said: “We’ll work with rights holders to block characters from Sora at their request and respond to takedown requests.”

Emily Bender, a professor at the University of Washington and author of the book The AI Con, said Sora is creating a dangerous situation where it’s “harder to find trustworthy sources and harder to trust them once found”.

“Synthetic media machines, whether designed to extrude text, images or video, are a scourge on our information ecosystem,” Bender said. “Their outputs function analogously to an oil spill, flowing through connections of technical and social infrastructure, weakening and breaking relationships of trust.”

Nick Robins-Early contributed reporting
