One only need look at the incredible revenues and profits of the datacenter business at Nvidia to know that the world’s biggest compute customers – the hyperscalers, the cloud builders, and now the biggest model builders – need to bend the price/performance curve to boost their own profits.

Amazon is doing just that with the Trainium AI accelerator, which appears to be used for AI inferencing as well as for the AI training that gives the product its name on the company’s SageMaker and Bedrock AI stacks. This seems to imply that AWS is sidelining the related Inferentia line of inference accelerators during the GenAI era. (Perhaps they should just call it AInium?)

The central theme from the datacenter on the call with Wall Street analysts going over the financial results for Amazon and its Amazon Web Services cloud was that Trainium2 is going like gangbusters, and that the Trainium3 accelerator, which has been in development in conjunction with model builder and tight partner Anthropic and which was previewed last December at the re:Invent 2024 conference, is getting ready to ramp.

We did a preview of the Trainium2 chip way back in December 2023, and we need to update this with the actual specs of the chip. We don’t know much about Trainium3, except that it is etched using 3 nanometer processes from Taiwan Semiconductor Manufacturing Co, will have 2X the performance of the current Trainium2 chip, and deliver 40 percent better energy efficiency (which we presume means better flops per watt).

Amazon, like other clouds, is trying to strike a happy balance using its own accelerators to drive profits and to underpin AI platform services while at the same time offering massive GPU capacity from Nvidia and sometimes AMD for those who want raw capacity to build their own platforms on the cloud. Thus far, only Google with the TPU and AWS with the Trainium have widely deployed their homegrown AI training accelerators. Microsoft is still working on its Maia chips and Meta Platforms is similarly not there yet with the training variants of its MTIA accelerators. (The Chinese hyperscalers and cloud builders are also working on their own CPUs and XPUs in varying degrees, or working with third parties like Huawei Technologies’ HiSilicon unit to wean themselves off of Nvidia GPUs.)

Andy Jassy, who is now chief executive officer of Amazon but who ran AWS for more than a decade since its founding, said that Trainium2 capacity is fully subscribed and now represents a business with a multi-billion dollar run rate, with revenues up 2.5X sequentially from the second quarter.

Jassy said that a small number of large customers are using most of the Trainium2 capacity on its clouds, which he claimed offer 30 percent to 40 percent better bang for the buck on AI workloads “than other options out there,” as he put it. And because customers want to get better price/performance as they deploy AI applications in production, there is a lot of demand for Trainium2 instances on AWS. Jassy added that “the majority of token usage in Amazon Bedrock is already running on Trainium,” by which we think he meant to say that the majority of context tokens processed and the majority of output tokens generated on Bedrock are being chewed on and created by computations on Trainium2 and sometimes Trainium1 or Inferentia2.

Jassy also said that Anthropic was training its latest Claude models in the 4.X generation using the “Project Rainier” supercluster that the company revealed back in December 2024. At the time, AWS and Anthropic said that Project Rainier would have “hundreds of thousands” of Trainium2 chips and would have 5X the performance of the GPU clusters that Anthropic had used to train its Claude 3 generation of models.

As it turns out, Rainier is more of a beast than people might have thought, with 500,000 Trainium2 chips, according to Jassy, and a plan to expand that to 1 million Trainium2 chips by the end of this year.

As for Trainium3, Jassy said that it will be in preview by the end of the year (which means we can expect to see more about it at the re:Invent 2025 conference in December), with “fuller volumes coming in the beginning of 2026,” as he put it, adding that AWS has a lot of large and medium-sized customers “who are quite interested in Trainium3.” That stands to reason if instances on AWS will offer 4X the aggregate capacity and 2X the per chip capacity compared to Trainium2 UltraClusters. Companies like Anthropic can string together much larger collections of instances, much as OpenAI has historically gotten much larger clusters on Microsoft Azure than other customers can rent.

“So we have to, of course, deliver the chip,” Jassy quipped, referring to Trainium3. “We have to deliver it in volumes and deliver it quickly. And we have to continue to work on the software ecosystem, which gets better all the time. And as we have more proof points like we have with Project Rainier with what Anthropic is doing on Trainium2, it builds increasing credibility for Trainium. And I think customers are very bullish about it. I’m bullish about it as well.”

The other interesting thing that Jassy talked about on the call with Wall Street was the amount of datacenter capacity that AWS is bringing online. Jassy said that “in the last year,” by which we think he meant the trailing twelve months – a metric Amazon uses a lot – AWS had fired up 3.8 GW of datacenter capacity, and it has another 1 GW coming online in the fourth quarter. Jassy did not give a figure for total installed capacity of AWS datacenters, but said that it would double between now and the end of 2027. It doubled from the end of 2022 to the present.

“So we are bringing in quite a bit of capacity today,” Jassy explained. “And overall in the industry, maybe the bottleneck is power. I think at some point, it may move to chips, but we’re bringing in quite a bit of capacity. And as fast as we’re bringing in right now, we are monetizing it.”

Given this, let’s say that AWS had maybe 4 GW of total datacenter capacity at the end of 2022 and will have 10 GW by the end of 2025. That would imply maybe somewhere around 20 GW total two years from now. For AI datacenters, you are talking about somewhere on the order of $50 billion per GW for Nvidia infrastructure, and maybe $37 billion per GW for homegrown accelerators like the Trainiums. An incremental 10 GW, assuming half GPUs and half Trainiums, represents something on the order of $435 billion in datacenter spending for 2026 and 2027. This sounds nuts.
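The arithmetic behind that $435 billion figure is easy to reproduce. Here is a minimal Python sketch that uses only the per-GW costs and the 50/50 split stated above; the function name and parameters are ours, chosen for illustration, and the cost figures are the rough estimates from the text, not AWS disclosures.

```python
# Rough per-GW cost assumptions from the text, in billions of US dollars.
GPU_COST_PER_GW = 50.0       # Nvidia-based infrastructure
TRAINIUM_COST_PER_GW = 37.0  # homegrown accelerators such as Trainium


def buildout_cost(incremental_gw: float, gpu_share: float) -> float:
    """Estimate spending in $ billions for new capacity split between GPUs and Trainium.

    gpu_share is the fraction of the new gigawatts assumed to be GPU-based;
    the remainder is assumed to be Trainium-based.
    """
    if not 0.0 <= gpu_share <= 1.0:
        raise ValueError("gpu_share must be between 0 and 1")
    gpu_gw = incremental_gw * gpu_share
    trainium_gw = incremental_gw - gpu_gw
    return gpu_gw * GPU_COST_PER_GW + trainium_gw * TRAINIUM_COST_PER_GW


# 10 GW of incremental capacity, half GPUs and half Trainium.
estimate = buildout_cost(incremental_gw=10.0, gpu_share=0.5)
print(f"${estimate:.0f} billion")  # $435 billion
```

Shifting `gpu_share` up or down shows how sensitive the total is to the mix: every gigawatt moved from GPUs to Trainium trims about $13 billion under these assumptions.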

To match a mere 40 percent growth in GW capacity for both 2026 and 2027, assuming AWS will spend $106.7 billion on IT gear in 2025 – the vast majority of its expected $125 billion in capital expenses for the year, and almost all of that for AI infrastructure – you have to start with 1.95 GW as 2022 comes to a close, hit 5.9 GW as 2025 closes, and reach 11.8 GW by the end of 2027, with $256.7 billion in IT spending over 2026 and 2027 inclusive. This sounds relatively more sane, and it also implies that megawatts very quickly became small potatoes in this modern GenAI era when this was the capacity of big datacenters over the past decade or two.
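As a rough check on how the growth rate and the doubling relate, the compounding can be sketched in a few lines of Python. This uses only the gigawatt figures in the paragraph above; the helper names are ours, and the results are back-of-the-envelope numbers, not a reconstruction of the spending model.

```python
def compound(start_gw: float, annual_growth: float, years: int) -> float:
    """Capacity after compounding a fixed annual growth rate for a number of years."""
    return start_gw * (1.0 + annual_growth) ** years


def implied_annual_growth(start_gw: float, end_gw: float, years: int) -> float:
    """Constant annual growth rate that takes start_gw to end_gw over the given years."""
    return (end_gw / start_gw) ** (1.0 / years) - 1.0


# 5.9 GW at the end of 2025, growing 40 percent in both 2026 and 2027.
end_2027 = compound(5.9, 0.40, 2)

# Growth rate needed to go from 5.9 GW to 11.8 GW (a doubling) in two years.
doubling_rate = implied_annual_growth(5.9, 11.8, 2)

print(f"End of 2027 at 40 percent growth: {end_2027:.2f} GW")
print(f"Annual growth needed to double in two years: {doubling_rate:.1%}")
```

Two years at 40 percent lands a little under a full doubling, and an exact doubling in two years works out to roughly 41 percent per year, so the 40 percent figure and the doubling are describing nearly the same trajectory.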

And with all of that as a backdrop, let’s dig into the Amazon Web Services numbers for the third quarter of 2025.

Amazon overall, including its retail, advertising, media, and cloud businesses, raked in $180.17 billion, up 13.4 percent, with net income of $21.19 billion, up 38.2 percent.

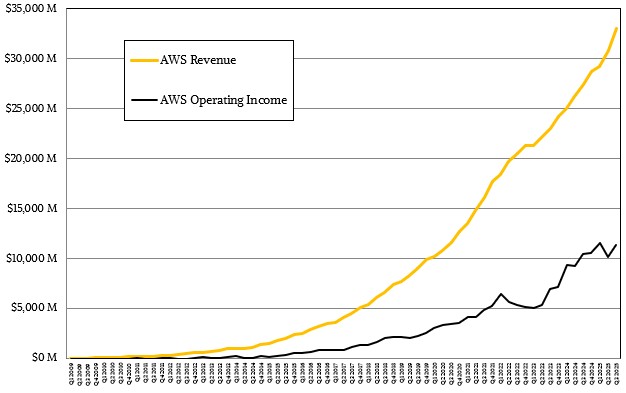

The AWS cloud accelerated its revenue growth a bit, thanks in large part to the GenAI boom. AWS revenues rose by 20.2 percent to just a smidgen over $33 billion, and operating income was under pressure and only grew 9.4 percent to $11.43 billion.

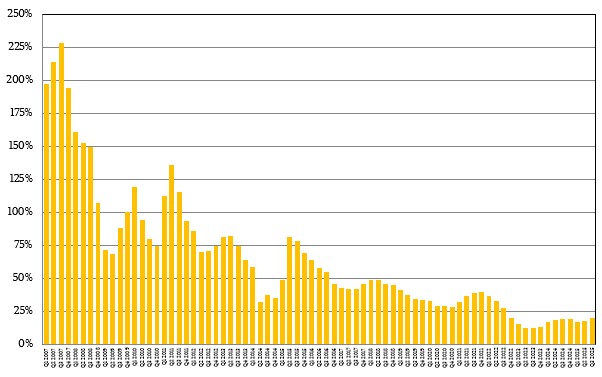

Year on year growth for AWS is on the upswing, but it seems unlikely to rise to past glory.

That operating income represented 34.6 percent of revenues in the period, still a little bit lower than average but not surprising given the cost of ramping “Blackwell” GPU systems at the same time as the Trainium2 machinery in its datacenters and regions.

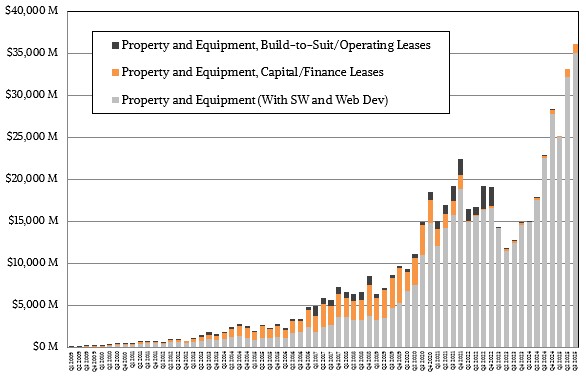

Parent company Amazon had a little over $36 billion in capital expenses, and we think that $26.4 billion of that was for IT infrastructure, with $28.2 billion of that being for AI clusters and $2.6 billion being for other kinds of IT infrastructure for web and data analytics workloads based on CPU architectures.

Amazon capital expenses over time, including warehouses, transport, and datacenters.

It is hard to say for sure, but we think that spending on Trainium accelerators might have been somewhere around 35 percent of the AI spending, with the other 65 percent on GPU-based systems. We would not be surprised if, by the end of 2026 or early in 2027, half of the AI compute engine capacity installed is Trainium gear, with more than half of the money still being allocated for GPU gear that rents at a much higher price on AWS.

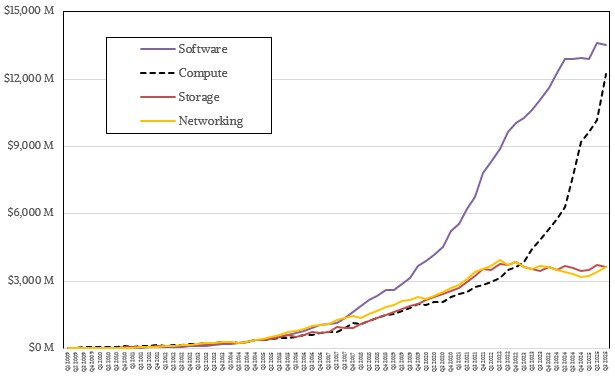

We have been using spreadsheet witchcraft for many years to try to estimate how revenue streams at AWS split across software, compute, networking, and storage, and we think, as you can see in the chart below, compute is catching up to software during the GenAI boom:

In fact, it will not be long before we flash back to the future of the past of AWS, when EC2 was a bigger business than S3, and compute, in the aggregate across CPU, GPU, and Trainium instances, will match software revenues for things like databases and data stores or Lambda serverless processing or AI frameworks and models like those gathered up in the SageMaker and Bedrock services.

One thing: When an AWS revenue line in the chart above is flat, that means it is growing modestly because AWS is continually trying to lower the cost of a unit of compute, storage, or networking capacity to make it easier to consume more. And if a line is going up, capacity is rising faster than that line for the same reason over the course of years.

That leaves us with our estimation of the “real” systems business of AWS, which is the combination of compute, storage, and networking. When you add this up, the core AWS systems business drove $19.47 billion in sales, according to our model, up 33.8 percent, with operating income of $5.73 billion, up 21.8 percent.
